Key Takeaways

- A network load balancer is a device that balances application or network traffic across servers.

- Load balancing offers several benefits, including application availability, security, scalability, performance, etc.

- Some drawbacks associated with load balancing include extra costs, vendor lock-in risks, etc.

- There are several use cases of load balancing, including managing multiple sites and applications, etc.

- Load balancing algorithms include dynamic load balancing and static load balancing.

- Load balancing distributes incoming network traffic through various servers or resources. It helps ensure proper resource utilization and quick response times.

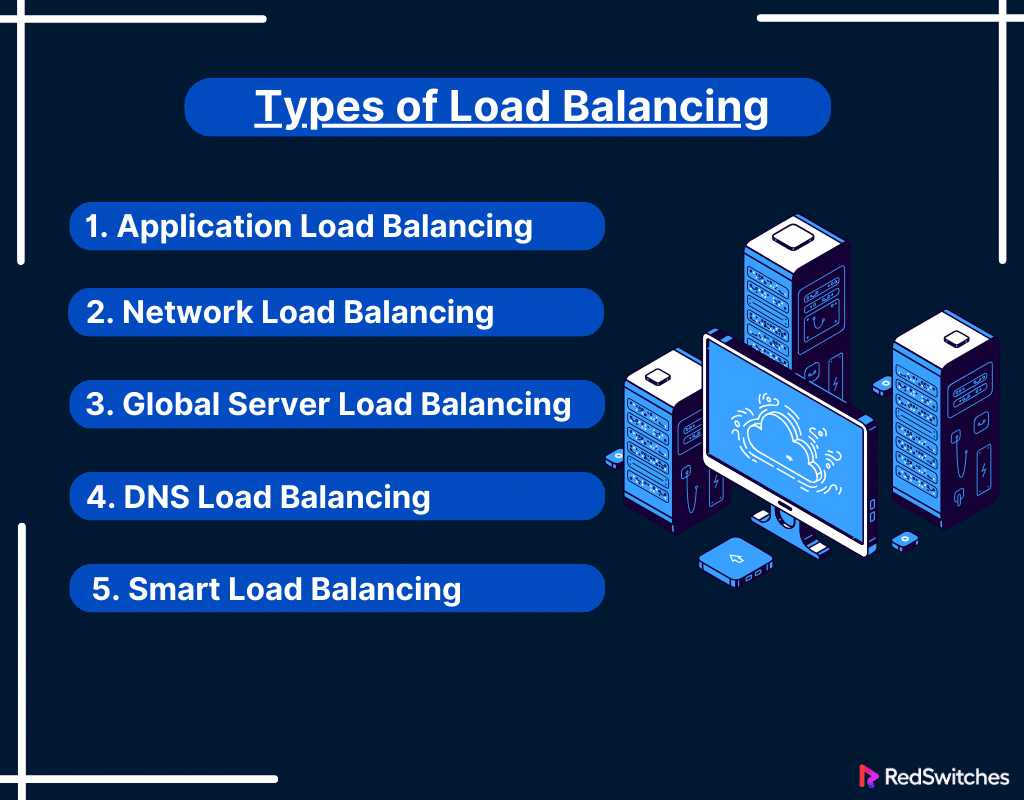

- Some types of load balancing include application load balancing, network load balancing, etc.

- Some benefits of migrating from a classic load balancer include better performance, health checks, etc.

With e-commerce sites increasingly focused on offering an optimal user experience and fast loading times, the importance of load balancers in optimizing server performance cannot be overstated.

According to reports, the worldwide load balancer market size was anticipated to grow from $2.6 billion in 2018 to $5.0 billion by 2023. This statistic highlights the increasing importance of load balancers in online services. From e-commerce giants to news portals, load balancers’ implementation meets the demand for speed and reliability.

This blog is a beginner’s guide to load balancers. It covers what they are, how they optimize server performance, and how they ensure uninterrupted service availability. It also goes over the different types of load balancers, such as the network load balancer.

Let’s begin!

Table Of Contents

- Key Takeaways

- What is a Load Balancer?

- Benefits of Load Balancing

- Drawbacks of Load Balancing

- Use Cases of Load Balancers

- What Are Load Balancing Algorithms?

- Load Balancer: What is its Working Mechanism

- Types of Load Balancing

- Load Balancer Health Check

- Stateful vs Stateless Load Balancing

- Comparing Stateful and Stateless Load Balancing

- Hardware vs Software Load Balancers

- Comparing Hardware and Software Load Balancers

- Advantages of Migrating From a Classic Load Balancer

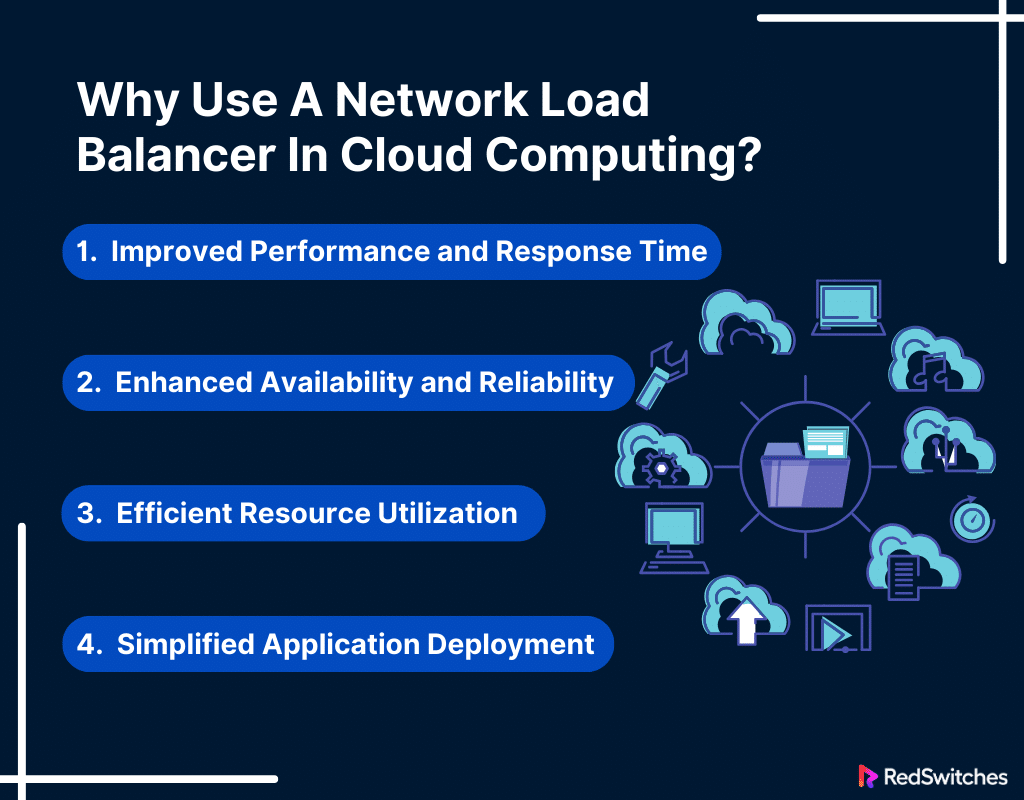

- Why Use A Network Load Balancer In Cloud Computing?

- 5 Strategies to Simplify Load Balancing in the Cloud

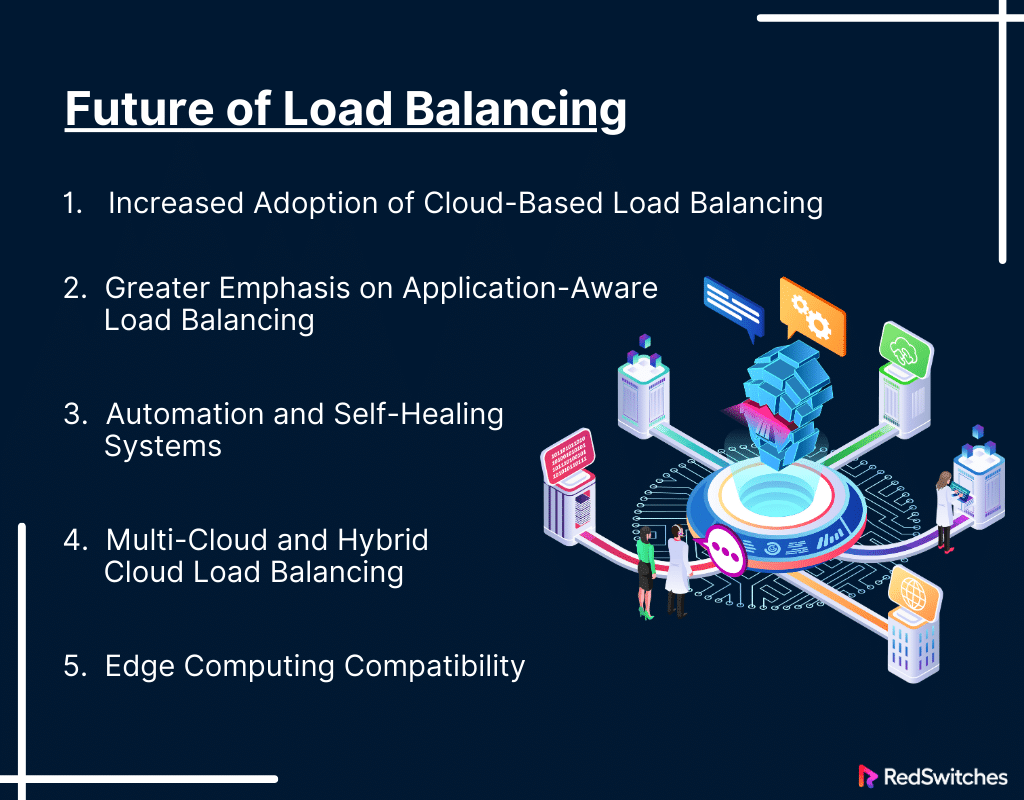

- Future of Load Balancing

- Conclusion – Network Load Balancer

- FAQ

What is a Load Balancer?

A load balancer is a device that balances network or application traffic across servers. Its job is to ensure that no single server faces too much load. By doing this, a load balancer improves the responsiveness and availability of applications or sites.

The network load balancer is a notable variant among various types of load balancers. A network load balancer operates at the OSI model’s transport layer. It routes traffic based on data from network and transport layer protocols.

One key strength of a network load balancer is its ability to handle many concurrent connections. The network load balancer can manage sudden and unpredictable spikes in network traffic. This makes it ideal for applications where short response times are necessary.

Also Read: Set Up HAProxy Load Balancing For CentOS 7 Servers.

History of Load Balancing


Early Stages and Development

Initial Concepts (Late 1960s to 1980s): The concept of load balancing came about with the birth of distributed computing systems between the late 1960s and 1970s. People were looking for a way to optimize resource use, lower response time, and avoid overload on any single resource. The idea of load balancers revolved around evenly distributing workloads across computing resources.

Hardware-based Load Balancers (1990s). The 1990s saw the birth of dedicated hardware-based load balancers. These were primarily used in enterprise settings for balancing network traffic and managing server loads. Organizations like Cisco Systems and F5 Networks were pioneers in this space.

Load Balancer: What is It in the Context of the Internet Boom?

The advent of the Internet (Late 1990s to 2000s). The demand for load balancing surged with the Internet boom. Websites and online services faced the challenge of serving a growing number of requests. Load balancers became critical for distributing incoming web traffic across multiple servers. Companies needed them to keep any single server from becoming overloaded.

Load Balancing Meets Dedicated Hosting (2000s to 2010s). The role of load balancers evolved as adopting dedicated servers for hosting high-traffic applications became common. Companies started integrating load balancers into dedicated hosting environments. This allowed them to distribute traffic across servers in a single data center, multiple data centers, and cloud environments.

Technological Advancements

Software-Defined Networking and Cloud (2010s to Present). The introduction of software-defined networking and cloud computing brought a shift in load-balancing technologies. Software-based load balancers became popular. These load balancers were more flexible and easier to scale than their hardware-based counterparts.

This period also saw the rise of cloud-based load-balancing services offered by major cloud providers. This helped further simplify load balancing for a broader range of users.

Also Read: Highly Available Architecture: Definition, Types & Benefits.

Benefits of Load Balancing

Credits: FreePik

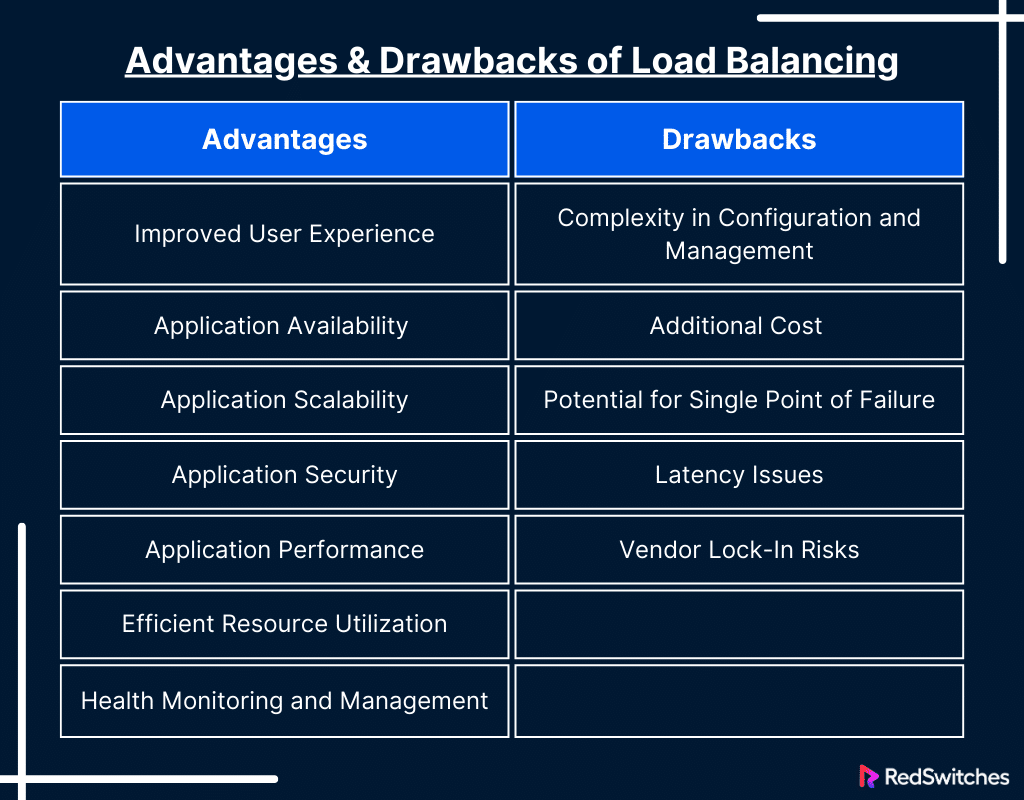

Below are the key benefits of load balancing:

Improved User Experience

Load balancers reduce the response time for users by evenly distributing traffic. This helps maintain faster loading times for websites and applications, directly influencing user satisfaction. A speedy and efficient user experience is crucial for retaining clients and maintaining a competitive edge.

Application Availability

One of the top benefits of load balancing is its impact on application availability. A load balancer ensures that even if one server fails, the application remains available via other servers in the pool. This redundancy is important for maintaining an uninterrupted online presence. Load balancers continuously check the health of servers and reroute traffic only to those that are operational.

Application Security

Load balancing is also critical for application security. It can act as a first line of defense against attacks such as Distributed Denial of Service (DDoS). Load balancers help mitigate such attacks by distributing traffic, which keeps any single server from absorbing all of the malicious traffic.

Many advanced load balancers come equipped with features like SSL offloading. This helps manage the encryption and decryption workload, providing an additional security layer.

Application Scalability

Scalability is another significant advantage offered by load balancing. As the demand for your application grows, you can add more servers to your environment, and the load balancer will automatically start distributing traffic to these new servers.

This scalability ensures that your application can manage excess traffic without any degradation in performance. It’s a cost-effective way to grow your digital presence, as you can scale resources up or down based on demand without any major infrastructure overhaul.

Application Performance

Another major advantage of load balancing is its ability to boost application performance. By distributing the workload evenly, load balancers help each server perform at its best.

This improves the response time of applications, as requests are handled more efficiently. Enhanced application performance can help businesses maintain optimally functioning sites, enrich user experience, and get positive feedback online.

Efficient Resource Utilization

A load balancer optimizes the usage of server resources. Ensuring that no single server is underutilized or overburdened maximizes the server infrastructure’s efficiency. This optimal utilization of resources improves performance and contributes to cost savings in the long term.

Health Monitoring and Management

Modern load balancers are equipped with health checks for servers. They continuously monitor the health of connected servers and automatically reroute traffic away from servers that are malfunctioning or under maintenance. This proactive approach prevents potential downtimes and interruptions in service.

Drawbacks of Load Balancing

Below are a few drawbacks associated with load balancing:

Complexity in Configuration and Management

Load balancers demand ongoing management to adapt to evolving network conditions and application updates. They must be regularly updated and patched for security and performance improvements. This demands skilled personnel, which adds to operational overhead.

The complexity increases sharply in dynamic environments, such as those using microservices or container-based architectures. The load balancer must continuously adapt as instances are created and retired. This requires sophisticated, often automated, configuration strategies.

Additional Cost

There are several other costs associated with load balancing besides purchase and installation. Hardware-based solutions may require specialized infrastructure and environmental controls. Software or cloud-based load-balancing solutions may incur recurring subscription or usage-based fees.

These costs can be unpredictable and escalate quickly for organizations with fluctuating traffic. The need for specialized staff to manage and maintain these systems adds to the overall financial burden.

Potential for Single Point of Failure

Organizations must invest in redundant systems and ensure they have failover capabilities to mitigate the risk of the load balancer becoming a single point of failure.

This means setting up multiple load balancers in a high-availability configuration, which adds to the complexity and cost. Synchronizing configurations and maintaining consistency across redundant load balancers can be challenging, especially in large-scale deployments.

Latency Issues

The issue of latency is evident in geographically distributed environments. If a load balancer is not strategically located near the users or the servers it routes traffic to, the added distance the data travels can introduce major delays.

Load balancers that perform deep packet inspection or application-level load balancing can also add processing overhead. This can further contribute to latency.

Vendor Lock-In Risks

Specific load-balancing solutions may require proprietary hardware or software. This can lead to dependency on a single vendor for upgrades, support, and integration with other systems. Vendor lock-in limits an organization’s freedom to switch vendors. It can also lead to higher costs and reduced flexibility.

Vendor-specific features might also hinder integrating with other vendors’ solutions. This can constrain the network’s overall architecture or cloud environment.

Also Read: How To Assign Floating IP In Leaseweb With Your Subnet In New Ways.

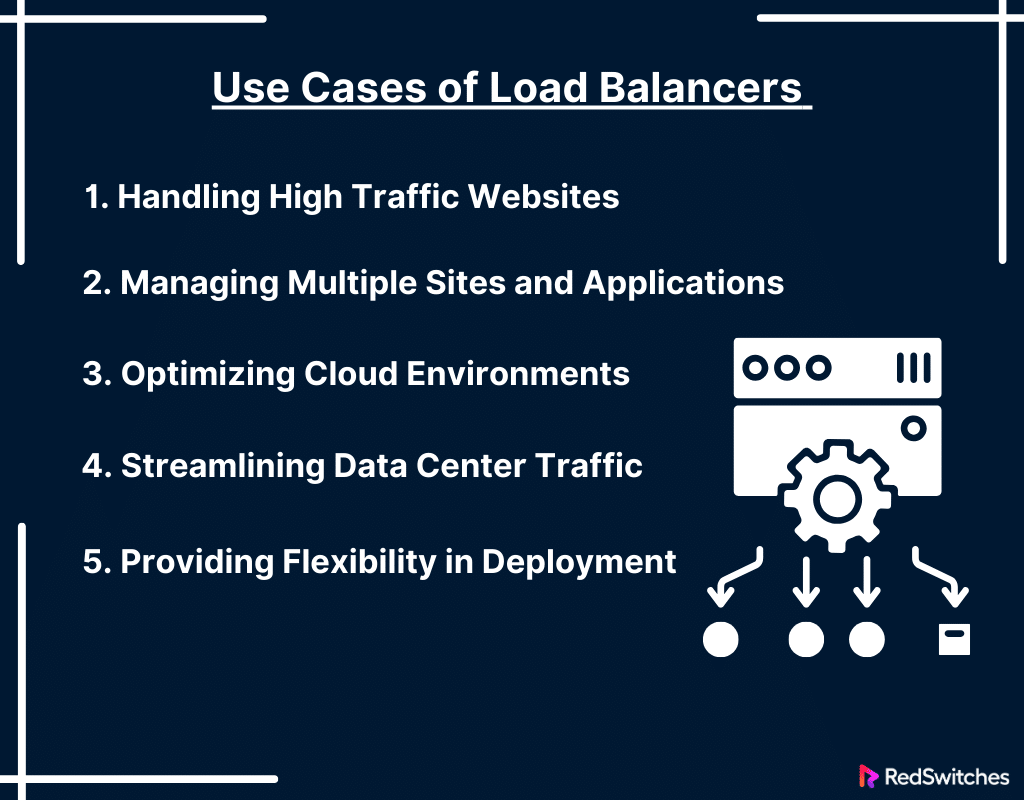

Use Cases of Load Balancers

Below are the top use cases of load balancers:

Handling High Traffic Websites

Load balancers are not just essential. They function like a lifeline for high-traffic websites. Load balancers evenly distribute incoming traffic across several servers. This is especially important for large e-commerce websites, news portals, or sites expecting high traffic.

Load balancers manage the sudden influx of visitors, maintaining site performance and minimizing downtimes during peak times. This capability is vital for user experience and maintaining sales and information circulation during critical times.

Managing Multiple Sites and Applications

Load balancers are invaluable for organizations managing multiple websites or applications. They can distribute traffic based on server capacity and the type of request or the specific application being accessed.

This optimizes performance across various platforms, ensuring each site or application functions efficiently. It’s best for businesses that operate various online services, from customer-facing portals to internal applications, ensuring that all digital assets perform optimally.

Optimizing Cloud Environments

Load balancers are important in cloud computing environments. Their traffic distribution capabilities are the key to maintaining the resilience and performance of cloud-based applications, especially in complex multi-cloud or hybrid cloud environments.

Streamlining Data Center Traffic

Load balancers are instrumental in streamlining network traffic in data centers. They manage the distribution of traffic across servers, ensuring optimal utilization of resources. This boosts the overall efficiency of the data center and reduces the risk of overloading any individual server, thus maintaining a stable infrastructure.

Providing Flexibility in Deployment

Load balancers offer excellent flexibility in terms of deployment. They can be implemented as hardware appliances or as software-defined solutions. Hardware-based load balancers are typically used in high-performance environments.

Software-defined load balancers offer more flexibility and are often more cost-effective. This flexibility allows organizations to choose a load-balancing solution that aligns with their specific infrastructure requirements and budget constraints.

Also Read: Exploring Fault Tolerance Vs High Availability.


What Are Load Balancing Algorithms?

There are several load balancing algorithms. Each is designed to distribute traffic in a way that maximizes efficiency and minimizes server overload. Let’s discuss these algorithms further.

Dynamic Load Balancing


Dynamic load balancing algorithms are flexible. They adjust their distribution plan in response to the current state of the servers. These algorithms can react quickly to changes in server performance and traffic. Their versatility makes them well suited to environments where traffic is unpredictable.

Least Connection Method

A common dynamic load balancing algorithm is the least connection method. This algorithm directs new requests to the server with the fewest active connections. This approach is beneficial in scenarios where session duration can be highly variable. It helps prevent any single server from becoming overwhelmed by many time-consuming tasks.
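The selection rule can be sketched in a few lines of Python. The server names and connection counts are made up for illustration, and a real load balancer would maintain these counts itself as connections open and close.

```python
def pick_least_connections(active_connections):
    """Return the server with the fewest active connections.

    `active_connections` maps a hypothetical server name to its current
    number of open connections. Ties go to the server listed first.
    """
    return min(active_connections, key=active_connections.get)


# Illustrative pool state, not real measurements.
pool = {"app-1": 12, "app-2": 4, "app-3": 9}
chosen = pick_least_connections(pool)
pool[chosen] += 1  # the new request now counts against that server
print(chosen)  # app-2
```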

Weighted Least Connection Method

The weighted least connection method adds a layer of sophistication. In this algorithm, every server is assigned a weight based on its capacity (such as CPU and memory). Servers with higher capacity get a higher weight and are allocated more connections.

This method is effective in environments where servers have differing capabilities, ensuring that more powerful machines handle a proportionally larger share of the traffic.
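One common way to express this, assumed here, is to compare each server’s ratio of active connections to weight and pick the lowest. The names, counts, and weights below are hypothetical.

```python
def pick_weighted_least_connections(servers):
    """Pick the server with the lowest connections-to-weight ratio.

    `servers` maps a hypothetical server name to a (connections, weight)
    pair, where a larger weight means more capacity.
    """
    return min(servers, key=lambda name: servers[name][0] / servers[name][1])


# "large" has more open connections but four times the capacity.
servers = {"small": (10, 1), "large": (24, 4)}
print(pick_weighted_least_connections(servers))  # large
```

Here "small" scores 10 / 1 = 10 and "large" scores 24 / 4 = 6, so the larger machine still receives the next connection.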

Least Response Time Method

The least response time method is an advanced algorithm that considers both the number of active connections and the response time of each server. Incoming traffic is directed to the server with the fewest active connections and the lowest average response time. This approach effectively ensures quick response times, making it ideal for performance-sensitive applications.
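Products differ in how they combine the two signals. The sketch below assumes a simple score of (active connections + 1) multiplied by average response time, which is only one possible choice, and the figures are invented for illustration.

```python
def pick_least_response_time(stats):
    """Pick the server with the lowest combined score.

    `stats` maps a hypothetical server name to a pair of
    (active connections, average response time in milliseconds).
    The scoring formula is an assumption made for this sketch.
    """
    def score(name):
        connections, avg_ms = stats[name]
        return (connections + 1) * avg_ms

    return min(stats, key=score)


stats = {
    "app-1": (5, 120),  # score 6 * 120 = 720
    "app-2": (8, 40),   # score 9 * 40 = 360
    "app-3": (2, 300),  # score 3 * 300 = 900
}
print(pick_least_response_time(stats))  # app-2
```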

Resource-Based Method

The resource-based method takes a more holistic view, directing traffic based on the overall resource usage of a server. It considers factors like CPU load, memory usage, and network I/O.

By assessing each server’s overall ‘health’ and performance capacity, this method can make more informed decisions about traffic distribution. It also helps optimize for efficiency and server longevity.
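As a rough illustration, the sketch below blends CPU, memory, and network utilization into a single score and picks the least loaded server. The weighting (50% CPU, 30% memory, 20% network) and the sample readings are assumptions, not values from any particular product.

```python
# Assumed weighting of each resource in the overall score.
RESOURCE_WEIGHTS = {"cpu": 0.5, "memory": 0.3, "network": 0.2}


def utilization_score(metrics):
    """Combine utilization readings (each between 0.0 and 1.0) into one score."""
    return sum(RESOURCE_WEIGHTS[key] * metrics[key] for key in RESOURCE_WEIGHTS)


def pick_by_resources(servers):
    """Return the hypothetical server name with the lowest utilization score."""
    return min(servers, key=lambda name: utilization_score(servers[name]))


servers = {
    "app-1": {"cpu": 0.9, "memory": 0.5, "network": 0.2},
    "app-2": {"cpu": 0.4, "memory": 0.7, "network": 0.5},
    "app-3": {"cpu": 0.6, "memory": 0.6, "network": 0.9},
}
print(pick_by_resources(servers))  # app-2
```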

Static Load Balancing

Static load balancing is one of the simplest forms of load balancing. This algorithm distributes requests to servers based on pre-defined criteria, like server capacity or specific server roles.

This method doesn’t account for the current state or performance of the servers. It’s often used in environments with predictable and consistent server loads. Static load balancing is straightforward but lacks the flexibility to adapt to changing loads or server conditions.

Round-Robin Method

The Round-Robin algorithm is among the simplest and most commonly used static load-balancing methods. In this approach, every server in the pool gets a turn to receive a request in a cyclic order. Once the last server receives a request, the load balancer returns to the first server.

This method works well in situations where all servers have similar capabilities and the requests are relatively uniform in resource consumption. However, its simplicity can be a drawback in more complex scenarios, as it does not account for each server’s current load.
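The cyclic order is easy to see in a short Python sketch. The server names are placeholders.

```python
from itertools import cycle

# Hypothetical pool of equally capable servers.
servers = ["app-1", "app-2", "app-3"]
rotation = cycle(servers)

# Assign five incoming requests in turn.
assignments = [next(rotation) for _ in range(5)]
print(assignments)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Note that nothing in this loop looks at how busy each server is, which is exactly the limitation described above.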

Weighted Round-Robin Method

The Weighted Round-Robin serves as an enhancement to the Round-Robin algorithm. This method assigns a weight to every server based on its capacity (CPU, memory, or throughput).

Servers with higher capacities get a larger share of the requests. This allows for a more intelligent load distribution, ensuring more powerful servers handle more requests. It’s an efficient way to manage a mixed pool of servers, where some are more capable than others.
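A minimal sketch of the idea is to let each server appear in the rotation as many times as its weight. The pool and weights here are hypothetical.

```python
from itertools import islice


def weighted_round_robin(weights):
    """Yield server names forever; each appears `weight` times per cycle."""
    if not any(weight > 0 for weight in weights.values()):
        raise ValueError("at least one server needs a positive weight")
    while True:
        for name, weight in weights.items():
            for _ in range(weight):
                yield name


# Hypothetical pool: "large" has three times the capacity of "small".
schedule = weighted_round_robin({"large": 3, "small": 1})
first_eight = list(islice(schedule, 8))
print(first_eight)
```

This simple version sends requests to the heavier server in bursts. Some implementations interleave the picks more smoothly, but the overall share per server stays the same.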

IP Hash Method

The IP hash load balancing method uses the client’s IP address to determine which server will manage the request. The load balancer takes the client’s IP address, applies a hash function, and uses the result to allocate the request to one of the servers.

This method ensures that a user will consistently connect to the same server. This can be great for maintaining user session consistency. It is also helpful in situations like online shopping carts, where session persistence is important.
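The sketch below shows one way to do this in Python, assuming a SHA-256 hash and a simple modulo over the pool size. The addresses come from ranges reserved for documentation, and the server names are placeholders.

```python
import hashlib


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP address to one server in a stable way."""
    # hashlib is used instead of the built-in hash(), which is randomized
    # per process for strings and so would not be stable across restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first = pick_by_ip_hash("203.0.113.7", servers)
second = pick_by_ip_hash("203.0.113.7", servers)
print(first == second)  # True
```

With a plain modulo, adding or removing a server changes the mapping for many clients, so their sessions may move to a different server when the pool changes.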

Are you planning on scaling your servers? This requires an in-depth understanding of server scaling and its strategies. Read our blog, ‘Server Scaling Explained: Definition And Scalability Strategies,’ to learn more about server scaling.

Load Balancer: What is its Working Mechanism

When talking about a load balancer’s working mechanism, it is important to understand that a load balancer does much more than evenly routing client requests across all servers. Let’s discuss the working mechanisms of load balancers to understand this:

Traffic Direction

The main function of a load balancer is to receive incoming network traffic and distribute it across backend servers. It serves as a front door to your server environment, ensuring requests are handled efficiently.

Health Checks


A critical aspect of a load balancer’s functionality is performing health checks on servers. It monitors the health of servers to ensure they are ready and capable of handling requests. If a server fails, the load balancer automatically redirects traffic to the remaining operational servers.

Load Balancing Algorithms

The intelligence of a load balancer lies in its use of various algorithms to distribute traffic, such as:

- Round Robin: Distributing requests sequentially across the server pool.

- Least Connections: Directing traffic to the server with the fewest active connections.

- IP Hash: Assigning users to a specific server based on their IP address.

Session Persistence

Maintaining a user’s session on the same server for the duration of their visit is crucial in specific applications, like online shopping carts. Load balancers support this through ‘sticky sessions,’ where a user’s session is tied to a specific server.

This ensures that information stored on one server remains consistent throughout the user’s interaction. Without session persistence, users may experience interruptions and inconsistencies in their online activities, which can lead to customer dissatisfaction.
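Sticky sessions can be pictured as a lookup table from session to server. The sketch below is a simplified, in-memory illustration that assigns new sessions in round-robin order; the class name and session IDs are hypothetical, and real load balancers often use cookies or similar mechanisms to identify the session.

```python
from itertools import cycle


class StickyRouter:
    """Route each session to the server that handled its first request."""

    def __init__(self, servers):
        self._rotation = cycle(servers)
        self._sessions = {}  # session ID -> assigned server

    def route(self, session_id):
        # New sessions get the next server in the rotation;
        # known sessions keep the server they were given.
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._rotation)
        return self._sessions[session_id]


router = StickyRouter(["app-1", "app-2"])
print(router.route("cart-alice"))  # app-1
print(router.route("cart-bob"))    # app-2
print(router.route("cart-alice"))  # app-1 again
```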

Scalability

One of the greatest strengths of a load balancer is its ability to enhance the scalability of an IT environment. New servers can be added to the pool as the demand increases without disrupting ongoing services.

Servers can be decommissioned to reduce costs during low-traffic periods. This flexibility notably benefits businesses experiencing growth or those with variable traffic patterns. It ensures that IT resources align with current needs, optimizing performance and cost-effectiveness.

SSL Termination

Load balancers enhance security and performance through SSL termination. They offload these tasks from the backend servers by handling the decryption of incoming requests and encryption of responses. This reduces the processing load on the servers, freeing them to handle other tasks more efficiently.

SSL termination at the load balancer level allows for centralized management of SSL certificates and encryption. It also simplifies security management and ensures consistent application of security policies.

Types of Load Balancing

Below are a few popular types of load balancing:

1. Application Load Balancing

Application Load Balancing (ALB) operates at the application layer (Layer 7) of the OSI model. An application load balancer is designed to manage user requests for web-based applications more efficiently. ALB does much more than distribute traffic across servers. It understands the content of the request and can route traffic based on the content type or specific application being accessed.

This is useful for complex web applications, where different requests might need different handling. ALB can prioritize certain types of traffic, manage sessions, and make decisions based on application-level information.
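As a simple illustration of content-based routing, the sketch below picks a backend pool from the request path. The path prefixes and pool names are invented for the example; real application load balancers can also route on headers, hostnames, and other request details.

```python
# Hypothetical routing rules: (path prefix, backend pool), checked in order.
ROUTES = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(path):
    """Return the backend pool for a request path using prefix matching."""
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(choose_pool("/api/orders/42"))    # api-pool
print(choose_pool("/static/logo.png"))  # static-pool
print(choose_pool("/checkout"))         # web-pool
```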

2. Network Load Balancing

Network Load Balancing (NLB) functions at the transport layer (Layer 4) of the OSI model. It deals with directing traffic based on data from network and transport layer protocols, such as IP addresses and TCP/UDP ports.

NLB is less about understanding the content of the packets and more about ensuring that the network traffic is distributed across servers. A network load balancer is well-suited for ensuring that stateless applications can handle high volumes of connections and traffic.

3. Global Server Load Balancing

Global Server Load Balancing (GSLB) is designed for firms with many data centers across different locations. GSLB distributes user requests across these locations based on proximity, server health, and load. This ensures the user is connected to the best-performing and closest data center.

GSLB implementation helps optimize response times and provides a failover mechanism if one of the data centers is down. GSLB is important for multinational firms or services catering to a global audience. It ensures high availability and performance regardless of where the user is located.
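The decision can be sketched as a filter followed by a choice. In the example below, the data center names, latency figures, and the 80% load limit are all assumptions made for illustration; latency stands in for proximity.

```python
def pick_data_center(centers, max_load=0.8):
    """Pick the closest healthy data center that is not overloaded."""
    candidates = [c for c in centers if c["healthy"] and c["load"] < max_load]
    if not candidates:
        # Every healthy site is busy: fall back to any healthy one.
        candidates = [c for c in centers if c["healthy"]]
    if not candidates:
        raise RuntimeError("no healthy data center available")
    return min(candidates, key=lambda c: c["latency_ms"])["name"]


centers = [
    {"name": "frankfurt", "latency_ms": 20, "healthy": False, "load": 0.2},
    {"name": "london", "latency_ms": 35, "healthy": True, "load": 0.5},
    {"name": "virginia", "latency_ms": 95, "healthy": True, "load": 0.3},
]
print(pick_data_center(centers))  # london
```

Frankfurt is the closest site here but is marked as down, so the request fails over to the next-closest healthy location.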

4. DNS Load Balancing

DNS Load Balancing operates at the DNS level. It uses the Domain Name System to distribute traffic across several servers. When a user tries to open a website, DNS resolution returns the address of one of several servers, spreading requests across them.

This method is relatively simple and cost-effective. It doesn’t require specialized hardware or software. It’s effective for initial request routing but lacks the granular control of ALB or NLB.
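One simple form of DNS load balancing is round-robin DNS, where the order of the returned addresses rotates with each query. The toy resolver below only imitates that rotation in memory; the class name is hypothetical and the addresses come from a range reserved for documentation.

```python
from collections import deque


class RoundRobinDns:
    """Toy resolver that rotates its list of addresses on every query."""

    def __init__(self, addresses):
        self._addresses = deque(addresses)

    def resolve(self):
        answer = list(self._addresses)
        self._addresses.rotate(-1)  # the next query starts with the next address
        return answer


dns = RoundRobinDns(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
print(dns.resolve())  # ['192.0.2.10', '192.0.2.11', '192.0.2.12']
print(dns.resolve())  # ['192.0.2.11', '192.0.2.12', '192.0.2.10']
```

Because clients and intermediate resolvers may cache answers, the actual spread of traffic is only approximate, which is part of why this approach offers less control than ALB or NLB.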

5. Smart Load Balancing

Smart load balancing is a progressive approach to managing network and application traffic. Smart load balancers use real-time data analysis, AI, and ML to make more adaptable decisions.

This method monitors traffic patterns, server health, and network conditions. This allows it to respond proactively to changes and optimize resource allocation.

Also Read: 5 Things About Cloud VPS Hosting: Breakdown Of Benefits.

Load Balancer Health Check


A load balancer health check is an important part of load-balancing systems. It ensures that traffic is only sent to servers that are up and running. It’s a proactive monitoring feature that evaluates the health or status of the servers in a load-balancing pool. Let’s discuss how it works and why it’s important:

Functioning of Load Balancer Health Checks

These checks ensure that traffic is only directed to servers that are online and capable of handling requests. Here’s how health checks typically function in load balancers:

Regular Checks

The load balancer periodically sends requests to the servers in its pool to check their status. Depending on the configuration, these checks can be HTTP/HTTPS requests, TCP connection attempts, or requests to a specific URL on the server.

Criteria for Health

The criteria for determining a server’s health can vary. It can be as simple as ensuring the server responds to a ping, or it may involve checking that an application on the server returns the right content.

Response Analysis

The load balancer evaluates the responses from these checks. If a server returns the expected response within a predetermined time frame, it is considered healthy. If not, it is considered unhealthy.

Traffic Distribution

The load balancer stops directing new traffic to a server once it is marked unhealthy. This helps maintain the application’s overall performance and prevents users from encountering errors.
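The response analysis and traffic distribution steps can be sketched as follows. The example assumes an HTTP check where a 200 status within a two-second timeout counts as healthy; the probe results are made-up values rather than real measurements, and no network calls are made.

```python
def is_healthy(status_code, elapsed_seconds, timeout_seconds=2.0):
    """A check passes if the expected status arrived within the time limit."""
    return status_code == 200 and elapsed_seconds <= timeout_seconds


def healthy_servers(probe_results, timeout_seconds=2.0):
    """Return the servers that should keep receiving new traffic."""
    return [
        name
        for name, (status_code, elapsed) in probe_results.items()
        if is_healthy(status_code, elapsed, timeout_seconds)
    ]


# Hypothetical probe results: (HTTP status, response time in seconds).
probe_results = {
    "app-1": (200, 0.12),
    "app-2": (503, 0.05),  # responded quickly, but with an error
    "app-3": (200, 3.40),  # correct status, but too slow
}
print(healthy_servers(probe_results))  # ['app-1']
```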

Importance of Load Balancer Health Checks

Load balancer health checks are essential for ensuring the smooth and efficient operation of server resources in a network.

Avoids Downtime

Health checks help prevent downtime by redirecting traffic away from unhealthy servers. This ensures that the application remains available to users.

Boosts User Experience

Health checks contribute to a smoother user experience. They minimize the chances of users being directed to a malfunctioning server.

Facilitates Maintenance

They allow for effortless maintenance and updates on individual servers without disrupting the service, as the load balancer can temporarily stop sending requests to servers under maintenance.

Efficiency in Resource Utilization

Health checks ensure efficient utilization of resources by distributing traffic only to those servers that are currently capable of handling it effectively.

Scalability and Flexibility

Health checks automatically update the pool of utilized servers in environments where servers are frequently added or removed.

Configuring Health Checks

Configuring health checks in a load balancer involves setting parameters that determine how and when the load balancer should check the status of the servers in its pool. Here are the key considerations for configuring health checks:

Frequency

The frequency of health checks can be configured based on the criticality of the application and server response times.

Thresholds

Setting thresholds for how many failed checks constitute an unhealthy server is crucial to prevent false positives.

Custom Health Check Rules

Depending on the application’s nature, custom health check rules can be created to ensure the servers are up and functioning as expected.
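To show how thresholds guard against a single bad reading, here is a small Python sketch. It assumes a server is marked unhealthy after three consecutive failed checks and healthy again after two consecutive passes; the class name and default values are illustrative, not taken from any specific load balancer.

```python
class HealthTracker:
    """Track one server's health using consecutive-result thresholds."""

    def __init__(self, unhealthy_threshold=3, healthy_threshold=2):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = True
        self._streak = 0  # consecutive results that disagree with the current state

    def record(self, check_passed):
        """Record one check result and return the server's current state."""
        if check_passed == self.healthy:
            self._streak = 0  # result agrees with the current state
        else:
            self._streak += 1
            limit = self.healthy_threshold if check_passed else self.unhealthy_threshold
            if self._streak >= limit:
                self.healthy = check_passed
                self._streak = 0
        return self.healthy


tracker = HealthTracker()
results = [False, False, False, True, True]
print([tracker.record(r) for r in results])
# [True, True, False, False, True]
```

The server is only taken out of rotation on the third consecutive failure and only returns after two consecutive passes, so one slow or dropped check does not flip its state.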

Stateful vs Stateless Load Balancing

It is easy to confuse stateful and stateless load balancing. Choosing between them requires understanding both forms of load balancing and considering a few important factors.

What is Stateful Load Balancing?

Stateful load balancing refers to keeping track of active connections and the state of every session. This approach involves the load balancer maintaining a record of previous interactions and using this information to direct traffic. This method is ideal in cases where subsequent requests need to be sent to the same server that processed the initial request. It helps maintain a continuous ‘state’ of interaction.

Key Features

Here are the key features of stateful load balancing:

Session Persistence

Ensures a client is consistently connected to the same server for a session.

Context-Aware Distribution

Can make intelligent decisions based on session data and previous interactions.

Dynamic Resource Management

Tweaks resource allocation based on the ongoing state of each session.

Use Cases

Stateful load balancing is particularly beneficial in scenarios where maintaining client session information is crucial for the application’s functionality and user experience. Here are some key use cases for stateful load balancing:

1. E-Commerce Websites

Shopping Cart Consistency

It ensures that the items a customer adds to their cart remain persistent as they explore the website, because the same server keeps handling their requests.

Personalized User Experience

E-commerce platforms can offer personalized content by maintaining the session state. Examples include recommendations and targeted promotions based on user behavior during the session.

2. Applications Requiring Real-Time Data Synchronization

Seamless Collaboration

All users must see real-time updates in collaborative work scenarios. Stateful load balancing ensures all interactions are routed through the same server instance. This helps maintain consistency across user sessions.

Gaming and Chat Applications

Maintaining the state is integral for a continuous and integrated user experience for online multiplayer games or real-time chat applications. Stateful load balancing helps keep player actions and chat messages synchronized.

3. Environments with Persistent Transaction States

Financial Transactions

In banking and financial services, a user session might involve several steps. Stateful load balancing ensures that the same server handles these transactions.

Healthcare Applications

Patient management systems in healthcare require consistent session management to keep track of a patient’s progress, treatment plans, and medical records. Stateful load balancing allows for continuous and secure patient data handling across interactions.

Also Read: Get Managed High Availability Cluster At The Best Prices.

What is Stateless Load Balancing?

Stateless load balancing treats every incoming request independently of previous requests. It doesn’t keep track of the state of network sessions. Instead, it uses predetermined rules and algorithms to distribute incoming traffic across servers.

Key Features

Here are the key features of stateless load balancing:

Simplicity and Speed

Stateless load balancing lacks the overhead of tracking session state. This leads to faster processing of requests.

Scalability

Stateless load balancing is simpler to scale. This is because each request is independent and doesn’t require context.

Uniform Load Distribution

This form of load balancing tends to disperse traffic evenly across all servers.

Use Cases

Stateless load balancing, where the load balancer does not retain session or connection information, is well-suited for scenarios where high performance, scalability, and ease of management are prioritized. Here are some key use cases for stateless load balancing:

1. Stateless Applications and Microservices

Microservices Architecture

Each service in a microservices architecture is independent and doesn’t rely on a user’s session state. Stateless load balancing distributes requests across these services without requiring session persistence.

APIs

Stateless load balancing offers an efficient routing method for APIs serving stateless requests.

2. High-Traffic Websites

Content Delivery Networks

CDNs deliver static content (like videos and scripts) to users. Since this content is stateless, stateless load balancing distributes user requests to the closest or most optimal server.

Read-Heavy Informational Websites

Stateless load balancing can evenly distribute the load to handle high traffic for websites only serving read-only content.

3. Environments Prioritizing Simplicity and Scalability

Scalable Cloud Services

Cloud-based services that need to scale considerably with demand can use stateless load balancing for its simplicity and ease of scaling.

Temporary Campaign Websites

Stateless load balancing manages incoming traffic for short-term marketing or campaign sites where user session persistence is unnecessary.

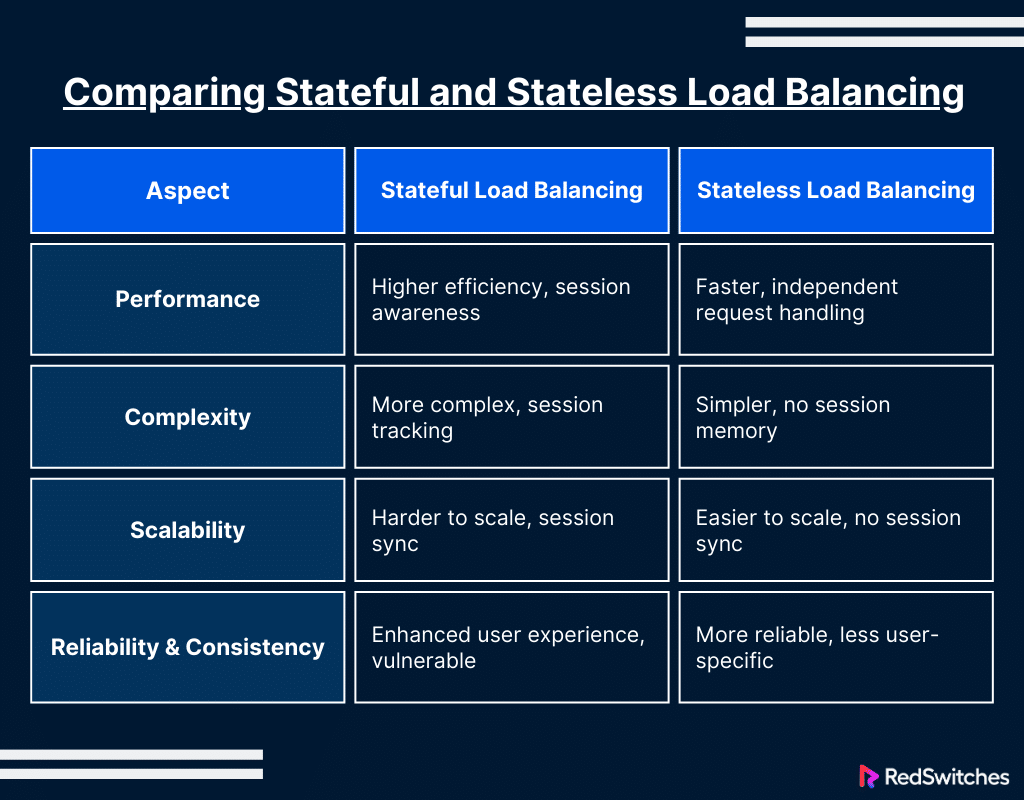

Comparing Stateful and Stateless Load Balancing

Performance

Comparing the performance of stateful and stateless load balancing involves understanding how each approach handles traffic and maintains information about client sessions. Here’s an analysis that could help address potential gaps and enhance clarity:

Stateful Load Balancing

This method remembers the state of previous interactions. It tracks and stores session data, so it can make routing decisions based on information from past transactions.

This can improve performance for session-heavy applications. However, maintaining session state adds overhead, which can hurt performance under heavy load.
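As a rough illustration of the session tracking described here, the sketch below keeps a table that pins each session to a server. The `StatefulBalancer` class and its method names are hypothetical, and a real load balancer would also expire old sessions.

```python
import itertools


class StatefulBalancer:
    """Pins each session to one backend by remembering past assignments."""

    def __init__(self, backends):
        self._rotation = itertools.cycle(list(backends))
        self._sessions = {}  # session_id -> backend; this table is the "state"

    def route(self, session_id):
        # New sessions get the next backend in rotation; known sessions
        # are sent back to the backend they were assigned earlier.
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._rotation)
        return self._sessions[session_id]

    def session_count(self):
        return len(self._sessions)


if __name__ == "__main__":
    lb = StatefulBalancer(["app-1", "app-2"])
    for sid in ["alice", "bob", "alice"]:
        print(sid, "->", lb.route(sid))
```

The session table is what adds overhead: it grows with the number of active sessions and has to be consulted on every request.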

Stateless Load Balancing

Since stateless load balancing treats each request independently without retaining previous details, this approach can lead to faster processing since there’s no need to track the session state.

This allows for more streamlined handling of requests. It is especially efficient for applications where each request is self-contained and does not depend on previous interactions.

Complexity

Comparing the complexity of stateful and stateless load balancing involves examining how each method handles session data and the implications of this on system design, maintenance, and scalability. Here’s an analysis:

Stateful Load Balancing

Remembering each session makes stateful load balancing more complex. Implementing and managing a stateful load balancer requires extra infrastructure to track and store session data. This adds complexity to the network setup.

Stateless Load Balancing

Stateless load balancing is simpler to implement and manage because it keeps no session memory. Treating each request in isolation, without tracking session history, minimizes the complexity of the network setup.

Scalability

When discussing the scalability of stateful and stateless load balancing, it’s crucial to address how each method handles increasing amounts of traffic and the ability to add or remove resources without disrupting service. Here’s a detailed comparison:

Stateful Load Balancing

Scaling a stateful load balancer can be difficult due to the need for synchronized session data across several load balancers. Ensuring that each load balancer has accurate session information as traffic increases becomes more complex and can limit scalability.

Stateless Load Balancing

Stateless load balancers are more scalable. Adding more load balancers to the network is easier without sharing session states since they do not require session data synchronization. This makes them perfect for fast-scaling environments.

Reliability and Consistency

Reliability and consistency are crucial aspects of load balancing, influencing how effectively a system handles failures and ensures uniform service. Here’s how stateful and stateless load balancing compare in these areas:

Stateful Load Balancing

Maintaining session state can improve the consistency of the user experience. This is especially true for applications where users engage in lengthy interactions. However, the dependency on session data is also a weak point: if a load balancer fails, the sessions it was tracking can lose continuity.

Stateless Load Balancing

Although stateless load balancing may not offer the same user-specific consistency as stateful methods, its independence from session data can offer more reliability. The lack of dependency on session history means that any load balancer in the pool can handle any request. This minimizes the impact of individual load balancer failures on the entire system.

Also Read: Kubernetes 101: Beginners Guide To Kubernetes.

Hardware vs Software Load Balancers

It can be easy for individuals to get confused between hardware load balancers and software load balancers. Below is a comparison of hardware vs software load balancers:

What Are Hardware Load Balancers?

Hardware load balancers are physical devices created to manage network traffic. They are standalone pieces of equipment with dedicated processors and memory for load balancing. These devices are usually kept in data centers and work at the OSI model’s transport layer (Layer 4) or the application layer (Layer 7).

Key Features

Hardware load balancers are dedicated devices designed to manage network traffic and distribute it across multiple servers to optimize performance and reliability. When discussing hardware load balancers, several key points should be addressed:

Performance

Hardware load balancers offer high performance since they are built with specialized processors and optimized to handle a large traffic volume with low latency.

Security

Hardware load balancers often have integrated security features like firewalls, intrusion prevention systems, and SSL offloading.

Reliability

Hardware load balancers are generally more reliable thanks to their dedicated nature. They come with a lower risk of software conflicts and crashes.

Scalability

Although scalable, their capacity is limited by physical constraints and may require added hardware for major scale-ups.

Use Cases

Here are some use cases for hardware load balancers:

1. High-Traffic Websites and Enterprise Applications

When discussing the use cases of hardware load balancers, especially in the context of high-traffic websites and enterprise applications, several key aspects should be highlighted:

E-Commerce Platforms

Large-scale online stores experiencing consistent high traffic, especially during peak shopping seasons, benefit from the optimal performance of hardware load balancers.

Financial Institutions

Banks and financial services handling high-volume transactions demand the reliability and security offered by hardware solutions.

Media Streaming Services

Platforms with a high volume of concurrent streaming sessions need hardware load balancers’ throughput and low-latency processing.

2. Environments Where Security and Performance Are Critical

In environments where security and performance are critical, hardware load balancers play a pivotal role. These environments often have stringent requirements for data protection, uptime, and response times. Here’s how hardware load balancers fit into such contexts:

Healthcare Data Management

Hospitals and health systems dealing with sensitive patient data and requiring high uptime can depend on the security and performance of hardware load balancers.

Government and Defense

Hardware load balancers are often the go-to pick for government websites and military applications where security and consistent performance are non-negotiable.

3. Organizations Favoring Dedicated Network Management Equipment

Organizations that prefer dedicated network management equipment often prioritize control, performance, and security. Hardware load balancers are a key component in such settings due to their specialized capabilities. Here’s why these organizations might favor hardware load balancers:

Large Data Centers

Organizations operating their own large-scale data centers may pick hardware load balancers for their dedicated nature and ability to manage extensive traffic.

Enterprises with Strict Compliance Requirements

Businesses subject to strict regulatory standards may find hardware load balancers more aligned with their compliance needs due to their excellent security features.

What Are Software Load Balancers?

Software load balancers are services or applications that run on standard hardware or virtual machines. Thanks to their software nature, they perform the same function as hardware load balancers but are more flexible and scalable.

Key Features

Software load balancers, in contrast to their hardware counterparts, are applications that perform load-balancing tasks. They offer a level of flexibility and integration that can be particularly beneficial in dynamic, virtualized environments. Here are key aspects to consider:

Flexibility

Software load balancers can be conveniently configured and updated with new features or protocols.

Cost-Effective

They are usually cheaper than hardware solutions because they can run on commodity hardware or in cloud environments.

Scalability

Software load balancers are easily scalable. They can grow with your traffic needs, often without added physical infrastructure.

Customization

Software load balancers allow for added customization and can be integrated into complex, cloud-based, or virtualized environments.

Use Cases

Here are some use cases for software load balancers:

1. Cloud Environments and Virtual Setups

In cloud environments and virtual setups, software load balancers are particularly advantageous due to their adaptability and integration capabilities. Here are some key points regarding their use cases in such environments:

Cloud-Based Startups

Startups that use cloud services for their flexibility and scalability benefit from how easily software load balancers adapt to and integrate with those services.

Virtual Desktop Infrastructure (VDI)

Organizations deploying VDI solutions can use software load balancers to disperse traffic across their virtual desktop environments.

Also Read: VDI vs VM: Understand The Differences

2. Small to Medium-Sized Businesses or Websites with Variable Traffic

Software load balancers offer a cost-effective and flexible solution for small to medium-sized businesses or websites with variable traffic. Here’s how they can be particularly beneficial in such scenarios:

Seasonal Online Retailers

Retailers with high traffic fluctuations around specific seasons or sales events can take advantage of the scalability of software load balancers.

Emerging Tech Companies

Flourishing tech companies require a scalable, cost-effective solution to manage growing traffic as they expand.

3. Organizations Looking for Cost-Effective and Flexible Solutions

Organizations seeking cost-effective and flexible solutions often turn to software load balancers due to their adaptability, ease of deployment, and scalability. Here are the key reasons why they are a preferred choice in such scenarios:

Educational Institutions

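Universities and online education platforms often see fluctuating traffic patterns, especially during enrollment phases or exam times. Software load balancers let them absorb these peaks without paying for unused capacity the rest of the year.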

Small to Medium Enterprises (SMEs) with Diverse Needs

SMEs need a cost-effective solution to support their varying online presence and internal applications.

Also Read: Understanding The Core Components Of Kubernetes Clusters.

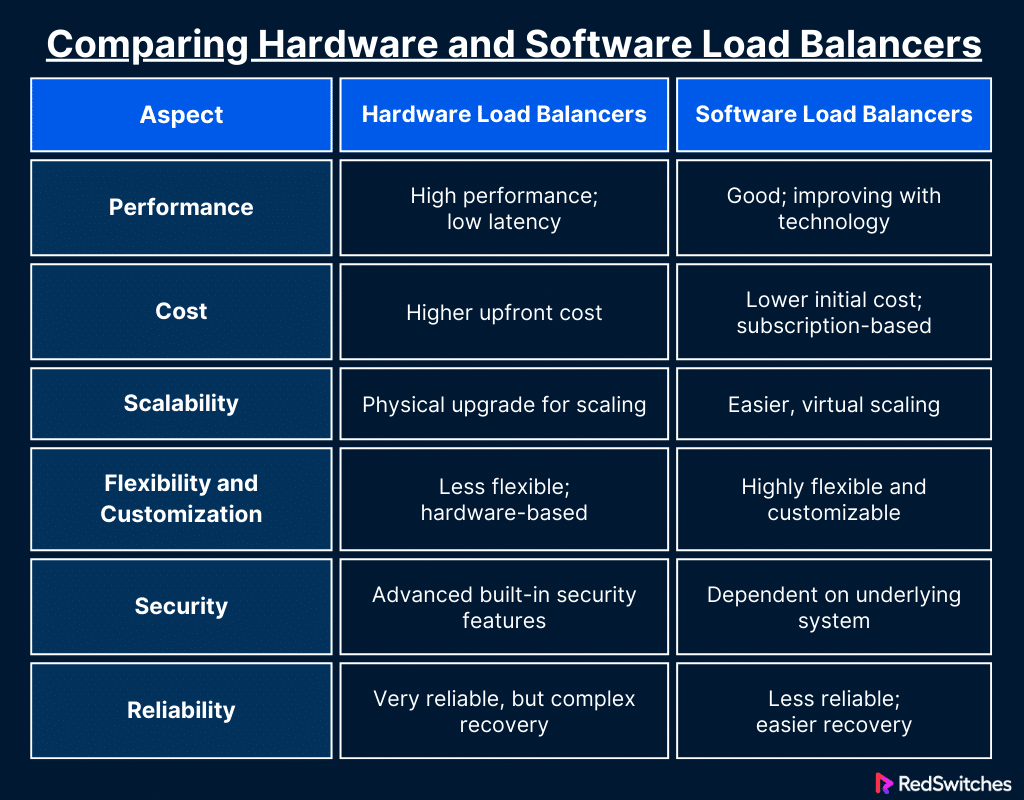

Comparing Hardware and Software Load Balancers

When comparing hardware and software load balancers, it’s crucial to consider various factors such as performance, cost, flexibility, and the environment’s specific needs. Here’s a detailed comparison:

Cost

When comparing the costs associated with hardware and software load balancers, it’s important to consider not just the initial investment but also the long-term expenses related to maintenance, scaling, and updates. Here’s an analysis:

Hardware Load Balancers

The upfront investment is higher. It covers the cost of the physical device as well as installation and maintenance. This can be a major factor for businesses with limited capital. Hardware can also become outdated as technology develops, leading to further investment in the latest models.

Software Load Balancers

Software load balancers usually follow a subscription model or are available as part of a cloud service package. This makes them more accessible for smaller businesses or those with fluctuating traffic patterns. The operational costs are generally lower. Updates or upgrades can be done with low additional investment.

Performance

In comparing the performance of hardware and software load balancers, several factors such as processing speed, capacity to handle concurrent connections, and response time should be considered. Here’s how they stack up:

Hardware Load Balancers

Hardware load balancers are engineered for optimal performance, featuring dedicated, high-performance hardware. This includes Application-Specific Integrated Circuits (ASICs) and Field-Programmable Gate Arrays (FPGAs), which are tailored for fast network traffic processing. This results in lower latency and higher throughput. This makes them ideal for handling high-traffic loads.

Software Load Balancers

Software load balancers are often seen as less powerful than hardware ones. However, improvements in both commodity hardware and software have narrowed this performance gap. Modern software load balancers run on powerful general-purpose processors and use efficient algorithms to distribute traffic, although they may still trail dedicated hardware in the most demanding high-traffic situations.

Scalability

Scalability is a crucial factor when comparing hardware and software load balancers, as it determines how well the solution can adapt to changing demands and traffic patterns. Here’s an analysis:

Hardware Load Balancers

Scaling up often requires buying added hardware or higher-capacity models. This can incur notable expenditures and create physical space issues. This may, however, be a manageable aspect for stable environments with predictable growth.

Software Load Balancers

Software load balancers excel in scalability. They can be conveniently scaled up or down based on demand, often automatically. This makes them ideal for businesses experiencing accelerated growth or fluctuating traffic patterns.

Flexibility and Customization

Flexibility and customization are key considerations when choosing between hardware and software load balancers, as they determine how well the solution can adapt to specific needs and network environments. Here’s how they compare:

Hardware Load Balancers

Hardware load balancers are known to be less flexible regarding deployment and integration. Customization options might be limited to the features provided by the manufacturer. They do, however, offer powerful solutions for standard load-balancing needs.

Software Load Balancers

Software load balancers offer a high degree of customization. Businesses can tailor the load balancer to specific needs, integrate it with other software tools, and deploy it in various environments, like on-premises or hybrid setups.

Security

Security is a paramount concern when comparing hardware and software load balancers, especially considering the increasing sophistication of cyber threats. Here’s how both types of load balancers measure up in terms of security:

Hardware Load Balancers

Hardware load balancers have advanced security features and are less likely to face software-based attacks. This makes them suitable for financial institutions or healthcare, where security is integral.

Software Load Balancers

Software load balancers also offer great security features. The level of security can depend on the underlying platform and how well it’s managed. Regular software updates are crucial for maintaining security.

Reliability

Reliability is a critical factor in ensuring uninterrupted service and maintaining user trust. Here’s how hardware and software load balancers compare in terms of reliability:

Hardware Load Balancers

The dedicated nature of hardware load balancers, and their lack of reliance on underlying operating systems or third-party software, makes them reliable. They are less likely to crash and offer consistent performance over time.

Software Load Balancers

While generally reliable, software load balancers can be susceptible to issues related to their broader software environment. This includes potential conflicts with other applications or issues in the underlying operating system.

Also Read: How To Resolve Cloudflare Error 522 | 8 Easy Solutions.

Advantages of Migrating From a Classic Load Balancer

Migrating from a classic load balancer to a more advanced network load balancer can offer many advantages. Below are some key benefits of this migration:

Enhanced Performance and Throughput

A Network load balancer is engineered to manage millions of requests every second while ensuring ultra-low latencies. This is especially beneficial for applications requiring high-performance handling of TCP traffic. Compared to a classic load balancer, a network load balancer offers better throughput and can manage sudden and massive spikes in traffic, ensuring consistent application performance.

Robust Health Checks and Faster Failure Detection

A network load balancer provides more sophisticated and configurable health checks than a classic one. It can quickly detect unhealthy instances and reroute traffic to healthy ones in real time. This rapid response to potential issues means better availability and reliability of services, minimizing downtime and enhancing user experience.
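A configurable health check usually boils down to counting consecutive probe results against thresholds. The sketch below shows that logic in isolation; the `HealthTracker` class and its default thresholds are assumptions chosen for illustration, not the settings of any particular load balancer.

```python
from dataclasses import dataclass


@dataclass
class TargetHealth:
    healthy: bool = True
    streak: int = 0  # consecutive probe results that contradict the current status


class HealthTracker:
    """Flips a target's status only after enough consecutive contrary results."""

    def __init__(self, targets, unhealthy_threshold=3, healthy_threshold=2):
        self._state = {t: TargetHealth() for t in targets}
        self._unhealthy_threshold = unhealthy_threshold
        self._healthy_threshold = healthy_threshold

    def record(self, target, check_passed):
        state = self._state[target]
        if check_passed == state.healthy:
            state.streak = 0  # the result agrees with the status, so reset
            return
        state.streak += 1
        limit = (self._healthy_threshold if check_passed
                 else self._unhealthy_threshold)
        if state.streak >= limit:
            state.healthy = check_passed
            state.streak = 0

    def healthy_targets(self):
        # Traffic should only be routed to the targets returned here.
        return [t for t, s in self._state.items() if s.healthy]
```

Lower thresholds detect failures faster but react more readily to a single dropped probe, which is the trade-off that configurable health checks expose.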

Higher Security and Isolation

Transitioning to a network load balancer enhances security. These load balancers operate at the connection level. This offers improved isolation and protection against attacks. They also support integration with advanced security systems and protocols. This offers a more robust defense mechanism than what is available with classic load balancers.

Advanced Routing Capabilities

A network load balancer supports more sophisticated routing mechanisms. It can route traffic based on IP protocol data, enabling more precise control over traffic distribution. This is great for applications requiring specialized routing rules and is a significant improvement over the more basic routing capabilities of classic load balancers.

Lower Latency

Latency is a critical factor for user satisfaction and application performance. A Network load balancer is designed to minimize latency, offering a more responsive experience for end-users. This reduced latency is especially vital for real-time applications and services where a slight delay can significantly impact performance.

Cost-Effectiveness

While the upfront investment for a network load balancer might be higher than a classic one, the long-term cost benefits are significant. Improved efficiency, reduced downtime, and better resource utilization contribute to a more cost-effective solution in the long run.

Also Read: Learn To Enable & Access NGINX Logs In 5 Minutes.

Why Use A Network Load Balancer In Cloud Computing?

Using a network load balancer in cloud computing has become increasingly important as businesses aim to improve their online services’ performance, reliability, and scalability.

A network load balancer is important in cloud computing since it efficiently manages web traffic and optimizes resource utilization. Below are a few more reasons why implementing a network load balancer is important in a cloud computing context:

1. Improved Performance and Response Time

A network load balancer distributes incoming traffic across multiple servers or instances in the cloud. This distribution helps ensure that no single server is bombarded with excessive load, which improves overall response time and performance. It also absorbs traffic spikes and high request volumes, so applications remain responsive.

2. Enhanced Availability and Reliability

A key benefit of using a network load balancer is the application’s increased availability and reliability. A network load balancer monitors the servers behind it, so when one fails or becomes overloaded, traffic can be rerouted to other healthy servers.

3. Efficient Resource Utilization

A network load balancer helps ensure that no server is overworked while others sit underused. It achieves this by spreading work evenly across server resources in the cloud. This effective task allocation facilitates the optimal use of resources. It helps lower costs and improves operational effectiveness.

4. Simplified Application Deployment

Deploying and managing cloud applications becomes simpler with a network load balancer. It abstracts the challenges of managing individual server workloads. This enables developers to focus more on application development instead of traffic distribution.

5 Strategies to Simplify Load Balancing in the Cloud

Simplifying load balancing in the cloud is crucial for ensuring efficient application performance and user satisfaction. Below are five strategies to simplify load balancing in the cloud:

1. Use Advanced Network Load Balancers

Using a network load balancer is an excellent strategy for simplifying cloud load balancing. These load balancers efficiently distribute network traffic across multiple servers in the cloud.

A network load balancer is designed for high performance and low latency, handling millions of requests per second and making real-time routing decisions. This capability ensures user requests are efficiently distributed across all resources, including dedicated servers.

2. Implement Auto-Scaling with Load Balancers

Auto-scaling with a network load balancer can greatly simplify load balancing in the cloud. This approach automatically adjusts the number of instances or dedicated servers in response to varying load conditions.

By dynamically scaling resources, you ensure that the load balancer always has the optimal number of instances to distribute traffic to, preventing the overloading of servers and maintaining consistent performance levels.
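The core of an auto-scaling rule can be sketched as a small calculation. The function below is a simplified illustration; `desired_instances`, its parameters, and the default limits are hypothetical, and real auto-scaling services add cooldown periods and smoothing on top of this.

```python
import math


def desired_instances(current_rps, target_rps_per_instance,
                      min_instances=1, max_instances=10):
    """Return how many instances are needed to keep each one near its target load."""
    if target_rps_per_instance <= 0:
        raise ValueError("target_rps_per_instance must be positive")
    needed = math.ceil(current_rps / target_rps_per_instance)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    # 450 requests per second at 100 per instance needs 5 instances.
    print(desired_instances(450, 100))
```

The load balancer then spreads traffic across however many instances this calculation produces, so capacity follows demand in both directions.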

3. Optimize with Cloud-Based Analytics

Use cloud-based analytics to monitor and analyze the performance of your network load balancer and the dedicated servers it distributes traffic to. Analytics tools can provide insights into traffic patterns, server health, and load balancer performance.

Using this data, you can make informed decisions about resource allocation, identify potential bottlenecks, and optimize load-balancing rules for better efficiency.
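As one example of turning monitoring data into a decision, the sketch below flags servers whose typical latency is well above the rest of the fleet. The `find_bottlenecks` function, the server names, and the 1.5x factor are illustrative assumptions rather than a recommended rule.

```python
import statistics


def find_bottlenecks(latencies_ms, factor=1.5):
    """Return servers whose median latency exceeds `factor` times the fleet median."""
    medians = {server: statistics.median(samples)
               for server, samples in latencies_ms.items()}
    fleet_median = statistics.median(medians.values())
    return sorted(server for server, value in medians.items()
                  if value > factor * fleet_median)


if __name__ == "__main__":
    # Hypothetical latency samples in milliseconds, keyed by server name.
    samples = {
        "web-1": [20, 22, 21],
        "web-2": [19, 23, 20],
        "web-3": [80, 95, 90],
    }
    print(find_bottlenecks(samples))
```

A server that shows up in this list repeatedly is a candidate for a lower traffic weight, a resource upgrade, or a closer look at what it is running.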

4. Centralize Load Balancing Management

Centralizing the management of your load-balancing infrastructure, including network load balancers and dedicated servers, simplifies administration and oversight. A centralized management platform allows for more straightforward configuration, monitoring, and updating of your load-balancing strategies.

This unified approach reduces the complexity of managing multiple load balancers and servers. It streamlines operations and minimizes the potential for errors.

5. Employ Hybrid Load Balancing Solutions

Implementing a hybrid load-balancing solution with a cloud-based network load balancer and dedicated server can be highly effective for organizations using both cloud and on-premises environments.

This strategy allows for more flexible and efficient traffic distribution. It caters to different workloads and environments. Hybrid solutions ensure that load balancing is optimized across all available resources, no matter their location.

Future of Load Balancing

Significant trends and technical developments will reshape network management and application delivery. As the need for error-free, continuous service increases along with network complexity, load-balancing solutions are developing to fulfill these demands. Below, we look at what this vital field may look like in the future:

1. Increased Adoption of Cloud-Based Load Balancing

As more organizations switch to cloud environments, cloud-based load-balancing solutions will likely become more common. These solutions offer flexibility, scalability, and cost-effectiveness, fitting well with the dynamic nature of cloud services. Cloud-native load balancers will likely become the standard, offering improved capabilities like auto-scaling and integration with cloud services.

2. Greater Emphasis on Application-Aware Load Balancing

Future load balancers will be more application-aware. They will distribute traffic based not only on network parameters but also on the specific needs and characteristics of the applications. This approach ensures the resources are optimized for the best application performance and user experience.

3. Automation and Self-Healing Systems

Automation in load balancing will become more sophisticated. Systems will be capable of self-healing and self-optimization. Load balancers will automatically detect and rectify issues, adjust configurations, and optimize performance without human intervention.

4. Multi-Cloud and Hybrid Cloud Load Balancing

As organizations increasingly adopt multi-cloud and hybrid cloud strategies, load balancers must operate smoothly across different cloud environments. This will involve balancing loads not only within a single cloud or data center but also across multiple cloud platforms and on-premises infrastructure.

5. Edge Computing Compatibility

With the increasing popularity of edge computing, load balancers will have to adapt to environments where processing is done closer to the data source. This will include distributing traffic between the central data centers and edge locations, ensuring optimal performance and minimal latency for applications that depend on real-time data processing.

Conclusion – Network Load Balancer

After discussing load balancers, it is evident that the role of load balancers in server performance is undeniable. Load balancers are integral components that empower applications to operate at their peak.

RedSwitches offers ideal services for those who want to upgrade their load-balancing solutions. The provider offers the infrastructure and support to optimize your online presence for performance.

Choosing RedSwitches means choosing a partner dedicated to ensuring your digital success. Visit our website today if you have more queries about how our load-balancing solutions can elevate your online platform.

FAQ

Q. What does a network load balancer do?

A network load balancer disperses network traffic throughout several servers in a network. This ensures all servers bear the load equally. This improves the application’s efficiency, reliability, and capacity. It operates at the transport layer and can handle millions of requests per second while ensuring low latencies.

Q. What is the difference between NLB and ALB?

A Network Load Balancer (NLB) functions at the fourth layer of the OSI model. It handles traffic at the TCP/UDP level and is built for high-performance, low-latency situations. An Application Load Balancer (ALB) functions at the seventh layer of the OSI model. It manages traffic at the HTTP/HTTPS level. It’s ideal for managing complex, content-based routing and can make routing decisions based on the content of user requests.

Q. What is an NLB device?

A Network Load Balancer is a hardware or software-based tool that distributes network traffic across many servers. NLBs are effective for ensuring efficient traffic distribution in high-volume networks and can operate transparently to end-users.

Q. What is Network Load Balancing?

Network Load Balancing is a technique that distributes incoming network traffic across many servers. It ensures that no single server gets excessive load. This helps in improving the reliability and scalability of applications and websites.

Q. What are the main benefits of using Cloud Load Balancing?

Cloud Load Balancing helps optimize resource usage and offers flexibility to handle changing traffic patterns. It also enhances the performance and reliability of applications running on the cloud.

Q. How does Load Balancing work across multiple servers?

Load Balancing assigns incoming network traffic to many servers to disperse the load across all servers. It ensures efficient resource utilization and keeps one server from facing excessive traffic.

Q. Can Network Load Balancer operate at both Layer 4 and Layer 7 levels?

A Network Load Balancer operates at Layer 4 of the OSI model, which is the Transport Layer. It focuses on dispersing traffic based on data from network and transport layer protocols (TCP/UDP). Network Load Balancers do not operate at Layer 7, as this layer demands understanding the content of the messages.

Q. How does Load Balancing handle traffic to different target groups?

Load Balancing distinguishes traffic based on the target groups defined in its configuration. It ensures requests are routed to the appropriate servers within the specified target groups. This allows for more granular control over traffic distribution.

Q. Can Load Balancer nodes work with both elastic and static IPs?

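Yes. Depending on the platform, load balancer nodes can use provider-assigned addresses or fixed ones. On AWS, for example, an Elastic IP is itself a static public address, and a Network Load Balancer can be given one Elastic IP per Availability Zone. This provides the flexibility to assign IP addresses according to the application’s specific requirements.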

Q. What is the significance of Cross-Zone Load Balancing in cloud environments?

Cross-Zone Load Balancing allows load balancers to distribute traffic evenly across instances in multiple availability zones. It improves fault tolerance and makes better use of resources spread across different zones.
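A short calculation shows why this matters when zones hold different numbers of instances. The sketch below assumes incoming traffic is first split equally between the zones that have instances; the function name and zone labels are hypothetical.

```python
def traffic_share_per_instance(instances_per_zone, cross_zone):
    """Return the fraction of total traffic each instance receives, keyed by zone.

    Assumes traffic arrives split equally between the zones that have instances.
    """
    active = {zone: n for zone, n in instances_per_zone.items() if n > 0}
    total_instances = sum(active.values())
    shares = {}
    for zone, count in active.items():
        if cross_zone:
            # Every instance is an equal target regardless of its zone.
            shares[zone] = 1 / total_instances
        else:
            # Each zone's slice is divided only among its own instances.
            shares[zone] = (1 / len(active)) / count
    return shares


if __name__ == "__main__":
    zones = {"zone-a": 2, "zone-b": 8}
    print(traffic_share_per_instance(zones, cross_zone=False))
    print(traffic_share_per_instance(zones, cross_zone=True))
```

With 2 instances in one zone and 8 in the other, each instance in the smaller zone carries 25% of the traffic without cross-zone balancing, compared with 10% per instance when it is enabled.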

Q. How does Load Balancing optimize resource usage in cloud environments?

Load Balancing optimizes resource usage by distributing incoming traffic across many servers. This prevents any single server from becoming overwhelmed. It also ensures that resources are utilized effectively, enhancing application performance and availability.

Q. How does Network Load Balancer differ from other load balancing services?

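The Network Load Balancer operates at Layer 4 and is built for very high traffic volumes at low latency. This makes it a better fit for raw throughput and connection-level scalability than Layer 7 services like the Application Load Balancer, which trade some speed for content-based routing.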

Q. What are the advantages of using a Network Load Balancer for load balancing?

A Network Load Balancer offers high performance, low latency, and support for static IP addresses. It can also handle millions of requests per second. This makes it a preferred choice for high-performance applications.

Q. What is the difference between layer 4 and layer 7 load balancing?

Layer 4 load balancing operates at the transport layer. It directs traffic based on source and destination IP addresses and ports. Layer 7 load balancing works at the application layer. It considers additional factors like HTTP headers and cookies for advanced routing decisions.
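The difference is easiest to see side by side. In the simplified sketch below, the Layer 4 function looks only at the destination port, while the Layer 7 function inspects the request path. The `Request` class, the pool names, and the rule format are hypothetical and only meant to illustrate the distinction.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    src_ip: str
    dst_port: int
    path: str = "/"                      # visible only at Layer 7
    headers: dict = field(default_factory=dict)


def route_layer4(request, port_pools):
    """Pick a pool using transport-level data only (here, the destination port)."""
    return port_pools[request.dst_port]


def route_layer7(request, path_rules, default_pool):
    """Pick a pool by inspecting application-level content (here, the URL path)."""
    for prefix, pool in path_rules:
        if request.path.startswith(prefix):
            return pool
    return default_pool


if __name__ == "__main__":
    req = Request(src_ip="203.0.113.7", dst_port=443, path="/api/orders")
    print(route_layer4(req, {443: "tls-pool", 53: "dns-pool"}))
    print(route_layer7(req, [("/api/", "api-pool"), ("/static/", "cdn-pool")],
                       "web-pool"))
```

The Layer 4 decision needs no knowledge of the request body, which is part of why it is fast, while the Layer 7 decision can separate API calls from static content arriving on the same port.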