Key Takeaways

- Load balancing ensures fast, reliable online services. Various strategies exist, each tailored to specific needs and environments.

- Round Robin is simple and distributes requests evenly but doesn’t consider server load.

- Least Connections focuses on current server load, directing traffic to the server with the fewest active connections.

- Least Response Time combines active connection counts with server response speed to route requests to the server likely to respond fastest.

- Hash-based ensures consistent routing based on client attributes.

- Weighted strategies (Weighted Round Robin and Weighted Least Connections) account for server capacity, giving more requests to more robust servers.

- IP Hash maintains user session consistency.

- Resource-based looks at real-time server resources for load distribution.

- Geographic or Location-Based reduces latency by connecting users to the nearest server.

- Predictive Algorithms use machine learning for dynamic, intelligent load distribution.

- Priority-based ensures critical tasks get resources first.

- Implementation challenges include complexity, server mismatch, and security.

- Best practices involve understanding traffic, choosing the right strategy, continuous monitoring, and scalability.

In today’s digital world, websites and applications are integral to our daily lives. Ensuring seamless online experiences is more important than ever. A key player in this arena is load balancing, a technique that’s as crucial as it is fascinating.

By distributing computational workloads across multiple computing resources, load balancing enhances the efficiency of dedicated servers, accelerating performance and reducing delays.

It’s like having several checkout lines in a grocery store: the more lines are open, the shorter the wait for customers, which makes for a better shopping experience. In the virtual world, this means happier users thanks to shorter loading times and more reliable access to online services.

In cloud computing, load balancing is crucial, and experts are always looking for better ways to do it. They use specialized metrics to measure how well it’s working, which helps make services even faster and more reliable.

In this article, we’ll look at different ways to balance load. Some are simple; others are more advanced. We’ll see how they help make websites and apps run smoothly. Whether you’re an IT expert or just curious, you’ll learn how load balancing strategies improve the internet.

Table of Contents

- Key Takeaways

- What is Load Balancing?

- What Are The Benefits Of Load Balancing?

- Load Balancing Strategies

- Round Robin Load Balancing

- Least Connections Load Balancing

- Least Response Time Load Balancing

- Hash-Based Load Balancing

- Weighted Least Connections Load Balancing

- IP Hash Load Balancing

- Resource-Based Load Balancing

- Geographic or Location-Based Load Balancing

- Predictive Algorithms (Machine Learning) in Load Balancing

- Priority-Based Load Balancing

- What Is OSI Layer Optimization In Load Balancing?

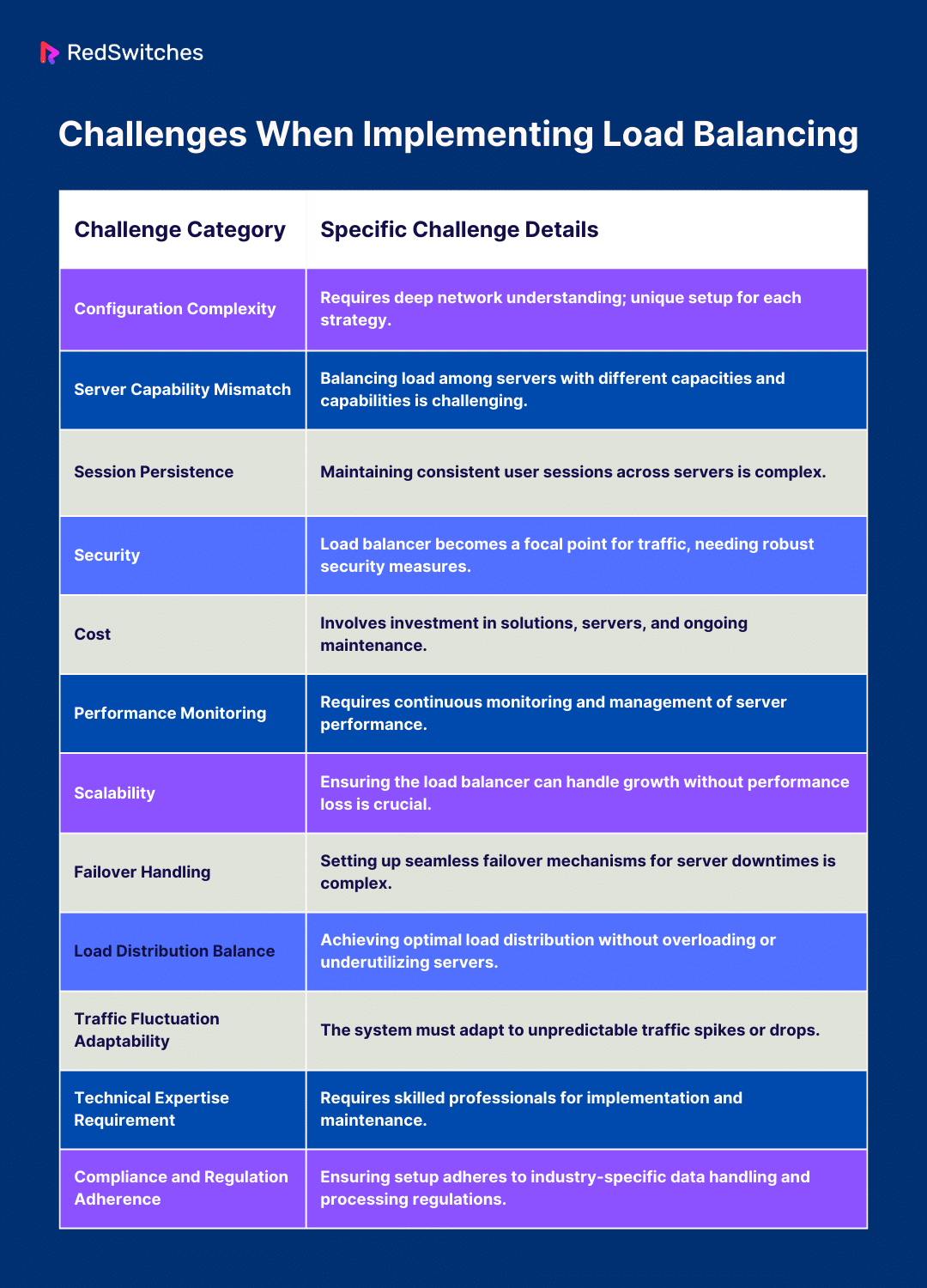

- Challenges When Implementing Load Balancing

- Complex Configuration

- Server Capacity and Capability Mismatch

- Maintaining Session Persistence

- Security Concerns

- Cost Implications

- Performance Monitoring and Management

- Scalability Issues

- Handling Failover

- Balancing Load Distribution

- Adapting to Traffic Fluctuations

- Technical Expertise

- Compliance and Regulation

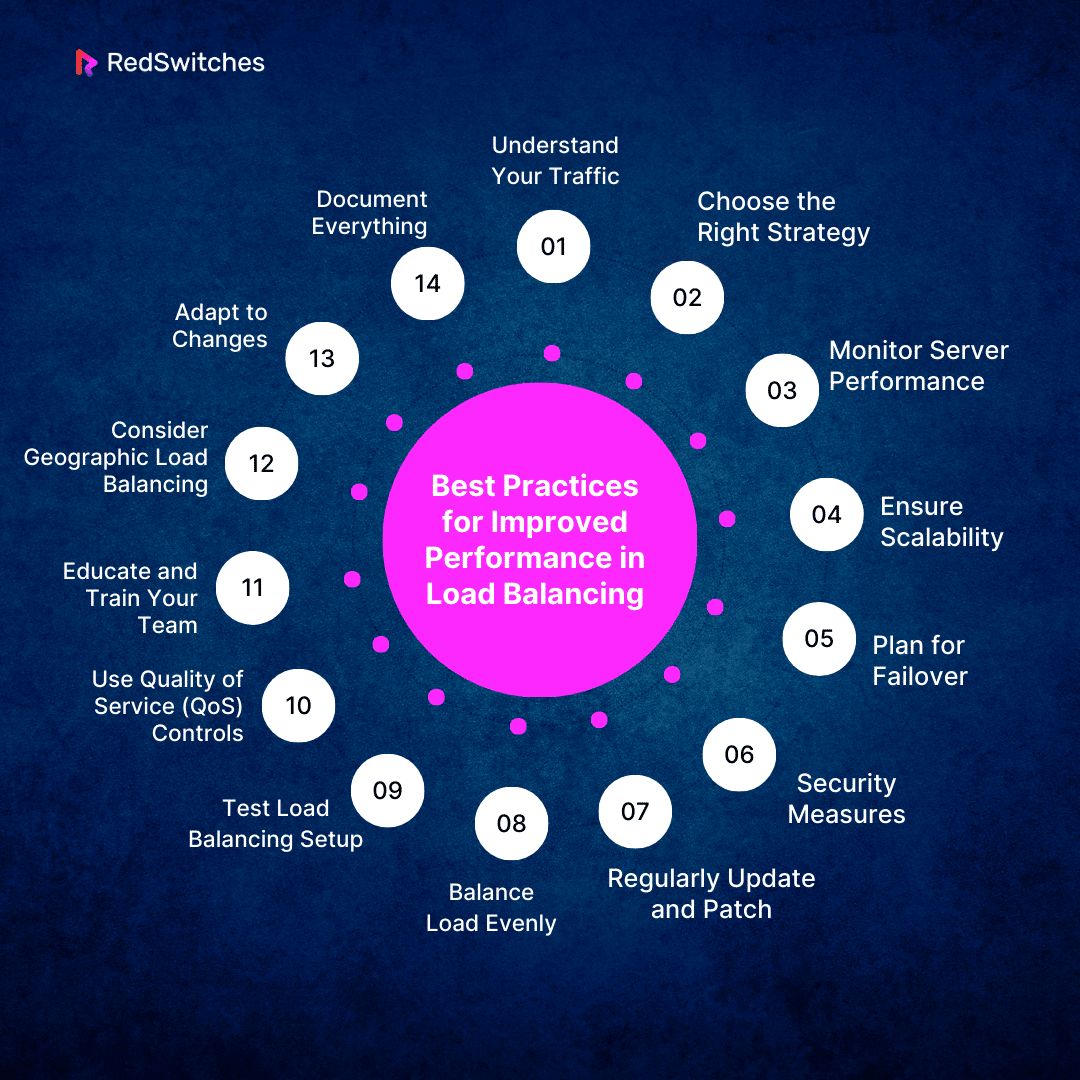

- Best Practices for Improved Performance in Load Balancing

- Understand Your Traffic

- Choose the Right Strategy

- Monitor Server Performance

- Ensure Scalability

- Plan for Failover

- Security Measures

- Regularly Update and Patch

- Balance Load Evenly

- Test Load Balancing Setup

- Use Quality of Service (QoS) Controls

- Educate and Train Your Team

- Consider Geographic Load Balancing

- Adapt to Changes

- Document Everything

- Conclusion

- FAQs

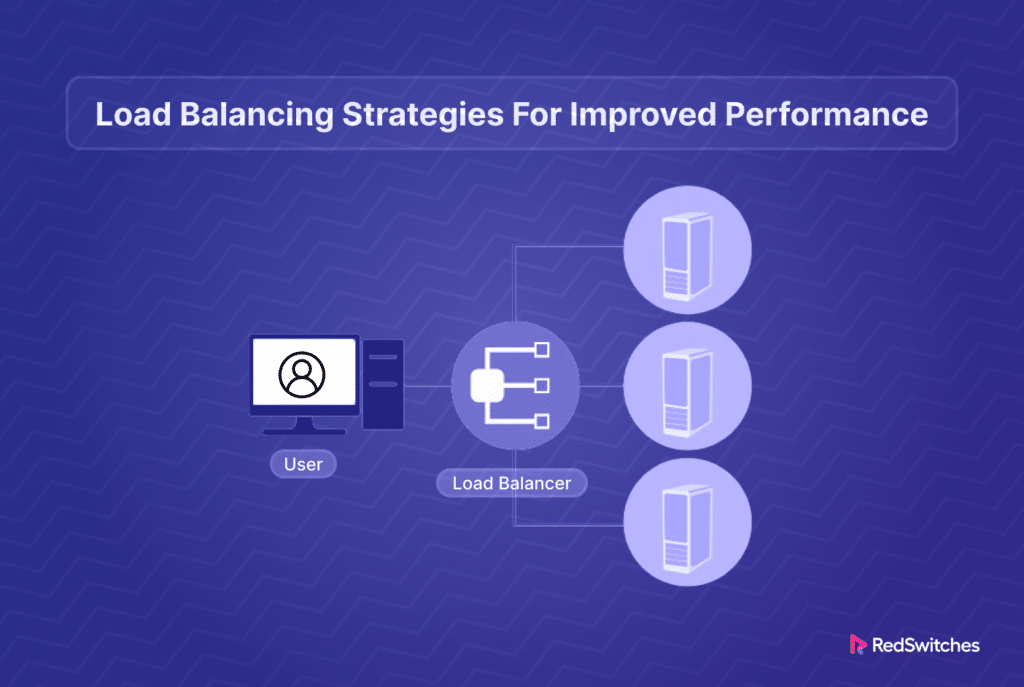

What is Load Balancing?


Load balancing is the process of distributing tasks and network traffic across multiple servers so that no single server bears too much load. It’s a technique to optimize resource use, maximize throughput, minimize response time, and avoid overloading any single resource.

In technical terms, load balancing improves the distribution of workloads across multiple computing resources. This can include servers, network links, or central processing units.


Why is Load Balancing Important?

Load balancing is crucial for maintaining the health and performance of websites and applications:

- Improves Performance: It enhances the speed and reliability of websites and applications. By evenly distributing the workload, load balancing ensures services are delivered efficiently.

- Scalability: It allows systems to handle increases in demand. More resources can be added without disrupting the service, allowing the system to accommodate more users smoothly.

- Prevents Downtime: Distributing the load reduces the risk of server overload. This prevents downtime and ensures that the application or website remains available and operational.

- Failover Support: If one server fails, load balancing automatically redirects traffic to the remaining operational servers. This failover mechanism ensures continuous service availability.

- Resource Utilization: It optimizes server capacity, ensuring no single server is underutilized or overburdened. This leads to more efficient resource use.
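To make the failover idea concrete, here is a minimal Python sketch, assuming that some external health check decides which servers are up. The `FailoverBalancer` class and its `mark_down`/`mark_up` methods are hypothetical names, not part of any particular product; the balancer simply rotates through the pool and skips any server currently marked as down.

```python
from itertools import cycle


class FailoverBalancer:
    """Rotate through servers, skipping any that a health check marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)   # assumed to be updated by a health checker
        self._ring = cycle(self.servers)   # endless round-robin iterator

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def pick(self):
        # Look at each server at most once per call before giving up.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

With servers `a`, `b`, `c` and `b` marked down, requests alternate between `a` and `c` until `b` is marked up again.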

Through these mechanisms, load balancing is essential in ensuring that web services are delivered quickly, reliably, and efficiently. It’s a critical part of the infrastructure that supports the smooth operation of online services.

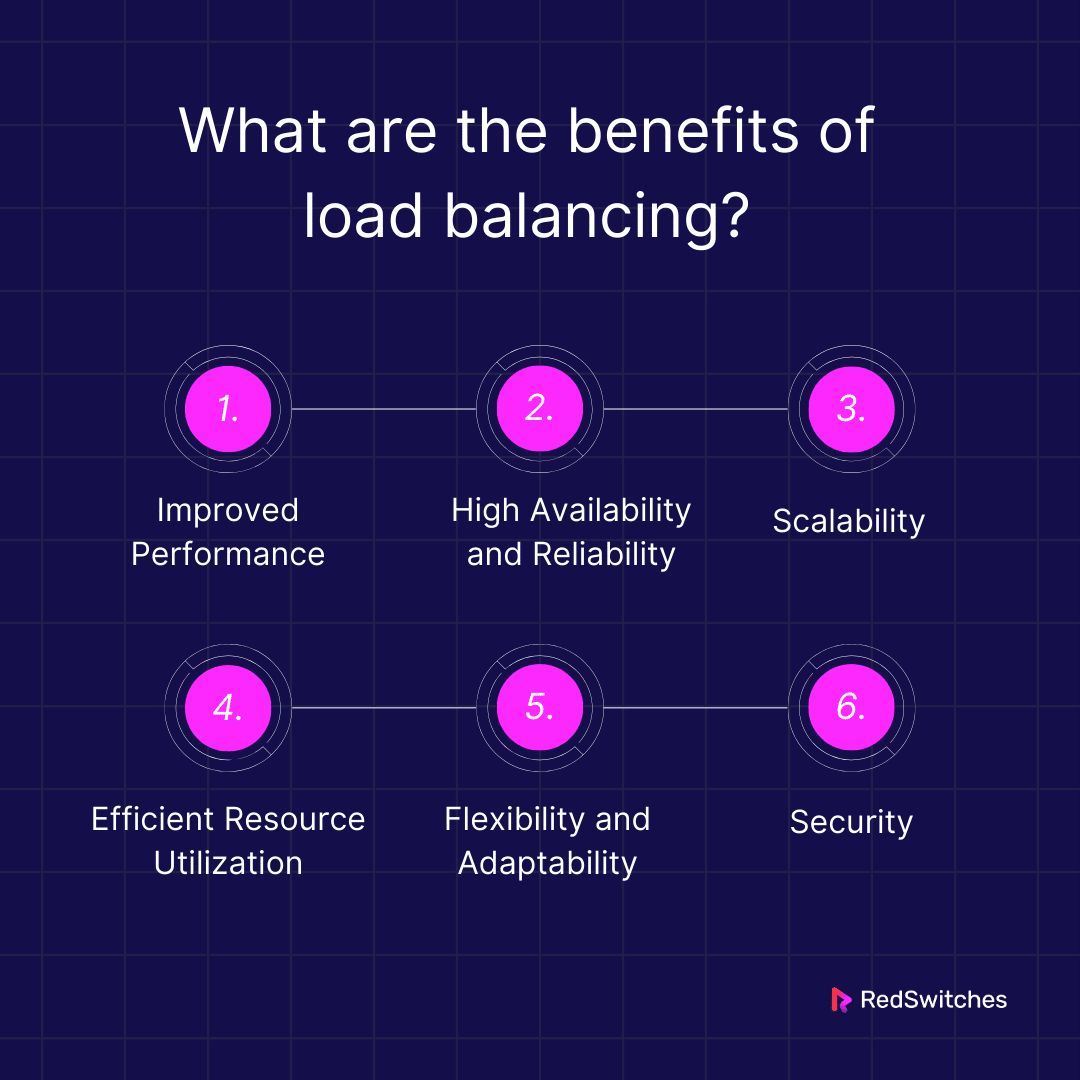

What Are The Benefits Of Load Balancing?

Load balancing isn’t just about distributing network traffic. It’s a multifaceted strategy that benefits your network’s performance and reliability. From speeding up your website to ensuring it’s always available, load balancing is an essential component of modern network infrastructure. Let’s dive into its key advantages.

Improved Performance

Load balancing boosts the performance of applications and websites. Distributing incoming network traffic across multiple servers ensures no single server bears too much load. This results in faster response times and higher throughput, providing a smoother user experience.

High Availability and Reliability

Load balancing enhances the availability and reliability of websites and applications. It does this by redirecting traffic away from servers that are down or overloaded to those that are operational. This means even if one server fails, the website or application remains up and running, minimizing downtime.

Scalability

Load balancing allows for easy scalability. As the demand increases, new servers can be added to the pool without disrupting the ongoing services. This makes it easier for businesses to grow and handle increased traffic without compromising on performance.

Efficient Resource Utilization

With load balancing, resources are used more efficiently. Traffic is distributed in a way that ensures no single dedicated server is idle or overworked. This optimal use of resources leads to cost savings and enhanced overall performance of the system.

Flexibility and Adaptability

Load balancing offers flexibility. It allows for different load distribution strategies based on current needs and conditions. Whether it’s a sudden spike in traffic or the introduction of a new application, load balancing can adapt and ensure efficient handling of network traffic.

Security

Load balancing can enhance security. By distributing traffic across multiple servers, it can help mitigate DDoS attacks and keep the network resilient against such threats. Moreover, some advanced load balancers have built-in security features that offer further protection against vulnerabilities.


By ensuring high performance, reliability, scalability, efficient resource utilization, flexibility, and enhanced security, load balancing becomes an indispensable tool for managing network traffic effectively.

Load Balancing Strategies


In this section, we explore load balancing strategies. Each one has its benefits. They help manage network traffic and improve service. Let’s look at how each strategy works and what it offers.

Round Robin Load Balancing

Round Robin is a straightforward load balancing strategy. It’s known for its simplicity and fairness. Here’s a closer look at how it operates and where it fits best.

Sequential Allocation

Round Robin distributes incoming network requests sequentially. It sends each new request to the next server on the list. After reaching the last server, it loops back to the first. This cycle ensures each server gets an equal number of requests over time.
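The sequential allocation described above fits in a few lines. This is an illustrative Python sketch only; `RoundRobinBalancer` and `pick` are hypothetical names. Note that it keeps no information about how busy each server is, which is exactly the trade-off this strategy makes for simplicity.

```python
class RoundRobinBalancer:
    """Hand out servers in a fixed order, wrapping back to the first."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self.servers = list(servers)
        self._next = 0  # index of the server that receives the next request

    def pick(self):
        server = self.servers[self._next]
        # Advance, wrapping around to 0 after the last server.
        self._next = (self._next + 1) % len(self.servers)
        return server
```

For a pool of `s1`, `s2`, `s3`, five requests go to `s1`, `s2`, `s3`, `s1`, `s2`.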

Uniformity and Simplicity

One of the main advantages of Round Robin is its uncomplicated nature. It doesn’t require complex algorithms or real-time analysis of server loads. This makes it relatively easy to implement and manage. It’s particularly effective when the servers in the pool have similar capabilities and configurations. In such environments, Round Robin ensures that no single server is overwhelmed while others remain idle.

Limitations to Consider

However, Round Robin does have its limitations. It doesn’t account for the current load or performance of a server, so a server might receive a new request even if it is already handling a heavy load. Round Robin is also a poor fit for setups where servers have different processing powers or memory capacities, because it treats all servers equally regardless of their individual capabilities.

Best Use Cases

Despite its limitations, Round Robin is highly effective in specific scenarios. It’s particularly suited for environments where servers are homogenous and tasks require similar processing times. It’s a popular choice due to its simplicity and the fairness in its approach to load distribution.

While Round Robin is straightforward and easy to implement, it’s essential to assess your specific needs and server environment, and to understand its limitations, before choosing it for your infrastructure. For uniform, predictable workloads, Round Robin can be an efficient and effective solution.

Least Connections Load Balancing

Least Connections is an intelligent load balancing strategy. It’s designed to consider the current load on each server. This method goes beyond the simplicity of Round Robin. It adds a layer of intelligence to load distribution.

Understanding the Workload

Unlike Round Robin, Least Connections pays attention to each server’s number of active connections. When a new request arrives, it’s sent to the server with the fewest active connections. This approach aims to prevent any server from getting overloaded.
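A minimal Python sketch of this rule, assuming the balancer itself tracks connections as requests start and finish (the class and method names are hypothetical):

```python
class LeastConnectionsBalancer:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}  # active connection count per server

    def acquire(self):
        # min() returns the first minimum it sees, so ties go to the
        # server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request finishes so the counts reflect real load.
        self.active[server] -= 1
```

The caller must call `release` when a request completes; if it forgets, the counts drift upward and the balancer’s picture of server load becomes wrong.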

Dynamic and Adaptive

Least Connections is dynamic. It adapts to real-time changes in server load, as reflected in each server’s active connection count. This makes it suitable for environments where the load is unpredictable or where request handling times vary significantly.

Advantages

The main advantage of Least Connections is its ability to balance the load evenly across servers, especially when some requests take longer to process than others. It helps in utilizing server resources efficiently and ensuring a more consistent response time for end-users.


Considerations

However, there are considerations. If the servers have different capabilities, the strategy might need tweaking. It’s essential to ensure that the server with the least connections can handle the new request without compromising performance.

Best Suited For

Least Connections is best for environments where request processing times are inconsistent or vary greatly. It’s also effective when there’s a mix of short and long tasks. This strategy helps handle sudden spikes in traffic more gracefully, ensuring no single server becomes a bottleneck.

Least Connections load balancing is a step up from more straightforward methods like Round Robin. It brings a smarter approach to managing server load. By considering the number of active connections, it achieves a more balanced and efficient distribution of requests. This leads to better performance and a smoother experience for users. However, it’s essential to consider the specific capabilities of each server to make the most of this strategy.

Least Response Time Load Balancing


Least Response Time takes load balancing a step further. This method examines not only the number of active connections but also how fast each server responds. It’s about directing traffic to the server that’s most likely to respond quickly.

Prioritizing Speed

This strategy prioritizes server speed. It’s not just about how many requests a server handles but how quickly it handles them. The server with the best combination of few active connections and fast response time gets the new request. This ensures that the network doesn’t just balance load but also maximizes speed.

Dual Criteria for Distribution

Unlike other methods, Least Response Time uses two criteria: the number of active connections and the server’s response time. This dual approach aims to optimize user experience by reducing wait times.
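How the two criteria are combined varies between implementations. As an assumption for illustration, the Python sketch below scores each server as its smoothed average response time multiplied by (active connections + 1) and picks the lowest score. The class name, the `alpha` smoothing factor, and the scoring formula are hypothetical choices, not a standard.

```python
class LeastResponseTimeBalancer:
    """Pick the server with the lowest (avg response time x pending load) score."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha                       # weight of the newest measurement
        self.active = {s: 0 for s in servers}    # active connections per server
        self.avg_ms = {s: 0.0 for s in servers}  # 0.0 means "no measurement yet"

    def _score(self, server):
        # Assumed rule: expected wait grows with both latency and queue length.
        return self.avg_ms[server] * (self.active[server] + 1)

    def acquire(self):
        server = min(self.active, key=self._score)
        self.active[server] += 1
        return server

    def release(self, server, elapsed_ms):
        # Record how long the request took and fold it into a moving average.
        self.active[server] -= 1
        old = self.avg_ms[server]
        if old == 0.0:
            self.avg_ms[server] = float(elapsed_ms)
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old
```

Unmeasured servers score zero, so each server receives at least one request before its measured speed starts to count. The extra bookkeeping in `release` is the real-time monitoring cost this method adds over Least Connections.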

Benefits

The key benefit of this method is its ability to enhance user experience. Websites and applications feel faster and more responsive. It’s particularly useful for services where speed is crucial. Users get quicker access to the needed resources, leading to higher satisfaction.

Complexity in Implementation

While the advantages are clear, this method is more complex than Round Robin or Least Connections. It requires real-time monitoring of server response times, adding a layer of complexity to the load balancing strategy.

Ideal Scenarios

Least Response Time is ideal for high-traffic environments where a small delay can lead to user dissatisfaction. It’s great for dynamic websites, online gaming platforms, or e-commerce sites, where fast and reliable resource access directly impacts the user experience.

Least Response Time is a sophisticated load balancing strategy. It optimizes performance by considering the number of connections and the server’s speed. This approach can significantly enhance user experience, making services faster and more reliable.

However, the added benefits come with increased complexity in monitoring and managing server response times. It’s a strategy well-suited for scenarios where speed is of the essence.

Hash-Based Load Balancing


Hash-Based load balancing introduces a method that adds precision to how requests are directed. It uses a unique attribute of each request, like the IP address or the request URL, to decide where to send it.

Consistency in Request Handling

This strategy ensures that requests sharing the same attribute value, such as the same client IP or the same URL, are always handled by the same server. It applies a hash function to the chosen request attributes, and the result determines which server gets the request.
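A minimal Python sketch of hash-based selection, assuming the attribute has already been extracted from the request as a string. The function name is hypothetical; the technique shown is plain hash-modulo-N selection.

```python
import hashlib


def pick_by_hash(key, servers):
    """Map a request attribute (e.g. a URL or client ID) to one server."""
    # Use a stable hash: Python's built-in hash() is randomized per process
    # for strings, so the same key could map differently after a restart.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]
```

Because the index depends on `len(servers)`, adding or removing a server changes where many keys land, which is one reason the server pool needs careful monitoring and adjustment.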

Predictable Routing

Hash-Based load balancing provides predictability. Once a pattern is established, it’s easy to know which server will handle a particular type of request. This is especially useful for maintaining user sessions or handling transactions where continuity is important.

Load Distribution Control

While it provides consistency, this method allows for controlled load distribution. Selecting the right attributes to hash can influence how the load is spread across the servers.

Challenges to Consider

One challenge is ensuring the load remains balanced. If not implemented carefully, certain servers might handle more load than others. Monitoring and adjusting the hash function or the server pool is crucial to maintaining balance.

Best Suited For

Hash-Based load balancing is ideal for applications where keeping a user’s session on the same server is important. It’s also useful in environments where requests of a certain type need specialized handling by specific dedicated servers.

Hash-Based load balancing offers precision and consistency that other methods don’t. It’s great for maintaining user sessions and managing specific types of requests. However, it requires careful setup and ongoing monitoring to distribute the load evenly. It’s a strategy that combines predictability with control, making it a valuable option for certain application scenarios.


Weighted Least Connections Load Balancing

Weighted Least Connections takes the Least Connections strategy further by adding weights to servers. This method considers each server’s current number of connections and accounts for the server’s capacity or performance.

Balancing with Precision

Each server is assigned a weight reflecting its ability to handle load. The load balancer considers these weights and the current number of active connections. It then directs new requests to the server with the least connections relative to its weight.
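The "least connections relative to weight" rule can be sketched in Python as follows. The weights are assumed to be positive numbers chosen by an operator, and the class name is hypothetical.

```python
class WeightedLeastConnectionsBalancer:
    """Pick the server with the lowest active-connections / weight ratio."""

    def __init__(self, weights):
        # weights: server -> positive number; higher means more capacity.
        self.weights = dict(weights)
        self.active = {s: 0 for s in self.weights}

    def acquire(self):
        # Lowest connections per unit of weight wins; ties go to the first server.
        server = min(self.active, key=lambda s: self.active[s] / self.weights[s])
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1
```

With weights `{"big": 3, "small": 1}` and no requests finishing, eight requests split six to `big` and two to `small`, matching the 3:1 capacity ratio.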

Dynamic Load Management

This strategy is dynamic and responsive. It’s excellent for environments where server load and capacity can change rapidly. It adjusts to ongoing conditions, aiming to ensure a smooth and balanced distribution of requests.

Efficient Utilization of Resources

Weighted Least Connections is about maximizing each server’s capacity. It ensures that powerful servers are fully utilized but not overburdened. At the same time, it prevents less capable servers from becoming a bottleneck.

Setup and Maintenance Considerations

While effective, this method requires careful setup. Determining the appropriate weight for each server is crucial. These weights may need to be adjusted over time to reflect changes in server performance or the overall load.

Ideal for Mixed Environments

This load balancing strategy suits setups with servers of varying capacities. It’s also beneficial when the workload is unpredictable or when it’s crucial to minimize response times.

Weighted Least Connections offers a sophisticated approach to load balancing. It combines the intelligence of considering the number of active connections with the insight of server capacity. This results in a highly efficient and responsive load distribution. However, it does demand a thorough understanding of the environment and ongoing management to ensure optimal performance.

IP Hash Load Balancing


IP Hash load balancing uses a unique method to distribute network traffic. It creates a consistent and predictable mapping between a client and a server. This method relies on the IP address of the client to determine which server will handle the request.

Consistent Client-Server Mapping

In IP Hash load balancing, a hash function is applied to the client’s IP address, and the result determines which server will handle the client’s requests. As long as the client’s IP address and the server pool stay the same, the client is consistently connected to the same server for the duration of their session.
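A minimal Python sketch of IP-based hashing, assuming the client address arrives as a string. The function name is hypothetical. Parsing with the standard `ipaddress` module first means different spellings of the same IPv6 address hash identically.

```python
import hashlib
import ipaddress


def pick_by_client_ip(client_ip, servers):
    """Send every request from the same client IP to the same server."""
    # Normalize: "2001:db8::1" and "2001:0db8:0:0:0:0:0:1" share one packed form.
    addr = ipaddress.ip_address(client_ip)
    digest = hashlib.sha256(addr.packed).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]
```

One consequence of hashing on the IP address alone: many users behind the same corporate proxy or NAT gateway share one public IP, so they all land on the same server. This is one reason IP Hash can distribute load unevenly.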

Session Persistence

This method is excellent for maintaining session persistence. It’s crucial for applications where the user’s session data is stored locally on the server. With IP Hash, user requests are directed to the same server, maintaining session continuity.

Simple and Predictable

IP Hash is straightforward in its approach. The client-server mapping is easy to predict and manage. This predictability can simplify the network’s overall management and troubleshooting.

Load Distribution Considerations

While IP Hash ensures session persistence, it may not evenly distribute the load across servers. The distribution depends on the client IP addresses, which may not always result in a balanced load. This can lead to some servers being overburdened while others are underutilized.

Best Suited for Specific Applications

IP Hash load balancing is best suited for applications that require session persistence. It’s ideal when the continuity of user sessions is more critical than evenly distributing the load across servers.

IP Hash load balancing offers a straightforward and effective solution for maintaining session persistence. It ensures that clients are consistently connected to the same server, which is crucial for certain applications. However, it’s important to consider its impact on load distribution and ensure that it aligns with the application’s requirements and the overall goals of the network infrastructure.


Resource-Based Load Balancing


Resource-Based load balancing is an advanced method that dynamically distributes traffic based on the actual resource usage of dedicated servers. It considers factors like CPU load, memory usage, and network bandwidth to make intelligent routing decisions.

Dynamic Resource Assessment

This strategy continuously monitors the resource usage of each server. It evaluates parameters like CPU load, memory consumption, and network traffic. Based on this real-time data, it directs incoming requests to the server with the most available resources.
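The selection step can be sketched in Python as below, assuming a monitoring agent already reports CPU, memory, and bandwidth usage as fractions between 0 and 1. The `ServerStats` type and the default weighting are hypothetical; real deployments would tune how much each resource counts.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    cpu: float        # fraction of CPU in use, 0.0 to 1.0
    memory: float     # fraction of memory in use, 0.0 to 1.0
    bandwidth: float  # fraction of network bandwidth in use, 0.0 to 1.0


def pick_by_resources(stats, weights=(0.5, 0.3, 0.2)):
    """Return the name of the server with the most spare capacity."""
    w_cpu, w_mem, w_net = weights  # hypothetical weighting; tune per workload

    def headroom(s):
        # Weighted sum of unused capacity across the three resources.
        return (w_cpu * (1 - s.cpu)
                + w_mem * (1 - s.memory)
                + w_net * (1 - s.bandwidth))

    return max(stats, key=headroom).name
```

A server at 90% CPU loses to one at 30% CPU under the default weights, even if the busier server has more free bandwidth.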

Adaptive and Responsive

Resource-Based load balancing is highly adaptive. It responds to changes in server load and performance in real-time. This ensures that no single server is overwhelmed and the workload is evenly distributed based on current conditions.

Maximizing Server Efficiency

By considering actual server resources, this method maximizes the efficiency of the server infrastructure. It prevents scenarios where some servers are idle while others are overloaded, making full use of the available resources.

Complexity in Implementation and Maintenance

While highly effective, Resource-Based load balancing is more complex to implement and maintain. It requires a setup that can monitor and analyze server resources in real-time and make instant decisions on traffic distribution.

Ideal for Dynamic Environments

This approach is well suited to environments where workloads change significantly and servers differ in capability. It’s especially beneficial in large cloud deployments and data centers, where available resources can change quickly.

Resource-Based load balancing smartly spreads out network traffic. It uses server resources well and adjusts to changes quickly, keeping things balanced and efficient. However, it’s complex and needs careful setup and management to work well.

Geographic or Location-Based Load Balancing

Geographic or Location-Based load balancing connects users to the nearest server. It’s a strategy that focuses on where users are. The goal is to make websites and apps faster and more reliable for everyone, no matter where they are.

Location Matters

This method uses the user’s location to decide where to send their requests. It often chooses the nearest server. This reduces the distance data travels, making everything faster.
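A minimal Python sketch of nearest-server selection, assuming the user’s approximate latitude and longitude are already known (for example, from IP geolocation). The data-center names and coordinates are hypothetical. Distance is the great-circle distance computed with the haversine formula.

```python
import math

# Hypothetical data-center locations as (latitude, longitude) in degrees.
DATA_CENTERS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_data_center(user_location, centers=DATA_CENTERS):
    """Return the name of the data center closest to the user."""
    return min(centers, key=lambda name: haversine_km(user_location, centers[name]))
```

In practice this decision is often made at the DNS level, and production systems usually also factor in server health and load rather than distance alone.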

Faster Access

The main advantage is speed. Users get connected to nearby servers. This means less waiting and quicker access to websites and apps.

Balanced Traffic Globally

Traffic gets spread out across servers worldwide. When one region is busy, servers in quieter regions can take on some of the load. This keeps the load balanced, no matter the time or place.

Content That Fits the Location

This method is great for showing users content that’s right for their region. It makes services more personal and useful.

Handling Global Networks

While powerful, this strategy needs careful management. Handling servers all over the world can be complex. It’s important to keep an eye on all locations to make sure they work well together.

Ideal for Worldwide Services

Geographic load balancing is perfect for services that reach users worldwide. It’s about giving everyone, everywhere, a fast and stable experience.

In short, Geographic or Location-Based load balancing makes online services quicker and more relevant. It does this by linking users to the nearest server. This strategy can make a big difference for companies that serve users worldwide. It brings faster performance and content that fits each user’s location. However, managing it well across many places is key to its success.


Predictive Algorithms (Machine Learning) in Load Balancing


Predictive Algorithms, especially those powered by Machine Learning, transform load balancing into a more intelligent and proactive process. This approach leverages historical data and real-time analytics to predict and manage network traffic.

Anticipating Traffic Patterns

Machine Learning algorithms analyze past traffic data. They identify patterns and trends. This analysis helps predict future traffic surges or drops. The load balancing system can prepare in advance by anticipating these changes, ensuring optimal performance.
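The forecast-then-provision loop can be illustrated without a full machine-learning stack. In the Python sketch below, Holt’s linear trend method (a simple statistical forecaster, not machine learning) stands in for a trained model, so treat it as a toy. The function names, smoothing factors, per-server capacity, and 20% headroom are all hypothetical.

```python
import math


def forecast_next(history, alpha=0.5, beta=0.5):
    """Holt's linear trend method: forecast the next value of a series.

    A simple statistical stand-in for a trained ML model. alpha smooths
    the level and beta smooths the trend (both hypothetical defaults).
    """
    if len(history) < 2:
        raise ValueError("need at least two observations")
    level, trend = history[0], history[1] - history[0]
    for observed in history[1:]:
        previous_level = level
        # Blend the new observation with where the old level and trend pointed.
        level = alpha * observed + (1 - alpha) * (level + trend)
        # Update the trend from how far the level actually moved.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + trend


def servers_needed(history, capacity_per_server, headroom=1.2):
    """Provision ahead of demand: forecast load, add a safety margin, round up."""
    predicted = forecast_next(history)
    return max(1, math.ceil(predicted * headroom / capacity_per_server))
```

For request rates of 100, 110, 120, and 130 per second, the trend-aware forecast is 140, so with an assumed 50 requests per second per server the sketch provisions 4 servers before the surge arrives.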

Adaptive and Smart

One of the key strengths of using Predictive Algorithms is their adaptability. They continuously learn and adjust. As they process more data, their predictions become more accurate. This means the system can dynamically adjust its strategies to balance the load more effectively.

Enhanced Efficiency and Performance

Predictive load balancing can significantly improve efficiency. By allocating resources based on predicted demand, it ensures servers are neither overburdened nor underutilized. This not only enhances performance but can also lead to cost savings.

Complexity and Resource Requirements

Implementing Machine Learning algorithms is complex. It requires significant computational resources and expertise in data science. The setup and maintenance of such systems can be challenging and resource-intensive.

Real-Time Decision Making

These algorithms enable real-time decision-making. They can quickly respond to sudden changes in traffic, redistributing the load instantly to maintain smooth operation.

Ideal for Large and Dynamic Environments

Predictive Algorithms are particularly beneficial in large, dynamic environments where traffic patterns are complex and constantly changing. They are ideal for cloud environments, large e-commerce platforms, and global online services.

Predictive Algorithms using Machine Learning offer a forward-thinking approach to load balancing. They provide intelligence and adaptability that traditional methods can’t match. By accurately forecasting traffic patterns, these algorithms help ensure efficient resource utilization and improve performance and user experience. However, their complexity and the need for advanced computational resources make them more suitable for larger, more dynamic networks.

Priority-Based Load Balancing

Priority-Based load balancing is a method that manages traffic based on the importance of requests or servers. It’s like having a VIP lane, ensuring critical tasks swiftly get the attention they need.

Setting Priorities

This method assigns priorities to traffic or servers. High-priority traffic or servers get first access to resources. This ensures that the most important tasks are handled quickly and efficiently.
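A minimal Python sketch of priority-based dispatching using the standard `heapq` module, assuming lower numbers mean more urgent requests. The class and method names are hypothetical.

```python
import heapq
import itertools


class PriorityDispatcher:
    """Hand out queued requests highest-priority first (lower number = more urgent)."""

    def __init__(self):
        self._heap = []
        # Tie-breaker: keeps FIFO order among requests with the same priority.
        self._order = itertools.count()

    def submit(self, request, priority):
        heapq.heappush(self._heap, (priority, next(self._order), request))

    def next_request(self):
        """Return the most urgent waiting request, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, request = heapq.heappop(self._heap)
        return request
```

A strict priority queue like this can starve low-priority work under sustained high-priority load. Systems that must still serve less critical tasks often add aging (gradually raising the priority of waiting requests) or reserve some capacity for lower tiers.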

Guaranteed Performance for Critical Tasks

The biggest advantage of Priority-Based load balancing is that it guarantees resources for high-priority tasks. Whether it’s a crucial database query or a request from a high-revenue customer, these tasks won’t have to wait in line.

Effective Resource Utilization

By focusing resources on high-priority tasks, this method makes sure that important jobs are completed swiftly. At the same time, it efficiently manages less critical tasks, ensuring an overall balanced server load.

Complexity in Configuration and Management

Setting up a Priority-Based system can be complex. It requires understanding which tasks or servers should be a high priority. Maintaining this system also demands continuous monitoring to ensure priorities are aligned with current business needs.


Adaptable to Changing Needs

One of the strengths of Priority-Based load balancing is its adaptability. Priorities can be adjusted as business needs change, ensuring the system remains aligned with strategic goals.

Ideal for Diverse Workload Environments

This strategy is particularly beneficial in environments where workloads vary greatly in importance. It’s well-suited for businesses where certain operations or customers are critical and must be given preferential treatment.

Priority-Based load balancing is a strategic approach that focuses resources where they’re needed most. It ensures that critical tasks are handled promptly, improving performance and user satisfaction. However, it requires careful planning and ongoing management to ensure priorities reflect the most current and pressing business needs. For environments with diverse and dynamic workloads, this method offers a valuable way to align network performance with business priorities.

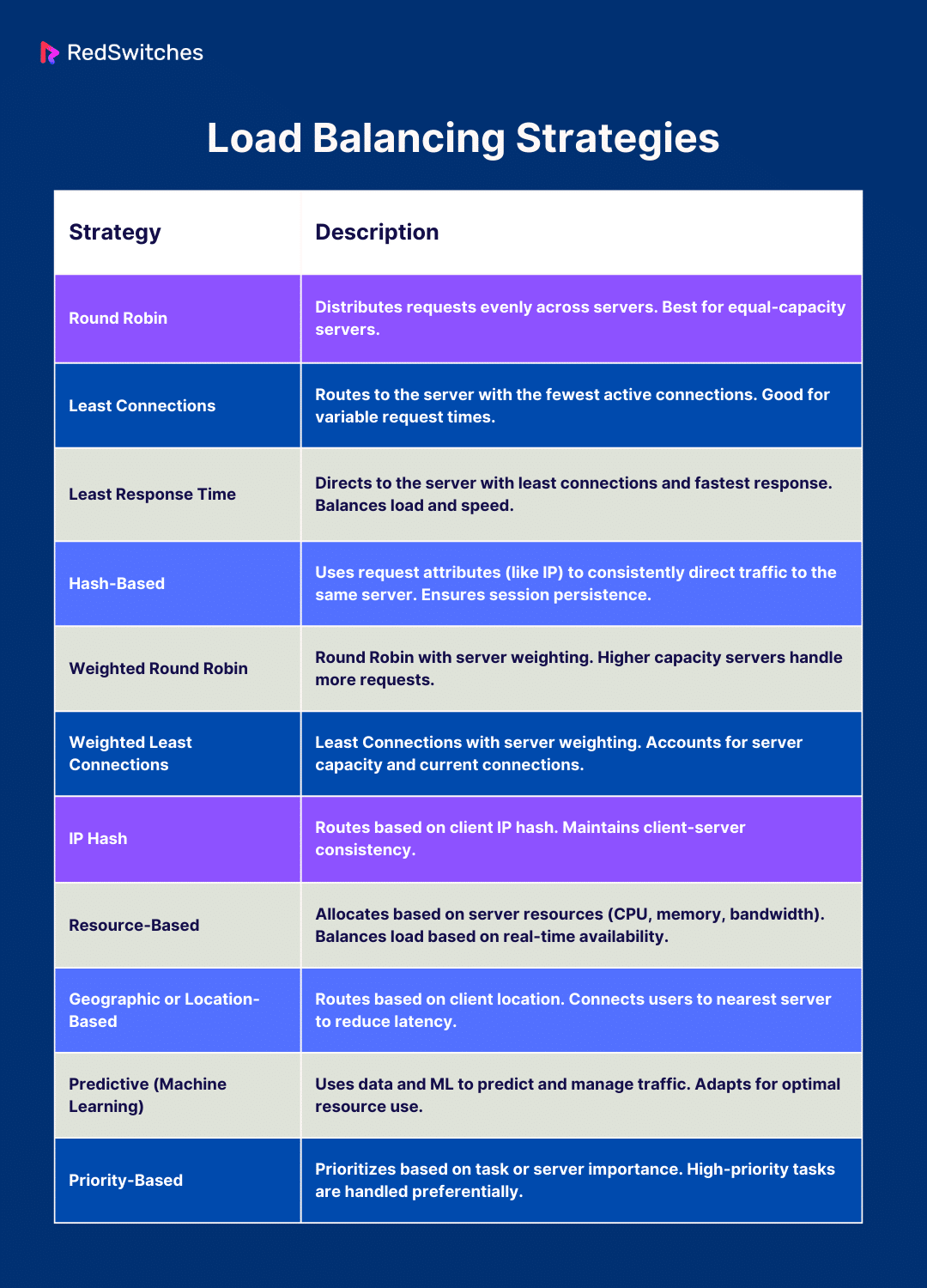

This table provides a brief yet comprehensive overview of each load balancing strategy, focusing on its key aspects and suitability.

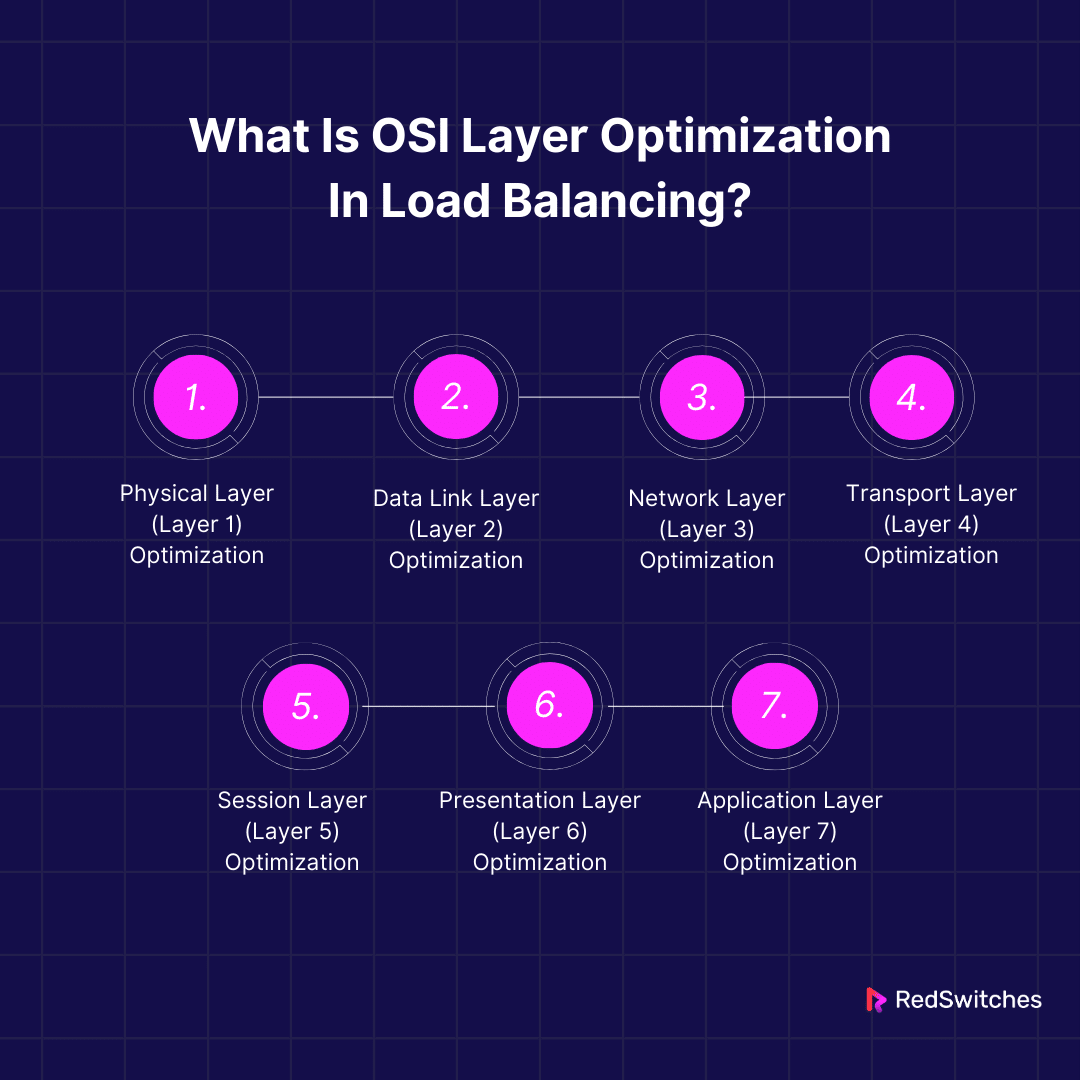

What Is OSI Layer Optimization In Load Balancing?

OSI Layer Optimization refers to enhancing data flow in networks. The OSI (Open Systems Interconnection) model has seven layers. Each layer has a specific role in handling data. Optimizing these layers improves how data travels in a network.

Physical Layer (Layer 1) Optimization

At the Physical Layer, the focus is on the tangible elements of the network. Optimizing here means using reliable cables and ensuring strong signals. Quality materials and proper setup keep the physical connections robust.

Data Link Layer (Layer 2) Optimization

The Data Link Layer handles the transfer of data frames between devices on the same network link. Optimization here means making sure these frames move efficiently. It involves managing local traffic well and detecting transmission errors so data is delivered correctly.

Network Layer (Layer 3) Optimization

At the Network Layer, it’s all about routing. Data needs to find the best path to its destination. Efficient routing protocols are key here. They guide data on the most effective route, avoiding delays.

Transport Layer (Layer 4) Optimization

The Transport Layer manages how data travels end-to-end between hosts. Here, optimizing means controlling the flow to avoid overload. With protocols like TCP, it ensures data arrives complete and in order. This layer is crucial in load balancing: Layer 4 load balancers route traffic using transport-level information, such as IP addresses and TCP or UDP ports, without inspecting the content of requests.

Session Layer (Layer 5) Optimization

The Session Layer handles interactions. It’s where applications connect and talk to each other. Optimization involves managing these connections well. It keeps sessions stable and synchronizes data effectively.

Presentation Layer (Layer 6) Optimization

This layer translates data. It’s where data is prepared for the application or the network. Optimizing involves compressing data for faster transmission and encrypting it for security. This makes data handling more efficient.

Application Layer (Layer 7) Optimization

The Application Layer is closest to the user. It’s where user services are managed. Optimization here means making sure applications use data and network resources well. This layer is also essential in load balancing. It distributes traffic based on content, like URLs and HTTP headers.
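The following Python sketch illustrates the kind of content-based decision a Layer 7 load balancer makes. The routing table, pool names, and the longest-prefix rule are hypothetical choices for illustration; real load balancers express this through their own configuration rather than application code.

```python
# Hypothetical routing table: URL path prefix -> pool of backend servers.
ROUTES = [
    ("/api/", ["api-1", "api-2"]),
    ("/static/", ["cdn-1"]),
    ("/", ["web-1", "web-2", "web-3"]),  # catch-all default pool
]


def choose_pool(path, routes=ROUTES):
    """Layer 7 decision: pick a backend pool by inspecting the request URL path."""
    # Longest matching prefix wins, so "/api/users" goes to the API pool, not "/".
    matches = [(prefix, pool) for prefix, pool in routes if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no route for {path!r}")
    return max(matches, key=lambda match: len(match[0]))[1]
```

Once a pool is chosen, any of the strategies above (Round Robin, Least Connections, and so on) can pick a specific server within it.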

Load balancing becomes more effective by focusing on these layers, especially the Transport and Application layers. It ensures that data moves efficiently through the network and that applications and services perform at their best. This leads to a smoother, faster, and more reliable network.


Challenges When Implementing Load Balancing

Implementing load balancing can significantly enhance network performance and reliability. However, it comes with its own set of challenges. Understanding these hurdles is crucial for a successful implementation.

Complex Configuration

Setting up a load balancer can be complex. It requires a deep understanding of network architecture and the specific needs of your applications. Each load balancing strategy has unique setup requirements, which complicates the configuration process.

Server Capacity and Capability Mismatch

In environments where servers have different capacities and capabilities, balancing the load effectively can be difficult. Ensuring that each server is neither overburdened nor underutilized requires careful planning and continuous monitoring.
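
One common way to cope with mismatched servers is weighted round robin, which hands out requests in proportion to each server's capacity. The sketch below is a hedged illustration rather than any product's actual code: it uses the "smooth" variant associated with NGINX, which interleaves picks instead of sending a burst of consecutive requests to the largest server. Server names and weights are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    weight: int       # relative capacity (hypothetical values)
    current: int = 0  # running score used by the smooth algorithm


class SmoothWeightedRoundRobin:
    """Weighted round robin that spreads a heavy server's turns across the cycle."""

    def __init__(self, servers: list[Server]) -> None:
        self.servers = servers
        self.total = sum(s.weight for s in servers)

    def pick(self) -> Server:
        # Each server earns its weight in score every round.
        for s in self.servers:
            s.current += s.weight
        # The highest score wins (ties go to the earliest server in the list)...
        best = max(self.servers, key=lambda s: s.current)
        # ...and pays back the total, so lighter servers eventually catch up.
        best.current -= self.total
        return best


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin([Server("big", 5), Server("small-1", 1), Server("small-2", 1)])
    print([lb.pick().name for _ in range(7)])
    # ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

With weights 5, 1, and 1, the large server handles five of every seven requests without receiving them all in a row.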

Maintaining Session Persistence

For certain applications, it’s crucial to maintain user session data. Ensuring session persistence across multiple servers can be challenging, especially when using strategies that don’t naturally support it, like the Round Robin method.
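
A common way to add session persistence on top of Round Robin is cookie-based affinity: new clients are assigned by plain round robin, and the load balancer sets a cookie recording the chosen server so later requests return to it. The minimal Python sketch below assumes a hypothetical cookie named `lb_server` and models cookies as plain dictionaries instead of real HTTP headers.

```python
from itertools import cycle


class StickyRoundRobin:
    """Round robin for new clients, cookie-based affinity for returning ones."""

    COOKIE = "lb_server"  # hypothetical cookie name

    def __init__(self, servers: list[str]) -> None:
        self._servers = set(servers)
        self._rotation = cycle(servers)

    def route(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return (chosen server, cookies the response should set)."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self._servers:
            # Returning client whose server still exists: keep it there.
            return pinned, {}
        # New client, or a cookie naming a server that is gone: assign by round robin.
        server = next(self._rotation)
        return server, {self.COOKIE: server}


if __name__ == "__main__":
    lb = StickyRoundRobin(["app-1", "app-2"])
    first, set_cookie = lb.route({})
    print(first, set_cookie)        # app-1 {'lb_server': 'app-1'}
    print(lb.route(set_cookie)[0])  # app-1
```

If the cookie names a server that no longer exists, the client is simply reassigned. That is also the moment its server-side session data would be lost, unless sessions are kept in a shared store such as a cache or database.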

Security Concerns

Introducing a load balancer can create new security challenges. It becomes a single point through which all traffic passes, potentially becoming a target for attacks. Ensuring robust security measures are in place is essential.

Cost Implications

Implementing and maintaining a load balancing strategy can be costly. It involves investing in hardware or software solutions and possibly additional servers. There’s also the ongoing cost of maintenance and monitoring.

Performance Monitoring and Management

Continuously monitoring the performance of each server to ensure efficient load distribution is a complex task. It requires sophisticated tools and a proactive approach to managing the infrastructure.

Scalability Issues

As your business grows, your load balancing strategies need to scale, too. Ensuring your load balancer can handle increased traffic and more servers without performance degradation is challenging.

Handling Failover

Ensuring a seamless failover if a server goes down is crucial for maintaining uninterrupted service. Setting up and testing failover mechanisms can be complex and requires thorough planning.
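
At its simplest, failover means the balancer stops sending traffic to servers that fail health checks and resumes once they recover. The hedged Python sketch below shows only the routing side; the health checker that would call the hypothetical `mark_down` and `mark_up` methods (for example, by probing each server periodically) is assumed and not shown.

```python
from itertools import cycle


class FailoverRoundRobin:
    """Round robin that skips servers marked unhealthy by a health checker."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(servers)
        self._order = cycle(self.servers)

    def mark_down(self, server: str) -> None:
        """Called when a health check fails."""
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        """Called when a previously failed server passes its health check again."""
        if server in self.servers:
            self.healthy.add(server)

    def pick(self) -> str:
        # Try at most one full lap so an all-down pool fails loudly instead of looping forever.
        for _ in range(len(self.servers)):
            server = next(self._order)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    lb = FailoverRoundRobin(["app-1", "app-2", "app-3"])
    lb.mark_down("app-2")                 # health check failed
    print([lb.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```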

Balancing Load Distribution

Finding the right balance in distributing the load can be tricky. Overloading a server can lead to performance issues, while underutilizing servers is inefficient. Achieving an optimal balance requires a deep understanding of your traffic patterns and server capabilities.

Adapting to Traffic Fluctuations

Traffic on the internet is unpredictable. A load balancer must be able to adapt quickly to sudden spikes or drops in traffic. Designing a system that can handle these fluctuations without compromising performance is challenging.

Technical Expertise

Implementing and maintaining load balancing strategies requires technical expertise. You need skilled professionals who understand the nuances of your network and the load balancing strategies you’re using.

Compliance and Regulation

In some industries, data handling and processing are subject to strict regulations. Ensuring that your load balancing strategy complies with these regulations can add another layer of complexity.

While load balancing is a powerful tool for enhancing network performance and reliability, it comes with its challenges, from complex configurations and security concerns to cost implications and compliance issues. Understanding and addressing these challenges is key to a successful implementation. With careful planning, the right expertise, and a proactive approach to management and monitoring, you can overcome these hurdles and ensure your load balancing strategy meets your needs and enhances your network’s performance.

This table encapsulates the multifaceted challenges of implementing load balancing, highlighting the need for strategic planning, technical expertise, and proactive management.

Best Practices for Improved Performance in Load Balancing

Implementing load balancing effectively can significantly enhance network performance and reliability. To maximize its benefits, it’s crucial to follow certain best practices.

Understand Your Traffic

Know the patterns and nature of your network traffic. Understanding peak times, traffic sources, and types of requests can help you choose the right load balancing strategy.

Choose the Right Strategy

Select a load balancing strategy that aligns with your traffic patterns and business needs. Whether it’s Round Robin, Least Connections, or a more advanced method, the right strategy can significantly improve performance.

Monitor Server Performance

Continuously monitor the performance of your servers. Real-time monitoring helps detect issues early and ensures that the load is evenly distributed.

Ensure Scalability

Your load balancing solution should be scalable. It must handle growth in traffic and additional resources without performance degradation.

Plan for Failover

Implement robust failover mechanisms. This ensures that if a server goes down, the load is automatically redistributed to the remaining servers, maintaining uninterrupted service.

Security Measures

Ensure your load balancer has robust security measures in place. Protecting the load balancer from potential attacks is crucial as it handles all incoming traffic.

Regularly Update and Patch

Keep your load balancing software and servers updated. Regular updates and patches are crucial for maintaining performance and security.

Balance Load Evenly

Continuously strive to balance the load evenly across all servers. Avoid overburdening a single server, which can lead to performance bottlenecks.

Test Load Balancing Setup

Regularly test your load balancing setup under various conditions. This helps identify potential issues and ensure the system can handle real-world scenarios.

Use Quality of Service (QoS) Controls

Implement QoS controls to prioritize traffic. This ensures that critical applications and services receive the necessary resources.
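
Real QoS is usually enforced in network equipment and load balancers, but the core idea of serving critical traffic first can be sketched with a priority queue. In this minimal Python example the job names and priority numbers are hypothetical, and lower numbers are served first.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class Job:
    priority: int                     # lower number = more important
    seq: int                          # tie-breaker: FIFO order within a priority level
    name: str = field(compare=False)  # not used when ordering jobs


class PriorityDispatcher:
    """Hands out the most important pending request first."""

    def __init__(self) -> None:
        self._heap: list[Job] = []
        self._counter = itertools.count()

    def submit(self, name: str, priority: int) -> None:
        heapq.heappush(self._heap, Job(priority, next(self._counter), name))

    def next_job(self) -> Optional[str]:
        return heapq.heappop(self._heap).name if self._heap else None


if __name__ == "__main__":
    d = PriorityDispatcher()
    d.submit("report-export", priority=3)
    d.submit("checkout", priority=0)
    d.submit("search", priority=1)
    d.submit("payment", priority=0)
    print([d.next_job() for _ in range(4)])
    # ['checkout', 'payment', 'search', 'report-export']
```

Strict priority like this can starve low-priority work during sustained load, which is why many QoS schemes reserve a share of capacity for each traffic class instead.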

Educate and Train Your Team

Ensure your team is well-trained in managing and troubleshooting the load balancing setup. A knowledgeable team can respond effectively to any issues that arise.

Consider Geographic Load Balancing

Consider using geographic load balancing for global services. Serving users from the location nearest to them can reduce latency and speed up responses.
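
A simplified way to route users to the nearest location is to compare great-circle distances between the client and each region. The Python sketch below uses the haversine formula; the region names and coordinates are hypothetical, and it assumes the client's approximate location is already known (for example, from IP geolocation). Distance is only a proxy for latency, so production systems often rely on GeoDNS or measured latency instead.

```python
import math

# Hypothetical regions with the approximate (latitude, longitude) of each data center.
REGIONS = {
    "us-east": (39.0, -77.5),   # around northern Virginia, USA
    "eu-west": (53.3, -6.3),    # around Dublin, Ireland
    "ap-south": (19.1, 72.9),   # around Mumbai, India
}

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client: tuple[float, float]) -> str:
    """Pick the region closest to the client's (assumed known) location."""
    return min(REGIONS, key=lambda name: haversine_km(client, REGIONS[name]))


if __name__ == "__main__":
    print(nearest_region((51.5, -0.1)))   # London: eu-west
    print(nearest_region((1.35, 103.8)))  # Singapore: ap-south
```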

Adapt to Changes

Be prepared to adapt your load balancing strategy as your business and traffic patterns change. The digital landscape is dynamic, and the ability to adapt can significantly improve performance.

Document Everything

Maintain detailed documentation of your load balancing configuration and policies. This is crucial for troubleshooting and understanding the impact of any changes made.

By following these best practices, you can ensure that your load balancing strategy meets your current needs and is prepared to handle future demands. Effective load balancing improves performance and reliability and delivers a better user experience.

Conclusion

When it comes to digital performance, load balancing stands out as a pivotal strategy, ensuring your applications and websites run smoothly under varying loads and conditions. It’s about distributing traffic intelligently, maintaining optimal server health, and providing users with the best possible experience. But navigating the complexities of load balancing requires expertise and a nuanced approach.

That’s where RedSwitches comes into play. Whether you are choosing the right load balancing strategy, aiming for top-notch performance, or ensuring your services remain uninterrupted and secure, RedSwitches has the solutions and expertise you need. Our team of professionals is dedicated to tailoring a load balancing strategy that aligns perfectly with your specific needs and goals.

Don’t let the intricacies of load balancing hold you back. Partner with RedSwitches and unlock the full potential of your digital infrastructure. Visit us today to explore how our tailored solutions can elevate your network performance. Your journey towards seamless, efficient, and robust digital services starts with RedSwitches.

FAQs

Q. What are the different types of load balancing?

Different types of load balancing include Round Robin, Least Connections, Least Response Time, Hash-Based, Weighted Round Robin, Weighted Least Connections, IP Hash, Resource-Based, Geographic or Location-Based, Predictive Algorithms (Machine Learning), and Priority-Based load balancing.

Q. What are the most used load balancing algorithms?

Round Robin, Least Connections, and IP Hash are among the most widely used load balancing algorithms.
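
For readers who want to see the difference in code, here is a minimal Python sketch of these three algorithms sharing a similar `pick` interface. The server names are hypothetical, and the Least Connections class assumes the caller reports closed connections via `release`.

```python
import hashlib
from itertools import cycle
from typing import Optional


class RoundRobin:
    """Static: hand out servers in a fixed circular order."""

    def __init__(self, servers: list[str]) -> None:
        self._rotation = cycle(servers)

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._rotation)


class LeastConnections:
    """Dynamic: choose the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip: Optional[str] = None) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.active[server] -= 1


class IPHash:
    """Static: the same client IP always maps to the same server."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)

    def pick(self, client_ip: str) -> str:
        # A stable hash; Python's built-in hash() of a str can change between runs.
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


if __name__ == "__main__":
    servers = ["app-1", "app-2", "app-3"]
    rr = RoundRobin(servers)
    print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
    ih = IPHash(servers)
    print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))  # True
```

Plain modulo hashing, as in `IPHash`, reassigns most clients when a server is added or removed; consistent hashing is the usual remedy when that matters.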

Q. What are the 3 types of load balancers in AWS?

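In AWS, Elastic Load Balancing is commonly described in terms of three types: Application Load Balancer (ALB), Network Load Balancer (NLB), and Classic Load Balancer (CLB), the previous-generation option. AWS also offers a fourth type, the Gateway Load Balancer (GWLB), for deploying and scaling third-party virtual appliances.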

Q. Which are the most well-known ways to perform load balancing?

The most well-known ways to perform load balancing include Round Robin, Least Connections, IP Hash, and the use of hardware or software load balancers provided by cloud service providers like AWS, Azure, and Google Cloud.

Q. What is global server load balancing (GSLB)?

Global server load balancing is a method of load balancing that distributes client requests across multiple servers located in different geographic regions, ensuring efficient resource allocation and high availability.

Q. How does DNS load balancing function?

DNS load balancing uses DNS servers to spread client requests across multiple server IP addresses. When a client resolves a domain name, the DNS server returns one of several addresses, or rotates the order of the addresses it returns, so different clients connect to different servers.
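
The rotation behind basic round-robin DNS can be sketched in a few lines of Python. This toy resolver is hypothetical and only illustrates the idea: each query returns the same set of addresses, rotated so that a different one comes first. The domain and IP addresses come from ranges reserved for documentation.

```python
from collections import deque


class RoundRobinDNS:
    """Toy resolver that rotates the order of A records on every query."""

    def __init__(self, records: dict[str, list[str]]) -> None:
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name: str) -> list[str]:
        ips = self._records[name]
        answer = list(ips)
        ips.rotate(-1)  # the next query starts with the next address
        return answer


if __name__ == "__main__":
    dns = RoundRobinDNS({"www.example.com": ["192.0.2.1", "192.0.2.2", "192.0.2.3"]})
    for _ in range(3):
        # Clients usually try the first address: 192.0.2.1, then .2, then .3
        print(dns.resolve("www.example.com")[0])
```

In practice the spread is uneven, because resolvers and clients cache answers for the record's TTL, and plain DNS rotation keeps handing out an address even if that server is down unless it is paired with health checks.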

Q. What is elastic load balancing in cloud computing?

Elastic load balancing in cloud computing is a service that automatically distributes incoming application traffic across multiple targets, offering high availability and scalability for applications running in the cloud.

Q. What are the load balancing capabilities of network load balancers?

Network load balancers offer capabilities such as efficient distribution of TCP and UDP traffic, support for static IP addresses, and high performance for handling millions of requests per second.

Q. What are the differences between static and dynamic load balancing?

Static load balancing uses predetermined allocation methods, while dynamic load balancing adjusts resource distribution based on real-time factors such as server load, network latency, or client demand.

Q. How do load balancers function in local and global scenarios?

In local scenarios, load balancers distribute traffic within a specific network, while in global scenarios, they manage traffic distribution across multiple geographic locations, ensuring optimal resource utilization.

Q. What are the different types of algorithms and strategies?

There are various load balancing algorithms and strategies, including dynamic load balancing algorithms, static load balancing algorithms, round-robin load balancing, response time load balancing algorithms, and more. These strategies determine how the incoming traffic is distributed among the servers.

Q. How does cloud load balancing work?

Cloud load balancing involves the distribution of incoming network traffic across various resources in the cloud. It ensures that applications and workloads are efficiently distributed and scale dynamically based on demand.

Q. What is a hardware load balancer, and how does it differ from a software based load balancer?

A hardware load balancer is a physical appliance that performs load balancing functions. It differs from a software-based load balancer, a program that runs on a general-purpose computer and utilizes the underlying hardware resources to distribute the load.

Q. What is layer 4 load balancing and how does it differ from other types of load balancing?

Layer 4 load balancing operates at the transport layer of the OSI model, focusing on the source and destination IP addresses and port numbers. It differs from other types of load balancing such as layer 7 load balancing, which operates at the application layer and can route requests based on their content, such as URLs and HTTP headers.

Q. What is meant by virtual load balancing and how does it function in a network environment?

Virtual load balancing refers to the use of virtual load balancers in a network environment. These virtual load balancers dynamically distribute incoming traffic across virtual server instances, providing scalability and flexibility.

Q. What are some examples of static load balancing and dynamic load balancing techniques?

Examples of static load balancing techniques include round-robin load balancing, where each server is used in a circular order, and hash-based load balancing, where the client’s IP address determines which server will handle the request. Dynamic load balancing techniques include response time load balancing algorithms, which consider the server’s response times when distributing traffic.
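
To contrast with the static techniques, here is a minimal Python sketch of a dynamic, response-time-based selector. It keeps an exponentially weighted moving average of each server's observed latency and routes to the fastest. The smoothing factor of 0.3 and the server names are assumptions for illustration, and some real implementations also factor in active connection counts.

```python
from typing import Optional


class LeastResponseTime:
    """Dynamic: route to the server with the lowest smoothed response time."""

    def __init__(self, servers: list[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample (assumed value)
        self.avg_ms: dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        # Servers with no measurement yet are tried first so every server gets sampled.
        for server, avg in self.avg_ms.items():
            if avg is None:
                return server
        return min(self.avg_ms, key=lambda s: self.avg_ms[s])

    def record(self, server: str, observed_ms: float) -> None:
        """Fold a new latency sample into the server's moving average."""
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = observed_ms
        else:
            # The moving average smooths out one-off spikes.
            self.avg_ms[server] = self.alpha * observed_ms + (1 - self.alpha) * old


if __name__ == "__main__":
    lb = LeastResponseTime(["app-1", "app-2"])
    lb.record("app-1", 120.0)
    lb.record("app-2", 40.0)
    print(lb.pick())           # app-2
    lb.record("app-2", 400.0)  # one slow response raises app-2's average to about 148 ms
    print(lb.pick())           # app-1
```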