Takeaways

- A load balancer is a device or software that distributes network or application traffic across multiple servers.

- Load balancer performance matters for several reasons, including high availability, reduced latency, and improved security.

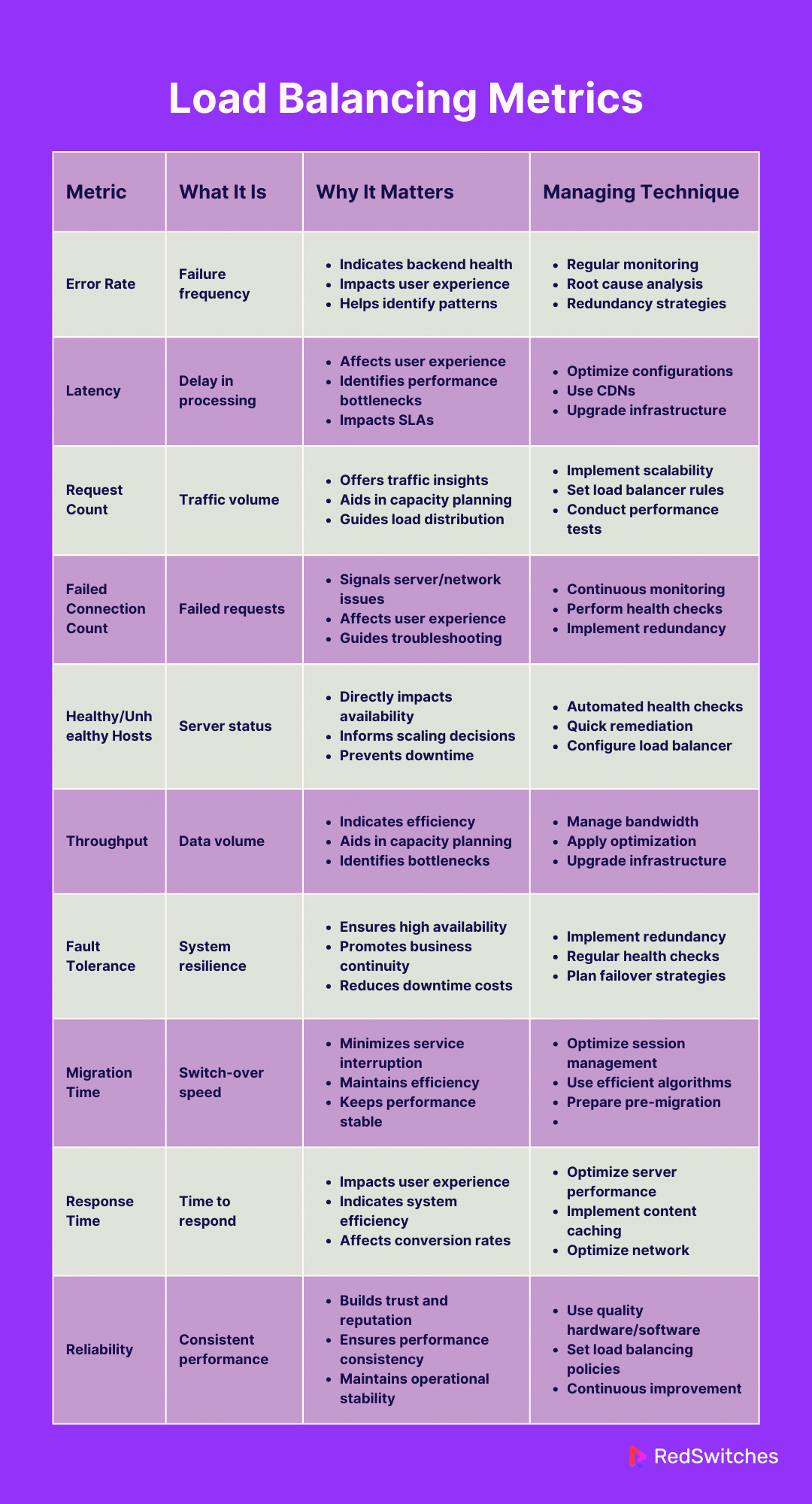

- Some load balancer performance metrics include error rate, latency, and request count.

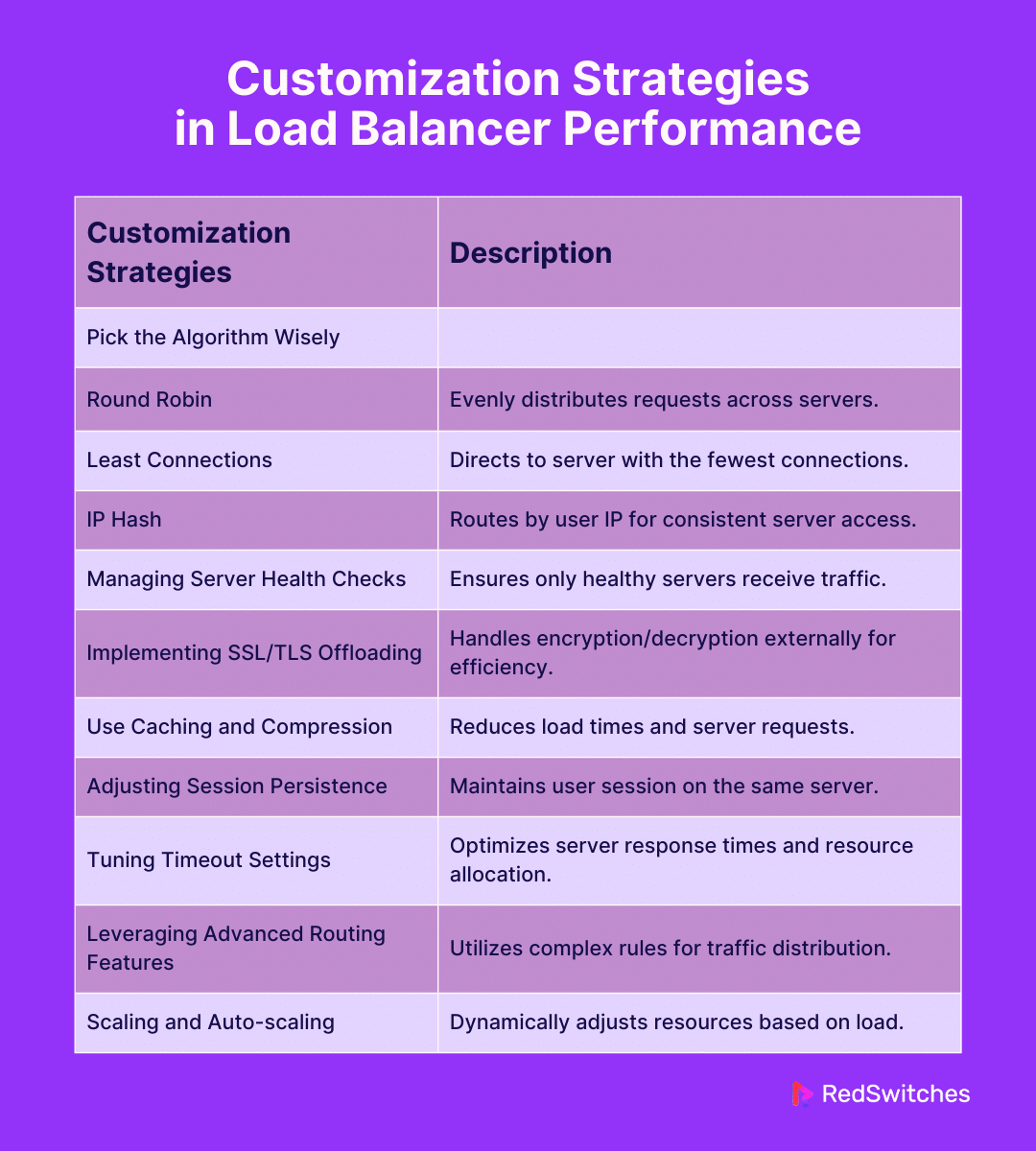

- Some load balancer customization strategies include choosing the right algorithm, caching, compressing, etc.

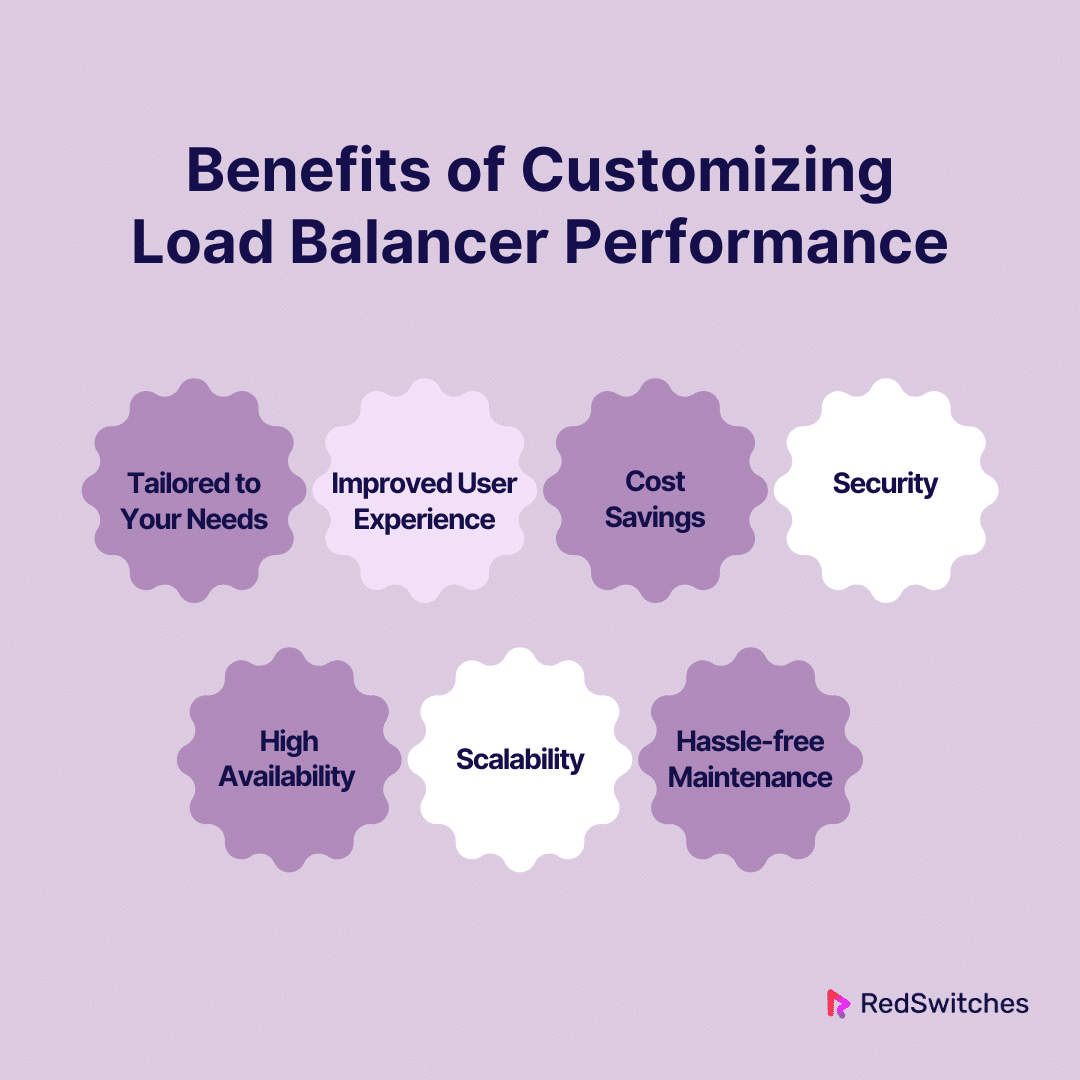

- There are many benefits of customizing load balancer performance. This includes improved user experience, security, scalability, etc.

- Failover is a backup operational mode. In this mode, the functions of a system automatically switch over to a secondary system when the primary system fails.

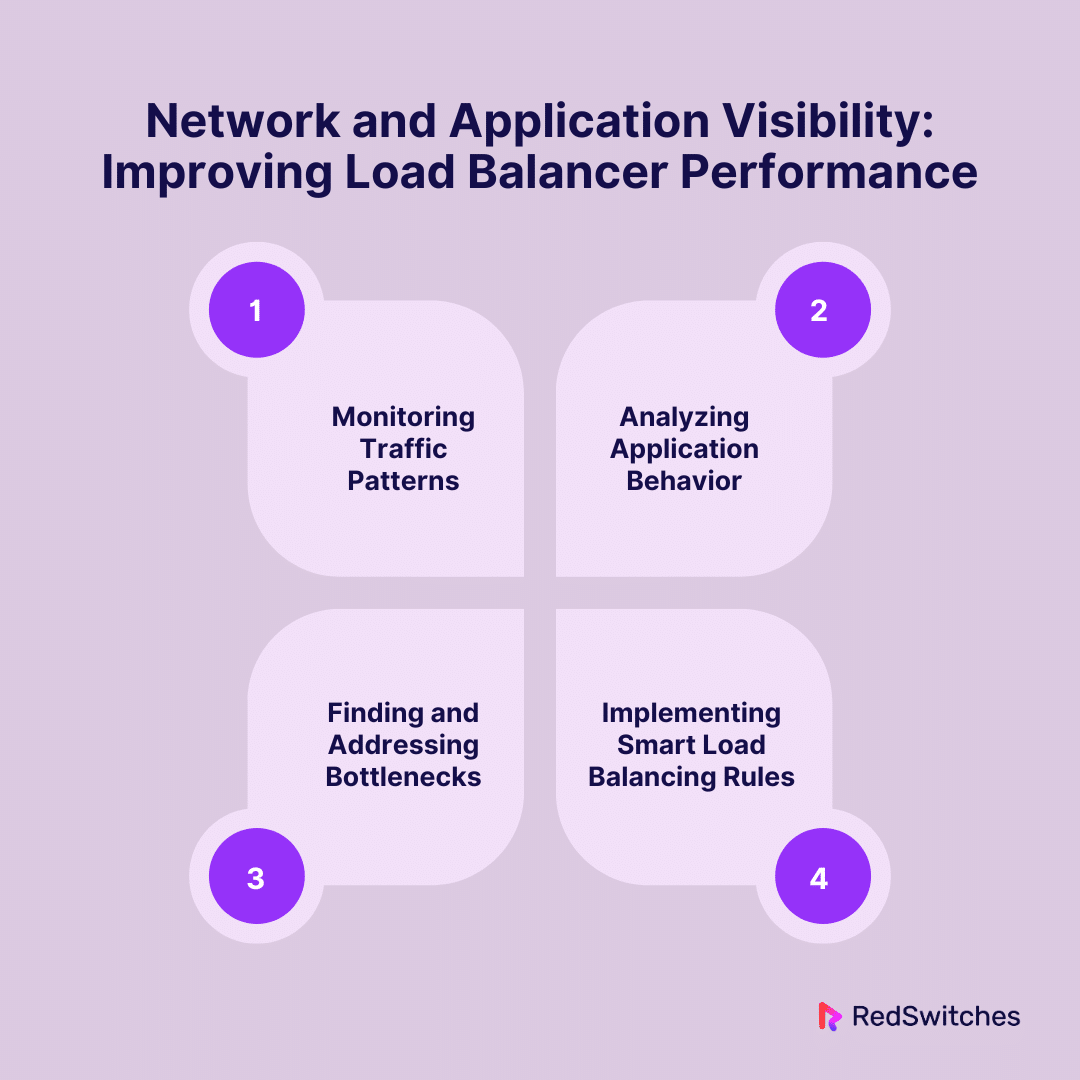

- There are ways to improve load balancer performance with network and application visibility. Some include analyzing application behavior and finding and addressing bottlenecks.

- Integrating a load balancer with dedicated servers boosts overall system performance.

How long will a visitor wait for a slow website before leaving?

According to Google, 53% of mobile visits are abandoned when a page takes longer than 3 seconds to load. Only a few individuals are willing to wait over 5 seconds for a site to load. This underscores the critical importance of performance optimization.

The strategic use of load balancers can be critical in performance optimization. When customized effectively, load balancers can drastically improve the responsiveness of web applications.

This blog will take readers through the art of tailoring load balancer performance to meet specific operational demands. At the end of this blog, you will know the best customization hacks for load balancer performance optimization.

Table Of Contents

- Takeaways

- What is a Load Balancer?

- Load Balancer Performance: Why Does it Matter?

- Load Balancing Metrics

- How to Customize Load Balancer Performance?

- Benefits of Customizing Load Balancer Performance

- What is Failover?

- How to Improve Load Balancer Performance With Network and Application Visibility

- Case Study: Transforming Digital Infrastructure for Scalability and Efficiency

- Load Balancer Efficiency Combined with Dedicated Servers

- Conclusion – Customizing Load Balancer Performance

- FAQs

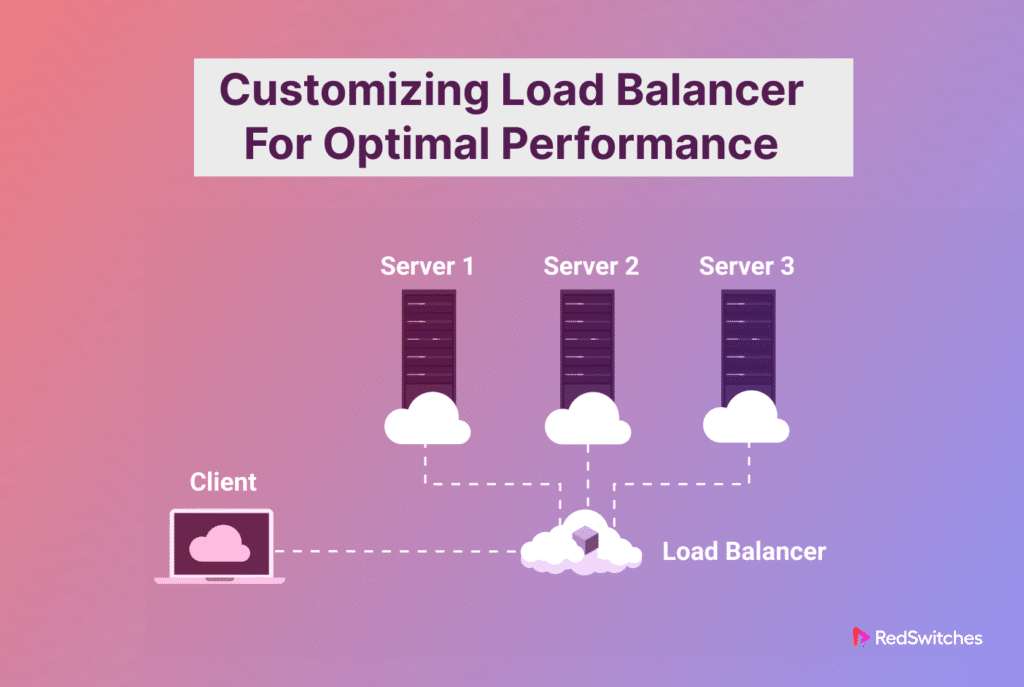

What is a Load Balancer?

Credits: FreePik

Before we discuss how to customize load balancer performance, it is important to understand what a load balancer is.

A load balancer is a device or software that distributes network or application traffic across multiple servers. Its key objective is maintaining the server environment’s efficiency, reliability, and capacity. Balancing the load ensures that no single server receives more traffic than it can handle. This helps keep servers from crashing and ensures more consistent performance for users.

Are you contemplating investing in a network load balancer? Read our blog, ‘Guide To Network Load Balancer: How Load Balancing Works,’ for insight.

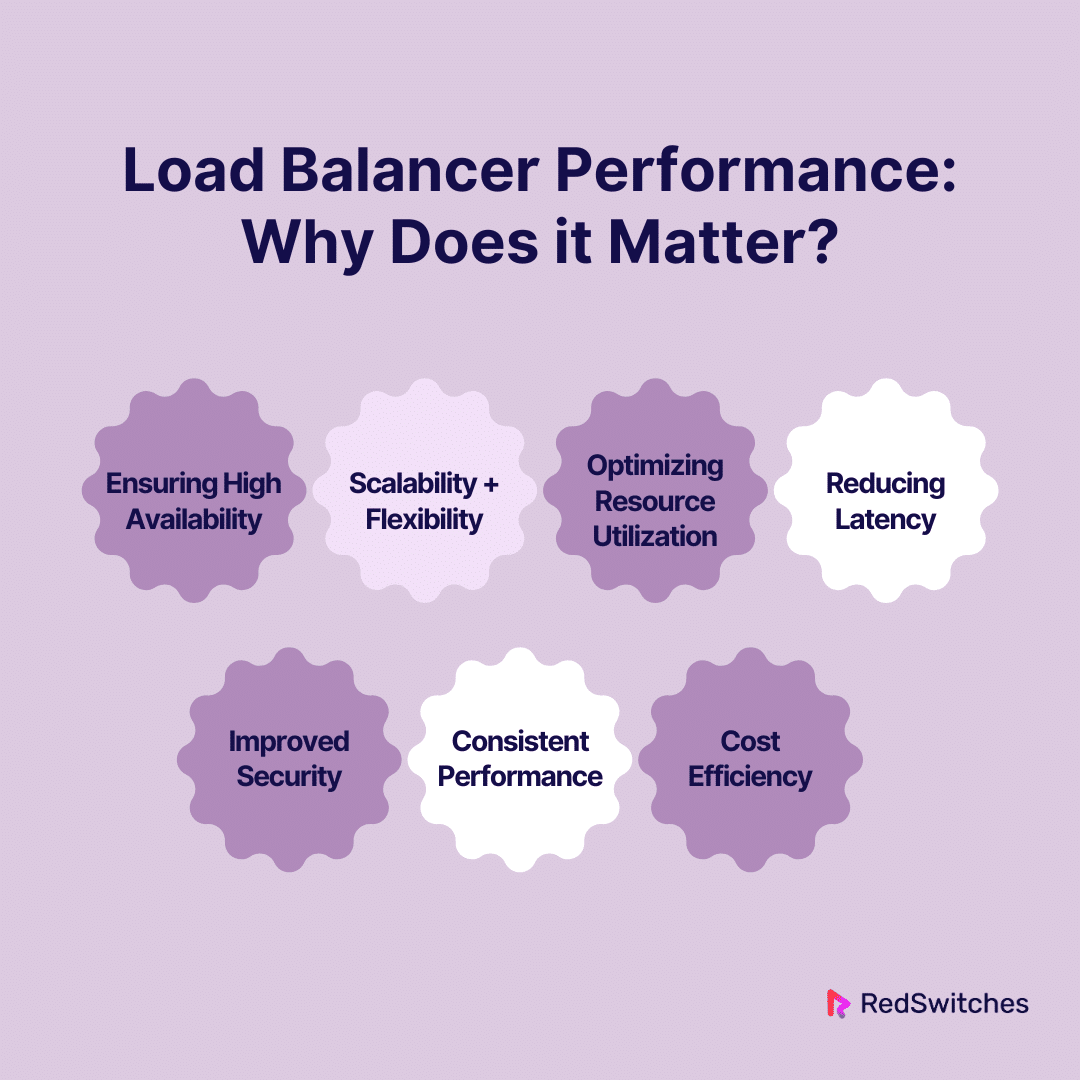

Load Balancer Performance: Why Does it Matter?

It is common for those new to the world of load balancers to ask why load balancer performance matters. Here is the answer:

Ensuring High Availability

A load balancer’s main role is to boost the availability of applications and services. It distributes user requests across many servers and ensures traffic can be rerouted to other servers if one fails. High-performance load balancers can detect server health in real time and make split-second decisions to maintain uninterrupted service. This ability is especially valuable in high-traffic scenarios, where the cost of downtime can be extreme.

Scalability + Flexibility

The volume of traffic a business receives increases as the business grows. A high-performance load balancer can handle traffic variations with ease. This offers the scalability required to support expansion without sacrificing user experience. Because servers can be added or removed without interrupting the service, companies gain the adaptability to change as their needs do.

Optimizing Resource Utilization

Efficient use of resources is key to cost-effective IT operations. Load balancers with superior performance can distribute traffic to maximize the utilization of server resources, ensuring no single server is underutilized or overburdened. This optimized resource allocation enhances the user experience and ensures businesses get the most out of their server investments.

Reducing Latency

Latency can be a deal-breaker: one in four visitors will leave a website that takes over 4 seconds to load. High-performance load balancers lower latency by routing requests to the server physically nearest to the user or to the one with the lowest response time. This reduction in latency is crucial for time-sensitive applications. It can play an important role in refining the overall user experience.

Improved Security

Load balancers are an important aspect of the security architecture of an IT environment. They can provide an abstraction layer between the user and the backend servers. This protects the servers from direct internet exposure.

High-performance load balancers have advanced security features like DDoS protection, SSL off-loading, and intrusion detection systems. This brings an added element of security to the infrastructure.

Consistent Performance

Load balancer performance customization ensures consistent performance in multi-server environments. A load balancer’s ability to distribute traffic across servers is the key to consistency. Thanks to its health check feature, it automatically reroutes traffic from troubled servers to healthy ones. This maintains a seamless user experience by minimizing disruptions and downtime.

Advanced load balancing algorithms can also consider every server’s current load and performance capabilities. This further optimizes the distribution of requests based on real-time conditions.

Cost Efficiency

High-performance load balancers can offer cost savings by optimizing server resource utilization and enabling scalability. They allow businesses to scale horizontally: businesses can add more servers as needed rather than replacing existing machines with more expensive, higher-capacity hardware. This scaling approach can be more cost-effective and sustainable in the long term.

Also, read our blog, ‘Tried And Tested Load Balancing Strategies,’ to learn about the best load-balancing strategies.

Load Balancing Metrics

Credits: Freepik

Optimal load balancer performance depends on monitoring the right metrics. Here are a few key load balancing metrics to track:

- Error Rate

The error rate is an important metric that measures the percentage of request failures out of the total number of requests the load balancer processes. These failures can have many causes, including server errors, connection timeouts, or other issues that prevent a successful response to the client’s request.

Why It Matters

- Indicates Health of Backend Servers

A high error rate often signals problems with the backend servers. This could be anything from overloading to misconfigurations or software issues.

- Impacts User Experience

Frequent errors can severely degrade the user experience. They cause dissatisfaction and can drive users away.

- Helps Identify Patterns

Analyzing the types and sources of errors can help identify specific problem areas within the infrastructure. This helps companies focus their attention where it is needed.

Managing Error Rate

- Regular Monitoring

Continuously monitor error rates to detect and address issues quickly.

- Root Cause Analysis

Investigate the causes of high error rates to implement targeted solutions.

- Redundancy and Failover Strategies

Design your system with a focus on redundancy. This ensures that traffic can be rerouted away from problematic servers.
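As a rough illustration of the error rate definition above, the sketch below computes it from a list of HTTP status codes. It assumes that only 5xx responses count as failures (a 404, for example, is treated as a client-side issue), and the sample data is hypothetical.

```python
from collections import Counter


def error_rate(status_codes):
    """Return the percentage of requests that failed (HTTP 5xx), or 0.0 if there were none."""
    if not status_codes:
        return 0.0
    failures = sum(1 for code in status_codes if code >= 500)
    return 100.0 * failures / len(status_codes)


def errors_by_code(status_codes):
    """Count failures per status code to help spot patterns."""
    return Counter(code for code in status_codes if code >= 500)


# Hypothetical sample: ten requests, two of which failed on the server side.
sample = [200, 200, 502, 200, 301, 200, 504, 200, 404, 200]
print(f"error rate: {error_rate(sample):.1f}%")
print(errors_by_code(sample))
```

Grouping failures by status code is one simple way to start the root cause analysis described above, since gateway errors and timeouts usually point to different problems.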

- Latency

Latency measures the time it takes for a request to travel from the client to the server through the load balancer and back. It’s a critical measure of the responsiveness of your web services.

Why It Matters

- User Experience

High latency can lead to slow loading times, negatively affecting user satisfaction and engagement.

- Performance Bottlenecks

Latency can help identify bottlenecks, whether related to the load balancer’s configuration, server performance, or network issues.

- Service Level Agreements (SLAs)

For many services, maintaining low latency is a contractual obligation to customers.

Managing Latency

- Optimize Configurations

Ensure the load balancer and servers are optimally configured for performance.

- Content Delivery Networks (CDNs)

Use CDNs to reduce latency by serving content from locations closer to the user.

- Upgrade Infrastructure

If latency issues persist, consider upgrading hardware or network infrastructure to improve speed.
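When tracking latency, averages can hide slow outliers, so percentiles are often reported alongside them. The sketch below uses the nearest-rank method on a hypothetical set of samples. The function name and the data are illustrative assumptions, not part of any particular load balancer's tooling.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical request latencies in milliseconds, with one slow outlier.
latencies_ms = [12, 15, 11, 14, 13, 250, 16, 12, 13, 14]

print("mean:", sum(latencies_ms) / len(latencies_ms))
print("p50: ", percentile(latencies_ms, 50))
print("p95: ", percentile(latencies_ms, 95))
```

In this sample the mean is 37 ms even though the typical request (p50) takes 13 ms. The p95 value exposes the 250 ms outlier that an SLA would likely care about.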

- Request Count

The request count metric tallies the total number of requests that the load balancer handles over a specific period. This includes both successful and failed requests.

Why It Matters

- Traffic Insights

Understanding request volumes can help gauge the popularity of your services and predict traffic trends.

- Capacity Planning

High request counts may indicate the need for additional resources or servers to handle the load effectively.

- Load Distribution

Analyzing request counts can also reveal how effectively the load balancer distributes traffic across backend servers.

Managing Request Count

- Scalability Solutions

Implement scalable solutions to accommodate varying traffic levels without compromising performance.

- Load Balancer Rules

Adjust load balancing rules and algorithms based on request patterns to optimize distribution.

- Performance Testing

Regularly test the infrastructure under high request loads to ensure it can handle peak traffic.

- Failed Connection Count

The failed connection count metric tracks the number of unsuccessful attempts to connect to a server behind the load balancer. These failures could be due to server unavailability or network issues. They may also occur due to configuration errors preventing an established connection.

Why It Matters

- Indicates Server or Network Issues

A high number of failed connections often signals problems with backend servers or network paths.

- Affects User Experience

Each failed connection represents a potential service disruption for a user, impacting overall satisfaction.

- Guides Troubleshooting Efforts

Identifying trends in failed connections can help pinpoint specific servers or network components that require attention.

Managing Failed Connection Count

- Continuous Monitoring

Keep a close watch on failed connection metrics to quickly spot and fix emerging issues.

- Health Checks

Implement regular health checks to assess the availability and responsiveness of backend servers.

- Redundancy Measures

Ensure your architecture includes failover capabilities to reroute traffic away from troubled servers.

- Healthy/Unhealthy Hosts

This metric refers to the health status of the servers (hosts) in the load balancer’s pool. Each host is categorized as either ‘healthy’ (able to accept traffic) or ‘unhealthy’ (unavailable or experiencing issues).

Why It Matters

- Directly Impacts Availability

The ratio of healthy to unhealthy hosts impacts the overall ability to manage incoming traffic and maintain service availability.

- Informs Scaling Decisions

Monitoring hosts’ health can guide decisions to scale up or down, ensuring adequate capacity.

- Prevents Downtime

Proactively identifying unhealthy hosts allows for quick remediation, preventing potential downtime.

Managing Healthy/Unhealthy Hosts

- Automated Health Checks

Set up automated health checks to continuously evaluate the status of each host in the pool.

- Quick Remediation

Establish protocols for quickly addressing problems with unhealthy hosts, whether through automated restarts or manual intervention.

- Load Balancer Configuration

Configure the load balancer to automatically reroute traffic away from unhealthy hosts, maintaining seamless service.
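One common way to avoid flapping between healthy and unhealthy is to require several consecutive check results before changing a host's status. The sketch below illustrates that idea. The class name and the `rise` and `fall` thresholds are assumptions chosen for this example (similar in spirit to the thresholds some load balancers expose), not a specific product's API.

```python
class HostHealth:
    """Tracks one host's status from a stream of health-check results."""

    def __init__(self, rise=2, fall=3):
        self.rise = rise      # consecutive passes needed to mark the host healthy
        self.fall = fall      # consecutive failures needed to mark it unhealthy
        self.healthy = True
        self._streak = 0      # consecutive results that disagree with the current status

    def record(self, check_passed):
        """Record one check result and return the host's (possibly updated) status."""
        if check_passed == self.healthy:
            self._streak = 0
            return self.healthy
        self._streak += 1
        threshold = self.rise if check_passed else self.fall
        if self._streak >= threshold:
            self.healthy = check_passed
            self._streak = 0
        return self.healthy


def healthy_hosts(pool):
    """Return the names of hosts that should currently receive traffic."""
    return [name for name, state in pool.items() if state.healthy]


# Hypothetical pool: app-2 fails three checks in a row and is taken out of rotation.
pool = {"app-1": HostHealth(), "app-2": HostHealth()}
for _ in range(3):
    pool["app-1"].record(True)
    pool["app-2"].record(False)
print(healthy_hosts(pool))
```

A single failed check leaves the host in rotation, which trades slightly slower detection for fewer false alarms.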

- Throughput

Throughput measures the amount of data successfully delivered over a network in a given period. For load balancers, it reflects the volume of data processed and transferred to and from backend servers.

Why It Matters

- Indicates Performance Efficiency

Throughput levels can show how efficiently the load balancer and backend servers are handling traffic.

- Capacity Planning

Understanding throughput is integral for capacity planning. This ensures the infrastructure can handle expected traffic volumes.

- Bottleneck Identification

Variations in throughput can help find bottlenecks within the network or server infrastructure that may be negatively affecting performance.

Managing Throughput

- Bandwidth Management

Ensure enough bandwidth is available to handle peak throughput demands without congestion.

- Optimization Techniques

Use optimization methods like compression and caching to maximize throughput efficiency.

- Infrastructure Upgrades

Consider upgrading network infrastructure or adding more servers if throughput consistently approaches or exceeds current capacity limits.
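To make the throughput definition concrete, the short sketch below converts a byte count over a time window into megabits per second and compares it with link capacity. The traffic figures and the 1 Gbps capacity are hypothetical values chosen for illustration.

```python
def throughput_mbps(bytes_transferred, seconds):
    """Convert bytes transferred over a time window into megabits per second."""
    if seconds <= 0:
        raise ValueError("window must be longer than zero seconds")
    return bytes_transferred * 8 / seconds / 1_000_000


def utilization(current_mbps, capacity_mbps):
    """Fraction of available capacity currently in use."""
    return current_mbps / capacity_mbps


# Hypothetical: 4.5 GB moved in one minute over a 1 Gbps link.
current = throughput_mbps(4_500_000_000, 60)
print(f"{current:.0f} Mbps, {utilization(current, 1000):.0%} of capacity")
```

Watching utilization rather than raw throughput makes it easier to see when traffic is approaching the capacity limit mentioned above.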

- Fault Tolerance

Fault tolerance refers to the ability of a system to continue operating without interruption, even when one or more of its components fail. In the context of load balancing, it measures the system’s resilience to server or network failures, ensuring that services remain available to users.

Why It Matters

- Ensures High Availability

A system with high fault tolerance can handle server failures gracefully. It redirects traffic to healthy servers without affecting user experience.

- Business Continuity

Maintaining uninterrupted service during failures is crucial for business operations and reputation.

- Reduces Downtime Costs

Minimizing downtime through fault tolerance can significantly reduce the financial impact linked with outages.

Managing Fault Tolerance

- Redundancy

Implement redundant servers and network paths to ensure there’s always a backup available in case of failure.

- Regular Health Checks

Configure the load balancer to perform ongoing health checks on servers to find and isolate failures quickly.

- Failover Strategies

Create and test failover mechanisms to ensure smooth traffic rerouting if server or component failures occur.

- Migration Time

Migration time measures the time it takes to transfer an active session or service from one server to another. This often occurs due to load redistribution or server maintenance.

Why It Matters

- Minimizes Service Interruption

Lower migration times mean users are less likely to notice any disruption as sessions move between servers.

- Efficiency in Load Redistribution

Quick migration allows for more dynamic and efficient load balancing. It adapts quickly to changing traffic patterns.

- Maintains Performance Levels

Ensuring minimal migration time helps maintain the overall performance and responsiveness of the service.

Managing Migration Time

- Optimize Session State Management

Use shared session state mechanisms to reduce the overhead of migrating sessions.

- Efficient Load Balancing Algorithms

Choose and fine-tune load-balancing algorithms that minimize the need for frequent migrations.

- Pre-Migration Preparations

Preload necessary data and resources on the target server before migration to reduce downtime.

- Response Time

Response time is the total time it takes for a system to answer a user request. This includes the time to process the request on the server and the network latency involved in delivering the response back to the user.

Why It Matters

- Direct Impact on User Experience

Faster response times translate to quicker loading times and a smoother user experience, which is crucial for user satisfaction and engagement.

- Indicator of System Efficiency

Response time can indicate how well the load balancer and backend servers perform, highlighting potential bottlenecks.

- Affects Conversion Rates

Quicker response times in e-commerce and online services can lead to higher conversion rates and customer retention.

Managing Response Time

- Optimize Server Performance

Ensure that servers are adequately resourced and optimized for the applications they host.

- Content Caching

Implement caching strategies at the load balancer or server level. This reduces the need for repeated processing of the same requests.

- Network Optimization

Optimize network configurations and paths to reduce latency and improve data transfer speeds.

- Reliability

Reliability in load balancing measures the consistent performance and availability of services over time. It reflects the system’s ability to deliver uninterrupted service at the expected performance levels.

Why It Matters

- Trust and Reputation

Reliable services build trust with users and enhance your organization’s reputation.

- Performance Consistency

Ensures that users have a consistent experience without significant performance variations.

- Operational Stability

High reliability contributes to the overall stability of your operations, reducing the need for emergency interventions.

Managing Reliability

- Quality Hardware and Software

Invest in high-quality infrastructure components and software solutions that are less prone to failure.

- Load Balancing Policies

Develop and implement load balancing policies that ensure even distribution of traffic. This is an effective way of preventing the overloading of individual servers.

- Continuous Improvement

Use insights gained from monitoring reliability metrics to improve your infrastructure continuously.

How to Customize Load Balancer Performance?

Credits: FreePik

Customizing load balancer performance requires one to evaluate their needs and know the best customization strategies. Here is a more in-depth guide on customizing load balancer performance:

Important Considerations

The first step in customizing load balancer performance is considering some important factors. These include:

- Traffic Patterns: Understand your traffic’s nature. Is it steady, or are there peaks during specific times?

- Application Requirements: Different applications may have unique requirements. For instance, a streaming service needs fast data delivery, while an e-commerce site may prioritize session persistence.

- Server Capacity: Understand the capacity of your servers. Balancing loads effectively demands understanding your resource limits.

Customization Strategies

Once you have understood your requirements, you can employ load balancer performance customization strategies.

- Pick the Algorithm Wisely

Different load balancers use various algorithms to distribute traffic. The choice depends on your specific needs:

- Round Robin: Distributes requests evenly across all servers. Best for servers with similar specifications.

- Least Connections: Sends requests to the server with the fewest active connections. Suitable for long-lived connections.

- IP Hash: Directs user requests based on IP address, ensuring a user consistently reaches the same server. This helps maintain session persistence.
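The three algorithms above can be sketched in a few lines each. This is a minimal illustration under simple assumptions: the server names are hypothetical, connection counts are passed in as a plain dictionary, and the IP hash uses SHA-256 only because it is stable across runs.

```python
import hashlib
from itertools import cycle

servers = ["app-1", "app-2", "app-3"]  # hypothetical backend names


def round_robin(pool):
    """Return an iterator that hands out servers in a repeating order."""
    return cycle(pool)


def least_connections(active_connections):
    """Pick the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


def ip_hash(client_ip, pool):
    """Map a client IP to a server so the same client keeps reaching the same server."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]


rotation = round_robin(servers)
print([next(rotation) for _ in range(4)])
print(least_connections({"app-1": 12, "app-2": 3, "app-3": 7}))
print(ip_hash("203.0.113.7", servers))
```

Note that this simple IP hash remaps many clients whenever the pool size changes. Production load balancers often use consistent hashing to limit that effect.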

- Managing Server Health Checks

Regular health checks ensure traffic is only sent to operational servers. Customize health check parameters like frequency, timeout settings, and the health check type (HTTP, TCP) to fit your environment.

- Implementing SSL/TLS Offloading

SSL/TLS offloading removes the encryption and decryption load from your web servers by handling it at the load balancer level. This can improve performance for secure applications because it frees up server resources for application processing.

- Use Caching and Compression

Caching frequently requested content on the load balancer reduces server load and speeds up response times. Similarly, enabling compression for specific content types lowers the amount of data delivered.
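Both ideas can be illustrated with Python's standard library. The sketch below shows a small time-limited cache and a helper that gzips only text-like responses. The class name, the 30-second lifetime, and the list of compressible content types are assumptions made for this example.

```python
import gzip
import time


class TTLCache:
    """Keeps responses for a limited time so repeated requests skip the backend."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: drop it and report a miss
            return None
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock() + self.ttl, value)


def compress_if_text(body, content_type):
    """Gzip text-like responses; pass other content (such as images) through unchanged."""
    if content_type.startswith("text/") or content_type == "application/json":
        return gzip.compress(body)
    return body


cache = TTLCache(ttl_seconds=30)
page = b"<p>hello</p>" * 200
cache.put("/home", compress_if_text(page, "text/html"))
print(len(page), "bytes before,", len(cache.get("/home")), "bytes after compression")
```

Already-compressed formats such as JPEG or PNG gain little from gzip, which is why compression is usually limited to specific content types.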

- Adjusting Session Persistence

Session persistence allows the user’s session data to be maintained across requests. This is vital for applications where the user’s state needs to be preserved. It must be used carefully to avoid overloading a single server.

- Tuning Timeout Settings

Customize timeout settings for your load balancer to ensure that slow connections don’t hold up resources. This includes setting appropriate timeouts for idle connections and adjusting keep-alive settings.
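As a simple illustration of an idle timeout, the sketch below finds connections that have shown no activity for a configured period so they can be closed. The connection IDs, timestamps, and 60-second timeout are hypothetical values.

```python
def idle_connections(last_activity, now, idle_timeout):
    """Return IDs of connections with no activity for at least idle_timeout seconds."""
    return [conn_id for conn_id, last_seen in last_activity.items()
            if now - last_seen >= idle_timeout]


# Hypothetical connection table: connection ID -> time of last activity (seconds).
last_activity = {"c1": 100.0, "c2": 155.0, "c3": 90.0}
print(idle_connections(last_activity, now=160.0, idle_timeout=60.0))
```

A shorter timeout frees resources sooner but can cut off slow yet legitimate clients, so the value is worth tuning against real traffic.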

- Leveraging Advanced Routing Features

Some load balancers offer advanced routing features based on content type, user location, or application-specific data. This can be used to route traffic more intelligently, improving performance for specific types of requests.
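Content-based and location-based routing often comes down to an ordered list of rules where the first match wins. The sketch below shows that pattern. The pool names, path prefixes, and country codes are hypothetical and only serve to illustrate the idea.

```python
def choose_pool(path, country_code):
    """Pick a backend pool from request attributes; the first matching rule wins."""
    if path.startswith("/static/") or path.endswith((".css", ".js", ".png")):
        return "static-pool"        # static assets go to cache-friendly servers
    if path.startswith("/api/"):
        return "api-pool"           # API calls go to application servers
    if country_code in {"DE", "FR", "NL"}:
        return "eu-web-pool"        # regional pool for nearby users
    return "default-web-pool"


for request in [("/static/logo.png", "US"), ("/api/orders", "DE"), ("/checkout", "FR")]:
    print(request, "->", choose_pool(*request))
```

Rule order matters here: an API request from Germany goes to the API pool because that rule is checked before the location rule.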

- Scaling and Auto-scaling

Ensure your load balancer can scale with your traffic. Many modern load balancers offer auto-scaling features that automatically adjust resources based on traffic volume. This ensures optimal performance without manual intervention.
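An auto-scaling decision can be sketched as a small calculation: estimate how many servers the current traffic needs and keep the result within fixed limits. The function below is a simplified illustration. The per-server capacity, the 70% target utilization, and the minimum and maximum counts are assumptions, not recommended values.

```python
import math


def desired_servers(requests_per_second, capacity_per_server,
                    target_utilization_pct=70, min_servers=2, max_servers=20):
    """Estimate the server count that keeps each server near the target utilization."""
    usable_capacity = capacity_per_server * target_utilization_pct
    needed = math.ceil(requests_per_second * 100 / usable_capacity)
    # Clamp so the pool never shrinks below a safe minimum or grows without bound.
    return max(min_servers, min(max_servers, needed))


# Hypothetical: 2,000 requests/second with servers that each handle 500 requests/second.
print(desired_servers(2000, 500))
```

Targeting less than 100% utilization leaves headroom for sudden spikes while new servers are still starting up.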

Ongoing Monitoring

Ongoing monitoring is vital to ensure load balancer performance is always at its best. Use analytics and logging to track performance and identify areas for further optimization. Be prepared to adjust settings as your traffic patterns and application requirements evolve.

Challenges in Load Balancer Performance Customization

Customizing load balancer performance is not without hurdles. Two challenges are especially common: balancing performance against cost, and managing the difficulties of complex applications and microservices architectures.

Balancing between Performance and Cost

Credits: FreePik

Achieving optimal load balancer performance through customization can sometimes increase costs. High-performance algorithms, advanced health check mechanisms, and sophisticated routing strategies come with a need for financial investment. This is the case for both hardware and software load balancers.

Organizations must carefully evaluate their performance needs against their budget constraints. The key is to spot the most critical areas for performance enhancement that offer the best return on investment. For instance, implementing smart caching strategies or optimizing session persistence can provide performance boosts without a considerable cost increase.

Dealing with Complex Applications and Microservices Architectures

Modern web applications are often built using complex architectures such as microservices, which can complicate the customization of load balancers. A microservices architecture divides an application into small, independent services communicating over a network.

This setup introduces numerous endpoints, each potentially requiring different load-balancing strategies. Customizing a load balancer in such environments demands a deep understanding of each service’s specific needs and traffic patterns. It involves intricate configuration and constant tuning to ensure the load is evenly distributed across services.

The dynamic nature of microservices also requires the load balancer to be highly adaptable and adjust to environmental changes.

Benefits of Customizing Load Balancer Performance

What are the benefits of customizing load balancer performance? Here is the answer:

Tailored to Your Needs

Every business is unique, and so is its digital traffic. Customizing your load balancer means setting it up in a way that perfectly matches your specific needs. A customized load balancer is the best option whether you have seasonal traffic spikes, run promotions, or experience steady user visits. This custom approach ensures that your online services reflect your business’s distinctive rhythm and demands.

Improved User Experience

Slow websites are a big no-no. By customizing your load balancer, you ensure that your website loads quickly for every user, every time. This leads to happier visitors who are more likely to stick around, engage with your content, and convert into paying customers. A smooth, fast, responsive website creates a positive first impression that differentiates you from competitors.

Cost Savings

Efficiency isn’t only about speed. It’s also about smart resource use. A customized load balancer optimizes the workload across your servers, so you get the most out of your existing infrastructure. This can delay or eliminate the need for pricey hardware upgrades. It also translates into energy savings and reduced operational costs, which contributes to a more sustainable business model.

Security

Customization allows you to implement advanced security rules and protocols through your load balancer, adding an extra layer of protection against threats like DDoS attacks. Keeping your digital environment secure is crucial for maintaining your users’ trust. This proactive stance on security safeguards your data and strengthens your reputation as a reliable service provider.

High Availability

Downtime is bad news for any business operating online. Customizing your load balancer improves its ability to detect failing servers quickly and route around them by redirecting traffic to healthy servers. This means your services remain available around the clock, ensuring reliability that your users will appreciate. Consistent availability builds user confidence, boosting loyalty and repeat visits.

Scalability

Scalability matters in a fast-growing digital space. A customized load balancer is designed to scale with you. It can accommodate increased traffic without sacrificing performance. This flexibility supports your growth without the growing pains. Scalability ensures your digital infrastructure is always prepared to welcome future growth opportunities.

Hassle-free Maintenance

Customization can also facilitate the maintenance of your digital environment. By automating specific tasks and setting up intelligent monitoring, you reduce the manual effort required to keep things running smoothly, freeing up your team to focus on innovation. This streamlining of operations enhances efficiency and promotes a culture of innovation and constant improvement.

What is Failover?

Failover is a backup operational mode. In this mode, the functions of a system automatically switch to a secondary system when the primary system fails. This mode may also be activated when the primary system is temporarily taken offline for maintenance. This procedure helps ensure that users don’t experience disruptions in service availability.

Failover systems are commonly used in high-availability environments where continuous operation is critical. Some examples include data centers, servers, networks, and databases. Failover aims to minimize downtime and maintain business continuity. Its ability to automatically transition tasks to backup systems until the primary system is restored can greatly benefit businesses.
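The failover behavior described above can be sketched as a small decision rule: use the primary unless it has failed or is under maintenance, and otherwise fall back to the secondary. The class and attribute names below are hypothetical and chosen for illustration.

```python
class FailoverPair:
    """Chooses between a primary and a secondary system."""

    def __init__(self):
        self.primary_healthy = True
        self.primary_in_maintenance = False
        self.secondary_healthy = True

    def active(self):
        """Return which system should currently serve traffic."""
        if self.primary_healthy and not self.primary_in_maintenance:
            return "primary"
        if self.secondary_healthy:
            return "secondary"
        raise RuntimeError("no healthy system available")


pair = FailoverPair()
print(pair.active())            # normal operation
pair.primary_healthy = False
print(pair.active())            # primary failed, traffic moves to the secondary
pair.primary_healthy = True
print(pair.active())            # primary restored, traffic moves back
```

Switching back automatically once the primary recovers is a design choice. Some setups prefer to stay on the secondary until an operator confirms the primary is stable.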

How to Improve Load Balancer Performance With Network and Application Visibility

Network visibility is how well you can see and understand the data flowing through your network. Application visibility is about having insight into the performance of your applications on your network. Here is how you can improve load balancer performance with these two aspects:

The Role of Network and Application Visibility

Visibility allows a load balancer to make informed decisions. It empowers it to divert traffic most efficiently. Network visibility provides insights into the traffic flow, potential bottlenecks, and the health of the network paths. Application visibility goes deeper. It offers an understanding of how different applications perform. It also offers information on response times and the nature of the traffic they generate.

Network and Application Visibility: Improving Load Balancer Performance

Here are a few ways you can improve load balancer performance with network and application visibility:

Monitoring Traffic Patterns

Start by looking at traffic flow through your network. Determine peak times, common traffic sources, and most visited destinations. This understanding allows you to configure your load balancer to handle expected loads. It also enables you to adjust dynamically to sudden traffic spikes or drops.

Analyzing Application Behavior

Different applications have varying resource needs and user interaction patterns. You can change load balancer rules to optimize for these patterns by monitoring how applications behave. This monitoring involves looking at how applications handle requests and how users interact with them. Doing so helps ensure that critical applications always have the resources they need.

Finding and Addressing Bottlenecks

With clear visibility into your network and applications, you can find where bottlenecks occur. Is a particular server slower? Is there a consistently congested network path? Addressing these issues can significantly improve overall load balancer performance. It can help ensure performance consistency.
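One simple way to spot a slow server is to compare each server's average latency against the rest of the pool. The sketch below flags servers whose mean latency is more than twice the median of all server means. The threshold, server names, and sample values are assumptions for illustration.

```python
from statistics import mean, median


def slow_servers(latencies_by_server, factor=2.0):
    """Flag servers whose mean latency exceeds `factor` times the median of all server means."""
    means = {name: mean(samples) for name, samples in latencies_by_server.items()}
    baseline = median(means.values())
    return sorted(name for name, value in means.items() if value > factor * baseline)


# Hypothetical per-server latency samples in milliseconds.
observed = {
    "app-1": [20, 22, 21],
    "app-2": [19, 23, 21],
    "app-3": [95, 110, 101],
}
print(slow_servers(observed))
```

Using the median as the baseline keeps one very slow server from distorting the comparison for the others.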

Implementing Smart Load Balancing Rules

Armed with detailed information, you can set up more intelligent load-balancing rules. For instance, besides routing traffic based on server availability, you can route it based on the type of request, the specific needs of an application, or the user’s location.

Case Study: Transforming Digital Infrastructure for Scalability and Efficiency

A leading online service provider, referred to here as “the Company” (it chose to remain anonymous), faced significant challenges with its existing digital infrastructure. The legacy hardware and systems in place were becoming inadequate to handle the growing volume of traffic and data demands. The rigidity and high costs associated with its outdated setup were major obstacles to scalability and efficiency.

The Challenge

The Company was experiencing several critical issues, including:

- Costly Infrastructure: The legacy systems were expensive to maintain and lacked the flexibility to scale operations cost-effectively.

- Limited Scalability: As demand for the Company’s digital services grew, the existing hardware could not keep up, leading to performance bottlenecks and degraded user experiences.

- Lack of Flexibility: The rigid infrastructure hindered the Company’s ability to adapt to changing market dynamics and technological advancements, stifling innovation and growth.

The Solution

In search of a solution that could address these challenges, the Company turned to RedSwitches for its advanced load-balancing solutions. RedSwitches provided a highly flexible and performance-oriented infrastructure that was tailor-made to meet the Company’s specific needs.

Implementation

The transition involved:

- Custom Load Balancing: RedSwitches implemented a custom load balancing solution that efficiently distributed traffic across servers. This ensured high availability and optimal performance.

- Scalable Architecture: The new infrastructure was designed to be easily scalable. It allowed the Company to expand its resources seamlessly as traffic demands increased.

- Cost Efficiency: RedSwitches’ solution significantly reduced operational costs. This eliminated the financial burden of outdated legacy systems.

The Impact

The impact of the new infrastructure was profound:

- Performance Boost: The Company handled a traffic volume that exceeded its initial capacity goals.

- Massive Cost Savings: The transition to RedSwitches’ solutions saved the Company up to 90% compared to the legacy systems.

- Greater Flexibility: The flexible nature of the infrastructure allowed the Company to quickly adapt to new requirements and incorporate the latest technologies, fostering innovation.

Load Balancer Efficiency Combined with Dedicated Servers


Are you wondering what happens when you integrate a load balancer with dedicated servers? Keep reading to learn.

Load Balancer Performance

Load balancer performance is crucial as it directly impacts the responsiveness and reliability of the applications it serves. Some benefits connected to load balancer performance efficiency include:

- Traffic Management

Load balancers route client requests to the least busy servers, ensuring balanced workloads.

- Scalability

They allow for easy scaling of server resources to handle increased traffic without service degradation.

- Reliability and Availability

By rerouting traffic from failed servers to healthy ones, load balancers enhance the overall reliability of your services.

- Latency Reduction

Load balancers can reduce latency by directing user requests to the server closest to them.
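As a rough illustration of the traffic management and reliability points above, the Python sketch below skips unhealthy servers and then picks the one with the fewest active connections. The server records and their field names (`healthy`, `active_connections`) are hypothetical; a real load balancer gathers this state from its own health checks and connection tracking.

```python
def pick_least_busy(servers):
    """Return the name of the healthy server with the fewest active connections."""
    # Reliability: failed servers are removed from consideration.
    healthy = [s for s in servers if s["healthy"]]
    if not healthy:
        raise RuntimeError("no healthy servers available")
    # Traffic management: choose the least busy of the remaining servers.
    return min(healthy, key=lambda s: s["active_connections"])["name"]


# Hypothetical snapshot of server state.
servers = [
    {"name": "srv-a", "healthy": True, "active_connections": 12},
    {"name": "srv-b", "healthy": False, "active_connections": 0},
    {"name": "srv-c", "healthy": True, "active_connections": 7},
]
print(pick_least_busy(servers))
```

Here `srv-b` has the fewest connections but is marked unhealthy, so the request goes to `srv-c` instead.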

Dedicated Server Performance

Dedicated servers are powerful because they offer:

- Dedicated Resources

All server resources (CPU, storage, RAM) are allocated to a single tenant. This ensures high performance and stability for hosted applications.

- Customization and Control

Users have complete control over the server environment. This allows for custom configurations tailored to specific application needs.

- Security

With no shared resources, dedicated servers offer a more secure environment. They minimize the risk of data breaches.


Synergy Between Load Balancers and Dedicated Servers

Integrating a load balancer with dedicated servers creates a synergistic effect that enhances overall system performance:

- Efficient Traffic Distribution

The load balancer spreads requests so that no single dedicated server is overloaded. This keeps applications running smoothly.

- Increased Availability and Reliability

The load balancer increases applications’ overall availability and reliability by directing traffic from underperforming servers to healthier ones.

- Scalability

As a business grows, the load balancer can distribute traffic across additional dedicated servers. This allows the system to handle increased load without degradation in performance.

- Improved User Experience

With optimized load distribution, end-users experience faster response times and higher reliability. This contributes to overall satisfaction.

Conclusion – Customizing Load Balancer Performance

Optimizing your load balancer performance requires an in-depth understanding of your needs and customization strategies. Such customization helps ensure the efficient distribution of traffic across servers. It can directly impact your business’s bottom line.

Make RedSwitches your partner in this journey. We offer robust dedicated server solutions that form the backbone for customizable load balancing strategies. With RedSwitches, you’re investing in superior technology and the assurance that your online presence is optimized for the highest performance standards. Learn more about custom load balancer performance optimization by contacting us today.

FAQs

Q. Does a load balancer increase performance?

A load balancer can increase performance by effectively dispersing incoming network traffic across multiple servers. It prevents any one server from becoming overloaded. This ensures quicker response times and improved overall service availability for users.

Q. How do I check my load balancer performance?

You can monitor metrics like response time and throughput to check your load balancer’s performance. Some other metrics include error rates and server health statuses. Many load balancers come with built-in monitoring tools. You can also use third-party monitoring solutions to gather and analyze these metrics.
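If you export raw request data from your monitoring tool, a few lines of Python can turn it into these metrics. The sketch below assumes each sample is a `(latency_ms, http_status)` pair and treats 5xx responses as errors; both choices are assumptions for illustration, and the 95th percentile uses the simple nearest-rank method.

```python
import math


def summarize(samples, window_seconds):
    """Summarize (latency_ms, http_status) samples collected over a time window."""
    if not samples:
        raise ValueError("no samples to summarize")
    latencies = sorted(latency for latency, _ in samples)
    # Assumption: only 5xx responses count as errors.
    errors = sum(1 for _, status in samples if status >= 500)
    # Nearest-rank 95th percentile: smallest value covering 95% of samples.
    rank = math.ceil(0.95 * len(latencies))
    return {
        "throughput_rps": len(samples) / window_seconds,
        "error_rate": errors / len(samples),
        "avg_latency_ms": sum(latencies) / len(latencies),
        "p95_latency_ms": latencies[rank - 1],
    }


# Four hypothetical requests observed over a 2-second window.
report = summarize([(100, 200), (200, 200), (300, 500), (400, 200)], window_seconds=2)
print(report)
```

For this tiny sample, the report shows 2 requests per second, a 25% error rate, a 250 ms average latency, and a 400 ms 95th-percentile latency.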

Q. What is load balancer performance testing?

Load balancer performance testing involves simulating traffic to see how effectively it allocates network traffic across all servers. This testing helps identify the maximum capacity and response time under various loads. It also helps determine the overall efficiency of the load balancer in maintaining application performance.
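The idea can be sketched in a few lines of Python. This toy simulation sends a batch of requests through a selection function and counts how many land on each server. The server names and the round-robin picker are assumptions for illustration; a real test would send actual traffic at the load balancer and record response times too.

```python
from itertools import cycle


def simulate(pick, servers, num_requests):
    """Send num_requests through pick() and count the hits per server."""
    counts = {server: 0 for server in servers}
    for _ in range(num_requests):
        counts[pick()] += 1
    return counts


def imbalance(counts):
    """Gap between the busiest and the least busy server (0 means perfectly even)."""
    return max(counts.values()) - min(counts.values())


# Hypothetical servers, with a simple round-robin picker standing in for the load balancer.
servers = ["srv-a", "srv-b", "srv-c"]
rotation = cycle(servers)
counts = simulate(lambda: next(rotation), servers, 10)
print(counts, imbalance(counts))
```

With 10 requests over 3 servers, round-robin gives `srv-a` four requests and the others three each, an imbalance of 1. Swapping in a different `pick` function lets you compare how evenly other strategies spread the same load.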

Q. What is a load balancer and how does it work?

A load balancer is a device or software that evenly distributes network traffic across multiple servers. It helps optimize resource utilization, maximize throughput, minimize response time, and avoid server overload. It dynamically routes client requests across all available servers. This ensures each server handles a manageable load and optimizes overall performance.

Q. What are the benefits of load balancing?

Load balancing offers several benefits. These include improved server uptime and availability, enhanced website performance, and scalability to handle increased traffic. Other benefits include redundancy to prevent single points of failure and efficient resource allocation to maximize server capacity.

Q. What are the different types of load-balancing algorithms?

There are various load balancing algorithms. These include round-robin, least connections, IP hash, least response time, and weighted round-robin. Each algorithm uses a different strategy to distribute traffic among servers, based on factors such as server load, response time, or client IP address.
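To show how three of these algorithms differ, here is a simplified Python sketch of round-robin, weighted round-robin, and IP hash. The server names, weights, and client IP are hypothetical, and production load balancers use more refined versions (for example, smoother interleaving for weighted round-robin).

```python
import hashlib
from itertools import cycle


def round_robin(servers):
    """Yield servers in a fixed rotating order."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Yield each server as many times per cycle as its integer weight."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


def ip_hash(client_ip, servers):
    """Map a client IP to the same server every time (while the server list is unchanged)."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(["srv-a", "srv-b"])
print([next(rr) for _ in range(3)])    # srv-a, srv-b, srv-a

wrr = weighted_round_robin({"big": 2, "small": 1})
print([next(wrr) for _ in range(6)])   # "big" appears twice as often as "small"

print(ip_hash("203.0.113.7", ["srv-a", "srv-b", "srv-c"]))
```

Round-robin ignores server capacity, weighted round-robin favors stronger servers, and IP hash keeps a given client on one server, which helps with session persistence.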

Q. What is the difference between software and hardware load balancers?

Software load balancers are implemented as software applications installed on standard server hardware, providing flexibility and cost-effectiveness. In contrast, hardware load balancers are purpose-built devices with dedicated hardware components. They offer high performance, scalability, and advanced features suitable for large-scale deployments.

Q. How does global server load balancing differ from standard load balancing?

Global server load balancing (GSLB) extends traditional load balancing. It achieves this by distributing traffic across geographically dispersed data centers or cloud regions to optimize global application performance and ensure high availability. It considers server proximity, latency, and health to route client requests to the best server location.

Q. What are the different types of load balancing, and which one is suitable for cloud environments?

Load balancing can be categorized into hardware, software, and cloud-based solutions. Cloud load balancing, including DNS-based and application-based load balancing, is the popular choice in cloud environments. This popularity is due to its scalability, flexibility, and ability to distribute network traffic across cloud resources.

Q. What is the role of layer 4 and layer 7 load balancing in network infrastructure?

Layer 4 load balancing operates at the transport layer of the OSI model. It makes routing decisions based on source and destination IP addresses and ports. In contrast, layer 7 load balancing functions at the application layer. It makes routing decisions based on specific application data, URLs, or HTTP headers. This enables more sophisticated traffic management and application-aware routing.
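The difference is easier to see side by side. In the Python sketch below, the layer 4 function sees only addresses and ports, while the layer 7 function can inspect the URL path and HTTP headers. The class names, pool names, and routing rules are all assumptions for illustration, not the behavior of a specific product.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Connection:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


@dataclass
class HttpRequest:
    conn: Connection
    path: str
    headers: dict = field(default_factory=dict)


def route_l4(conn, backends):
    """Layer 4: pick a backend using only IP addresses and ports."""
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}".encode()
    return backends[zlib.crc32(key) % len(backends)]


def route_l7(req, pools):
    """Layer 7: pick a pool using application data (URL path, HTTP headers)."""
    if req.path.startswith("/api/"):
        return pools["api"]
    if req.headers.get("Accept", "").startswith("image/"):
        return pools["images"]
    return pools["web"]


conn = Connection("198.51.100.4", 50312, "203.0.113.10", 443)
pools = {"api": "api-pool", "images": "image-pool", "web": "web-pool"}
print(route_l4(conn, ["srv-a", "srv-b"]))
print(route_l7(HttpRequest(conn, "/api/orders"), pools))
```

The layer 4 decision is fast because it never reads the request body, whereas the layer 7 decision costs more per request but can send API calls, images, and web pages to different pools.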

Q. What is dynamic load balancing, and how does it differ from static load balancing?

Dynamic load balancing adjusts the distribution of traffic in real time based on current server load. It ensures optimal resource utilization and responsiveness. In contrast, static load balancing distributes traffic based on predefined configurations. It does not adapt to changing server conditions.

Q. How do hardware load balancers and software load balancers differ in their requirements and capabilities?

Hardware load balancers require specialized hardware appliances. They are designed for high-performance and high-throughput environments. Software load balancers can run on standard hardware and offer flexibility and integration with existing infrastructure. Both types have unique features and capabilities tailored to different deployment scenarios.

Q. What are the key considerations for achieving effective load balancer performance customization?

Effective load balancer performance customization involves several key considerations. These include understanding traffic patterns and application requirements, selecting the appropriate load-balancing algorithm, and configuring health checks and session persistence. Other factors include optimizing SSL termination and using advanced features.