Key Takeaways

- Load balancing distributes network traffic across multiple servers.

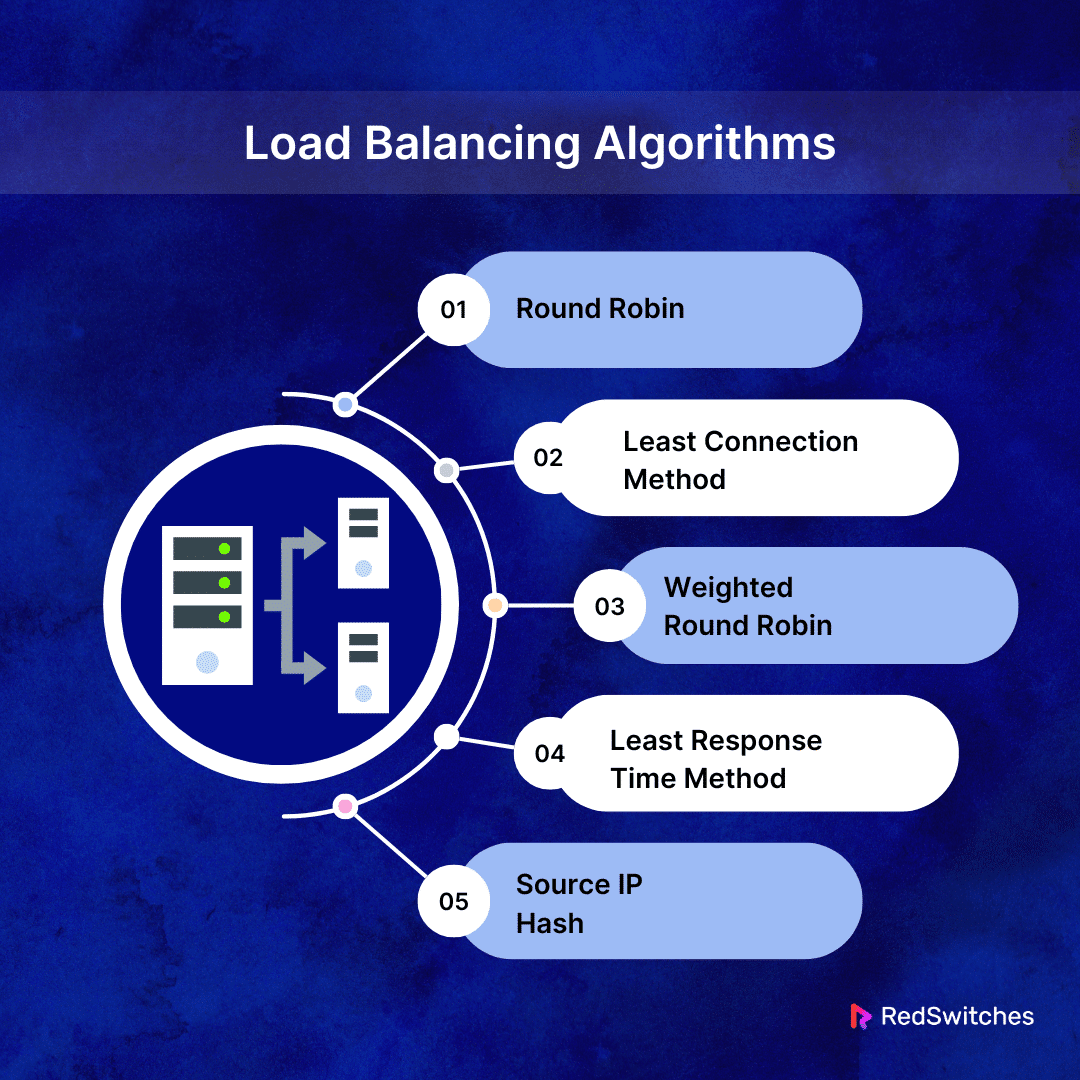

- There are several load balancing algorithms, including Round Robin, Least Connection, and Weighted Round Robin.

- A load balancer works by balancing traffic load, managing traffic arrival, and conducting health checks.

- Load balancer implementation can offer many benefits, including enhanced reliability, efficiency, and scalability.

- Tools for load balancing include software and hardware solutions.

- Load balancer implementation requires pre-planning based on needs and infrastructure.

- Load balancer implementation begins with installation, configuration, and server integration.

- Ongoing upkeep of load balancers ensures optimal performance.

- A dedicated server for load balancing offers benefits like more control and performance.

Did you know that, by some industry estimates, an hour of server downtime can cost around $301,000 to $400,000 or more? This highlights the critical need for solutions that keep services online, and it is where load balancers become an indispensable tool.

A load balancer is a technology designed to distribute network or application traffic across multiple servers. This offers several benefits, like enhanced performance and reliability.

This practical tutorial walks you through load balancer implementation: setting up, configuring, and maintaining a load-balancing system.

Let’s Begin!

Table of Contents

- Key Takeaways

- What Is Load Balancing?

- Load Balancing Algorithms

- How Load Balancing Works

- Benefits of Load Balancing

- What are the Tools for Load Balancing?

- Pre-Implementation Considerations

- Load Balancer Implementation

- Load Balancer Implementation: Maintenance and Monitoring

- Benefits of Setting Up a Dedicated Server for Load Balancing

- Conclusion – Load Balancer Implementation

- FAQs

What Is Load Balancing?

Before we discuss the aspects of load balancer implementation, it is important to understand what load balancing is.

Load balancing is the process by which a load balancer distributes incoming network traffic across multiple servers. This ensures that no single server is overloaded by too many requests, a condition that can cause slower response times and server failure.

Load balancer implementation helps maintain optimal service speed and reliability by spreading the load evenly. It’s like having several checkout lanes in a grocery store: the customers (network requests) are divided among all available lanes (servers), which reduces the wait time for everyone. Besides managing traffic efficiently, load balancing provides failover: it automatically redirects traffic from a failing server to a healthy one, ensuring continuous service availability.

This makes load balancer implementation critical in any high-availability, high-performance computing environment.

Do you want to learn more about network load balancers? Read our informative guide, ‘Guide To Network Load Balancer: How Load Balancing Works.’

Load Balancing Algorithms

The algorithms that dictate how traffic is distributed are central to the effectiveness of load balancing. Understanding these algorithms is a necessary aspect when planning load balancer implementation. Here are some widely used load-balancing algorithms:

Round Robin

The Round Robin algorithm is the simplest form of load balancing. It allocates incoming requests sequentially and evenly across all servers. Think of it as taking turns; each server gets a request in a cyclic order.

This method is straightforward and effective in scenarios where all servers have similar capabilities. However, its simplicity also means it doesn’t account for the current load or server performance. This can cause imbalances if servers have varying capacities or current loads.
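The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not a production balancer; the server addresses are hypothetical placeholders.

```python
from itertools import cycle

# Hypothetical backend addresses, used only for illustration.
servers = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]

# cycle() yields the servers in order and wraps around indefinitely.
rotation = cycle(servers)

def pick_server():
    """Return the next server in the rotation."""
    return next(rotation)

# Six requests are spread evenly: each server receives two.
assignments = [pick_server() for _ in range(6)]
print(assignments)
```

Note that the picker never looks at how busy a server is, which is exactly the limitation described above.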

Least Connection Method

The Least Connection method directs new requests to the server with the fewest active connections. This approach is more dynamic than Round Robin because it considers the current state of the server pool.

It’s especially effective in environments where session lengths vary, because it helps prevent any single server from being overloaded with long-lasting connections. This method ensures a more even load distribution, especially in real-time situations.
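As a rough sketch, the selection step can be expressed as picking the minimum from a table of connection counts. The server names and starting counts below are made up for the example, and a real balancer would update the counts as connections open and close.

```python
# Hypothetical count of active connections per server.
active = {"app-1": 12, "app-2": 4, "app-3": 9}

def pick_least_connections(active):
    """Return the server that currently has the fewest active connections."""
    return min(active, key=active.get)

def open_connection(active):
    """Route a new connection and record it against the chosen server."""
    server = pick_least_connections(active)
    active[server] += 1
    return server

def close_connection(active, server):
    """Record that a connection on the given server has finished."""
    active[server] -= 1

first = open_connection(active)
print(first, active)
```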

Weighted Round Robin

Building on the Round Robin concept, the Weighted Round Robin algorithm assigns a weight to each server based on its capacity. Servers with higher capacities get a larger share of the requests.

This method is an enhancement over the basic round-robin. It allows for a more nuanced distribution of traffic that accounts for the varied capabilities of each server. It ensures that more powerful servers do more work. This helps enhance the efficiency of the load-balancing process.
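One simple way to illustrate weighting is to give each server as many slots in the rotation as its weight. The names and weights below are assumptions for the sketch; production balancers often interleave the picks more smoothly than this.

```python
from itertools import cycle

# Hypothetical weights: a higher weight means more capacity.
weights = {"big": 3, "medium": 2, "small": 1}

def build_rotation(weights):
    """Repeat each server name according to its weight, then cycle forever."""
    slots = [name for name, weight in weights.items() for _ in range(weight)]
    return cycle(slots)

rotation = build_rotation(weights)

# Over 12 requests, the shares follow the 3:2:1 weights.
picks = [next(rotation) for _ in range(12)]
counts = {name: picks.count(name) for name in weights}
print(counts)
```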

Least Response Time Method

The Least Response Time method selects the server with the shortest response time for the next request. This algorithm considers the number of active connections and how quickly the server can respond to requests.

It’s an intelligent approach to ensuring requests are distributed and handled efficiently. This leads to faster application performance and improved user experience.
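The sketch below shows one possible way to combine the two signals. The scoring formula (active connections plus one, multiplied by average response time) is an assumption chosen for illustration; actual products may weigh these inputs differently.

```python
from dataclasses import dataclass

@dataclass
class Backend:
    name: str
    active_connections: int
    avg_response_ms: float

def score(backend):
    """Lower is better. The formula is an illustrative assumption."""
    return (backend.active_connections + 1) * backend.avg_response_ms

def pick_fastest(backends):
    """Return the backend with the lowest combined score."""
    return min(backends, key=score)

# Hypothetical measurements for three servers.
backends = [
    Backend("app-1", active_connections=10, avg_response_ms=20.0),
    Backend("app-2", active_connections=2, avg_response_ms=50.0),
    Backend("app-3", active_connections=5, avg_response_ms=30.0),
]

chosen = pick_fastest(backends)
print(chosen.name)
```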

Source IP Hash

The Source IP Hash algorithm uses the IP address of the incoming request to determine which server will handle it. It computes a hash of the source IP address and maps that hash to one of the servers, so a particular user is consistently connected to the same server as long as the server pool does not change.

This consistency can be crucial for session persistence in applications where it’s essential to maintain user state across sessions. It may, however, cause uneven distribution if many requests come from a limited set of IP addresses.
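A minimal sketch of the idea follows, assuming a SHA-256 hash and a simple modulo mapping. Both are choices made for this example, and the server names and client address are hypothetical.

```python
import hashlib

# Hypothetical backend names.
servers = ["app-1", "app-2", "app-3"]

def pick_by_source_ip(client_ip, servers):
    """Map a client IP to a server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to the pool size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]

# The same address always maps to the same server while the pool is unchanged.
print(pick_by_source_ip("203.0.113.7", servers))
print(pick_by_source_ip("203.0.113.7", servers))
```

With a modulo mapping like this, adding or removing a server reassigns many clients at once. Consistent hashing is a common way to limit that reshuffling.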

Also Read: Set Up HAProxy Load Balancing For CentOS 7 Servers.

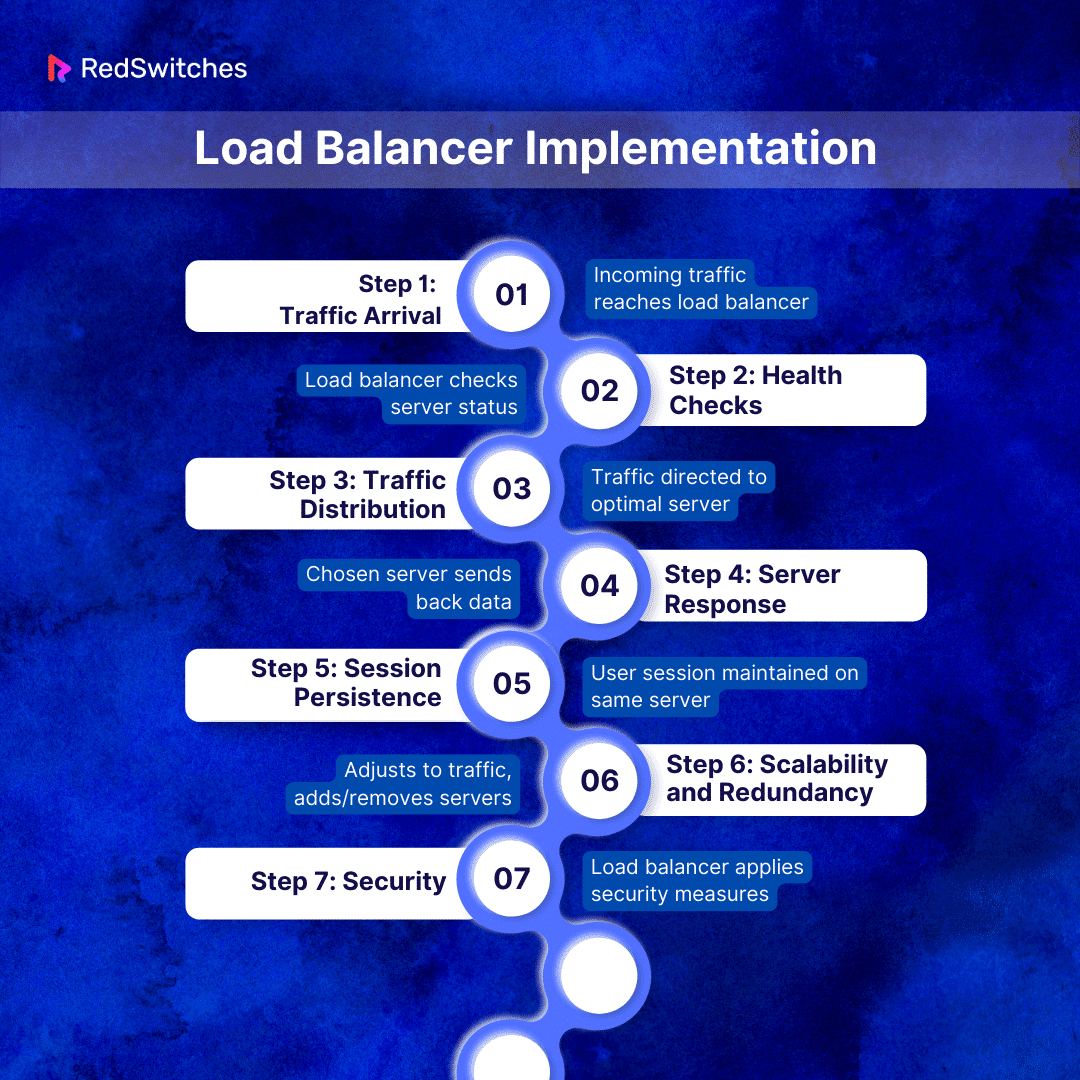

How Load Balancing Works

A load balancer’s work begins when traffic arrives. Here is a step-by-step rundown of how a load balancer works:

Step 1: Traffic Arrival

When a user clicks a link or enters a URL, the load balancer receives the request first. This interception is important because it lets the load balancer manage the flow of requests and ensure that no single server is overwhelmed. At this point, the load balancer’s job is to assess incoming requests and route them effectively, keeping the user experience responsive and seamless.

Step 2: Health Checks

The load balancer oversees the health of all servers via health checks. These checks can range from simple pings to more complex connection attempts or specific URL requests.

The goal is to evaluate whether the server is ready to handle new requests. Servers failing these checks are temporarily removed from the pool. This ensures that traffic is only directed to operational servers, helping maintain service continuity.
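As an illustration, a basic connection-attempt check can be written with Python’s standard `socket` module. The function names and the one-second timeout are assumptions for this sketch; the check function is passed in as a parameter so it can be swapped for an HTTP request or another probe.

```python
import socket

def tcp_check(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False

def healthy_servers(pool, check=tcp_check):
    """Keep only the (host, port) pairs that pass the given check."""
    return [(host, port) for host, port in pool if check(host, port)]
```

Calling `healthy_servers(pool)` before each routing decision, or on a timer, yields the subset of servers that should receive traffic.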

Step 3: Traffic Distribution

Once it has confirmed the servers’ health, the load balancer uses an algorithm to distribute the traffic:

Round Robin

This method cycles through the servers in order, ensuring a uniform distribution of requests. It’s simple but effective for evenly-matched servers.

Least Connections

This algorithm favors servers with fewer active connections, assuming they have more free capacity, which is ideal for sessions of varying lengths.

IP Hash

The user’s IP address determines the server assignment. This method ensures a user consistently interacts with the same server, which benefits session continuity.

Step 4: Server Response

The chosen server processes the incoming request and sends the response back to the load balancer, which relays it to the user. This back-and-forth is seamless from the user’s perspective. It maintains the illusion of direct communication with a single server.

Step 5: Session Persistence

Some applications, like online shopping, require a user to stay connected to the same server for session consistency. Load balancers manage this through session persistence, using techniques like cookies or session IDs to map users to specific servers. This helps retain a coherent user experience.
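The mapping from session IDs to servers can be sketched as a lookup table. In this hypothetical example, the session ID stands in for the value a load balancer would store in a cookie, and the table is kept in memory for simplicity.

```python
from itertools import cycle
import uuid

# Hypothetical backend names.
servers = ["app-1", "app-2", "app-3"]
rotation = cycle(servers)

# Maps a session ID (for example, a cookie value) to its assigned server.
session_table = {}

def route(session_id=None):
    """Return (session_id, server); known sessions keep their server."""
    if session_id in session_table:
        return session_id, session_table[session_id]
    # New or unknown visitor: issue a fresh ID and assign the next server.
    session_id = uuid.uuid4().hex
    server = next(rotation)
    session_table[session_id] = server
    return session_id, server

sid, server = route()          # first visit
again = route(sid)             # follow-up request carrying the same ID
print(server, again[1])
```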

Step 6: Scalability and Redundancy

Load balancers are highly adaptable. They allow servers to be added or removed dynamically based on demand, so the system scales efficiently. If a server fails, the load balancer reroutes that server’s traffic to the remaining servers, providing a fail-safe that maintains uninterrupted service.
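A small sketch of a server pool that can grow and shrink at runtime is shown below. The class and method names are hypothetical, and the round-robin index is kept deliberately simple.

```python
class ServerPool:
    """A pool whose membership can change while requests are being routed."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._next = 0

    def add(self, server):
        """Bring a new server into rotation (no-op if already present)."""
        if server not in self.servers:
            self.servers.append(server)

    def remove(self, server):
        """Take a failed or retired server out of rotation."""
        if server in self.servers:
            self.servers.remove(server)

    def pick(self):
        """Return the next server; the index wraps to the current pool size."""
        if not self.servers:
            raise RuntimeError("no servers available")
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        return server

pool = ServerPool(["app-1", "app-2"])
pool.remove("app-1")   # simulate a failure: traffic now goes only to app-2
pool.add("app-3")      # scale out: app-3 joins the rotation
print([pool.pick() for _ in range(4)])
```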

Step 7: Security

Security is integral for modern load balancers. Load balancers act as a defense against attacks by pre-screening incoming requests. SSL termination offloads encryption tasks from the servers. This helps free resources while maintaining secure data transmission. Load balancers can also mitigate DDoS attacks by spreading traffic or using defensive measures to filter out malicious requests.

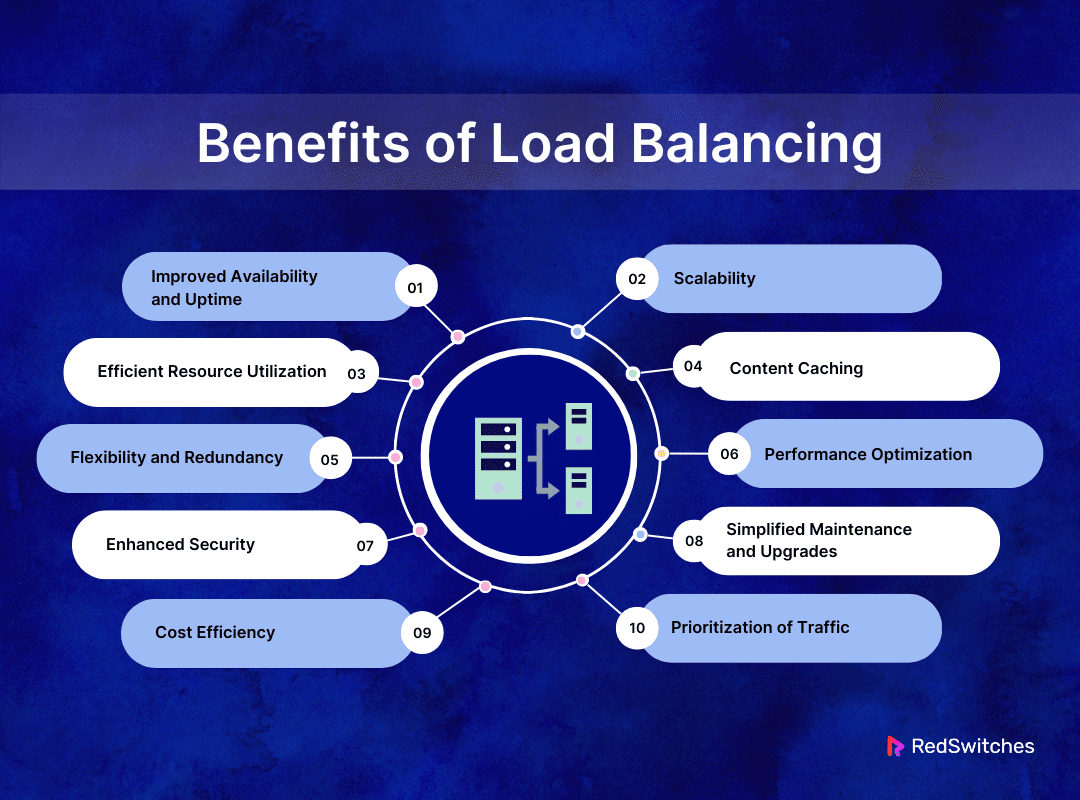

Benefits of Load Balancing

Load balancers offer an abundance of advantages, including improved availability and uptime. Here is a more in-depth look at the numerous benefits of load balancer implementation:

Improved Availability and Uptime

Load balancers are valued for the high availability they provide. They distribute traffic across multiple servers, so even if one server goes down, the others can take over and keep your services online. This redundancy is vital for maintaining continuous service. It makes load balancing a must-have for businesses where downtime can cause customer dissatisfaction and revenue loss.

Scalability

Load balancers offer immense scalability. Load balancing allows you to add more servers to handle the increased traffic easily. This empowers you to scale your resources to match growing demand. The best part is that this can be done without interrupting your services. This helps ensure a smooth user experience.

Efficient Resource Utilization

Efficiency is another hallmark of load balancing. By distributing traffic evenly, it ensures that no server sits idle while others are overloaded. This optimal use of resources prevents overprovisioning and underutilization, leading to a more cost-effective and environmentally friendly IT infrastructure.

Content Caching

Load balancers can also cache content, storing copies of frequently accessed files. This lowers the load on the server and accelerates the response time for the user. It makes your website or application even faster.
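The caching behavior can be illustrated with a small time-to-live (TTL) cache. Everything here is a simplified assumption: the class name, the `origin` callable standing in for a backend server, and the ten-second lifetime.

```python
import time

class TTLCache:
    """Stores values for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (value, expiry time)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # entry is stale, drop it
            return None
        return value

    def put(self, key, value):
        self._store[key] = (value, self._clock() + self._ttl)

def fetch(path, cache, origin):
    """Serve from the cache when possible; otherwise ask the origin server."""
    cached = cache.get(path)
    if cached is not None:
        return cached
    body = origin(path)
    cache.put(path, body)
    return body

cache = TTLCache(ttl_seconds=10)
print(fetch("/logo.png", cache, origin=lambda path: "bytes of " + path))
```

Repeated calls to `fetch` for the same path within the TTL are answered from memory, so the origin server sees only the first request.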

Flexibility and Redundancy

Load balancing offers unmatched flexibility. It allows for the seamless addition or removal of servers without disrupting the service. This redundancy ensures that in the event of a server failure, others can take its place, maintaining the service’s availability without any manual intervention.

Performance Optimization

Load balancing enhances user experience by optimizing performance. It ensures requests are directed to the server with the quickest response time or the lowest load. It lowers the risk of any single server becoming a bottleneck. This results in faster load times and a more responsive application or website.

Enhanced Security

Security is a key concern for online services. Load balancing contributes to a more secure environment. The even traffic allocation can help mitigate DDoS attacks and several other prominent security concerns. Some load balancers offer advanced features like SSL offloading, further enhancing security.

Simplified Maintenance and Upgrades

Load balancers make maintenance and upgrades less disruptive. Servers can be taken offline for maintenance without impacting the overall service availability. This means you can keep your systems up-to-date and secure with minimal impact on the end user.

Cost Efficiency

Cost efficiency is a major advantage of load balancing. Businesses can save on hardware expenses and lower energy consumption by optimizing resource utilization and allowing for scalability. This makes load balancing not only a technical but also an economically smart choice.

Prioritization of Traffic

Some advanced load balancers allow traffic prioritization. This ensures critical requests are handled first. This is particularly important for services that handle critical and non-critical requests. It offers the peace of mind that high-priority tasks are completed swiftly.
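One way to picture prioritization is a priority queue in front of the servers. This sketch uses Python’s `heapq`; the class name, the request labels, and the convention that a lower number means higher priority are assumptions for the example.

```python
import heapq
from itertools import count

class PriorityDispatcher:
    """Hands out queued requests in priority order (lower number first)."""

    def __init__(self):
        self._heap = []
        # The counter breaks ties so equal priorities keep arrival order.
        self._order = count()

    def submit(self, request, priority):
        heapq.heappush(self._heap, (priority, next(self._order), request))

    def next_request(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

dispatcher = PriorityDispatcher()
dispatcher.submit("report-export", priority=5)
dispatcher.submit("checkout", priority=0)
dispatcher.submit("login", priority=0)
dispatcher.submit("thumbnail", priority=9)

handled = [dispatcher.next_request() for _ in range(len(dispatcher))]
print(handled)
```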

Also Read: Exploring Fault Tolerance Vs High Availability.

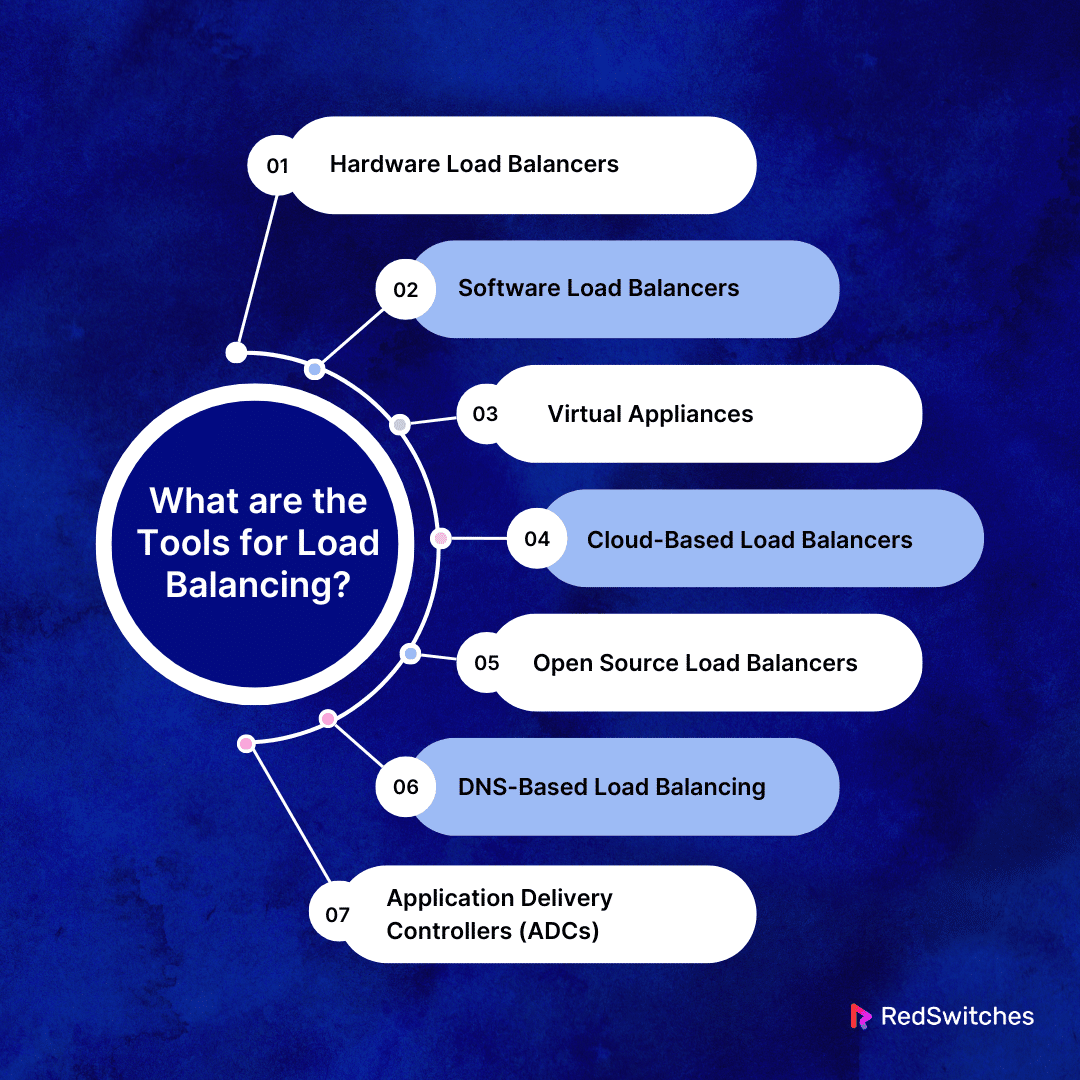

What are the Tools for Load Balancing?

Before you begin your load balancer implementation journey, it is critical to become familiar with tools for load balancing. Here is information on the key tools available for load balancing:

Hardware Load Balancers

Hardware load balancers are physical devices built specifically for load balancing. They are installed directly into the network infrastructure and are known for their robustness and high performance. Hardware load balancers are ideal for environments with extensive traffic because they can handle large data volumes with low latency. Their dedicated processing power makes them highly reliable, though they come with higher initial costs than software solutions.

Software Load Balancers

Software load balancers provide a flexible and cost-effective alternative to hardware. They can run on standard hardware or be deployed in virtual environments. This offers high customization.

These tools are ideal for cloud-based or virtualized settings, where scalability and adaptability are key. Software load balancers can smoothly integrate with existing IT systems. This allows for automated scaling and management.

Virtual Appliances

Virtual appliances are pre-configured software load balancers that can be installed on virtual machines. They combine the convenience of software solutions with the efficiency of hardware devices. This offers a middle ground for businesses looking for a balance between performance and cost. Virtual appliances are easy to deploy and handle, making them suitable for businesses with limited IT resources.

Cloud-Based Load Balancers

Cloud-based load balancers are offered as part of cloud computing services. They provide scalability and flexibility, allowing businesses to adjust their load-balancing capabilities based on demand.

These tools are managed by the cloud service provider, reducing the need for in-house maintenance. Cloud-based load balancers are ideal for organizations with fluctuating traffic patterns or those looking to minimize their on-premises hardware.

Open Source Load Balancers

Open-source load balancers offer a customizable and cost-effective solution for businesses willing to manage their load-balancing setup. These tools can be tailored to specific needs, providing significant control over the load-balancing process. However, they demand specific technical expertise to configure and maintain.

DNS-Based Load Balancing

DNS-based load balancing uses the Domain Name System to allocate traffic among servers. It directs user requests to different server IP addresses based on geography, server health, and load. While not as dynamic as other methods, DNS-based load balancing is simple and can effectively distribute traffic across geographically dispersed servers.

Application Delivery Controllers (ADCs)

ADCs are advanced load balancers that offer additional features like security, application acceleration, and SSL offloading. They provide a comprehensive solution for managing traffic and protecting applications. ADCs are suitable for complex enterprise environments where traffic management needs to go beyond simple load distribution.

Also Read: Highly Available Architecture: Definition, Types & Benefits.

Pre-Implementation Considerations

Before load balancer implementation, proper planning is critical to ensure the best outcomes. Here is more information on the critical pre-implementation considerations to ensure a smooth and effective load balancer implementation.

Understand Your Needs

Before implementing load balancers, it is important to understand your needs to ensure an effective implementation.

Assess Traffic Patterns

Analyzing your network’s traffic patterns is one of the first and most important steps before load balancer implementation. Understanding peak traffic times and the types of requests your servers handle is crucial. This insight will guide the configuration of your load balancer for optimal performance.

Identify Critical Services

Determine which services are critical to your operations. Prioritizing these services ensures that your load balancer configuration aligns with your business objectives, maintaining high availability where it’s most needed.

Choose the Right Type of Load Balancing

Once you have identified your needs, you must choose the right load balancing type.

Hardware vs. Software Load Balancers

The second step before load balancer implementation is to decide between hardware and software load balancers based on your requirements. Hardware solutions offer robust performance but at a higher cost. Software load balancers provide flexibility and are easier to scale.

Application Delivery Controllers

Consider Application Delivery Controllers (ADCs) if you need advanced features like application-specific load balancing, SSL offloading, and automatic traffic management.

Evaluate Your Infrastructure

The third step is to evaluate your infrastructure to ensure it can accommodate your load balancer implementation.

Network Topology

Understanding your existing network topology is essential. The placement of your load balancer within your network impacts its effectiveness in distributing traffic.

Server Capacity

Evaluate your servers’ capacity. Ensure they can handle the redistributed load effectively once the load balancer is in place.

Security Considerations

Address security before finalizing the implementation to avoid security issues later.

SSL Offloading

Decide if you’ll use your load balancer for SSL offloading. This can reduce the load on your backend servers but requires careful security considerations, such as handling SSL certificates.

DDoS Protection

Consider the load balancer’s role in DDoS protection. A well-configured load balancer can help mitigate DDoS attacks by distributing traffic evenly and identifying malicious traffic patterns.

Compliance and Regulations

Compliance and regulations are other critical factors one should not ignore before load balancer implementation.

Data Protection Laws

Ensure your load balancer setup complies with relevant data protection laws. This is particularly important if you’re handling sensitive information that may be subject to regulations like GDPR. Ensuring this beforehand can help you avoid hefty fines or, in worse cases, closures.

Industry Standards

Adhere to industry standards and best practices. Compliance ensures that your load-balancing implementation meets the required quality and security benchmarks.

Scalability and Future Growth

It is also important to keep future growth and scalability in mind before implementing a load balancer.

Anticipate Future Growth

Plan for future growth. A scalable load-balancing solution can adapt to increasing traffic volumes and evolving business needs without requiring a complete overhaul.

Cloud Integration

Consider how your load balancer will integrate with cloud services if you use or plan to use cloud infrastructure. Seamless integration facilitates hybrid or cloud-native deployments.

Testing and Validation

Setting up testing and validation during the pre-implementation stage will help you ensure an efficient load-balancing setup.

Test Environment

Set up a test environment that reflects your production setup. Testing your load balancer in a controlled environment allows you to fine-tune configurations and identify potential issues before going live. This can help ensure a smooth and error-free implementation.

Performance Testing

Conduct performance testing to validate that your load balancer can handle the expected traffic loads and distribute traffic efficiently across your servers.

Documentation and Support

Reviewing documentation and implementing robust support channels will further help enhance your load-balancing pre-setup.

Vendor Documentation

Review vendor documentation thoroughly. Understanding the features and limitations of your chosen load balancer helps in effective configuration and troubleshooting.

Support Channels

Ensure reliable support channels are available. Access to expert support is crucial for resolving any issues arising during or after the implementation. Support channels can also offer peace of mind that you have backup and support in emergencies.

Now that we have covered what a load balancer is, along with its algorithms, tools, benefits, and pre-implementation considerations, let’s go over how a load balancer is implemented.

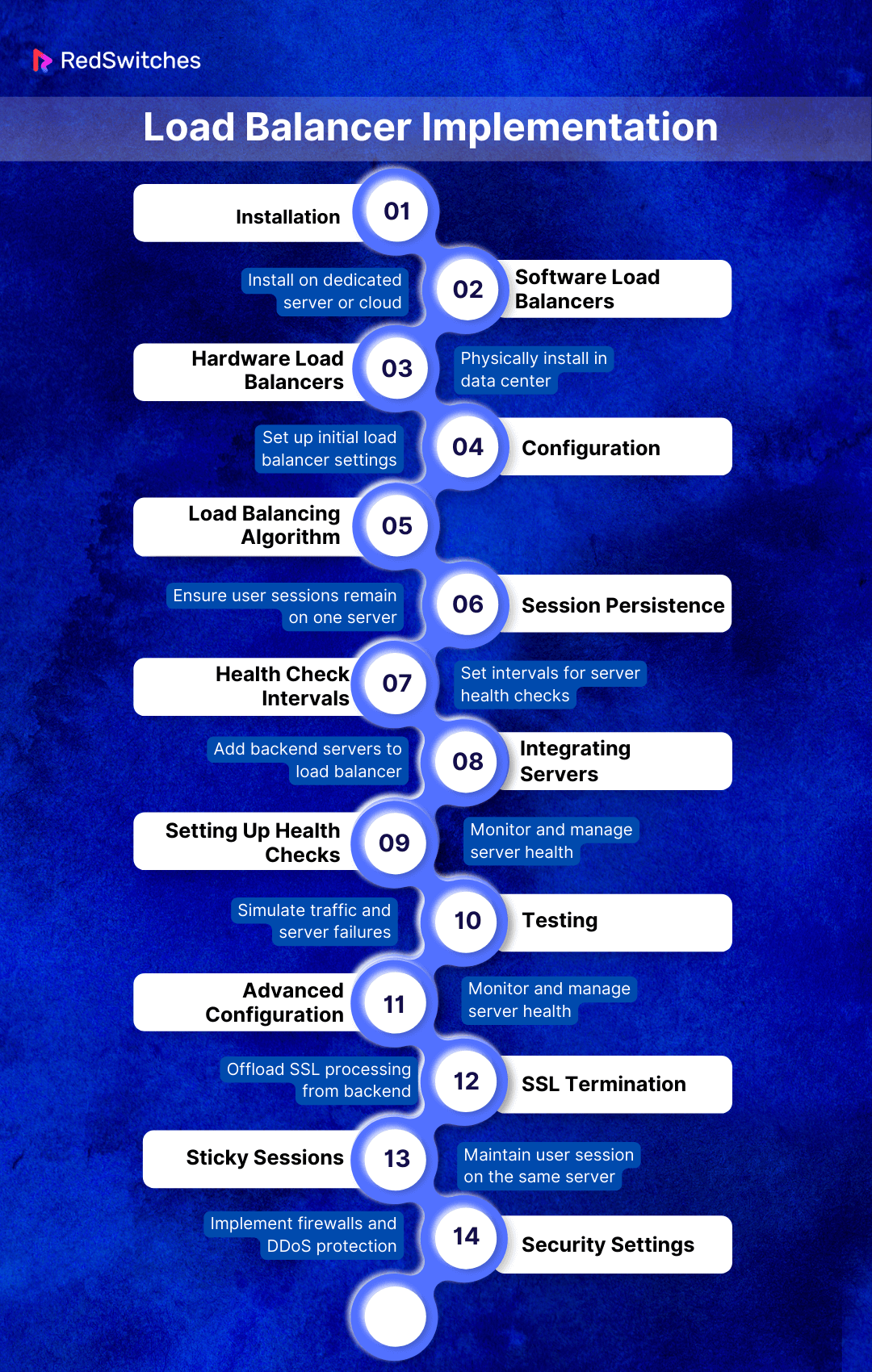

Load Balancer Implementation

Implementing a load balancer involves a series of critical steps, each contributing to your network infrastructure’s overall efficiency and reliability. Here’s a more detailed look at each phase in the load balancer implementation process.

Installation

The load-balancing implementation process begins with installation.

Software Load Balancers

For software-based solutions, the first step for load balancer implementation is installing the load-balancing software. This can be done on a dedicated server exclusively used for load balancing. It can also be set up within a virtual machine in a cloud infrastructure. Choosing between these depends on your requirements. It requires considering factors like traffic volume, budget, and desired control level.

Hardware Load Balancers

A hardware load balancer implementation involves physical setup within your data center. This includes connecting the device to your network and properly integrating it with your existing infrastructure. Hardware load balancers are known for their robustness and high performance, making them suitable for environments with high traffic demands.

Configuration

Configuring your load balancer is crucial in determining how traffic is distributed across your servers. Key settings include:

Load Balancing Algorithm

Choose an algorithm such as round robin, which distributes requests equally, or least connections, which favors servers with fewer active connections. The choice depends on your application’s nature and traffic patterns.

Session Persistence

This setting ensures that a user’s session remains on the same server for consistency in transactions or user experience.

Health Check Intervals

Define how frequently your load balancer checks the health of connected servers. This helps ensure they’re available to handle requests.

Integrating Servers

Adding your backend servers to the load balancer’s configuration is next. This step involves specifying the IP addresses and ports of the servers that will handle the incoming traffic. Proper integration ensures the load balancer can effectively distribute traffic and manage server health.
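Real load balancers each have their own configuration format, so the following is only a generic sketch of the information involved: backend addresses and ports, plus the algorithm and health check interval discussed above. All field names and values are hypothetical.

```python
from dataclasses import dataclass, field
import ipaddress

@dataclass(frozen=True)
class Backend:
    host: str
    port: int
    weight: int = 1

    def __post_init__(self):
        # ip_address() raises ValueError if the host is not a valid IP address.
        ipaddress.ip_address(self.host)
        if not 1 <= self.port <= 65535:
            raise ValueError(f"port out of range: {self.port}")
        if self.weight < 1:
            raise ValueError(f"weight must be at least 1: {self.weight}")

@dataclass
class PoolConfig:
    algorithm: str = "round_robin"
    health_check_interval_s: float = 5.0
    backends: list = field(default_factory=list)

# Hypothetical pool of two backends listening on port 8080.
config = PoolConfig(
    algorithm="least_connections",
    backends=[Backend("10.0.0.1", 8080), Backend("10.0.0.2", 8080, weight=2)],
)
print(config)
```

Validating addresses and ports when the configuration is loaded catches typos before the load balancer starts routing traffic.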

Setting Up Health Checks

Health checks are critical for maintaining the reliability of your service. They allow the load balancer to detect unavailable or underperforming servers. Configure health checks to regularly verify server status using methods like HTTP checks, TCP checks, or custom scripts. If a server fails a health check, the load balancer will stop sending traffic to it until it’s back online and passing health checks again.
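A single failed check does not always mean a server is down, so health checks are often configured with thresholds. The sketch below assumes a server is marked unhealthy after three consecutive failures and healthy again after two consecutive passes; the class name, the `fall` and `rise` parameter names, and the default values are illustrative choices.

```python
class HealthTracker:
    """Tracks one server's state from a stream of check results."""

    def __init__(self, fall=3, rise=2):
        self.fall = fall      # consecutive failures needed to mark unhealthy
        self.rise = rise      # consecutive passes needed to mark healthy again
        self.healthy = True
        self._streak = 0      # consecutive results that contradict the current state

    def record(self, check_passed):
        """Record one check result and return the resulting state."""
        if check_passed == self.healthy:
            # The result agrees with the current state, so reset the streak.
            self._streak = 0
        else:
            self._streak += 1
            threshold = self.rise if check_passed else self.fall
            if self._streak >= threshold:
                self.healthy = check_passed
                self._streak = 0
        return self.healthy

tracker = HealthTracker()
results = [tracker.record(ok) for ok in [False, False, False, True, True]]
print(results)
```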

Testing

Conduct thorough testing before your load balancer goes live to ensure it operates as expected. Simulate various traffic scenarios and potential server failures to evaluate how the load balancer manages traffic distribution and failover. This testing phase is crucial for identifying and rectifying any issues, ensuring a smooth deployment.

Advanced Configuration

Advanced configurations involve several aspects, including sticky sessions and SSL termination.

SSL Termination

Setting up SSL termination during load balancer implementation is essential for handling encrypted traffic. This setup decrypts incoming SSL requests at the load balancer level, reducing the encryption workload on your backend servers, which can significantly improve performance.

Sticky Sessions

Configure sticky sessions for applications requiring users to maintain a session on the same server. This is particularly important for applications that store session information locally on the server.

Security Settings

Enhancing the security of your load balancer is crucial. Implement measures like firewalls to control incoming and outgoing traffic and DDoS protection to guard against distributed denial-of-service attacks. These security settings help protect your network from cyber threats while ensuring your load balancer remains a robust gateway for traffic distribution.

Also Read: Horizontal Vs Vertical Scaling: 5 Key Differences.

Load Balancer Implementation: Maintenance and Monitoring

Once the load balancer is in place, ongoing maintenance and monitoring are essential to keep it running smoothly and efficiently. Here are a few steps you can take:

Regular Updates

Ensuring that your load balancer’s software or firmware remains current is crucial for maintaining system integrity and security after load balancer implementation. Regular updates often include patches for vulnerabilities, performance enhancements, and new features that can improve efficiency.

Action Steps

Set up a schedule for regular checks on software or firmware updates from the vendor. Automate the update process if possible, and always test updates in a controlled environment before applying them to your production system.

Performance Monitoring

Keeping a close eye on how your load balancer performs under various loads is key to preemptively identifying and resolving issues. Monitoring traffic distribution, server health, and response times can provide insights into potential bottlenecks or inefficiencies.

Action Steps

Use built-in monitoring tools or third-party solutions to track the performance metrics of your load balancer. Set up alerts for anomalies or thresholds that indicate potential problems, allowing swift action to rectify issues.
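As a hedged example of threshold-based alerting, the sketch below computes the 95th-percentile response time and the error rate from collected samples. The function names and the default thresholds (500 ms and 1%) are assumptions, not recommendations.

```python
import statistics

def p95(samples_ms):
    """95th percentile of response times; needs at least two samples."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples_ms, n=20)[-1]

def check_alerts(samples_ms, error_count, request_count,
                 max_p95_ms=500.0, max_error_rate=0.01):
    """Return a list of human-readable alerts; an empty list means all clear."""
    alerts = []
    latency = p95(samples_ms)
    if latency > max_p95_ms:
        alerts.append(f"p95 latency {latency:.0f} ms exceeds {max_p95_ms:.0f} ms")
    error_rate = error_count / request_count if request_count else 0.0
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    return alerts

# Hypothetical window: most requests are fast, a few are very slow.
samples = [20.0] * 95 + [900.0] * 5
print(check_alerts(samples, error_count=5, request_count=100))
```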

Scaling

Your load-balancing infrastructure must scale as your user base or application demand expands. This might mean adding more backend servers to distribute the load further. It may also require you to tweak the load balancer’s settings to optimize resource allocation.

Action Steps

Review your traffic patterns and growth trends regularly to anticipate scaling needs. Develop a scalable architecture that allows for easy addition of servers or adjustment of resources. Consider auto-scaling solutions that dynamically adjust resources based on current demand.
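A simple capacity calculation can support these reviews. The sketch below estimates how many servers are needed so that each runs at a target utilization; the 70% target, the two-server minimum, and the traffic figures are assumptions for illustration.

```python
import math

def desired_server_count(requests_per_second, capacity_per_server,
                         target_utilization=0.7, min_servers=2):
    """Servers needed so each stays at or below the target utilization."""
    usable_capacity = capacity_per_server * target_utilization
    needed = math.ceil(requests_per_second / usable_capacity)
    # Keep a floor so that one failure never leaves the pool empty.
    return max(min_servers, needed)

# Hypothetical numbers: 1,500 requests/s, each server handles about 500 requests/s.
print(desired_server_count(1500, 500))
```

An auto-scaling system applies the same idea continuously, comparing the desired count with the current pool size and adding or removing servers to match.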

Also Read: Exploring The Best Cloud Application Hosting For Your Apps.

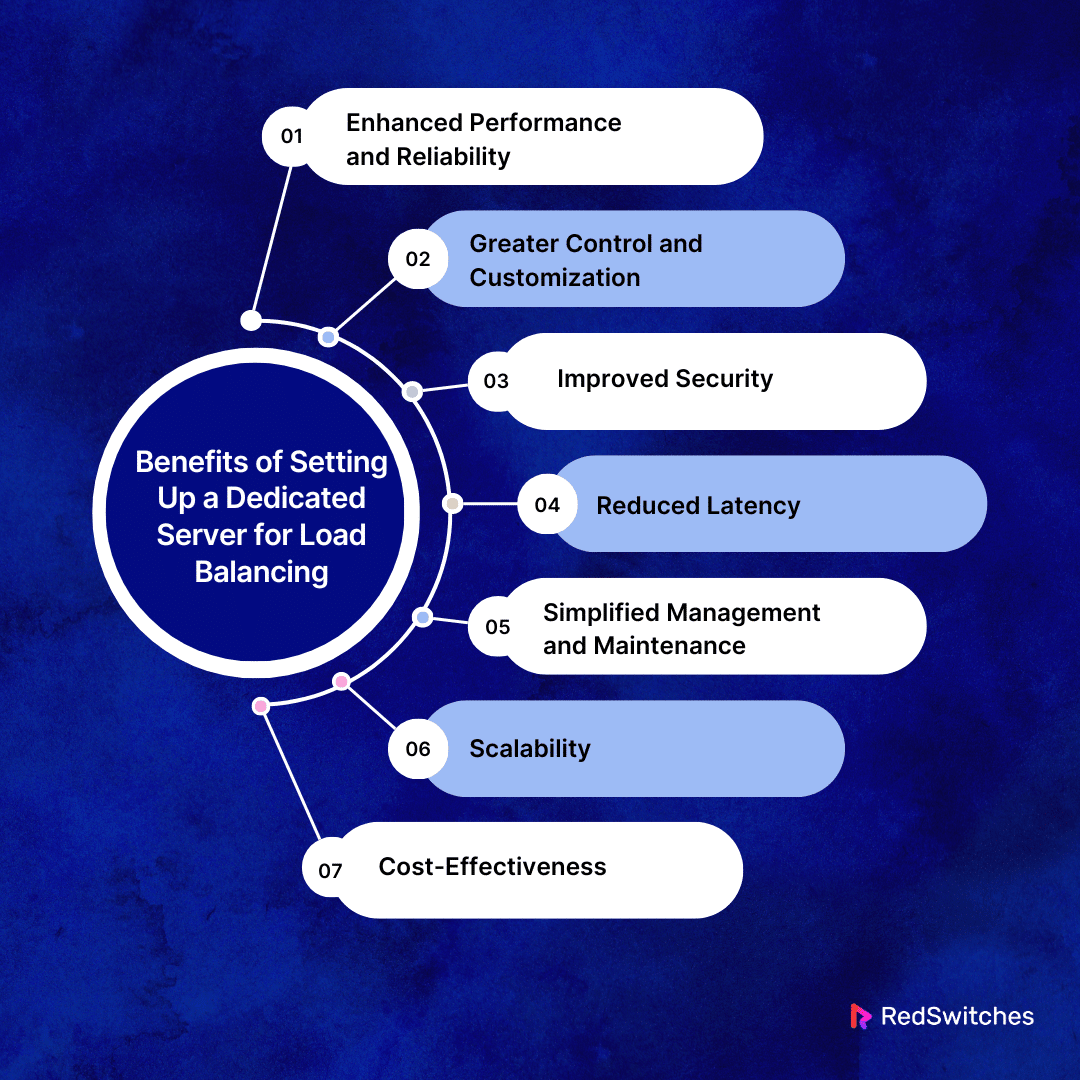

Benefits of Setting Up a Dedicated Server for Load Balancing

While various load-balancing methods exist, a dedicated server offers distinct advantages. Here are a few advantages of setting up this server type for load balancing:

Enhanced Performance and Reliability

A dedicated server for load balancing devotes all of its resources to distributing network traffic. This specialization supports high performance, because the server can manage large volumes of traffic without the performance degradation that might occur on shared or multi-purpose servers.

These servers usually come with high-quality hardware and robust infrastructure. This further enhances the reliability and uptime of your services.

Greater Control and Customization

Dedicated servers allow you complete control over the load-balancing environment. This level of control allows for precise configuration of load balancing rules, algorithms, and health checks.

This helps ensure traffic is distributed according to your specific needs and priorities. Whether you need to implement custom health checks, IP-based routing, or session persistence, these servers allow you to tailor everything to your requirements.

Improved Security

A dedicated server for load balancing can amplify your security posture. The dedicated load balancer can serve as a strategic point to implement advanced security measures, like SSL offloading and firewall rules, by controlling the entry point to your network. This centralized security control facilitates management and provides a defense against potential threats.

Reduced Latency

You can significantly lower latency by placing a dedicated server for load balancing in a data center close to your user base. This proximity ensures that user requests are quickly routed to the nearest available server. It minimizes delay and enhances the overall user experience. In a market where even milliseconds matter, reducing latency can give you a competitive edge.

Simplified Management and Maintenance

Managing dedicated servers for load balancing can be simpler than dealing with a distributed or cloud-based solution. With a single platform, administrative tasks like updates, patches, and configurations are simplified.

Many server providers also offer management services. This further eases the maintenance burden and allows you to focus on your core business activities.

Scalability

When your website or application experiences increased user traffic, your load-balancing setup must adapt to meet these new demands. Dedicated servers allow you to scale up by upgrading or adding hardware resources whenever necessary. Such adaptability helps your load-balancing system keep pace with your business growth.

Cost-Effectiveness

Although the initial investment in dedicated servers might seem higher than other solutions, the long-term benefits can offer cost savings. Improved performance and reliability can enhance customer satisfaction and loyalty, which can potentially increase profit. The efficiency and scalability of these servers can also reduce the need for additional hardware or services, lowering IT expenses.

Also Read: What Is Provisioning In Cloud Computing.

Conclusion – Load Balancer Implementation

Mastering load balancer implementation can help you strengthen your IT infrastructure against downtime and traffic surges. The above tutorial aims to equip you with the knowledge and skills to enhance your network’s efficiency and reliability through effective load-balancing strategies.

RedSwitches is an ideal partner for those seeking load balancer implementation support. The provider offers robust solutions tailored to modern businesses’ demanding needs. RedSwitches can serve as your foundation for deploying sophisticated load-balancing systems.

RedSwitches can help you achieve the smooth, high-performing digital environment your business deserves. Explore our website now, or contact us to learn how we can elevate your load-balancing strategy. Take this necessary step to push your business toward unparalleled digital resilience.

FAQs

Q. How do you implement an application load balancer?

To implement an application load balancer:

- Pick a load-balancing solution supporting application-level (Layer 7) traffic management.

- Configure the load balancer to distribute traffic based on URLs, HTTP headers, or other application-specific data.

- Define the routing rules and health checks to ensure traffic is directed to healthy servers.
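A URL-based routing rule can be sketched as a prefix lookup. The path prefixes and pool names below are hypothetical, and the longest-matching-prefix rule is one reasonable convention among several.

```python
# Hypothetical routing rules: URL path prefix -> backend pool name.
routes = [
    ("/api/", "api-pool"),
    ("/api/v2/", "api-v2-pool"),
    ("/static/", "static-pool"),
]

def choose_pool(path, routes, default="web-pool"):
    """Pick the pool whose prefix matches the path; the longest prefix wins."""
    matches = [(prefix, pool) for prefix, pool in routes if path.startswith(prefix)]
    if not matches:
        return default
    return max(matches, key=lambda match: len(match[0]))[1]

for path in ["/api/users", "/api/v2/orders", "/static/app.css", "/index.html"]:
    print(path, "->", choose_pool(path, routes))
```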

Q. How do you implement a load balancer from scratch?

To implement a load balancer from scratch:

- Select the right hardware or software platform.

- Install the load-balancing software.

- Configure the network settings.

- Define your balancing algorithms and set up health checks for backend servers. This will help maintain optimal performance and reliability.

Q. How do you implement load balancing on a server?

Load balancer implementation on a server demands the following steps:

- Install load-balancing software or use the built-in load-balancing features of your server’s operating system.

- Configure the load balancer to distribute incoming requests among multiple backend servers based on your chosen algorithm.

- Regularly monitor performance and adjust settings to ensure balanced traffic distribution.

Q. What is a load balancer?

A load balancer is a device or software application that distributes network traffic across many servers. It ensures no single server becomes overwhelmed, thus enhancing the system’s overall performance.

Q. What are the different types of load balancers?

There are several types of load balancers, including global server load balancers, classic load balancers, and elastic load balancers. Each type comes with its own use cases and features.

Q. How does a load balancer work?

When a client request reaches the load balancer, it forwards the request to one of the servers according to the load-balancing method in use, such as round-robin, least connections, or IP hash. This ensures efficient utilization of resources.

Q. What is DNS load balancing?

DNS load balancing involves using the Domain Name System to distribute client requests across many server resources. This is done based on geographic location, server health, and server load.

Q. What is cloud load balancing?

Cloud load balancing refers to distributing network traffic within a cloud environment. It manages virtual server and resource demands to optimize performance and prevent overload.

Q. What are the key features of a load balancer?

Key features include traffic routing, a choice of load-balancing methods, and health checks that monitor backend servers. Many load balancers also offer session persistence and SSL offloading, contributing to an efficient system.

Q. What are layer 4 and layer 7 load balancing?

Layer 4 load balancing operates at the transport layer, forwarding traffic based on network-level information such as IP addresses and TCP or UDP ports. Layer 7 load balancing functions at the application layer. It makes routing decisions based on application-specific data, such as URLs and HTTP headers.

Q. How do load balancers contribute to system scalability?

Load balancers are crucial in system scalability. They distribute client requests across many servers. This enables the system to handle increased traffic and maintain performance while adding or removing server instances.

Q. What are some popular load balancing methods?

Popular load-balancing methods include round-robin, least connections, IP hash, weighted round-robin, and more. Each method distributes traffic to meet specific requirements and optimizes resource utilization.