Connectivity is the lifeblood of modern applications and services. As organizations adopt cloud-native architectures and distributed systems, fast and dependable communication between components becomes critical. This is where Cloud Native Remote Procedure Call services come in.

RPC services address this challenge by letting one part of an application invoke methods in another part, regardless of where that part physically runs. This simplifies the development and maintenance of distributed systems and keeps data flowing smoothly between services, which improves overall system performance and reliability.

This blog post will delve into Cloud Native Remote Procedure Call services, exploring their importance, benefits, and applications. We’ll also discuss why some RPC services have become the top choices for many organizations looking to improve connectivity in their cloud-native ecosystems.

Table of Contents

- What is Remote Procedure Call (RPC)?

- Types of RPC

- Remote Procedure Call Example

- What is Cloud Native Remote Procedure Call?

- Remote Procedure Call: History and Origins

- Key Features of Cloud-Native RPC Services

- Advantages of Cloud Native Remote Procedure Call Services

- Disadvantages of Cloud-Native Remote Procedure Call Services

- Implementing RPC in Cloud Native Applications

- Steps in a Remote Procedure Call

- Challenges in Implementing RPC

- Is RPC Better Than REST?

- Popular Cloud Native Remote Procedure Call Services

- Which Remote Procedure Call Service to Choose?

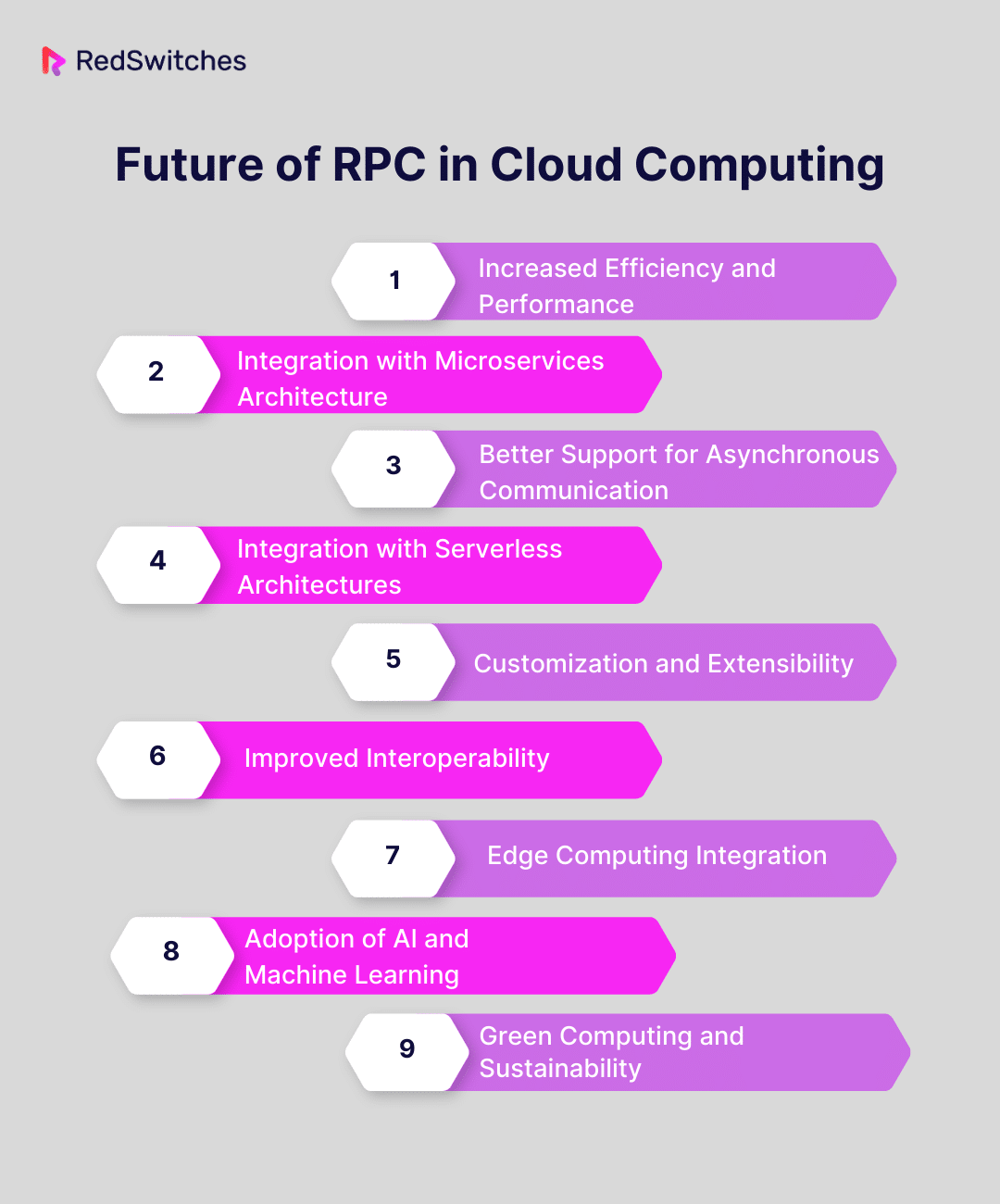

- Future of RPC in Cloud Computing

- Increased Efficiency and Performance

- Integration with Microservices Architecture

- Better Support for Asynchronous Communication

- Integration with Serverless Architectures

- Customization and Extensibility

- Improved Interoperability

- Edge Computing Integration

- Adoption of AI and Machine Learning

- Green Computing and Sustainability

- Conclusion

- FAQs

What is Remote Procedure Call (RPC)?

Credits: FreePik

Remote Procedure Call, or RPC, is a protocol used in computer networks. It allows a program to execute a subroutine or function in a different address space, typically on another computer on a shared network. RPC is one of the foundational building blocks of distributed, client-server applications.

RPC abstracts the complexities of network communication. It makes a call to a remote server look like a local function call within a program. When a client makes an RPC call, it provides the parameters needed for the remote procedure. This request is sent over the network to the server hosting the procedure.

Upon receiving the request, the server executes the procedure using the supplied parameters. The execution results are then sent back to the client across the network. On the client’s side, the RPC system behaves like a local procedure call, waiting for the procedure to complete and return the results to the calling program.

RPC systems typically handle data serialization and deserialization, error handling, and the details of network communication. This makes RPC a convenient and efficient way to implement client-server communication, especially in distributed computing environments where procedures can run on different hardware and software platforms.
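To make the serialization step concrete, the sketch below uses the marshaling helpers in Python's standard `xmlrpc.client` module (the same module used in the example later in this post) to show roughly what an XML-RPC call and its response look like on the wire. It only encodes and decodes messages; no network is involved.

```python
import xmlrpc.client

# Marshal a call to a procedure named "add" with two integer arguments.
request_xml = xmlrpc.client.dumps((5, 3), methodname="add")
print(request_xml)  # an XML <methodCall> document

# The receiving side unmarshals the XML back into Python values.
params, method = xmlrpc.client.loads(request_xml)
print(method, params)  # add (5, 3)

# Responses are marshaled the same way, flagged as a method response.
response_xml = xmlrpc.client.dumps((8,), methodresponse=True)
result, _ = xmlrpc.client.loads(response_xml)
print(result[0])  # 8
```

Other RPC systems use different formats (often binary), but the idea is the same: both sides agree on an encoding so values survive the trip between machines.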


Types of RPC

Below are five common types of remote procedure call (RPC):


Synchronous RPC

Synchronous RPC works much like a standard function call, except that the function resides on a remote server. The client sends a request and blocks until it receives a response. This simple model mirrors traditional programming.

While its simplicity and familiarity are advantages, extended server response time can lead to resource underutilization. It can also complicate the client design in scenarios requiring multiple simultaneous server interactions.


Asynchronous RPC

Asynchronous Remote Procedure Call is similar to event-driven programming. The client initiates a request and continues its own work without waiting for a response. Once the server's response is ready, a callback function is triggered to handle the result.

This approach is more efficient when it comes to resource utilization and allows for a more responsive client. However, it introduces complexity in handling the asynchronous nature of communication, especially when dealing with error handling and ensuring the consistency of the application state.
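A minimal sketch of the callback pattern, using Python's `concurrent.futures`. The function `remote_add` is a hypothetical stand-in for a network call (it just sleeps to simulate latency); a real client library would supply its own asynchronous API.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def remote_add(x, y):
    # Hypothetical stand-in for a remote call: sleep simulates network latency.
    time.sleep(0.2)
    return x + y


received = []


def on_result(future):
    # Callback invoked once the "response" arrives.
    received.append(future.result())
    print("callback got:", future.result())


with ThreadPoolExecutor(max_workers=2) as pool:
    future = pool.submit(remote_add, 5, 3)
    future.add_done_callback(on_result)
    # The client keeps working instead of blocking on the call.
    print("request sent, client continues...")

# Leaving the with-block waits for outstanding work, so the callback has run.
print(received)  # [8]
```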


Batch RPC

Batch Remote Procedure Call is designed to optimize network communication. Instead of sending requests individually, the client groups multiple requests into a single network call. This is useful when each request is lightweight and the per-call network overhead is large relative to the work being requested.

By reducing the number of network calls, batch RPC increases efficiency. However, it can add latency to individual requests, since they must wait for the batch to be assembled and sent. It also complicates matching the batched responses to the client-side operations that issued them.
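JSON-RPC 2.0 is one protocol with built-in batching: the client sends an array of request objects and receives an array of responses. The sketch below imitates that shape in-process. `handle_batch` and the `PROCEDURES` table are hypothetical, and the handler is simplified (it assumes every entry is a well-formed request with an `id`, so it ignores notifications).

```python
import json

# Hypothetical server-side procedures.
PROCEDURES = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
}


def handle_batch(payload: str) -> str:
    """Process a JSON-RPC 2.0 style batch: one message in, one message out."""
    responses = []
    for req in json.loads(payload):
        func = PROCEDURES.get(req["method"])
        if func is None:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            responses.append({"jsonrpc": "2.0", "id": req["id"],
                              "error": {"code": -32601, "message": "Method not found"}})
        else:
            responses.append({"jsonrpc": "2.0", "id": req["id"],
                              "result": func(*req["params"])})
    return json.dumps(responses)


# Client side: three calls travel in a single network message.
batch = [
    {"jsonrpc": "2.0", "id": 1, "method": "add", "params": [5, 3]},
    {"jsonrpc": "2.0", "id": 2, "method": "mul", "params": [4, 6]},
    {"jsonrpc": "2.0", "id": 3, "method": "div", "params": [1, 0]},
]
reply = json.loads(handle_batch(json.dumps(batch)))

# Match responses to requests by id, since a server may return them in any order.
by_id = {r["id"]: r for r in reply}
print(by_id[1]["result"], by_id[2]["result"], by_id[3]["error"]["code"])  # 8 24 -32601
```

Matching on `id` is the "synchronizing batched responses" work mentioned above: one failed entry does not fail the whole batch, so the client must inspect each response individually.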


Concurrent RPC

Concurrent Remote Procedure Call involves making multiple RPC calls simultaneously, without pausing for each to complete before beginning the next. This type is useful when the server can manage multiple requests in parallel, improving throughput and resource utilization.

The main benefit is the significant performance gain in scenarios where the server can process requests in parallel. The drawback is the added complexity in managing concurrency, both on the client and server side, which can introduce challenges in synchronization and maintaining data integrity.
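The sketch below shows the concurrent pattern with `asyncio.gather`, which starts every call before awaiting any of them. `remote_square` is a hypothetical stand-in for an async RPC stub; `asyncio.sleep` simulates network latency.

```python
import asyncio
import time


async def remote_square(n: int) -> int:
    # Hypothetical remote call; asyncio.sleep stands in for network latency.
    await asyncio.sleep(0.1)
    return n * n


async def main() -> list:
    start = time.perf_counter()
    # Issue all calls at once instead of awaiting each before starting the next.
    results = await asyncio.gather(*(remote_square(n) for n in range(1, 6)))
    elapsed = time.perf_counter() - start
    # The five 0.1 s waits overlap, so this takes roughly 0.1 s, not 0.5 s.
    print(results, f"{elapsed:.2f}s")
    return results


results = asyncio.run(main())
```

`gather` returns results in the order the calls were passed, not the order they finished, which removes one source of synchronization bugs on the client side.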


Stateful vs Stateless RPC

In a stateful Remote Procedure Call, the server retains state information across multiple requests from the same client. In stateless RPC, by contrast, each request is treated independently, and the server retains no state from previous interactions.

Stateful RPC suits scenarios that require continuity between requests, enabling a more personalized and coherent interaction. However, it consumes more server resources and is harder to scale. Stateless RPC is simpler and more scalable, making it the better fit when each request can be processed independently, though it may be unsuitable for complex interactions that require context across calls.
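A minimal sketch of the difference, using a hypothetical shopping-cart service. The stateful version keeps each session's cart in server memory, so follow-up calls must reach the same server (or a shared store). The stateless version expects the client to send the whole cart every time, so any replica can answer.

```python
# Hypothetical server-side reference data (not per-client state).
PRICES = {"apple": 0.5, "pear": 0.75}


class StatefulCartService:
    """Stateful: the server keeps each client's cart in memory between calls."""

    def __init__(self):
        self._carts = {}  # session_id -> list of items

    def add_item(self, session_id: str, item: str) -> int:
        cart = self._carts.setdefault(session_id, [])
        cart.append(item)
        return len(cart)

    def total(self, session_id: str) -> float:
        return sum(PRICES[item] for item in self._carts.get(session_id, []))


def stateless_total(items: list) -> float:
    """Stateless: each request carries all the context needed to answer it."""
    return sum(PRICES[item] for item in items)


service = StatefulCartService()
service.add_item("session-a", "apple")
count = service.add_item("session-a", "pear")  # the server remembered "apple"
print(count, service.total("session-a"))        # 2 1.25

# Any replica could answer this call, because nothing is remembered between calls.
print(stateless_total(["apple", "pear"]))        # 1.25
```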

Remote Procedure Call Example

Below is a simple example of a Remote Procedure Call (RPC) using Python’s xmlrpc library. In this example, we’ll create a server that exposes a function and a client that calls that function remotely.

Server (server.py):

```python
import xmlrpc.server

# Create a simple function to be exposed via RPC
def add_numbers(x, y):
    return x + y

# Create an RPC server and expose the function under the name "add"
server = xmlrpc.server.SimpleXMLRPCServer(("localhost", 8000))
server.register_function(add_numbers, "add")

# Start the server (blocks and handles requests until interrupted)
print("RPC server is running on port 8000...")
server.serve_forever()
```

Client (client.py):

```python
import xmlrpc.client

# Create an RPC proxy to connect to the server
proxy = xmlrpc.client.ServerProxy("http://localhost:8000/")

# Call the remote function
result = proxy.add(5, 3)

# Print the result
print("Result of adding 5 and 3:", result)
```

Here’s how it works:

- The server defines a simple function, add_numbers, that adds two numbers.

- The server creates an RPC server using SimpleXMLRPCServer. It registers the add_numbers function with the name add.

- The server begins listening on port 8000.

- The client creates an RPC proxy using ServerProxy to link to the server.

- The client calls the remote function, registered on the server as add, with arguments 5 and 3.

- The client prints the result received from the server.

When you run the server and client, the client will make a remote call to the server’s add function, and you will see the result printed on the client side, which should be “Result of adding 5 and 3: 8.”
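The example above needs two terminals. For a quick self-contained check, the sketch below runs the same server in a background thread and calls it from the same script. Binding to port 0 (so the OS picks a free port), disabling request logging, and the unregistered `subtract` call are choices made for this sketch; the last one shows how XML-RPC reports server-side errors to the client as an `xmlrpc.client.Fault`.

```python
import threading
import xmlrpc.client
import xmlrpc.server


def add_numbers(x, y):
    return x + y


# Port 0 asks the OS for any free port, which is handy for a self-contained test.
server = xmlrpc.server.SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add_numbers, "add")
port = server.server_address[1]

# Serve in a background thread so the client can run in the same script.
thread = threading.Thread(target=server.serve_forever, daemon=True)
thread.start()

with xmlrpc.client.ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
    result = proxy.add(5, 3)
    print("Result of adding 5 and 3:", result)

    # Calling a procedure the server never registered comes back as a Fault.
    try:
        proxy.subtract(5, 3)
        fault_seen = False
    except xmlrpc.client.Fault as err:
        fault_seen = True
        print("Fault:", err.faultString)

# Stop the serve_forever loop and release the listening socket.
server.shutdown()
server.server_close()
```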


What is Cloud Native Remote Procedure Call?

Cloud Native Remote Procedure Call refers to implementing and using RPC in a cloud-native setting, which commonly involves containerized applications, microservices architectures, and dynamic orchestration. In such a setup, RPC enables communication between an application's independently deployable and scalable components.

Services are often distributed across different environments, containers, and geographical locations in cloud-native ecosystems. Cloud Native RPC is designed to work smoothly in distributed systems, enabling services to communicate over the network efficiently, reliably, and scalably.

This communication is essential to microservices architectures, where services may be written in different languages but must work together as part of a larger application.

Remote Procedure Call: History and Origins

Below, we explore the history and origin of Remote Procedure Call:

The Birth of RPC

- Origins in the 1970s: The concept of RPC traces its origins back to the 1970s, emerging as a response to the growing complexity of distributed computing systems.

- Formalized by Bruce Jay Nelson: Bruce Jay Nelson is credited with formalizing the concept of RPC in his 1981 dissertation at Carnegie Mellon University. His work laid the foundational principles of RPC as a method for inter-process communication.

The Need for Efficiency

- Simplification of Distributed Computing: The primary motivation behind RPC was to simplify the process of executing code on a different computer in a network, making it as easy as a local procedure call.

- Early Challenges: The initial challenges were to create a smooth experience where network operations were abstracted, allowing developers to concentrate on the main logic of their applications.

RPC in Distributed Systems

- Abstracting Network Complexities: Remote Procedure Calls played an important role in abstracting the complexities of network communication, thereby simplifying the development of distributed systems.

- Early Implementations and Use Cases: One of the earliest applications of Remote Procedure Call was in the Network File System (NFS) developed by Sun Microsystems. NFS allowed a computer to access files via a network as effortlessly as if they were on its local storage.

RPC in the Pre-Cloud Era

- Expansion in the 1990s: The growth of the internet and of distributed computing through the 1990s provided fertile ground for the expansion of RPC. It became a key technology in enabling the early internet's interconnected systems.

- Standardization Efforts: Efforts were made to standardize RPC technologies, creating various protocols and frameworks.

Diverse Implementations

- Variations of RPC: This era saw the emergence of various forms of Remote Procedure Call, including the Distributed Computing Environment (DCE/RPC) by the Open Software Foundation and Sun’s Open Network Computing (ONC) RPC.

- Addressing Different Needs: Each implementation addressed specific network communication challenges and use cases, showcasing the versatility of RPC in different environments.

Challenges

- Handling Network Issues: With the expansion of networks, RPC had to evolve to address latency, fault tolerance, and efficient handling of network failures.

- Security in Focus: The increasing reliance on RPC for critical operations brought security concerns to the forefront, leading to the development of more secure and robust RPC mechanisms.

Transition to Cloud-Native RPC Services

- A Paradigm Shift: The 2000s marked a major shift towards cloud computing. This fundamentally transformed how remote procedure calls were used and implemented in distributed systems.

- Adapting to Cloud Requirements: The cloud era demanded RPC solutions that were more scalable and reliable and that could integrate smoothly with the emerging cloud infrastructure.

Innovations in Cloud-Native RPC

- Embracing Microservices and Containerization: The cloud-native movement, emphasizing microservices and containerization, brought a renaissance in RPC technologies. This era saw the rise of frameworks like gRPC by Google and Apache Thrift. These frameworks were designed to offer efficient, cross-language RPC mechanisms suited for modern cloud environments.

- Focus on Performance: Cloud-native RPC services were engineered to reduce latency, improve communication efficiency, and support many programming environments. These features made them stand out from their predecessors.


Key Features of Cloud-Native RPC Services

Below are the key features of cloud-native RPC services:

Smooth Integration with Cloud Ecosystems

One of the key features of cloud-native RPC services is their ability to integrate smoothly with the cloud ecosystem. These services are designed to work effortlessly with container orchestrators like Kubernetes, service meshes like Istio, and cloud-based monitoring tools.

This integration ensures that RPC services can be easily deployed, managed, and monitored within the cloud environment, providing a smooth workflow for developers.

Scalability and Performance Optimization

Cloud-native RPC (remote procedure call) services are built for scalability. They are designed to handle the scaling requirements of modern applications, absorbing increased workloads without compromising performance.

This is essential in cloud environments, where resource allocation and demand fluctuate rapidly. These services are also optimized for performance; gRPC, for example, supports client-side load balancing across multiple server instances.

Support for Multiple Programming Languages

Another significant feature is the support for multiple programming languages. Cloud-native RPC frameworks like gRPC offer cross-language support. This means developers can implement services in various languages, including Java, Go, Python, and more, which adds flexibility and makes it easier to integrate with existing systems and workflows.

Improved Security Features

Security is vital when it comes to cloud-native RPC services. These services provide security features such as transport encryption (TLS), token-based authentication, and authorization mechanisms. This keeps the data transferred between client and server secure, which is crucial in a cloud environment where data often traverses public networks.

Efficient Communication Protocols

Cloud-native RPC services leverage efficient communication protocols like HTTP/2, which improves on HTTP/1.1 with binary framing, header compression, and multiplexing of many concurrent requests over a single connection. Efficient protocols make communication between services faster and more reliable.

Microservices-Friendly

Cloud-native RPC frameworks are designed to complement microservices architectures. They support the development of lightweight, independent, and loosely coupled services that communicate over well-defined APIs. This is integral in cloud-native settings, where applications are often broken down into microservices.

Developer-Friendly Interface

These RPC services offer a developer-friendly interface with features like auto-generated client libraries, comprehensive documentation, and easy-to-use APIs. This reduces the learning curve and development time, enabling easier, faster, and more efficient development of applications.

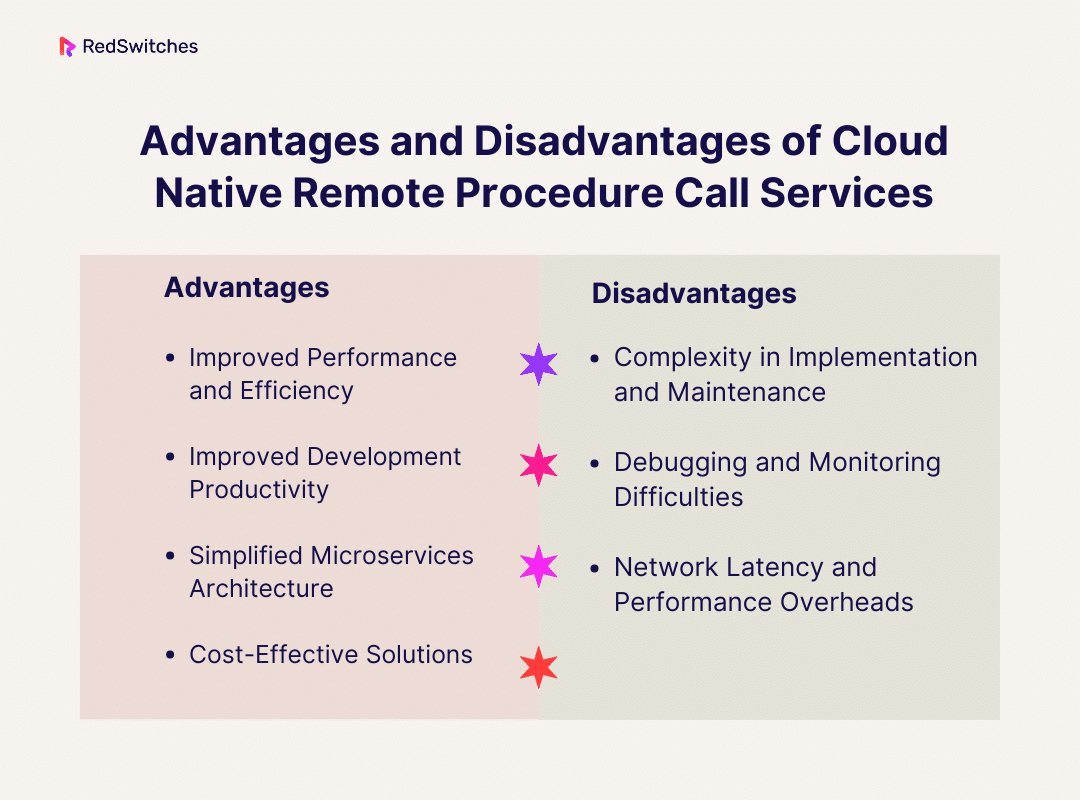

Advantages of Cloud Native Remote Procedure Call Services

Below are the key advantages of Cloud-native Remote Procedure Call Services:

Improved Performance and Efficiency

One of the most striking benefits of cloud-native RPC services is the performance boost they offer. RPC mechanisms are designed to be lightweight and fast, providing more efficient communication between different services or microservices in a cloud environment.

This efficiency is especially advantageous in high-load systems, where every millisecond of response time counts. Cloud-native RPC services such as gRPC, developed by Google, use the HTTP/2 protocol, which multiplexes many streams over a single connection, reducing latency and connection overhead.

Improved Development Productivity

Cloud-native RPC services simplify development by abstracting away the underlying network protocols, so developers can focus on business logic instead of transport details. This abstraction reduces the time and effort needed to build and maintain applications.

Simplified Microservices Architecture

The microservices architecture, a staple in cloud-native development, is greatly complemented by RPC services. They provide a standardized way for services to communicate, regardless of where they are hosted or how they are implemented. This standardization is crucial in a microservices ecosystem, where services often need to interact with each other across different environments.

Cost-Effective Solutions

The use of RPC services in a cloud-native setup can be cost-effective. The efficiency and speed of RPC protocols mean that fewer computational resources are required, leading to lower operational costs. Moreover, the scalability and modular nature of RPC-based applications allow for more precise resource allocation, avoiding over-provisioning and thus reducing costs.


Disadvantages of Cloud-Native Remote Procedure Call Services

Below are the disadvantages of cloud-native remote procedure call services:

Complexity in Implementation and Maintenance

One of the top challenges with cloud-native remote procedure call services is their implementation and maintenance complexity. Unlike traditional monolithic architectures, cloud-native applications are distributed and consist of multiple, often loosely coupled services. Implementing RPC in such environments requires careful planning and a deep understanding of network protocols and communication patterns.

Maintaining these services over time, especially as the application scales and evolves, can be challenging. This complexity often demands skilled personnel and can lead to increased costs and resource demands.

Debugging and Monitoring Difficulties

Debugging and monitoring distributed systems that use RPC can be considerably more challenging than in traditional setups. An application’s components might be spread across various services and hosts in a cloud-native environment. When an issue arises, pinpointing the exact source of the problem—be it in the network, the service, or the RPC implementation itself—can be daunting.

This complexity is compounded by the asynchronous nature of many RPC calls, making it difficult to track the data flow and understand the system’s state at any given point.

Network Latency and Performance Overheads

While RPC enables communication across different services and locations, it also introduces network latency. Every RPC call involves a network round trip, which costs at least hundreds of microseconds within a data center (and far more across regions), compared with nanoseconds for a function call within a single process.

This latency can degrade the application's performance, especially if the system makes frequent or complex RPC calls. Serializing and deserializing data for each call adds further overhead.

Implementing RPC in Cloud Native Applications

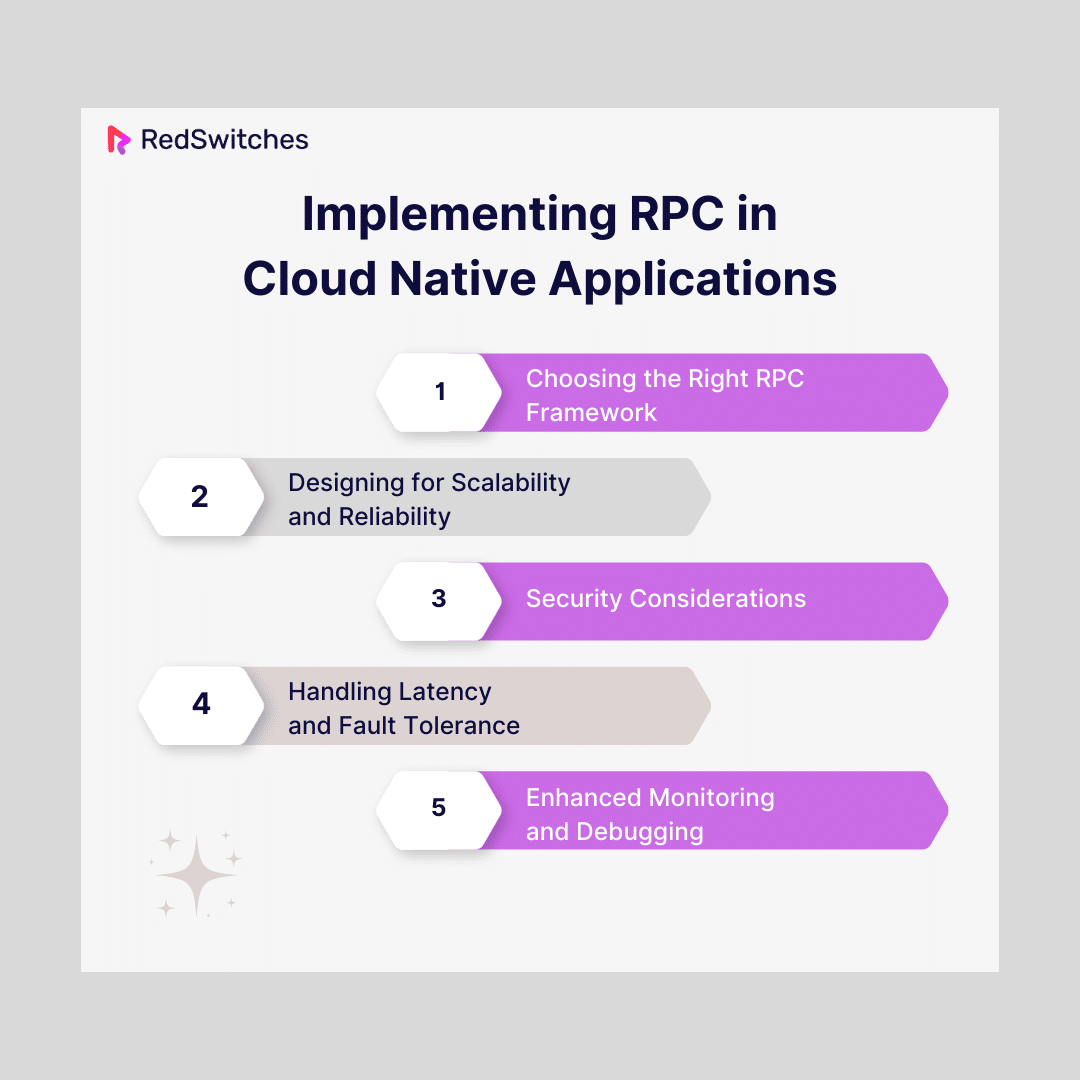

Below are a few steps involved in implementing Remote Procedure Call in cloud-native applications:

Choosing the Right RPC Framework

Selecting the most suitable Remote Procedure Call framework is a foundational step in developing cloud-native applications. While gRPC, Apache Thrift, and JSON-RPC are prominent choices, the decision should align with the application’s specific needs:

- Performance Optimization: Consider frameworks that optimize data transmission speeds and reduce latency.

- Language Support: Ensure the chosen RPC framework supports the programming languages used in your application.

- Compatibility: The Remote Procedure Call framework should integrate smoothly with existing systems and microservices. Verify if it works with the cloud infrastructure and tech stack you currently have.

- Community and Support: A framework with good community support and regular updates is crucial. This ensures access to knowledge and resources for troubleshooting and enhancements.

- Protocol Flexibility: Some applications may benefit from the flexibility of Apache Thrift, which supports several serialization protocols and transports, or of JSON-RPC, which is transport-agnostic.

Designing for Scalability and Reliability

When integrating RPC into cloud-native applications, scalability and reliability are paramount. Beyond stateless design and horizontal scaling, several other factors contribute to a robust system:

- Efficient Load Balancing: Implement load balancing strategies that distribute RPC calls evenly across servers so that no single instance becomes overloaded or a bottleneck.

- Dynamic Service Discovery: Use service discovery mechanisms to dynamically locate networked services. This is especially important in cloud environments where services frequently change IPs or ports.

- State Management: While RPC calls should be stateless, efficiently managing state data (either client-side or using external services like Redis) is crucial for maintaining continuity in user experience.

- Failover and Redundancy Plans: Develop comprehensive failover strategies and maintain redundant systems to handle server or network failures seamlessly, ensuring uninterrupted service.

- Rate Limiting and Throttling: Implement rate limiting to prevent overloading services and maintain system stability, especially during traffic spikes.
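Two of these techniques fit in a few lines. The sketch below pairs a round-robin balancer over a list of endpoints (the addresses are hypothetical, standing in for what a service registry might return) with a token-bucket rate limiter. Both are simplified, single-threaded illustrations; production systems usually rely on the RPC framework, a service mesh, or a gateway for these.

```python
import itertools
import time


class RoundRobinBalancer:
    """Cycle RPC calls across endpoints returned by (hypothetical) discovery."""

    def __init__(self, endpoints):
        self._cycle = itertools.cycle(endpoints)

    def next_endpoint(self) -> str:
        return next(self._cycle)


class TokenBucket:
    """Allow about `rate` calls per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill tokens in proportion to the time elapsed since the last check.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical endpoints, as a service registry might return them.
balancer = RoundRobinBalancer(["10.0.0.1:8000", "10.0.0.2:8000", "10.0.0.3:8000"])
picked = [balancer.next_endpoint() for _ in range(4)]
print(picked)  # the fourth call wraps around to the first endpoint

limiter = TokenBucket(rate=1.0, capacity=3)
decisions = [limiter.allow() for _ in range(5)]
print(decisions)  # [True, True, True, False, False] until tokens refill
```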

Security Considerations

Security is a key concern when implementing Remote Procedure Call in cloud-native applications, mainly due to the network-based nature of RPC interactions. TLS/SSL encryption is essential to protect data in transit, but it’s only a part of the overall security strategy. Other crucial elements include:

- Authentication and Authorization: Implement authentication mechanisms to verify the identity of the entities involved in the RPC communication. Use authorization techniques to ensure only permitted entities can access specific procedures or data based on predefined policies.

- Input Validation: Implement stringent input validation on client and server sides to prevent injection attacks.

- Secure Communication Protocols: Make sure every transport the RPC layer uses is encrypted, for example HTTPS for HTTP-based protocols such as XML-RPC or JSON-RPC, and consider mutual TLS (mTLS) so that both client and server prove their identities.

- Regular Security Audits: Perform frequent vulnerability assessments and audits to identify and reduce security threats.
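The sketch below illustrates authentication, authorization, and input validation on the server side of a hypothetical `transfer` procedure. It authenticates callers with an HMAC-SHA256 signature over the call (one technique among many; token schemes such as OAuth 2.0 are common alternatives). The secret, the permission table, and the amount limits are all hypothetical. This does not replace TLS, and a real design would also add a timestamp or nonce to prevent replayed requests.

```python
import hashlib
import hmac
import re

# Hypothetical shared secret; in practice, load it from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"
# Hypothetical authorization policy: which procedures each client may call.
PERMISSIONS = {"billing-service": {"transfer"}}


def sign(method: str, payload: str) -> str:
    """Client side: an HMAC-SHA256 tag proves the caller knows the secret."""
    message = f"{method}:{payload}".encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def handle_call(client_id: str, method: str, payload: str, signature: str) -> str:
    """Server side: authenticate, authorize, then validate input."""
    # 1. Authentication; compare_digest compares in constant time.
    if not hmac.compare_digest(sign(method, payload), signature):
        raise PermissionError("invalid signature")
    # 2. Authorization against the allow-list.
    if method not in PERMISSIONS.get(client_id, set()):
        raise PermissionError(f"{client_id} may not call {method}")
    # 3. Input validation: accept only plain ASCII digits, then enforce bounds.
    if not re.fullmatch(r"[0-9]{1,5}", payload):
        raise ValueError("amount must be a whole number")
    amount = int(payload)
    if not 0 < amount <= 10_000:
        raise ValueError("amount out of range")
    return f"transferred {amount}"


sig = sign("transfer", "250")
print(handle_call("billing-service", "transfer", "250", sig))  # transferred 250

# A tampered payload no longer matches the signature and is rejected.
rejected = None
try:
    handle_call("billing-service", "transfer", "9999", sig)
except PermissionError as err:
    rejected = str(err)
print(rejected)  # invalid signature
```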


Handling Latency and Fault Tolerance

Efficient RPC implementations in distributed systems must adeptly handle latency and ensure fault tolerance. Strategies include:

- Asynchronous Calls and Callbacks: Use these to manage and reduce the impact of latency, allowing other processes to continue while waiting for a response.

- Retries and Timeout Policies: Retry failed calls with exponential backoff and bounded timeouts to handle temporary failures in the network or servers, and retry only operations that are safe to repeat.

- Circuit Breakers: Use circuit breakers to prevent repeated calls to a failing service, thereby avoiding further strain on the system.

- Deadline Propagation: Implement deadline propagation in RPC calls to ensure that requests do not remain stuck indefinitely, improving the system’s overall responsiveness and reliability.
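A minimal sketch of three of these strategies together: retries with exponential backoff, a deadline whose remaining budget is passed along to each attempt as its timeout, and a circuit breaker. `FlakyService` and `always_down` are hypothetical stand-ins for remote services. Real frameworks provide their own versions (gRPC, for instance, has built-in deadlines), and production retry logic usually adds random jitter to the backoff.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls fail fast."""


class CircuitBreaker:
    """Open after `max_failures` consecutive failures; fail fast for `reset_after` s."""

    def __init__(self, max_failures: int = 3, reset_after: float = 5.0):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed ("half-open"): let one trial call through.
            # `failures` is not reset yet, so one more failure reopens the circuit.
            self.opened_at = None
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0
        return result


def call_with_retries(func, *args, deadline: float, attempts: int = 4,
                      base_delay: float = 0.05):
    """Retry ConnectionErrors with exponential backoff, never past `deadline`."""
    for attempt in range(attempts):
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError("deadline exceeded")
        try:
            # Deadline propagation: hand the remaining budget on as the timeout.
            return func(*args, timeout=remaining)
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            backoff = base_delay * 2 ** attempt  # 0.05 s, 0.1 s, 0.2 s, ...
            time.sleep(min(backoff, max(0.0, deadline - time.monotonic())))
    raise TimeoutError("no attempts made")  # only reached when attempts < 1


class FlakyService:
    """Hypothetical remote service: fails twice, then succeeds."""

    def __init__(self):
        self.calls = 0

    def get(self, key, timeout):
        self.calls += 1
        if self.calls <= 2:
            raise ConnectionError("transient network error")
        return f"value-for-{key}"


service = FlakyService()
value = call_with_retries(service.get, "user:42", deadline=time.monotonic() + 1.0)
print(value, "after", service.calls, "calls")  # value-for-user:42 after 3 calls


def always_down():
    raise ConnectionError("service unavailable")


breaker = CircuitBreaker(max_failures=3, reset_after=5.0)
outcomes = []
for _ in range(5):
    try:
        breaker.call(always_down)
    except CircuitOpenError:
        outcomes.append("fast-fail")
    except ConnectionError:
        outcomes.append("error")
print(outcomes)  # ['error', 'error', 'error', 'fast-fail', 'fast-fail']
```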

Enhanced Monitoring and Debugging

Effective monitoring and debugging are critical for maintaining the health of distributed systems using Remote Procedure Call. This involves:

- Logging and Tracing: Implement comprehensive logging and tracing in RPC calls to capture detailed information about system operations and interactions. This aids in debugging and performance analysis.

- Using Monitoring Tools: Employ tools like Prometheus for detailed monitoring, tracking a wide range of metrics, and providing insights into the system’s performance.

- Tracing Tools: Use tracing tools like Jaeger for distributed tracing to visualize call flows and diagnose issues in complex, distributed environments.

- Real-time Monitoring and Alerts: Set up real-time monitoring systems with alerting capabilities to identify and respond to issues quickly as they occur.
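As a small illustration of logging, metrics, and request IDs working together, the sketch below wraps an RPC handler in a decorator that logs each call with a request ID (reusing one propagated by the caller when present) and records call counts and latencies. The in-memory `CALL_COUNT` and `LATENCY_SECONDS` dictionaries are hypothetical placeholders for what a metrics library such as the Prometheus client would export; tracing tools like Jaeger apply the same ID-propagation idea across services.

```python
import functools
import logging
import time
import uuid
from collections import defaultdict

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("rpc")

# Hypothetical in-memory metrics; a real system would export these.
CALL_COUNT = defaultdict(int)
LATENCY_SECONDS = defaultdict(list)


def instrumented(func):
    """Log each RPC with a request ID and record its count and latency."""
    @functools.wraps(func)
    def wrapper(*args, request_id=None, **kwargs):
        # Reuse the caller's ID if one was propagated, so logs can be correlated.
        request_id = request_id or uuid.uuid4().hex[:8]
        start = time.perf_counter()
        status = "error"
        try:
            result = func(*args, **kwargs)
            status = "ok"
            return result
        finally:
            elapsed = time.perf_counter() - start
            CALL_COUNT[(func.__name__, status)] += 1
            LATENCY_SECONDS[func.__name__].append(elapsed)
            log.info("rpc=%s request_id=%s status=%s duration_ms=%.3f",
                     func.__name__, request_id, status, elapsed * 1000)
    return wrapper


@instrumented
def add(x, y):
    return x + y


add(5, 3, request_id="abc123")  # ID propagated from an upstream caller
add(2, 2)                       # no ID supplied, so a new one is generated
print(dict(CALL_COUNT))         # {('add', 'ok'): 2}
```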

Steps in a Remote Procedure Call

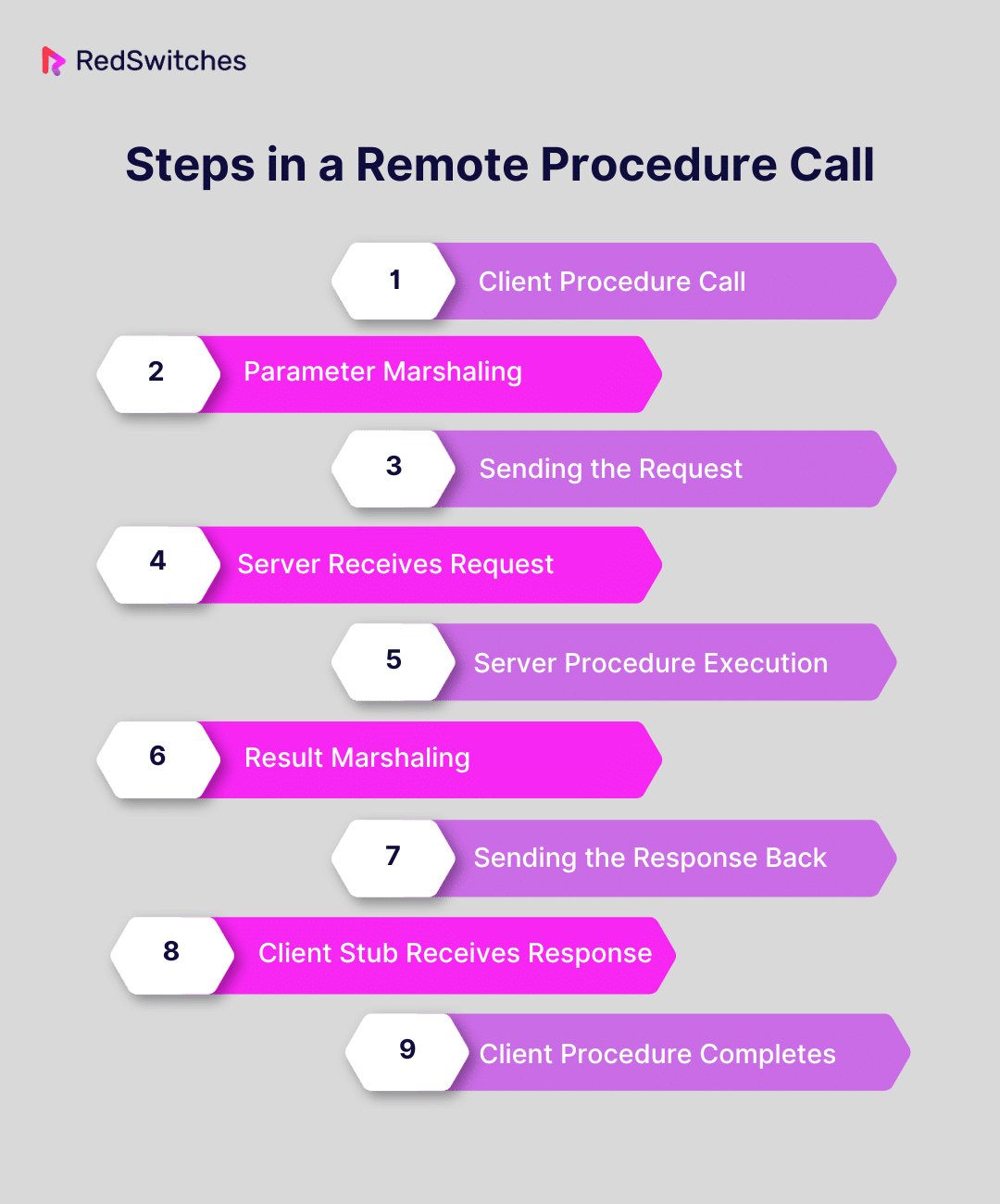

Walking through each step of an RPC shows how a complex operation across the network is made to look simple. Below is an overview of each step:

Client Procedure Call

- Initiating the Call: The process begins when a client application needs to request a service located on a remote server. Instead of calling the remote procedure directly, the client invokes a local procedure known as the client stub.

- Role of the Client Stub: The client stub represents the remote procedure on the client side. It presents an interface similar to the actual remote procedure, hiding the intricacies of network communication from the client application.

Parameter Marshaling

- Preparing Data for Transmission: The client stub prepares the parameters required for the remote procedure. This preparation is known as marshaling: converting the parameters into a standard format, often a binary one, that can be transmitted over the network.

- Ensuring Data Integrity: The marshaling process also involves encoding data to ensure it remains intact and interpretable when it reaches the server.
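A hand-rolled example of binary marshaling, using Python's `struct` module. The wire layout here (a 2-byte name length, the UTF-8 procedure name, then two signed 32-bit integers) is hypothetical, not any real protocol's format. The `!` prefix selects network (big-endian) byte order with no padding, so both sides agree on the layout regardless of their CPU architecture.

```python
import struct


def marshal_call(method: str, x: int, y: int) -> bytes:
    """Pack a call into the hypothetical layout: name length, name, x, y."""
    name = method.encode("utf-8")
    return struct.pack(f"!H{len(name)}sii", len(name), name, x, y)


def unmarshal_call(data: bytes) -> tuple:
    """Reverse of marshal_call, as a server stub would do on receipt."""
    (name_len,) = struct.unpack_from("!H", data, 0)
    name, x, y = struct.unpack_from(f"!{name_len}sii", data, 2)
    return name.decode("utf-8"), x, y


packet = marshal_call("add", 5, 3)
print(len(packet), packet.hex())  # 13 00036164640000000500000003
print(unmarshal_call(packet))     # ('add', 5, 3)
```

Real frameworks generate this kind of code from an interface definition rather than having developers write it by hand, but the principle is the same.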

Sending the Request

- Transmission Over Network: After marshaling, the client stub sends a request message to the server. This request includes the name of the procedure to be executed, the marshaled parameters, and any other metadata required for the call.

- Communication Protocols: The request is sent over the network using standard communication protocols, ensuring compatibility and interoperability between different systems.

Server Receives Request

- Server Stub Activation: On the server side, a server stub, analogous to the client stub, receives the message.

- Demarshalling the Parameters: The server stub then demarshals the parameters, converting them from the network transmission format to a format usable by the server's procedure.

Server Procedure Execution

- Executing the Remote Procedure: The server then uses these parameters to execute the desired remote procedure as a local call.

- Processing the Request: The actual computation or service requested by the client is carried out during this phase.

Result Marshaling

- Preparing the Response: Once the server procedure completes its execution, the server stub marshals the result. This may include any return values or data generated by the procedure.

- Format Conversion for Return: Similar to parameter marshaling, this step converts the procedure’s output into a format suitable for network transmission.

Sending the Response Back

- Response Transmission: The marshaled response is sent back across the network to the client stub. This step is crucial for delivering the outcome of the remote procedure to the client.

Client Stub Receives Response

- Receiving and Processing the Response: Upon receiving the response from the server, the client stub demarshals the result data, converting it from the network format to a format the client application can use.

- Completion of Data Transfer: The data transfer process between the client and server ends here.

Client Procedure Completes

- Finalizing the Call: Once the client stub has successfully demarshalled the response data from the server, it returns control to the client program, providing the results of the remote procedure call.

- Perception of Local Execution: To the client application, the entire process looks like the execution of a local procedure; the complexities of network communication and remote execution stay hidden.
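The steps above can be traced in a toy, single-process sketch. JSON stands in for the marshaling format, and a plain function, `transport` (hypothetical), stands in for the network; in a real system that function would be a socket or an HTTP request. The comments map each line to the step it represents.

```python
import json


# ---- Server side -----------------------------------------------------------
def add_numbers(x, y):
    """The actual procedure, executed as an ordinary local call on the server."""
    return x + y


SERVER_PROCEDURES = {"add": add_numbers}


def server_stub(request_bytes: bytes) -> bytes:
    # Server receives request: demarshal the procedure name and parameters.
    request = json.loads(request_bytes.decode("utf-8"))
    # Server procedure execution: call the real function locally.
    result = SERVER_PROCEDURES[request["method"]](*request["params"])
    # Result marshaling: encode the return value for the trip back.
    return json.dumps({"result": result}).encode("utf-8")


# ---- "Network" --------------------------------------------------------------
def transport(request_bytes: bytes) -> bytes:
    # Hypothetical in-memory transport standing in for a socket or HTTP POST.
    return server_stub(request_bytes)


# ---- Client side -------------------------------------------------------------
def add(x, y):
    """Client stub: looks like a local function to the calling code."""
    # Parameter marshaling.
    request_bytes = json.dumps({"method": "add", "params": [x, y]}).encode("utf-8")
    # Sending the request; the response comes back from the server stub.
    response_bytes = transport(request_bytes)
    # Client stub receives the response and demarshals it.
    return json.loads(response_bytes.decode("utf-8"))["result"]


# Client procedure completes: the caller just sees a return value.
print(add(5, 3))  # 8
```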


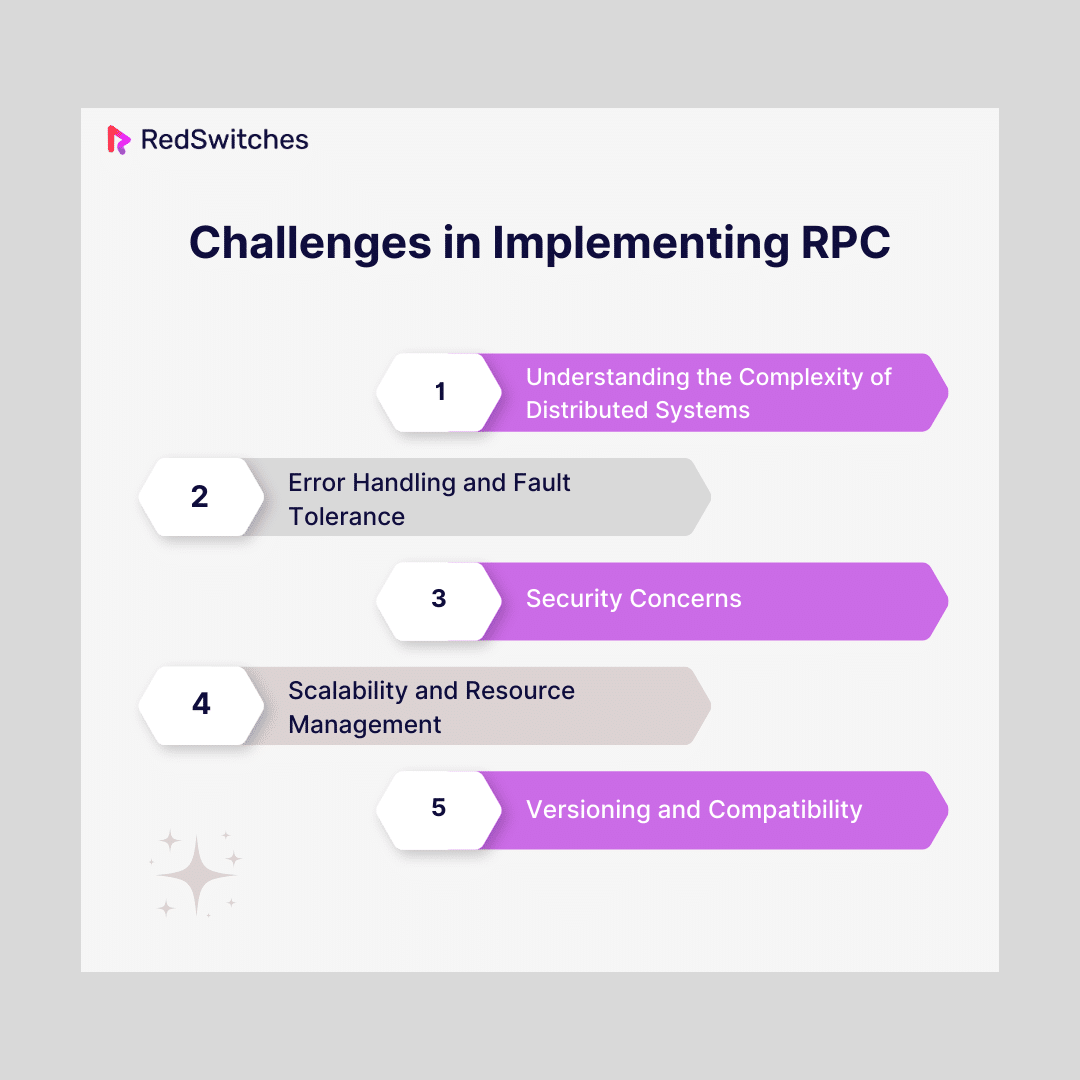

Challenges in Implementing RPC

Below are a few challenges one can expect to face when implementing remote procedure call (RPC):

Understanding the Complexity of Distributed Systems

One of the top challenges in implementing remote procedure call is the complexity of distributed systems. RPC, by nature, involves different machines, which may have varying architectures, operating systems, and network configurations. Ensuring smooth communication across these diverse environments can be daunting.

Developers need to consider data serialization for platform independence, handle network latency, and manage the synchronization of different system components.

Error Handling and Fault Tolerance

Dealing with errors and ensuring fault tolerance becomes more complex in distributed systems. RPC systems need robust error-handling mechanisms to deal with scenarios like network failures, server unavailability, or unresponsive services. Implementing retries, timeout policies, and fallback strategies is essential. However, these must be carefully designed to avoid cascading failures in a networked environment.

Security Concerns

Security is another critical challenge in remote procedure call implementations. Since RPC involves data being sent over networks, it’s vulnerable to various security threats like data interception, unauthorized access, and attacks on network communication.

Implementing secure communication channels with SSL/TLS encryption, along with proper authentication and authorization mechanisms, is vital. Ensuring data integrity and confidentiality during RPC calls is also essential.

Scalability and Resource Management

Scalability becomes a concern as applications grow. RPC implementations must be able to scale with the increasing load. This involves handling more remote procedure calls and efficiently managing resources like memory and connections. Load balancing, rate limiting, and resource pooling can help manage scalability issues.

Versioning and Compatibility

Maintaining backward compatibility and managing versioning in RPC systems is challenging. As systems evolve, changes in one service must not break the services that depend on it. This requires careful API design and versioning strategies, such as semantic versioning and contract-first API development.
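A minimal sketch of two common compatibility techniques, using a hypothetical `get_user` procedure: additive changes (a new optional parameter or a new response field) are made in place, while a breaking change is published under a new, explicitly versioned procedure name so existing clients keep working. The names, registry, and data are illustrative only.

```python
def get_user_v1(user_id: int, include_email: bool = False) -> dict:
    # Additive change: "include_email" was added later as an optional
    # parameter, so clients written before it existed keep working unchanged.
    user = {"id": user_id, "name": "Ada Lovelace"}
    if include_email:
        user["email"] = "ada@example.com"
    return user


def get_user_v2(user_id: int) -> dict:
    # Breaking change: "name" is split into two fields. Rather than altering
    # v1 in place, the new shape is published under a new procedure name.
    first, last = get_user_v1(user_id)["name"].split(" ", 1)
    return {"id": user_id, "first_name": first, "last_name": last}


# Hypothetical registry mapping versioned procedure names to handlers.
REGISTRY = {
    "users.v1.get_user": get_user_v1,
    "users.v2.get_user": get_user_v2,
}


def dispatch(method: str, *args, **kwargs):
    return REGISTRY[method](*args, **kwargs)


print(dispatch("users.v1.get_user", 7))                      # old client, unchanged
print(dispatch("users.v1.get_user", 7, include_email=True))  # newer client, same version
print(dispatch("users.v2.get_user", 7))                      # client opted in to v2
```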

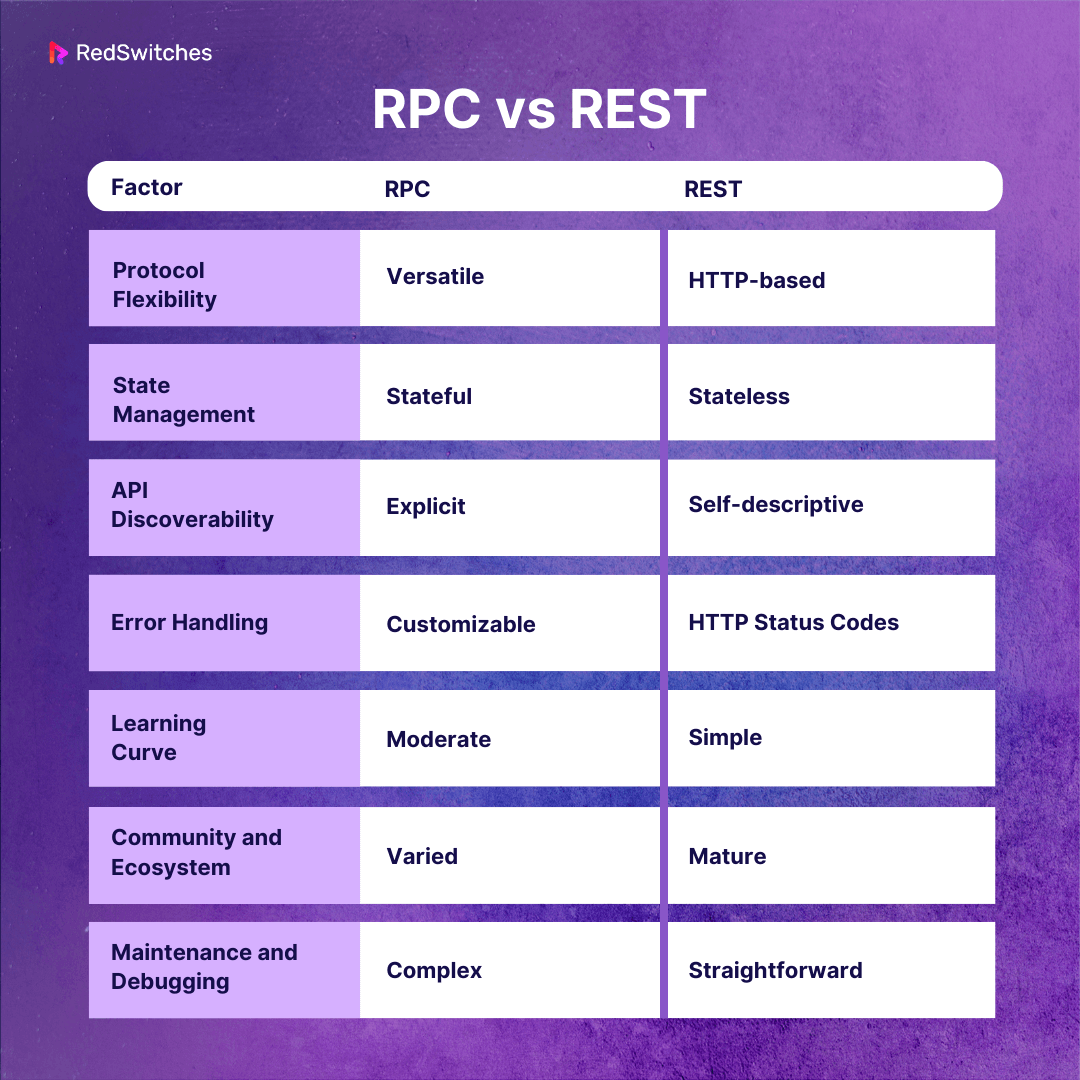

Is RPC Better Than REST?

A common question is whether RPC is better than REST. Answering it means comparing the two across several factors, and before making comparisons, one must understand what REST means.

REST (Representational State Transfer) is an architectural style for designing networked applications. It identifies resources with readable URLs and uses standard HTTP requests to retrieve and manipulate representations of those resources.
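The difference is easiest to see in the shape of the requests. The sketch below builds, but never sends, two requests with Python's `urllib.request`: an RPC-style call in JSON-RPC format, where a single endpoint receives the procedure name in the body, and a REST-style call, where the URL names the resource and the HTTP method names the action. The host `api.example.com`, the `/rpc` path, and the `getUser` procedure are hypothetical.

```python
import json
import urllib.request

BASE = "https://api.example.com"  # hypothetical service

# RPC style: one endpoint; the procedure name travels in the message body.
rpc_request = urllib.request.Request(
    f"{BASE}/rpc",
    data=json.dumps({"jsonrpc": "2.0", "id": 1,
                     "method": "getUser", "params": [42]}).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)

# REST style: the URL identifies the resource, the HTTP method the action.
rest_request = urllib.request.Request(f"{BASE}/users/42", method="GET")

for req in (rpc_request, rest_request):
    # The Request objects are only built here, never sent.
    print(req.get_method(), req.full_url)
```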

Here is a comparison of RPC vs REST:

Protocol Flexibility

RPC

Known for its protocol agnosticism, RPC isn't confined to HTTP. Depending on the implementation, it can run over raw TCP, UDP, or other transports, offering a versatile approach to system design. This flexibility allows RPC to be tailored to specific network environments and requirements.

REST

In practice, REST is tied to HTTP. It leverages HTTP's well-established capabilities, including caching, security layers (TLS/SSL), and its stateless nature. This close association with HTTP aligns RESTful services with web technologies and makes them highly compatible with the Internet ecosystem.

State Management

RPC

Some RPC implementations maintain state between requests. This can be beneficial in scenarios where the context of a user session or transaction needs to be preserved across multiple procedure calls, such as in complex transactional systems or when dealing with real-time data streams.

REST

REST adopts statelessness, a core principle of RESTful architecture. Each request must be self-contained, carrying all necessary information, which improves scalability and reliability. This stateless nature simplifies the server design, as it doesn’t need to maintain, update, or communicate the session state.

API Discoverability

RPC

Discoverability in RPC might be more difficult to achieve. Clients often require specific knowledge about the procedures available on the server, necessitating comprehensive documentation or additional discovery tools for clients to understand and interact with the API effectively.

REST

REST offers improved discoverability. Its use of standard HTTP methods (GET, POST, PUT, DELETE) and resource-oriented URLs makes RESTful services more intuitive and self-descriptive. The structure of a REST API can often be inferred from its URL patterns and HTTP methods, making it easier for developers to understand and use.

Error Handling

RPC

The complexity of error handling in RPC varies with the protocol and serialization format used. Different RPC implementations may represent and communicate errors in distinct ways, which can complicate error handling and require more detailed client-side logic.

REST

REST uses standardized HTTP response codes to indicate the status of requests, including errors. This standardization simplifies error handling, as developers can leverage common, universally understood HTTP status codes to determine the nature of the error and the appropriate response.
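As an illustration of the extra client-side logic RPC errors can require, the sketch below normalizes errors from two RPC styles into HTTP-style status codes. The gRPC entries follow the HTTP mapping documented for the canonical `google.rpc.Code` values (a subset is shown); the JSON-RPC entries use the error codes reserved by the JSON-RPC 2.0 specification, but their HTTP equivalents are an assumption made for this sketch.

```python
# Canonical gRPC status names -> HTTP status codes (subset of the documented mapping).
GRPC_TO_HTTP = {
    "OK": 200,
    "INVALID_ARGUMENT": 400,
    "UNAUTHENTICATED": 401,
    "PERMISSION_DENIED": 403,
    "NOT_FOUND": 404,
    "ALREADY_EXISTS": 409,
    "RESOURCE_EXHAUSTED": 429,
    "INTERNAL": 500,
    "UNIMPLEMENTED": 501,
    "UNAVAILABLE": 503,
    "DEADLINE_EXCEEDED": 504,
}

# JSON-RPC 2.0 reserved error codes. The HTTP equivalents are an assumption
# of this sketch; the JSON-RPC specification does not define them.
JSONRPC_TO_HTTP = {
    -32700: 400,  # Parse error
    -32600: 400,  # Invalid Request
    -32601: 404,  # Method not found
    -32602: 400,  # Invalid params
    -32603: 500,  # Internal error
}

def normalize_error(source: str, code) -> int:
    """Translate an RPC-specific error code into a single HTTP-style status code."""
    if source == "grpc":
        return GRPC_TO_HTTP.get(code, 500)
    if source == "jsonrpc":
        # Unlisted codes, including the implementation-defined server errors
        # in -32000..-32099, fall back to 500.
        return JSONRPC_TO_HTTP.get(code, 500)
    raise ValueError(f"unknown RPC source: {source}")

print(normalize_error("grpc", "NOT_FOUND"), normalize_error("jsonrpc", -32601))
```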

Learning Curve

RPC

RPC can have a considerable learning curve, especially with frameworks like gRPC. Depending on the protocol, developers may need to become familiar with specific serialization formats or interface definition languages.

REST

REST has its own learning curve, especially for individuals not well-versed in the nuances of HTTP methods, status codes, and architectural principles like statelessness and resource orientation. However, this is often offset by the extensive resources and community support available for REST.

Community and Ecosystem

RPC

The community and ecosystem surrounding RPC vary depending on the specific implementation. For instance, gRPC has a growing community with increasing support for multiple programming languages and environments.

REST

Because REST is widely used and builds on web standards, it has a vibrant community. Thanks to this broad backing, a wealth of resources, libraries, and tools is available for developing RESTful services.

Maintenance and Debugging

RPC

Maintenance and debugging in remote procedure call environments can be challenging, especially due to the use of binary data formats and custom protocols in some implementations. These aspects can make data less visible during transmission, making it difficult to diagnose issues. Developers may require specialized tools or detailed logs to debug RPC-based communications.

REST

REST tends to be easier to maintain and debug. Text-based formats like JSON or XML make the data human-readable, facilitating easier inspection and troubleshooting. The widespread use of HTTP means that countless standard tools and practices are available to support debugging and maintenance of RESTful services.

Popular Cloud Native Remote Procedure Call Services

Among the many remote procedure call frameworks available, a few names like gRPC, Apache Thrift, and Dubbo have gained significant popularity in cloud-native environments. Let’s further discuss the most popular cloud-native remote procedure call services and why they stand out:

gRPC

gRPC is a high-performance, open-source remote procedure call framework that uses HTTP/2 as its transport protocol. Google originally created it, and it is now part of the Cloud Native Computing Foundation (CNCF). The main objective of gRPC is to facilitate efficient communication between services in a distributed system, especially those structured as microservices.

Key Features of gRPC

- Protocol Buffers (Protobuf): gRPC uses Protocol Buffers, Google’s language-neutral, platform-neutral, extensible mechanism for serializing structured data. Protobuf is more efficient and less ambiguous than XML and JSON, leading to smaller message sizes and faster processing.

- HTTP/2 Based Communication: gRPC uses HTTP/2, allowing for several advanced features like bidirectional streaming, efficient connection management, and reduced latency. HTTP/2’s ability to perform multiple requests over a single TCP connection significantly enhances communication performance in microservices architectures.

- Language Agnostic Interface Definition: gRPC supports many programming languages, making it an ideal choice for polyglot environments. Developers can define service methods and message types in a .proto file, and gRPC generates the client and server-side code in various languages.

- Strongly Typed Contracts: By using Protobuf, gRPC enables strongly typed service contracts, which can reduce errors and misunderstandings in service communication.

- Support for Streaming: gRPC supports four kinds of service methods: unary (a single request and a single response), server streaming, client streaming, and bidirectional streaming. This flexibility allows for a variety of communication patterns between services.
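To see why Protobuf messages are compact, it helps to look at the wire encoding of a single integer field: a key (the field number shifted left by three bits, combined with a wire type) followed by the value as a base-128 varint. The sketch below hand-encodes `int32 a = 1;` set to 150, the example used in Protobuf's encoding documentation, purely to illustrate the format; real services would use the generated code from the `protobuf` library instead.

```python
import json

def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 payload bits per byte, high bit set on every byte except the last."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative values")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def encode_int_field(field_number: int, value: int) -> bytes:
    """Encode one integer field: key = (field_number << 3) | wire type 0 (VARINT)."""
    key = (field_number << 3) | 0
    return encode_varint(key) + encode_varint(value)

proto_bytes = encode_int_field(1, 150)
json_bytes = json.dumps({"a": 150}).encode()
print(proto_bytes.hex(), len(proto_bytes))  # 089601 3
print(json_bytes, len(json_bytes))          # b'{"a": 150}' 10
```

The field name never travels on the wire, only its number, which is one reason Protobuf payloads are smaller than the equivalent JSON and why the `.proto` contract must be shared by both sides.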

Why Choose gRPC for Cloud Native Applications?

- Efficiency: The combination of HTTP/2 and Protobuf makes gRPC highly efficient in terms of bandwidth and resource usage, which is crucial in cloud environments where resources are often metered and limited.

- Cross-Language Support: With support for numerous languages, gRPC fits naturally into cloud-native ecosystems, where services are often written in different languages.

- Seamless Interoperability: gRPC ensures smooth and efficient communication between services, essential for the microservices architecture predominant in cloud-native applications.

- Performance: The efficiency of HTTP/2 and the low overhead of Protobuf result in high performance, making gRPC an excellent choice for high-load systems.

- Community and Ecosystem: Being part of CNCF, gRPC benefits from strong community support and integration with other cloud-native tools and technologies.

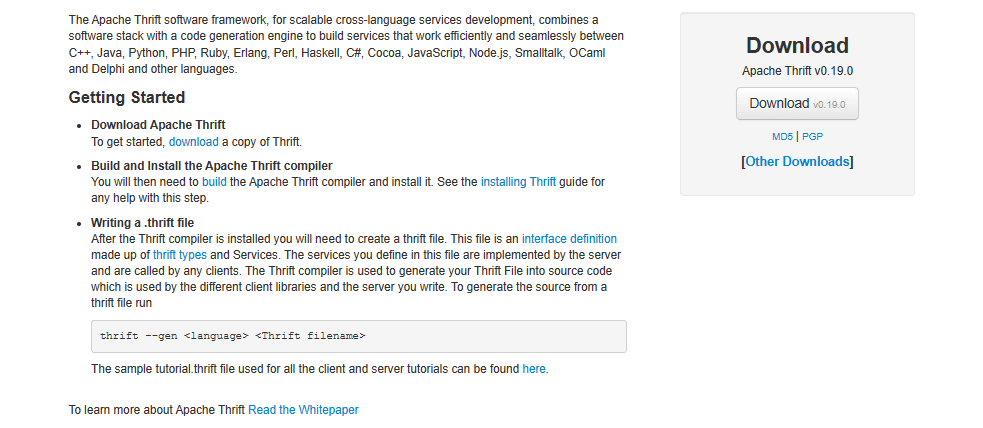

Apache Thrift

Apache Thrift is an open-source remote procedure call framework that facilitates efficient and reliable communication between services, particularly in distributed systems. It is known for bridging different programming languages, which lets developers create services that work together smoothly despite being written in different languages. This cross-language support is especially valuable in the diverse ecosystems of cloud-native applications.

Key Features of Apache Thrift

- Cross-Language Support: One of Thrift’s most obvious benefits is its extensive language support. It supports several languages, including C++, Java, Python, PHP, Ruby, Erlang, Perl, Haskell, C#, Cocoa, JavaScript, Node.js, Smalltalk, OCaml, and Delphi. This makes it ideal for polyglot environments common in cloud-native systems.

- Flexible Serialization Options: Unlike some RPC frameworks tied to a specific serialization format, Apache Thrift empowers developers to choose from various serialization formats, including binary, compact, and JSON. This flexibility enables developers to optimize for performance, compatibility, or readability.

- Support for Synchronous and Asynchronous Processing: Thrift caters to multiple use cases by supporting synchronous and asynchronous processing. This versatility is crucial for building responsive and scalable cloud-native applications.

- Comprehensive Interface Definition Language (IDL): Thrift uses an Interface Definition Language (IDL) to define data types and service interfaces, which are then used to generate code for any supported language. This approach ensures consistency and ease of maintenance across different parts of a distributed system.

- Efficient and Lightweight: Apache Thrift is designed to be lightweight and efficient. This helps minimize the overhead on network and system resources, a critical consideration in cloud-native environments.
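The serialization choice is easiest to appreciate by encoding the same 32-bit integer three ways. This sketch follows the documented conventions of Thrift's binary protocol (fixed 4-byte big-endian i32) and compact protocol (zigzag encoding followed by a varint). The JSON variant is simplified to the bare number, whereas Thrift's real JSON protocol adds type metadata. Field headers are omitted, and none of these functions are part of the Thrift library's API.

```python
import json
import struct

def binary_i32(n: int) -> bytes:
    # Fixed width: always 4 bytes, big-endian (binary-protocol style).
    return struct.pack(">i", n)

def zigzag32(n: int) -> int:
    # Map signed to unsigned so small magnitudes stay small: 0->0, -1->1, 1->2, -2->3 ...
    return ((n << 1) ^ (n >> 31)) & 0xFFFFFFFF

def varint(u: int) -> bytes:
    # 7 bits per byte; the high bit marks that more bytes follow.
    out = bytearray()
    while u > 0x7F:
        out.append((u & 0x7F) | 0x80)
        u >>= 7
    out.append(u)
    return bytes(out)

def compact_i32(n: int) -> bytes:
    # Compact-protocol style: zigzag first, then variable-length encoding.
    return varint(zigzag32(n))

def json_i32(n: int) -> bytes:
    # Simplified text encoding: just the number.
    return json.dumps(n).encode()

print("value binary compact json")
for n in (1, -300, 2_000_000_000):
    print(n, len(binary_i32(n)), len(compact_i32(n)), len(json_i32(n)))
# 1 4 1 1
# -300 4 2 4
# 2000000000 4 5 10
```

Compact encoding wins for small magnitudes, fixed-width binary is predictable and cheap to parse, and text stays human-readable, which is the trade-off Thrift lets developers choose per use case.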

Why Opt for Apache Thrift in Cloud-Native Applications?

- Cross-Language Development: In cloud-native environments, where services are often developed in different languages, Thrift’s cross-language capabilities ensure smooth and effortless integration and communication.

- Flexibility in Serialization: The ability to choose the most appropriate serialization format for each use case allows for better optimization in terms of performance and compatibility.

- Scalability: With support for synchronous and asynchronous processing, Thrift can efficiently handle varying loads. This makes it suitable for scalable cloud-native applications.

- Ease of Use: The comprehensive IDL and the auto-generation of client and server code across languages simplify the development process. This allows teams to focus on business logic rather than communication specifics.

- Community Support: As part of the Apache Software Foundation, Thrift has a large open-source community offering support, tools, and continuous improvements.

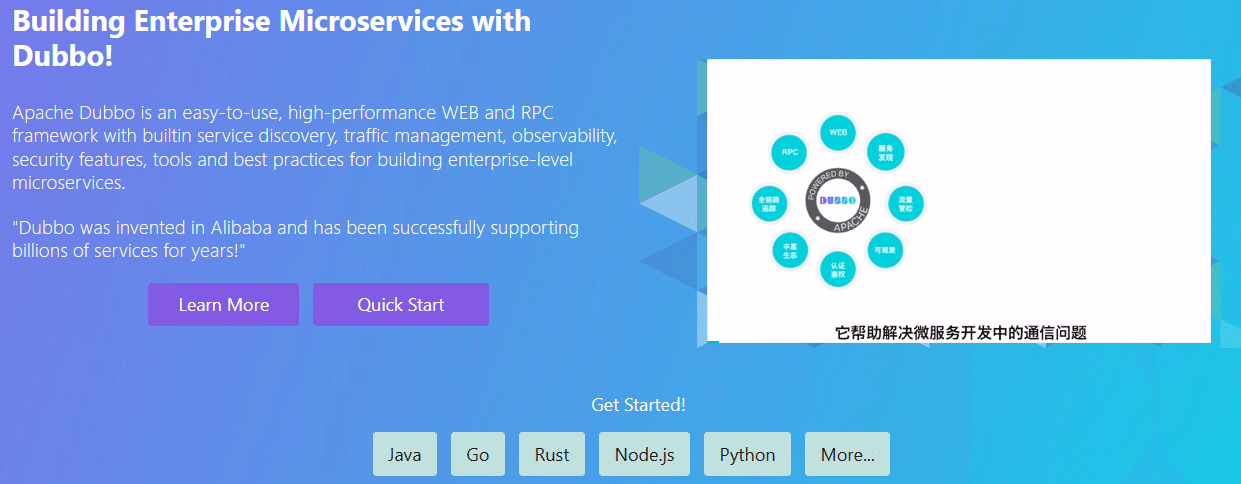

Dubbo

Dubbo is an open-source RPC framework developed by Alibaba. It’s designed to provide high-performance service-based communication between applications in a distributed system, particularly in a microservices architecture. Dubbo has gained significant traction and community support as a project under the Apache Software Foundation.

Key Features of Dubbo

- High Performance and Scalability: Dubbo is known for its high performance and scalability. It can handle thousands of service invocations per second, making it suitable for enterprise-level applications.

- Transparent Interface-Based Remote Method Invocation: Dubbo makes remote service invocation as simple as calling local methods. It abstracts the underlying network communication, serialization, and deserialization processes.

- Dynamic Service Discovery and Load Balancing: Dubbo supports multiple service registry centers, such as ZooKeeper and Nacos, for service registration and discovery. It also implements various load-balancing strategies to distribute traffic efficiently among service providers.

- Fault Tolerance and Failover Mechanisms: To ensure high availability, Dubbo provides several fault tolerance strategies, including failover, failfast, failsafe, and failback.

- Runtime Traffic Routing and Configuration Management: Dubbo offers robust runtime traffic routing and dynamic configuration management, which helps control and adjust system behavior without restarting services.

- Monitoring and Maintenance Tools: Dubbo comes with a set of monitoring and maintenance tools that help track service dependencies, load, and performance metrics.
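Two of the ideas above, weighted load balancing across providers and the failover fault-tolerance strategy, can be sketched in a few lines. This is a simplified Python illustration, not Dubbo's API: Dubbo itself is Java and configures these behaviors through its own cluster and load-balance extensions. The `Provider` class, the function names, and the addresses are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Provider:
    address: str                 # hypothetical provider address
    weight: int                  # relative share of traffic
    call: Callable[[str], str]   # stand-in for a real remote invocation

def pick_weighted_random(providers: List[Provider], rng: random.Random) -> Provider:
    """Choose a provider with probability proportional to its weight."""
    return rng.choices(providers, weights=[p.weight for p in providers], k=1)[0]

def invoke_with_failover(providers: List[Provider], request: str,
                         retries: int = 2, rng: Optional[random.Random] = None) -> str:
    """Failover: on a connection error, retry on a different provider,
    making at most `retries` extra attempts."""
    rng = rng or random.Random()
    remaining = list(providers)
    last_error: Optional[Exception] = None
    for _ in range(retries + 1):
        if not remaining:
            break
        provider = pick_weighted_random(remaining, rng)
        try:
            return provider.call(request)
        except ConnectionError as exc:
            last_error = exc
            remaining.remove(provider)  # don't retry the provider that just failed
    raise RuntimeError("all attempts failed") from last_error

def healthy(req: str) -> str:
    return f"ok:{req}"

def broken(req: str) -> str:
    raise ConnectionError("provider down")

providers = [Provider("10.0.0.1:20880", 100, broken), Provider("10.0.0.2:20880", 100, healthy)]
print(invoke_with_failover(providers, "getUser", rng=random.Random(42)))  # ok:getUser
```

Failfast would instead raise on the first error, and failsafe would swallow it and return an empty result; failover suits idempotent reads, where retrying elsewhere is harmless.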

Why Choose Dubbo for Cloud Native Applications?

- Smooth Integration with Microservices Architectures: Dubbo fits naturally into microservices architectures. It offers efficient and easy-to-use mechanisms for inter-service communication.

- Support for Various Communication Protocols: Dubbo supports multiple communication protocols, including HTTP, RMI, Hessian, and WebService. This flexibility allows developers to choose the most appropriate protocol based on their use case.

- Improved Service Governance: With runtime traffic routing, dynamic configuration, and built-in fault tolerance, Dubbo gives developers and system administrators greater control over their services.

- Sizeable Community: Being part of the Apache Software Foundation, Dubbo benefits from a sizeable community and ecosystem. This ensures continuous improvement and support.

- Optimized for Cloud Environments: Dubbo’s lightweight nature and capability to handle high-throughput scenarios make it well-suited for cloud environments, where resources are often dynamically scaled.

- Multi-Language Support: Although Dubbo is primarily Java-based, it also offers compatibility with other languages through protocol layering. This makes it a versatile choice for diverse development teams.

SOFARPC

SOFARPC is an open-source RPC framework from the SOFAStack (Scalable Open Financial Architecture) family. It offers efficient, high-performance, and reliable service-to-service invocation for large distributed systems. Ant Financial initially developed it to meet its own needs for high-throughput, scalable, and resilient service communication.

Key Features of SOFARPC

- High Performance and Scalability: SOFARPC is built for performance. It can handle high volumes of concurrent requests with low latency, making it ideal for scenarios where performance is critical.

- Multiple Protocol Support: SOFARPC supports various protocols, including its default Bolt protocol, REST, and others. This flexibility allows for easy integration with different systems and services.

- Load Balancing and Fault Tolerance: Advanced load balancing and fault tolerance mechanisms are integral to its design. These features ensure that service requests are evenly distributed across available resources and that the system can gracefully handle service failures.

- Service Governance: It offers robust service governance capabilities, including service routing, service degradation, and traffic management. This allows for better control and optimization of service interactions in complex environments.

- Rich Ecosystem Integration: SOFARPC smoothly integrates with other cloud-native tools and platforms, providing a cohesive environment for developing and managing microservices.

Why Choose SOFARPC for Cloud Native Applications?

- Optimized for High-Throughput Systems: SOFARPC is particularly well-suited for applications that must handle large numbers of concurrent requests without sacrificing performance.

- Versatility in Communication: The support for multiple communication protocols makes SOFARPC versatile and adaptable to various application needs, from simple RESTful services to complex, stateful service interactions.

- Robust Resilience Features: In cloud-native environments, where services are distributed and failures are inevitable, SOFARPC's resilience features, like load balancing and fault tolerance, are invaluable.

- Enhanced Service Management: With its strong focus on service governance, SOFARPC simplifies the management of service dependencies, traffic flow, and performance tuning in distributed architectures.

- Ease of Integration: Because it is compatible with various cloud-native tools and platforms, SOFARPC fits well into the broader ecosystem, which makes integrating it with existing cloud infrastructure and services easier.

JSON-RPC

JSON-RPC is a lightweight, text-based remote procedure call protocol that uses JSON as the data format for requests and responses. Drawing on ideas from XML-RPC and SOAP, JSON-RPC is designed to be simple, portable, and language-independent. It lets diverse system components make remote procedure calls and exchange data regardless of the underlying network or programming languages used.

Key Features of JSON-RPC

- Simplicity and Lightweight: JSON-RPC’s use of JSON, a human-readable format, makes it straightforward to understand. The lightweight nature of JSON also ensures minimal overhead, which is crucial in a cloud-native environment where efficiency is key.

- Statelessness: JSON-RPC does not retain the state between requests. This statelessness aligns well with the principles of cloud-native applications, which often rely on stateless architectures for scalability and resilience.

- Transport Agnostic: JSON-RPC can operate over various transport protocols, including HTTP, WebSockets, or TCP. This flexibility allows it to integrate into different applications and network environments easily.

- Language Independence: JSON, a universally recognized data format, can be used in virtually any programming language. This makes JSON-RPC highly versatile and a good fit for diverse development ecosystems.

- Asynchronous and Batch Processing: JSON-RPC supports asynchronous processing, because each response carries the id of the request it answers, and it can handle batch requests, where multiple calls are sent in a single message. Batching reduces network round trips, which can be valuable in scenarios demanding high throughput.
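The request and response shapes, notifications, batching, and reserved error codes mentioned above are defined by the JSON-RPC 2.0 specification. The following is a minimal server-side dispatcher sketch using only Python's standard library. The transport is deliberately left out (the `handle` function takes and returns JSON text, so it could sit behind HTTP, WebSockets, or TCP), and the registered methods `add` and `log` are hypothetical examples.

```python
import json

# Hypothetical methods exposed by this example server.
METHODS = {
    "add": lambda a, b: a + b,
    "log": lambda msg: None,
}

def _error(code, message, req_id=None):
    return {"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": req_id}

def _handle_one(req):
    if not isinstance(req, dict) or req.get("jsonrpc") != "2.0" or "method" not in req:
        return _error(-32600, "Invalid Request")
    is_notification = "id" not in req  # notifications never get a response
    func = METHODS.get(req["method"])
    if func is None:
        return None if is_notification else _error(-32601, "Method not found", req.get("id"))
    params = req.get("params", [])
    try:
        # Named params arrive as an object, positional params as an array.
        result = func(**params) if isinstance(params, dict) else func(*params)
    except TypeError:
        # Simplification: treat a TypeError as an argument/signature mismatch.
        return None if is_notification else _error(-32602, "Invalid params", req.get("id"))
    return None if is_notification else {"jsonrpc": "2.0", "result": result, "id": req["id"]}

def handle(payload: str):
    """Process a single request or a batch; return JSON text, or None if there is nothing to send."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError:
        return json.dumps(_error(-32700, "Parse error"))
    if isinstance(data, list):
        if not data:
            return json.dumps(_error(-32600, "Invalid Request"))
        responses = [r for r in (_handle_one(item) for item in data) if r is not None]
        return json.dumps(responses) if responses else None
    response = _handle_one(data)
    return json.dumps(response) if response is not None else None

batch = json.dumps([
    {"jsonrpc": "2.0", "method": "add", "params": [2, 3], "id": 1},
    {"jsonrpc": "2.0", "method": "log", "params": {"msg": "hi"}},  # notification
    {"jsonrpc": "2.0", "method": "missing", "id": 2},
])
print(handle(batch))  # result 5 for id 1, and a -32601 "Method not found" error for id 2
```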

Why Choose JSON-RPC for Cloud Native Applications?

- Alignment with Microservices Architecture: The simplicity and lightweight nature of JSON-RPC make it well-suited for microservices architectures commonly found in cloud-native applications. It facilitates easy and quick data exchange between loosely coupled services.

- Scalability: Given its stateless nature and support for asynchronous processing, JSON-RPC can handle high volumes of requests, which is essential for scalable cloud-native applications.

- Cross-Platform Communication: JSON-RPC’s language-agnostic approach enables smooth inter-service communication in cloud-native environments, where applications often involve various technologies and programming languages.

- Ease of Use and Development: Developers appreciate JSON-RPC’s straightforward implementation and readability. This ease of use can significantly speed up development and debugging processes.

- Flexibility in Network Environments: Being transport agnostic, JSON-RPC can adapt to various network conditions and constraints, making it a robust choice for cloud-native applications that may operate across different environments.

XML-RPC

XML-RPC is a remote procedure call protocol that uses HTTP as its transport and XML to encode its calls. Developed in the late 1990s, it is considered one of the oldest and most basic web service communication protocols. Thanks to XML-RPC, software running in different environments and on different operating systems can call procedures over the Internet.

Key Features of XML-RPC

- Simple and Extensible: XML-RPC uses XML as its message format, which is both human-readable and straightforward to parse programmatically. Its simplicity makes it easy to understand and implement.

- Language and Platform Agnostic: One of the top strengths of XML-RPC is its language neutrality. It can be used with virtually any programming language that supports HTTP and XML parsing, making it highly versatile.

- Stateless Communication: Like HTTP, XML-RPC is a stateless protocol, meaning each request from a client to a server contains all the information needed to understand and respond to the request.

- XML-Based Protocol: Because messages are plain-text XML carried over standard HTTP, they can usually pass through firewalls and be read by any XML-compatible parser, regardless of the platform.

- HTTP as Transport Layer: Leveraging HTTP for transport means XML-RPC can work smoothly over the web, taking advantage of the existing infrastructure.
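Python ships XML-RPC support in its standard library (`xmlrpc.client` and `xmlrpc.server`), which makes the protocol easy to see end to end. This minimal local demo first prints the XML `<methodCall>` document that represents a call, then performs a real round trip over HTTP on 127.0.0.1 using an OS-chosen port. The `add` method is a hypothetical example, and the setup deliberately omits TLS and authentication, so it is not a production configuration.

```python
import threading
import xmlrpc.client
from xmlrpc.server import SimpleXMLRPCServer

# 1. What travels over the wire: the call encoded as an XML <methodCall> document.
request_xml = xmlrpc.client.dumps((2, 3), methodname="add")
print(request_xml)

# 2. A local round trip over HTTP using only the standard library.
def add(a, b):
    return a + b

server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)  # port 0: let the OS pick
server.register_function(add, "add")
thread = threading.Thread(target=server.serve_forever, daemon=True)
thread.start()

port = server.server_address[1]
with xmlrpc.client.ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
    result = proxy.add(2, 3)  # looks like a local call; is an HTTP POST underneath
print(result)  # 5

server.shutdown()      # stop the serve_forever loop
server.server_close()  # release the listening socket
```

The printed request shows both the readability and the verbosity of XML-RPC: a two-integer call already takes several lines of markup.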

Why Choose XML-RPC for Cloud Native Applications?

- Ease of Use and Interoperability: XML-RPC’s straightforward nature makes it easy to implement and integrate into various systems, ensuring broad interoperability across different platforms and languages.

- Lightweight Communication: While not as compact as binary protocols like Protobuf used in gRPC, XML-RPC’s use of XML offers a good balance between readability and efficiency, suitable for less complex or less frequent communication scenarios.

- Legacy System Integration: XML-RPC is a natural choice for integrating with legacy systems that already use XML for data interchange. Its compatibility with older systems makes it valuable when upgrading or replacing existing infrastructure is not feasible.

- Suitable for Simple Applications: It presents an ideal solution for applications that don’t require the high performance of more modern RPC protocols and can benefit from the simplicity of XML-RPC.

- Ease of Debugging: The human-readable format of XML simplifies debugging and development, which can be beneficial in rapidly changing cloud-native environments.

TARS (Tencent TARS)

Tencent began developing TARS, an open-source microservices framework, in 2008. It is meant to facilitate the quick creation, deployment, and maintenance of server applications, with a focus on boosting the capabilities and efficiency of cloud-native apps. At its core, TARS (Tencent Advanced RPC Suite) is a high-performance RPC framework.

Key Features of TARS (Tencent TARS)

- High-Performance RPC: TARS supports multiple protocols, such as its own TARS protocol and HTTP. It is built for high-throughput, low-latency scenarios, making it well suited to real-time communication needs.

- Language Neutrality: A standout benefit of TARS is its support for multiple programming languages. Developers can define services in languages such as C++, Java, Node.js, PHP, and Python, offering flexibility in a multi-language development environment.

- Automatic Service Registration and Discovery: TARS includes built-in support for service registration and discovery. This is essential for microservices architectures where services need to discover and communicate with each other dynamically.

- Integrated Service Monitoring and Orchestration: TARS provides several tools like service monitoring, orchestration, and deployment. This includes automatic load balancing, health checks, and seamless scaling.

- Efficient Serialization/Deserialization: The framework includes efficient serialization and deserialization mechanisms, which are crucial for RPC performance. This feature ensures that the data exchanged between services is compact and fast to process.

- Extensive Administration Console: TARS comes with an administrative console for managing the lifecycle of services. This console simplifies tasks like deployment, scaling, and monitoring of services.
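Service registration and discovery with health checks can be illustrated with a heartbeat-based registry: instances register, periodically refresh their entry, and are only returned to callers while their last heartbeat is within a time-to-live (TTL). The in-memory `ServiceRegistry` below is a hypothetical sketch of that pattern, not TARS's actual registry component or API; the service name and addresses are placeholders.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List

class ServiceRegistry:
    """In-memory registry: instances stay discoverable only while their heartbeats are fresh."""

    def __init__(self, ttl_seconds: float = 10.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        # service name -> {instance address -> time of last heartbeat}
        self._instances: Dict[str, Dict[str, float]] = defaultdict(dict)

    def register(self, service: str, address: str) -> None:
        self._instances[service][address] = self.clock()

    # A heartbeat simply refreshes the registration timestamp.
    heartbeat = register

    def deregister(self, service: str, address: str) -> None:
        self._instances[service].pop(address, None)

    def discover(self, service: str) -> List[str]:
        """Return addresses whose last heartbeat falls within the TTL (stale ones are skipped)."""
        now = self.clock()
        return sorted(addr for addr, seen in self._instances[service].items()
                      if now - seen <= self.ttl)

# Usage with a controllable fake clock (seconds).
now = [0.0]
registry = ServiceRegistry(ttl_seconds=10, clock=lambda: now[0])
registry.register("OrderService", "10.0.0.1:9000")
registry.register("OrderService", "10.0.0.2:9000")
now[0] = 8.0
registry.heartbeat("OrderService", "10.0.0.1:9000")  # only the first instance keeps reporting
now[0] = 15.0
print(registry.discover("OrderService"))  # ['10.0.0.1:9000']
```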

Why Choose TARS for Cloud Native Applications?

- Scalability: TARS is designed with scalability in mind. It can handle many concurrent service requests, making it suitable for applications that need to scale based on demand.

- Cross-Language Support: The ability to define and implement services in various languages makes TARS highly adaptable for diverse development teams and application requirements.

- Robust Service Management: Comprehensive management features like service discovery, load balancing, and health monitoring enable the reliable and efficient operation of large-scale microservice architectures.

- Cloud-Native Oriented: TARS aligns well with cloud-native principles like containerization, orchestration, and dynamic management. It integrates smoothly with cloud-native technologies and platforms.

- Community and Support: Being an open-source project, TARS benefits from a growing community. Developers and organizations can contribute to and leverage community-driven innovations and solutions.

- Flexibility in Deployment: TARS can be deployed in various environments, including on-premises data centers, public clouds, and hybrid cloud environments. This flexibility ensures that it can adapt to different organizational needs and infrastructure setups.

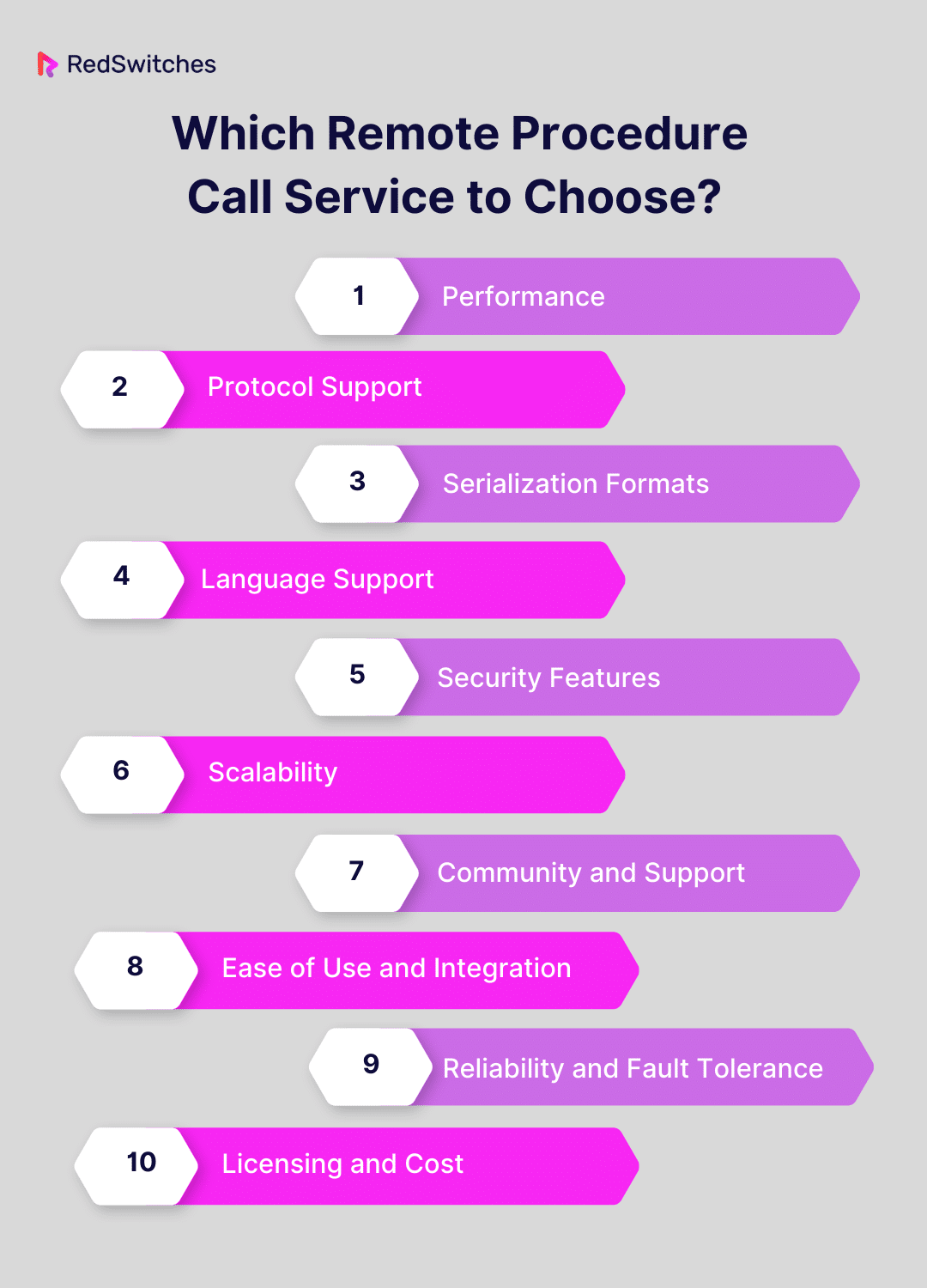

Which Remote Procedure Call Service to Choose?

Performance

Performance takes center stage when it comes to selecting a Remote Procedure Call service. It is crucial to evaluate the RPC service’s speed and efficiency, especially in terms of latency and throughput. High-performing RPC services ensure faster communication between services, essential for time-sensitive applications.

Consider the service’s ability to handle concurrent requests and its response time under load. A service that performs well under stress is critical for maintaining the responsiveness of your application, especially during peak usage times.

Protocol Support

Different RPC services support various communication protocols. Choosing one that aligns with your system’s requirements is essential. Common protocols include HTTP, TCP, and named pipes. HTTP is universal and works well with web-based applications, while TCP is suitable for lower-level network communication.

Named pipes are often used for inter-process communications on the same machine. The choice of protocol can significantly affect the performance and scalability of your application, so it’s important to select a service that offers the protocols best suited to your architecture.

Serialization Formats

Serialization is the process of converting an object into a format that can be transmitted over the network. Some RPC services use XML, while others prefer JSON or binary formats like Protocol Buffers. The choice of serialization format can significantly affect the performance and efficiency of your application.

Binary formats are generally faster to serialize and deserialize and produce smaller payloads than text-based formats like JSON or XML, while text-based formats are more human-readable and easier to debug. Your choice should balance efficiency with usability based on your application's needs.

Language Support

It is important to ensure the RPC service you choose supports the programming languages used in your project. Some RPC frameworks are language-specific, offering optimizations and features tailored to a specific language ecosystem. Others are designed to be language-agnostic, supporting multiple languages.

This is even more important in a microservices architecture where different services might be written in different languages. A service with broad language support offers more flexibility and can simplify integration across diverse systems within your organization.

Security Features

As discussed above, security is one of the most important factors in selecting a Remote Procedure Call service, especially when handling sensitive or confidential data. Beyond basic SSL/TLS support, examine the service's encryption standards, data integrity measures, and compliance with regulatory frameworks.

Authentication mechanisms are also vital. Options could range from basic token-based systems to more sophisticated OAuth or Kerberos. Authorization capabilities, like role-based access control, ensure that clients have appropriate permissions, adding another layer of security. It is also important to evaluate the service’s ability to handle security vulnerabilities and its track record in responding to threats.
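To show how authentication and role-based authorization can wrap an RPC handler, here is a minimal decorator sketch. The static `TOKEN_ROLES` table, the token strings, and the `delete_user` procedure are hypothetical; a real service would validate a signed credential (for example, an OAuth access token) rather than look tokens up in a dictionary.

```python
import functools
from typing import Callable, Dict, Set

# Hypothetical token -> roles table; a real service would verify a signed token instead.
TOKEN_ROLES: Dict[str, Set[str]] = {
    "token-alice": {"reader", "admin"},
    "token-bob": {"reader"},
}

class PermissionDenied(Exception):
    pass

def requires_role(role: str) -> Callable:
    """Decorator: reject the call unless the caller's token carries `role`."""
    def decorator(handler: Callable) -> Callable:
        @functools.wraps(handler)
        def wrapper(token: str, *args, **kwargs):
            roles = TOKEN_ROLES.get(token)
            if roles is None:
                raise PermissionDenied("unauthenticated")  # authentication failed
            if role not in roles:
                raise PermissionDenied(f"missing role: {role}")  # authorization failed
            return handler(*args, **kwargs)
        return wrapper
    return decorator

@requires_role("admin")
def delete_user(user_id: int) -> str:
    return f"deleted {user_id}"

print(delete_user("token-alice", 42))  # deleted 42
```

Keeping the check in a decorator separates security policy from business logic, so every exposed procedure declares the role it needs in one visible place.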

Scalability

The ability of an RPC service to scale effectively with your application's growth is crucial. This means handling more traffic and more clients while keeping performance stable under load. Investigate whether the service offers load balancing, which distributes workloads across multiple servers to improve responsiveness.

Check whether it supports horizontal scaling (adding more machines) and vertical scaling (adding resources to existing machines), and whether it integrates with cloud services for elastic scalability.

Community and Support

Strong support and a thriving community are invaluable for long-term success. A large, active community means access to a wealth of shared knowledge, tips, and troubleshooting assistance.

Evaluate the availability and quality of official support channels like documentation, forums, or customer service. The frequency of updates and patches and the service’s roadmap can provide insight into its future viability and commitment to staying current with technological advances.

Ease of Use and Integration

The ease with which you can implement and integrate the Remote Procedure Call service into your existing systems is a key consideration. Look for user-friendly interfaces, comprehensive documentation, and a straightforward setup process. SDKs and libraries compatible with your tech stack can significantly reduce integration efforts.

Don’t forget to consider the service’s compatibility with other tools and services you use, as seamless integration can greatly enhance workflow efficiency and reduce development time.

Reliability and Fault Tolerance

Reliability is non-negotiable in an RPC service. Assess the service’s uptime history and mechanisms for handling outages or service disruptions. Built-in fault tolerance features, like automatic retries, circuit breakers, and backup systems, are essential for maintaining service continuity.

It’s also important to consider the service’s geographic redundancy and disaster recovery capabilities, ensuring your application remains operational even under adverse conditions.

Licensing and Cost

The economic aspect is important, especially for projects with budget constraints. Open-source RPC services can offer cost-effective solutions with the added benefit of community support and flexibility. However, they may require more in-house expertise for implementation and maintenance.

Commercial services often provide more comprehensive support and advanced features, but at a higher cost. Consider the total cost of ownership, including setup, maintenance, and potential scalability costs, not just the upfront price or subscription fees.

Future of RPC in Cloud Computing

The future of Remote Procedure Calls (RPC) in cloud computing is expected to evolve and adapt to the changing needs of cloud-based services and applications. Below are some key aspects that might define the future of RPC in cloud computing:

Increased Efficiency and Performance

Remote Procedure Call mechanisms are likely to become more efficient and performant, handling higher throughput and lower latency, which is crucial for cloud-based applications that require real-time processing and data exchange.

Integration with Microservices Architecture

As microservices architecture continues to dominate cloud computing, RPC protocols like gRPC are becoming more popular. They offer advantages like language agnosticism and efficient communication, making them well-suited for microservices-based cloud applications.

Better Support for Asynchronous Communication

As cloud applications become more complex, there’s a growing need for remote procedure call mechanisms that efficiently support asynchronous communication, allowing for non-blocking operations and better resource utilization.

Integration with Serverless Architectures

The rise of serverless computing in cloud environments might lead to RPC protocols optimized for serverless architectures, offering seamless integration and efficient functioning in these dynamic environments.

Customization and Extensibility

Future RPC frameworks might offer more customization and extensibility options, allowing developers to tailor the RPC mechanisms to fit the specific needs of their cloud applications.

Improved Interoperability

As cloud ecosystems become more diverse, interoperability between systems and services becomes crucial. RPC protocols will likely evolve to support better interoperability across various platforms and programming languages.

Edge Computing Integration

With the growth of edge computing, Remote Procedure Call protocols may be adapted to work more efficiently in edge-cloud environments, where low latency and efficient data processing are essential.

Adoption of AI and Machine Learning

RPC mechanisms could be integrated with AI and machine learning models to optimize performance, predict and manage loads, and provide smarter routing and data handling capabilities.

Green Computing and Sustainability

As part of the broader move towards sustainable computing, future RPC protocols might also focus on reducing the environmental impact by optimizing resource usage and reducing energy consumption.

Conclusion

Cloud Native RPC services are fundamental building blocks for modern, scalable, and efficient distributed systems. With the rapid growth of microservices and containerized applications, the importance of RPC services in simplifying inter-service communication cannot be overstated.

To take advantage of the full potential of Cloud Native remote procedure call services, choosing the right infrastructure and hosting environment is crucial. This is where RedSwitches, a leading cloud hosting solution provider, can make a notable difference. With expertise in cloud infrastructure and top-notch services, RedSwitches is an ideal partner for organizations looking to optimize their Cloud Native RPC setup.

To learn more about how RedSwitches can empower your Cloud Native remote procedure call services and enhance your overall application performance, visit our website today.

FAQs

Q. What is a remote procedure call in cloud computing?

A Remote Procedure Call in cloud computing is a protocol that one program can use to request a service from a program on another computer in the network, without needing to know the details of that network.

Q. What are the various types of remote procedure calls?

The various types of remote procedure calls include Synchronous RPCs, Asynchronous RPCs, Batched RPCs, Nested RPCs, and Concurrent RPCs.
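Of these, batching is the easiest to picture. The sketch below packs several calls into one message so they share a single round trip. It assumes a hypothetical JSON message format and an in-process `handle_batch` function rather than any real protocol.

```python
import json

# Hypothetical server-side procedures.
PROCEDURES = {
    "add": lambda a, b: a + b,
    "upper": lambda s: s.upper(),
}

def handle_batch(payload: str) -> str:
    """Server side: execute every call in one batched message, in order."""
    calls = json.loads(payload)
    results = [PROCEDURES[c["method"]](*c["params"]) for c in calls]
    return json.dumps(results)

def call_batch(calls: list[tuple[str, list]]) -> list:
    """Client side: pack several calls into a single request message."""
    payload = json.dumps([{"method": m, "params": p} for m, p in calls])
    # A real system would send the payload over the network;
    # here the server handler is called directly.
    return json.loads(handle_batch(payload))

print(call_batch([("add", [2, 3]), ("upper", ["rpc"])]))  # [5, 'RPC']
```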

Q. How does RPC work?

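RPC works through stubs. The caller invokes a local client stub, which marshals the procedure's arguments into a message and sends it to the remote system. A server stub on that system unmarshals the message and calls the requested procedure. The result is marshalled into a reply and returned through the client stub to the caller.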
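The stub mechanism can be sketched in a few lines of Python. This is a simplified in-process model, not a real RPC library. The JSON message layout, the `send` function standing in for the network, and the `add_stub`/`server_stub` names are all hypothetical.

```python
import json

# --- Server side ---
def add(a: int, b: int) -> int:
    return a + b

def server_stub(request: bytes) -> bytes:
    """Unmarshal the request, run the procedure, marshal the result."""
    msg = json.loads(request)
    handlers = {"add": add}
    result = handlers[msg["proc"]](*msg["args"])
    return json.dumps({"result": result}).encode()

# --- Transport ---
def send(request: bytes) -> bytes:
    # Stand-in for the network: a real system would write to a socket.
    return server_stub(request)

# --- Client side ---
def add_stub(a: int, b: int) -> int:
    """Looks like a local function, but marshals its arguments and
    forwards them to the server stub."""
    request = json.dumps({"proc": "add", "args": [a, b]}).encode()
    reply = send(request)
    return json.loads(reply)["result"]

print(add_stub(2, 3))  # 5
```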

Q. What is a procedure number in the context of RPC?

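A procedure number in RPC is an identifier for a specific procedure or method that the server can execute in response to a client's call. In ONC RPC (Sun RPC), for example, a procedure is identified by a program number, a version number, and a procedure number, so the procedure number only needs to be unique within one version of one program.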
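Here is a minimal Python sketch of procedure-number dispatch, loosely modeled on ONC RPC's program/version/procedure triple. The program number `0x20000001` and the handlers are hypothetical. The sketch only shows how a server could map the three numbers to a handler; it does not implement the ONC RPC wire format.

```python
# Hypothetical program number; ONC RPC reserves 0x20000000-0x3FFFFFFF
# for user-defined programs.
PROG_CALC = 0x20000001
VERS_1 = 1

def proc_null():
    return None  # procedure 0: by convention a no-op "ping"

def proc_add(a, b):
    return a + b

def proc_sub(a, b):
    return a - b

# (program, version) -> {procedure number -> handler}
DISPATCH = {
    (PROG_CALC, VERS_1): {0: proc_null, 1: proc_add, 2: proc_sub},
}

def dispatch(prog: int, vers: int, proc: int, *args):
    """Look up the handler for (prog, vers, proc) and run it."""
    try:
        handler = DISPATCH[(prog, vers)][proc]
    except KeyError:
        raise ValueError(
            f"procedure {proc} unavailable for program {prog:#x} v{vers}"
        ) from None
    return handler(*args)

print(dispatch(PROG_CALC, VERS_1, 1, 7, 5))  # 12
print(dispatch(PROG_CALC, VERS_1, 2, 7, 5))  # 2
```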

Q. What is the difference between a local and remote procedure call?

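A local procedure call runs in the caller's own process. Its arguments are passed directly in memory, and the call either completes or raises an error. A remote procedure call executes the procedure on another system, so its arguments must be marshalled into a message and sent over the network, and a synchronous call blocks until the reply returns. RPC aims to make both kinds of call look the same to the application program. However, remote calls add latency and can fail in ways local calls cannot, such as lost messages, timeouts, or an unreachable server.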

Q. What is an RPC client?

An RPC client is a program or process that initiates a remote procedure call and sends a message to the server to request the execution of a specific procedure or method.
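Python's standard library ships a simple XML-RPC implementation, which makes it easy to see a client in action. The sketch below starts a throwaway `SimpleXMLRPCServer` on localhost in a background thread and then uses `ServerProxy` as the RPC client. The `add` procedure and the `call_add` helper, which starts and stops the server around a single call purely for demonstration, are hypothetical.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer

def start_server() -> SimpleXMLRPCServer:
    """Start a throwaway XML-RPC server on localhost in a background thread."""
    # Port 0 lets the OS pick a free port; logRequests=False silences logs.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(lambda a, b: a + b, "add")  # hypothetical procedure
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

def call_add(a: int, b: int) -> int:
    server = start_server()
    host, port = server.server_address
    try:
        # The RPC client: method calls on the proxy become XML-RPC requests.
        with ServerProxy(f"http://{host}:{port}/") as proxy:
            return proxy.add(a, b)
    finally:
        server.shutdown()
        server.server_close()

print(call_add(2, 3))  # 5
```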

Q. What are remote objects in the context of RPC?

Remote objects in RPC refer to the objects or resources on a remote system that can be accessed and manipulated through remote procedure calls from a client program.

Q. What does it mean when the server is processing the call in RPC?

When the server is processing the call in RPC, the remote system executes the requested procedure or method and generates the results to be sent back to the client.

Q. What are the semantics of a local procedure in RPC?

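The semantics of a local procedure call describe how it behaves within a single system: the procedure runs exactly once, in the caller's address space, without the overhead and failure modes of remote communication. RPC tries to preserve these semantics so that a remote call looks like a local one. Because requests and replies can be lost or duplicated, though, RPC systems typically guarantee at-most-once or at-least-once execution rather than exactly-once.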
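One common way to approximate at-most-once execution is for the server to remember replies by request ID. A retried request then returns the cached reply instead of running the procedure again. The `AtMostOnceServer` class and its `deposit` method below are hypothetical, and a production system would also need to bound or expire the reply cache.

```python
import uuid

class AtMostOnceServer:
    """Caches replies by request ID so a retried request is not re-executed."""

    def __init__(self):
        self.balance = 0
        self._replies = {}  # request_id -> cached reply

    def deposit(self, request_id: str, amount: int) -> int:
        if request_id in self._replies:
            return self._replies[request_id]  # duplicate: replay the old reply
        self.balance += amount                # the side effect runs once
        self._replies[request_id] = self.balance
        return self.balance

server = AtMostOnceServer()
req = str(uuid.uuid4())
server.deposit(req, 100)
server.deposit(req, 100)  # client retries after a lost reply
print(server.balance)     # 100, not 200
```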

Q. How does RPC handle blocking in remote procedure calls?

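In synchronous RPC, the client sends the request and blocks until the server's reply arrives, so it can use the result just as it would the return value of a local call. To avoid waiting indefinitely when the server or network fails, clients usually set a timeout. Asynchronous RPC avoids blocking altogether by letting the client continue working while the call is in progress.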
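Blocking with a deadline can be sketched with Python's `concurrent.futures`. Here `slow_remote_procedure` and `call_with_deadline` are hypothetical. The `time.sleep` call mimics a slow server, and `future.result(timeout=...)` blocks the caller until the result arrives or the timeout expires.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

def slow_remote_procedure(x: int) -> int:
    """Hypothetical stand-in for a remote call whose reply takes ~0.3 s."""
    time.sleep(0.3)
    return x * 2

def call_with_deadline(fn, *args, timeout: float):
    """Block until fn returns or the timeout expires, whichever comes first."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fn, *args)
    try:
        return future.result(timeout=timeout)  # the caller blocks here
    finally:
        # Stop waiting; an unfinished call keeps running in its worker thread.
        pool.shutdown(wait=False)

print(call_with_deadline(slow_remote_procedure, 21, timeout=1.0))  # 42
try:
    call_with_deadline(slow_remote_procedure, 21, timeout=0.1)
except FutureTimeout:
    print("timed out: retry or report an error")
```

Giving up on the reply does not stop a procedure that is already running. In a real system, the server may still complete the work after the client has timed out.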