Key Takeaways

- Docker simplifies app development & deployment. It uses containers for portability across environments.

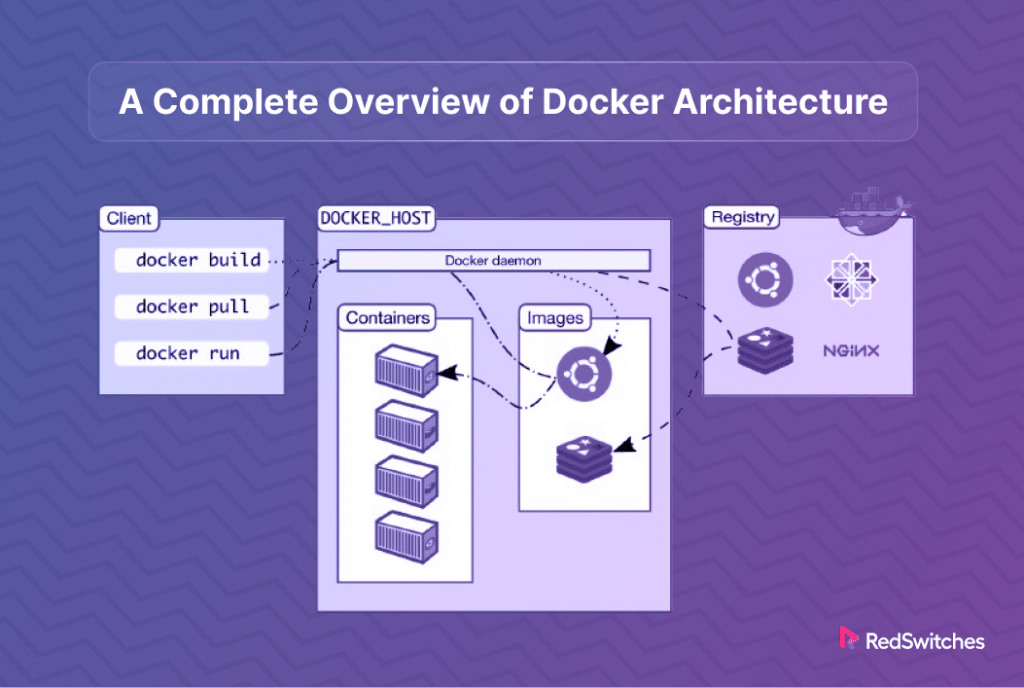

- Docker uses a client-server model for interaction. The daemon manages tasks, and the client provides control.

- Docker offers a robust development platform. It features standardization, portability, and tool integration.

- Docker is versatile. It can be used for configuration, isolation, CI/CD pipelines, microservices, and more.

- Understand Docker’s architecture for effective use. Learn about daemons, clients, registries, and objects.

- Images define applications, and containers run them. Images are blueprints, and containers are executable instances.

- Docker provides flexible data storage options. Choose from volumes, bind mounts, and tmpfs mounts.

- Compose and manage multi-container apps. Define and run complex applications with ease using Docker Compose.

- Swarm simplifies container orchestration. Manage large-scale container deployments effectively.

- Dockerfiles automate image creation. Ensure consistency and repeatability with instruction-based files.

For developers and IT professionals, understanding Docker architecture has become essential in the rapidly changing field of software development. Docker is a containerization platform that offers a streamlined, efficient way to package and deploy applications. Understanding its architecture is key to creating, deploying, and scaling applications consistently.

As we delve into the intricacies of Docker architecture, we aim to provide a comprehensive guide that simplifies this complex technology. Whether you’re a beginner looking to grasp the basics or an experienced professional aiming to refine your skills, this article is tailored to help you confidently navigate the world of Docker.

From its core components to its innovative features, Docker architecture offers a world of possibilities. Join us as we explore how Docker’s architecture can revolutionize your development workflow, providing a robust platform for your applications to thrive in today’s fast-paced digital landscape.

Table of Contents

- Key Takeaways

- Docker Overview

- Docker Architecture

- Docker Containers vs Virtual Machines

- Challenges and Limitations of Docker

- Future Trends in Containerization

- Increased Adoption in Enterprise Environments

- Growth of Kubernetes and Container Orchestration

- Enhanced Security Measures

- Integration with Serverless Architectures

- Edge Computing and IoT

- AI and Machine Learning Workloads

- Continuous Improvement in DevOps Workflows

- Expansion of Cloud-Native Technologies

- Conclusion

- FAQs

Docker Overview


At its core, Docker is a powerful platform that simplifies the process of building, shipping, and running applications. It utilizes containerization technology to package an application and its dependencies into a single, portable container. This innovation has revolutionized how we handle software development and deployment, making processes more efficient and less prone to errors.

What sets Docker apart is its unique architecture. Docker architecture operates on a client-server model, comprising the Docker Daemon (the server), the Docker Client, and the Docker Registry. The Daemon manages Docker objects like images, containers, networks, and volumes. Meanwhile, the Client is your interface for interacting with the Daemon through Docker commands. Lastly, the Docker Registry stores Docker images, allowing for easy sharing and distribution of applications.

Using lightweight containers, Docker ensures your applications run in isolated environments. This isolation allows for greater control, efficiency, and scalability, as containers require fewer resources than traditional virtual machines. Furthermore, Docker’s compatibility with various cloud and infrastructure services makes it an invaluable tool for modern DevOps practices.

In the following sections, we’ll explore the intricacies of Docker architecture in detail, unlocking the strategies to master this robust technology.


The Docker Platform


Docker is more than just a containerization tool; it’s a comprehensive platform designed for software developers and IT professionals to develop, ship, and run applications. At its heart, Docker provides an environment where applications can be assembled from components and virtualized. This process enables applications to run in various locations, from local desktops to enterprise data center deployments across cloud platforms.

The beauty of the Docker platform lies in its simplicity and flexibility. It allows for the standardization of environments, ensuring that the application will run the same, regardless of where it is deployed. This consistency eliminates the often-heard phrase, “But it works on my machine,” ensuring that if it works in one environment, it works in all. The platform supports multiple languages and frameworks, allowing developers to create applications in their preferred tools and package them into Docker containers.

The platform integrates seamlessly with existing DevOps tools and workflows, enhancing the development pipeline with container orchestration tools like Kubernetes or Docker Swarm. This integration provides automation for deploying, scaling, and operating containerized applications, further simplifying the deployment process.

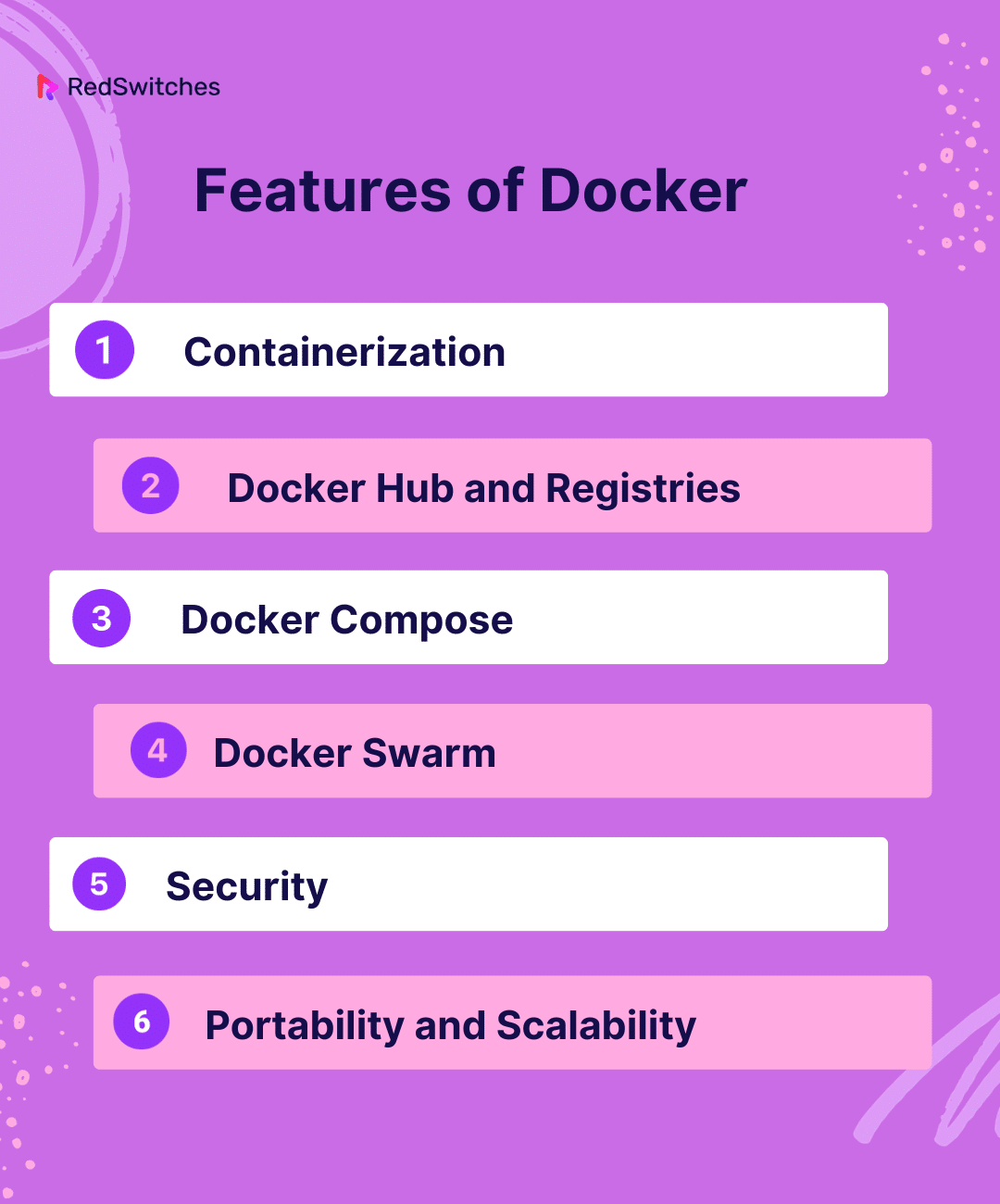

Features of Docker

Docker offers a range of features that make it an indispensable tool in modern software development. Some key features include:

- Containerization: At its core, Docker uses containerization to encapsulate an application and its dependencies in a container that can run on any Linux or Windows-based system.

- Docker Hub and Registries: Docker Hub is the most extensive library and community for container images, providing an array of pre-built containers for various applications and services. Users can also create private registries to store and manage their container images.

- Docker Compose: This tool defines and runs multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services, making it easier to manage complex applications.

- Docker Swarm: Docker Swarm provides native clustering functionality for Docker containers, turning a group of Docker engines into a single, virtual Docker engine.

- Security: Docker provides strong default isolation capabilities to secure your applications. Users can further enhance security through features like Docker Secrets and secure networking.

- Portability and Scalability: Containers can be easily moved across different environments, and Docker’s scalability features allow for easy adjustment as per the demand.

Each feature contributes to Docker’s robustness, making it a go-to solution for developers looking to streamline application development, testing, and deployment.


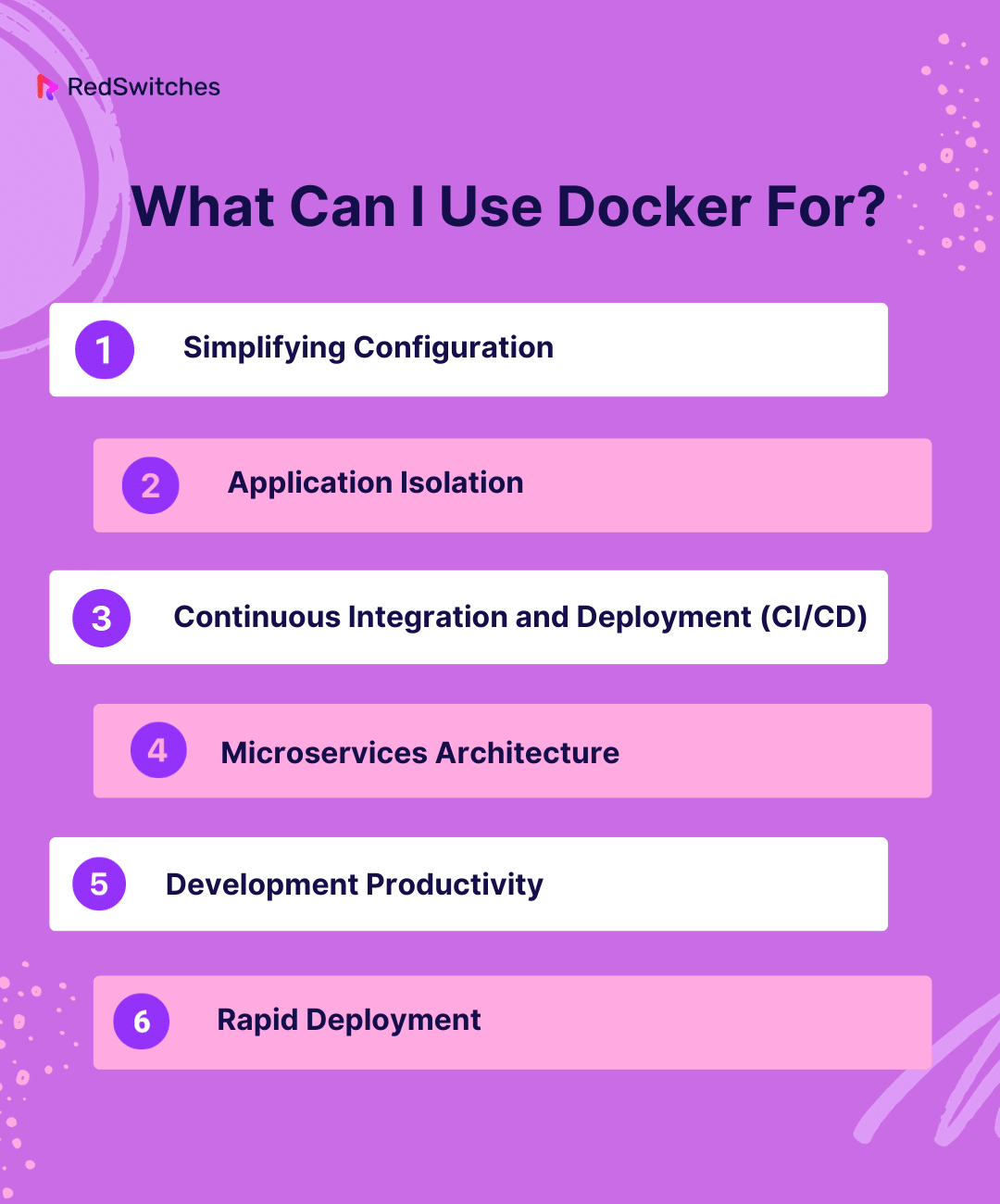

What Can I Use Docker For?

Docker’s versatility allows it to be used for various purposes, making it a valuable tool in many different scenarios:

- Simplifying Configuration: Docker can eliminate the need to install and configure software on individual machines. Using containers provides a consistent environment across all development lifecycle stages.

- Application Isolation: Docker provides a way to isolate your applications in containers, ensuring they can only access the resources they need. This isolation helps in managing dependencies and avoiding conflicts between different applications.

- Continuous Integration and Deployment (CI/CD): Docker is highly effective in CI/CD pipelines. It enables consistent and rapid deployment of applications by creating a standardized environment for development, testing, and production stages.

- Microservices Architecture: Docker is ideal for microservices-based applications, as it allows each service to be deployed, updated, scaled, and restarted independently.

- Development Productivity: Developers can use Docker to quickly set up development environments that mirror production systems, reducing the “it works on my machine” problem.

- Rapid Deployment: Docker containers typically start in seconds or less, making it possible to scale up and down rapidly in response to demand.

Benefits of Using Docker

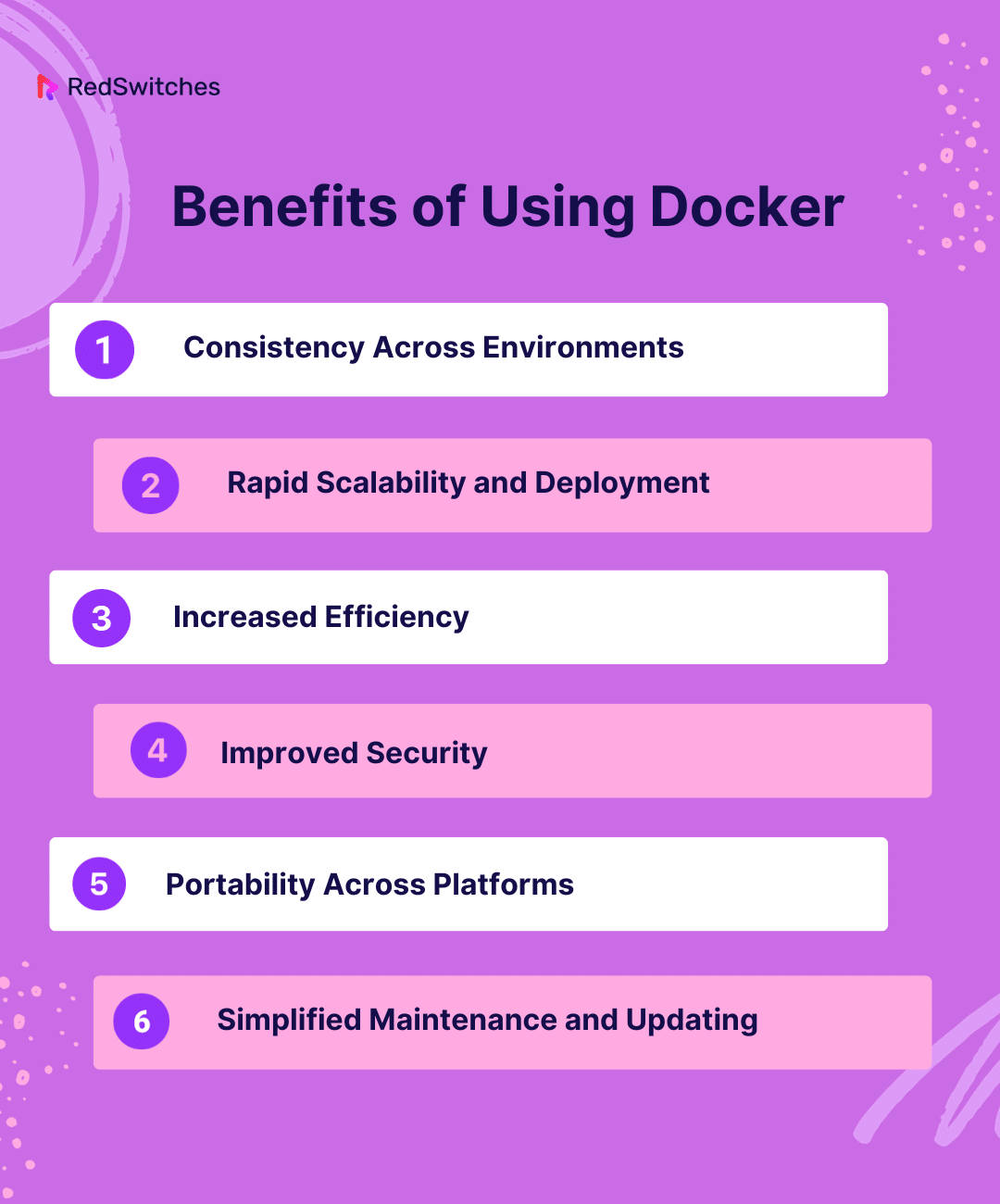

The use of Docker in software development and IT operations brings numerous benefits:

- Consistency Across Environments: Docker ensures consistency across multiple development, testing, and production environments, reducing the complexity and potential issues during deployment.

- Rapid Scalability and Deployment: Docker enables you to scale your application and its underlying infrastructure quickly.

- Increased Efficiency: Containers are lightweight compared to virtual machines, leading to less overhead and more efficient utilization of system resources.

- Improved Security: The isolation of applications in containers increases security. Docker also offers additional security features like image signing and verification.

- Portability Across Platforms: Containers can run across any desktop, data center, or cloud environment, enhancing portability.

- Simplified Maintenance and Updating: Updating or modifying applications is more manageable with Docker, as changes can be made to the container images and then easily deployed.

Organizations and developers can significantly improve their development pipelines and operational efficiency by understanding Docker’s diverse uses and benefits.

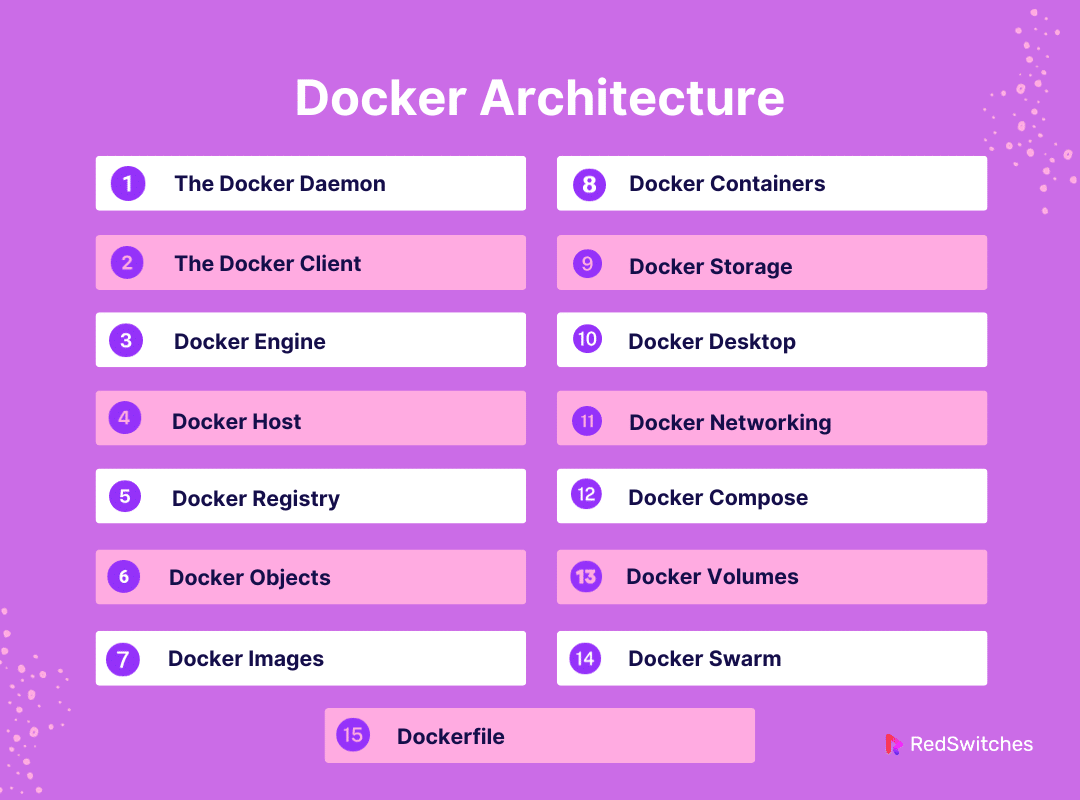

Docker Architecture

Diving deep into the world of containerization, understanding the Docker architecture is crucial for anyone looking to leverage the power of Docker fully. This architecture is not just the backbone of Docker’s functionality; it’s the key to unlocking its full potential. By exploring the Docker architecture, we gain invaluable insights into how Docker manages and orchestrates containers, ensuring efficient, scalable, and isolated application environments.

In this section, we’ll unravel the layers of Docker architecture, explaining each component’s role in the bigger picture and how they interconnect to provide a seamless and robust user experience. Whether you’re a developer, a system administrator, or a tech enthusiast, comprehending Docker’s architecture will empower you to use this technology more effectively and innovatively.


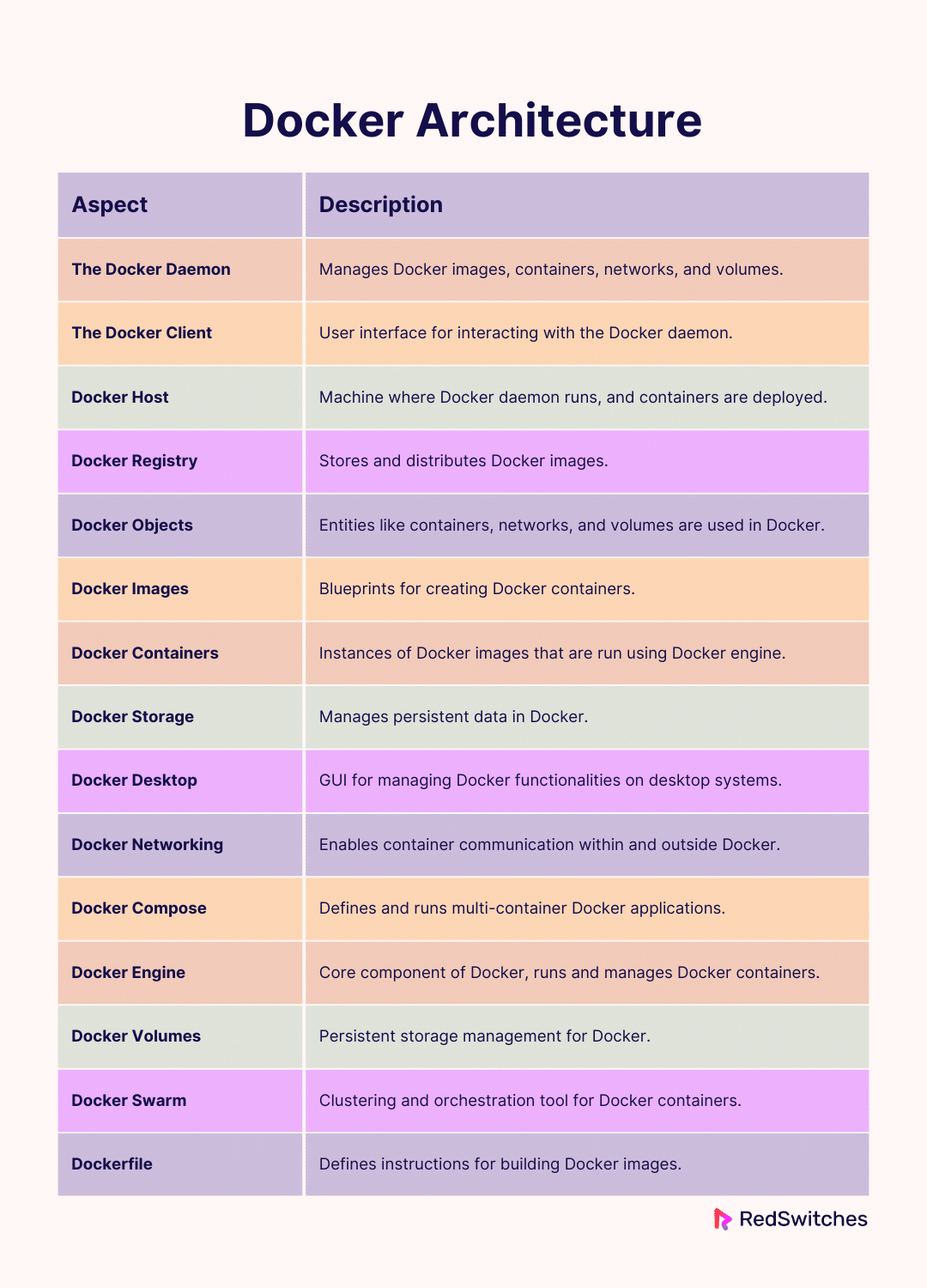

The Docker Daemon

The Docker daemon, dockerd, plays a central role in the Docker architecture. This server-side component creates and manages Docker images, containers, networks, and volumes. It listens for Docker API requests and can also communicate with other daemons to manage Docker services. In short, it is the behind-the-scenes workhorse that does the heavy lifting when users build, deploy, and run containers. Understanding the Docker daemon is crucial for anyone looking to harness Docker’s full capabilities, as it’s responsible for the core operations of Docker’s containerization technology.

The Docker Client

The Docker client, the docker command-line tool, is how most users interact with Docker. When you run commands such as ‘docker run’ or ‘docker build’, the client sends them to ‘dockerd’ through the Docker API, and the daemon carries them out. A single client can communicate with more than one daemon, which makes it a convenient tool for controlling multiple Docker environments. This client-server interaction is fundamental to Docker’s design: the client is a user-friendly front end to the capabilities of the daemon.
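To make the client-server split concrete, here is a minimal sketch of how a few CLI commands could map onto Docker Engine API requests. It is an illustration only: the function name `api_requests` is hypothetical, the mapping is heavily simplified (a real `docker run` may also pull the image and attach to the container), and real request paths are normally prefixed with an API version.

```python
from urllib.parse import urlencode


def api_requests(cli_args):
    """Return simplified (method, path) pairs for a docker CLI command.

    Hypothetical helper for illustration; it sends nothing to a daemon.
    """
    command, *rest = cli_args
    if command == "ps":
        # List containers.
        return [("GET", "/containers/json")]
    if command == "images":
        # List images stored on the Docker host.
        return [("GET", "/images/json")]
    if command == "pull":
        # Ask the daemon to fetch an image from a registry.
        query = urlencode({"fromImage": rest[0]})
        return [("POST", f"/images/create?{query}")]
    if command == "run":
        # A run is (at least) a create followed by a start.
        return [
            ("POST", "/containers/create"),
            ("POST", "/containers/{id}/start"),
        ]
    raise ValueError(f"command not covered by this sketch: {command}")


for method, path in api_requests(["run", "nginx"]):
    print(method, path)
```

The point of the sketch is that the client only translates commands into API calls; all of the actual work happens in the daemon that receives them.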

Docker Engine

Docker Engine is the core of Docker: a client-server application made up of a server-side daemon process (dockerd), a REST API, and a client-side command-line interface (CLI). The Engine accepts commands from the Docker client and manages Docker objects like images, containers, networks, and volumes. Essentially, it’s the runtime that builds and runs your containers. Docker Engine was historically offered in two editions, Community and Enterprise. The Community edition is the free engine suited to individual developers and small teams looking to start with Docker and experiment with container-based apps. The Enterprise edition was designed for larger-scale operations and offered additional management and security features; since Mirantis acquired Docker’s enterprise business in 2019, it has been distributed as Mirantis Container Runtime. Understanding Docker Engine is crucial for anyone using Docker, as it provides the underlying functionality that powers the entire Docker platform.

Docker Host


The Docker host is the host machine (physical or virtual) on which the Docker daemon runs. It provides the runtime environment for Docker containers. The host machine can be your local machine, a cloud server, or any system capable of running the Docker daemon. It’s where all the components of the Docker architecture come together – the daemon, containers, images, volumes, and networks all exist on the Docker host. Understanding the role of the Docker host is critical to effectively deploying and managing Dockerized applications, as it’s the environment where your containers live and operate.
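Because the host can be local or remote, the client needs a way to know which daemon to talk to. The Docker CLI reads the `DOCKER_HOST` environment variable for this. The sketch below shows one way such an address could be interpreted; `resolve_daemon` and the example addresses are hypothetical, and the default shown is the usual Unix socket on Linux.

```python
from urllib.parse import urlparse

# Usual default on Linux; other platforms differ.
DEFAULT_HOST = "unix:///var/run/docker.sock"


def resolve_daemon(env):
    """Return (transport, address) for the daemon a client should contact.

    Hypothetical helper: it only parses the address and opens no connection.
    """
    raw = env.get("DOCKER_HOST") or DEFAULT_HOST
    parsed = urlparse(raw)
    if parsed.scheme == "unix":
        # Local daemon reached through a socket file.
        return ("unix", parsed.path)
    if parsed.scheme in ("tcp", "ssh"):
        # Remote daemon reached over the network.
        return (parsed.scheme, parsed.netloc)
    raise ValueError(f"unsupported DOCKER_HOST value: {raw}")


print(resolve_daemon({}))
print(resolve_daemon({"DOCKER_HOST": "ssh://deploy@build-box"}))
```

Switching the variable is all it takes to point the same client at a different Docker host.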

Docker Registry

The Docker Registry is a crucial component of the Docker architecture, acting as a storage and distribution system for Docker images. It’s where you store, share, and manage Docker images, either publicly or privately. The most well-known example of a Docker Registry is Docker Hub, which hosts thousands of public images that can be used as a starting point for your containers. Registries can also be hosted by third parties or set up privately within your own infrastructure. Understanding how to use and interact with Docker Registries is essential for efficient container management and deployment, allowing you to leverage the vast array of images available and streamline your workflow.
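An image name tells the daemon which registry to contact. As a rough sketch, the function below expands a short name such as `nginx` into registry, repository, and tag, using the familiar defaults (Docker Hub, the `library/` namespace, and the `latest` tag). `parse_image_ref` is a hypothetical helper that ignores digests (`@sha256:...`) and other edge cases handled by the real tooling.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag (simplified)."""
    first, slash, rest = ref.partition("/")
    # The first component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
    # A tag is a colon that appears after the last slash.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, "latest"
    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return ImageRef(registry, repository, tag)


print(parse_image_ref("nginx"))
print(parse_image_ref("localhost:5000/team/app:1.2"))
```

This is why the same pull command works for both public images and images in a private registry: only the name changes.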

Docker Objects

In Docker, objects are the entities used to assemble an application. The most significant Docker objects are images, containers, networks, and volumes. Each object serves a specific purpose:

- Images are read-only templates from which containers are created.

- Containers are instances of Docker images that can be run using the Docker engine.

- Networks in Docker provide the networking interface for containers, allowing them to communicate with each other and the outside world.

- Volumes are used for persisting data generated by and used by Docker containers.

Understanding Docker objects and how they interact is key to effectively using Docker, as they form the basic building blocks of your Docker environment.


Docker Images

Docker images are the blueprints for Docker containers. They are lightweight, standalone, executable software packages that include everything needed to run an application – code, runtime, system tools, system libraries, and settings. Docker images are built from a Dockerfile, a text file that contains instructions for building the image. Once an image is built, it can be shared on a Docker Registry and used to start containers on any Docker host. Knowledge of Docker images is fundamental for creating reproducible and consistent environments for your applications, ensuring they run the same, regardless of where they are deployed.

Docker Containers

Docker containers are the execution environments created from Docker images. They are lightweight, standalone, and secure environments where applications can run. A container is essentially a running instance of an image, isolated from the host system and other containers. It provides everything the application needs to run, including the code, runtime, system tools, and libraries. Containers are designed to be ephemeral – they can be quickly created, started, stopped, moved, and deleted. This flexibility is one of Docker’s key strengths, as it allows for easy scaling and updating of applications. Understanding how to manage Docker containers, including their lifecycle and interactions with other objects, is crucial for anyone working with Docker.
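The container lifecycle described above can be pictured as a small state machine. The sketch below is a toy model, not Docker’s implementation: the command names match the CLI (`start`, `stop`, `pause`, `unpause`, `rm`), but the transition table is simplified and `removed` is a label invented here for a deleted container.

```python
# (current state, command) -> next state; simplified on purpose.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


def apply(state, command):
    """Return the next state, or raise if the command is not allowed."""
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"cannot {command} a container that is {state}") from None


def run_sequence(commands, state="created"):
    """Apply several commands in order, starting from a freshly created container."""
    for command in commands:
        state = apply(state, command)
    return state


print(run_sequence(["start", "pause", "unpause", "stop", "rm"]))
```

Note that the model refuses to remove a running container, mirroring the CLI, which requires the container to be stopped first unless removal is forced.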

Docker Storage


Docker storage is an essential aspect of Docker architecture, dealing with how data is stored and managed in the Docker environment. Docker provides several options for storage, including:

- Volumes: The recommended way to persist data in Docker. Volumes are stored in a part of the host filesystem that Docker manages, and other processes on the host should not modify that area directly.

- Bind Mounts: They map any file or directory on the host system, outside the Docker-managed area, into a container. This is useful for sharing configuration files or other resources between the host and the container.

- tmpfs Mounts: A temporary storage option stored in the host system’s memory only, not on the disk, making it fast but ephemeral.

- Storage Drivers: Docker uses storage drivers to manage the contents of the container layers. Each storage driver handles the implementation differently, but they all provide a layering system for containers.

Understanding Docker storage is essential for effectively managing data within your Docker environment, ensuring data persistence, and optimizing performance.
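The three mount types differ mainly in what they need as a source. As a sketch, the helper below assembles the `--mount` argument that `docker run` accepts for each type; `mount_flag` and the example paths are hypothetical, and only a few of the real option keys are covered.

```python
def mount_flag(kind, target, source=None, readonly=False):
    """Build a `--mount` argument pair for `docker run` (simplified)."""
    if kind not in ("volume", "bind", "tmpfs"):
        raise ValueError(f"unknown mount type: {kind}")
    if kind == "bind" and not source:
        raise ValueError("a bind mount needs a host path as its source")
    if kind == "tmpfs" and (source or readonly):
        raise ValueError("a tmpfs mount lives in memory and takes no source")
    parts = [f"type={kind}"]
    if source:
        # Volume name for volumes, host path for bind mounts.
        parts.append(f"source={source}")
    parts.append(f"target={target}")
    if readonly:
        parts.append("readonly")
    return ["--mount", ",".join(parts)]


print(mount_flag("volume", "/var/lib/postgresql/data", source="db-data"))
print(mount_flag("bind", "/etc/app", source="/srv/app/config", readonly=True))
print(mount_flag("tmpfs", "/scratch"))
```

A volume is referred to by name, a bind mount by host path, and a tmpfs mount has no source at all because nothing is written to disk.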

Docker Desktop

Docker Desktop is an easy-to-use application for managing Docker, making the platform more accessible, especially for newcomers. Available for Windows, Mac, and Linux, Docker Desktop bundles Docker Engine, the Docker CLI client, Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper. It provides a graphical user interface (GUI) to manage Docker containers, images, volumes, and networks, simplifying the process of running Docker on desktop systems. Docker Desktop also facilitates the building and sharing of containerized applications and microservices, making it a valuable tool for developers looking to streamline their Docker workflows. For those just starting with Docker, Docker Desktop is an excellent way to become familiar with Docker’s capabilities without delving deep into command-line operations.


Docker Networking

Networking is a vital part of Docker architecture: it allows containers to communicate with each other and the outside world. Docker’s networking capabilities are robust and versatile, providing several options to suit different needs:

- Bridge Network: The default network driver. It is suitable for communication between containers on the same host.

- Host Network: Removes network isolation between the container and the Docker host, allowing the container to use the host’s networking directly.

- Overlay Network: Enables network communications between containers on different Docker hosts, commonly used in Docker Swarm setups.

- Macvlan Network: Allows containers to appear as physical devices on the network, with their own MAC addresses.

- None Network: Disables all networking for the container, useful for containers that don’t require network connections.

Understanding Docker networking is key to deploying scalable and secure applications, ensuring the right level of communication and isolation as per your project’s needs.
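One way to read the list above is as a decision procedure. The sketch below encodes that reading; `pick_network_driver` and its parameters are hypothetical, and real deployments weigh more factors than these four flags.

```python
def pick_network_driver(*, needs_network=True, multi_host=False,
                        own_mac_address=False, share_host_stack=False):
    """Suggest a network driver from a few coarse requirements (illustrative)."""
    if not needs_network:
        return "none"        # no networking at all
    if multi_host:
        return "overlay"     # containers spread across Docker hosts
    if own_mac_address:
        return "macvlan"     # container appears as a device on the LAN
    if share_host_stack:
        return "host"        # no isolation from the host's network
    return "bridge"          # default: containers on one host


def run_args(image, network):
    """Build a `docker run` command that attaches a container to a network."""
    return ["docker", "run", "--network", network, image]


driver = pick_network_driver()
print(driver, run_args("nginx:alpine", driver))
```

The built-in `bridge`, `host`, and `none` networks can be passed to `--network` directly, while overlay and macvlan networks are typically created first and then referenced by the name you gave them.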

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. It lets you use a YAML file to configure your application’s services, networks, and volumes, which makes complex applications with multiple containers much simpler to manage. With Docker Compose, you can define the entire stack in a single file and then spin up your application with a single command (docker compose up, or docker-compose up with the older standalone tool). This not only streamlines the development process but also ensures consistency across environments. Compose is particularly useful in development, testing, and staging environments, as well as in CI workflows. For developers looking to manage and deploy multi-container applications efficiently, Docker Compose is an indispensable part of the Docker toolkit.
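To show what such a file can contain, the sketch below describes a two-service stack as a Python dictionary and prints it as Compose-style YAML. Everything specific here is an assumption for illustration: the service names, images, port mapping, and password are made up, and `to_yaml_lines` is a tiny hand-written emitter that handles only this simple shape, not a general YAML library.

```python
def to_yaml_lines(node, indent=0):
    """Render nested dicts and lists of strings as YAML lines (minimal emitter)."""
    pad = "  " * indent
    lines = []
    if isinstance(node, dict):
        for key, value in node.items():
            if isinstance(value, (dict, list)) and value:
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml_lines(value, indent + 1))
            elif isinstance(value, dict):
                lines.append(f"{pad}{key}: {{}}")   # empty mapping
            else:
                lines.append(f"{pad}{key}: {value}")
    else:
        for item in node:
            # Quote list items so values like 8080:80 stay strings.
            lines.append(f'{pad}- "{item}"')
    return lines


# Hypothetical stack: a web server in front of a database.
stack = {
    "services": {
        "web": {
            "image": "nginx:alpine",
            "ports": ["8080:80"],
            "depends_on": ["db"],
        },
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "example"},
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
    },
    "volumes": {"db-data": {}},
}

print("\n".join(to_yaml_lines(stack)))
```

Saved as a Compose file, output of this shape declares both services, the network wiring between them (Compose creates a default network), and the named volume in one place.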

Docker Volumes

Docker volumes are the main mechanism for managing persistent data generated by and used by Docker containers. Unlike data written inside a container, which is lost when the container is removed, volumes persist independently of the container’s lifecycle. This makes volumes ideal for databases and other important data that must survive container restarts and updates. Volumes are stored in a part of the host filesystem managed by Docker (/var/lib/docker/volumes/ on Linux). They can be shared more safely among multiple containers than bind mounts, and they work with both Linux and Windows containers. Docker volumes also support driver plugins, allowing you to store data on remote hosts or cloud providers, enhancing the flexibility and scalability of data storage in Docker.


Docker Swarm

Docker Swarm is Docker’s native clustering and orchestration tool. It turns a group of Docker hosts into a single, virtual Docker host, providing a standard Docker API for managing its resources. With Docker Swarm, you can quickly deploy, scale, and manage large numbers of containers. It offers load balancing, decentralized design, and secure communication between nodes, making it ideal for high-availability and high-scalability environments. Docker Swarm is designed to be easy to use, highly secure, and performant, effectively orchestrating container deployments. Docker Swarm is an invaluable tool for managing multiple containers across different hosts, simplifying complex container management tasks into a few straightforward commands.
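The “few straightforward commands” can be sketched as follows. The helpers below only assemble command lines for initializing a swarm, creating a replicated service, and scaling it; the helper names, the service name, the image, and the address (taken from a documentation-only IP range) are all assumptions, and nothing is executed.

```python
import shlex


def swarm_init(advertise_addr):
    """Command that turns the current Docker host into a swarm manager."""
    return ["docker", "swarm", "init", "--advertise-addr", advertise_addr]


def service_create(name, image, replicas, published, target):
    """Command that starts a replicated service behind a published port."""
    return [
        "docker", "service", "create",
        "--name", name,
        "--replicas", str(replicas),
        "--publish", f"published={published},target={target}",
        image,
    ]


def service_scale(name, replicas):
    """Command that changes the number of replicas of a running service."""
    return ["docker", "service", "scale", f"{name}={replicas}"]


for command in (
    swarm_init("192.0.2.10"),
    service_create("web", "nginx:alpine", 3, 8080, 80),
    service_scale("web", 5),
):
    print(shlex.join(command))
```

In a real cluster, additional hosts would join with the token printed by the init command, and the swarm would then spread the service’s replicas across the available nodes.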

Dockerfile

A Dockerfile is a fundamental component of the Docker ecosystem, serving as a blueprint for building Docker images. It’s a text file containing the instructions used to assemble an image. Instructions that change the filesystem, such as RUN, COPY, and ADD, each add a layer to the image, and layers are cached and reused for efficiency. The Dockerfile starts with a base image and executes commands to install programs, copy files, and set up environments.
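Why instruction order matters for caching can be shown with a toy model. In the sketch below, each build step gets a key derived from its own text and the key of the step before it, so changing one step invalidates every step after it. This is a simplification under stated assumptions: the real builder also considers the contents of copied files, and `layer_keys` and `reusable_layers` are hypothetical names.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction, chained to the previous key."""
    keys = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(digest)
        parent = digest
    return keys


def reusable_layers(old, new):
    """Count how many leading steps of `new` could reuse the cache from `old`."""
    count = 0
    for old_key, new_key in zip(layer_keys(old), layer_keys(new)):
        if old_key != new_key:
            break
        count += 1
    return count


original = [
    "FROM python:3.12-slim",
    "COPY requirements.txt /app/",
    "RUN pip install -r /app/requirements.txt",
    "COPY . /app/",
]
edited_last = original[:3] + ["COPY ./src /app/"]
edited_early = [original[0], "COPY requirements-dev.txt /app/"] + original[2:]

print(reusable_layers(original, edited_last))
print(reusable_layers(original, edited_early))
```

In this model an edit to the last step keeps the first three steps cached, while an edit to the second step leaves only the base image reusable, which is why rarely changing steps are usually placed near the top of a Dockerfile.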

Key components of a Dockerfile include:

- FROM: Specifies the base image from which you are building.

- RUN: Executes commands in a new layer on top of the current image.

- COPY: Copies files and directories from the build context (typically the directory you run the build from) into the image.

- ADD: Similar to COPY, but it can also fetch files from remote URLs and automatically unpack local tar archives.

- CMD: Provides the default command and arguments that run when a container starts.

- EXPOSE: Informs Docker that the container listens on the specified network ports.

- ENV: Sets environment variables.

- ENTRYPOINT: Configures a container to run as an executable.

Understanding how to use a Dockerfile effectively is crucial for anyone creating custom Docker images. It brings automation, repeatability, and consistency to image creation, making it a cornerstone of efficient containerization with Docker.
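As a minimal sketch of how these instructions fit together, the function below renders a small Dockerfile from a handful of parameters. The base image, file names, port, and command are assumptions chosen for illustration, and `render_dockerfile` is a hypothetical helper rather than part of any Docker tooling.

```python
import json


def render_dockerfile(base_image, env, copies, run_commands, port, cmd):
    """Return Dockerfile text using FROM, ENV, COPY, RUN, EXPOSE, and CMD."""
    lines = [f"FROM {base_image}"]
    for key, value in env.items():
        lines.append(f"ENV {key}={value}")
    for source, destination in copies:
        lines.append(f"COPY {source} {destination}")
    for command in run_commands:
        lines.append(f"RUN {command}")
    lines.append(f"EXPOSE {port}")
    # Exec form: a JSON array, so the command runs without a shell.
    lines.append("CMD " + json.dumps(cmd))
    return "\n".join(lines) + "\n"


text = render_dockerfile(
    base_image="python:3.12-slim",
    env={"PYTHONUNBUFFERED": "1"},
    copies=[("requirements.txt", "/app/"), ("app.py", "/app/")],
    run_commands=["pip install --no-cache-dir -r /app/requirements.txt"],
    port=8000,
    cmd=["python", "/app/app.py"],
)
print(text)
```

Writing the result to a file named Dockerfile and running a build against that directory would produce an image, provided the referenced files exist in the build context.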


The table below gives a concise overview of each component’s role and significance in the Docker architecture ecosystem.

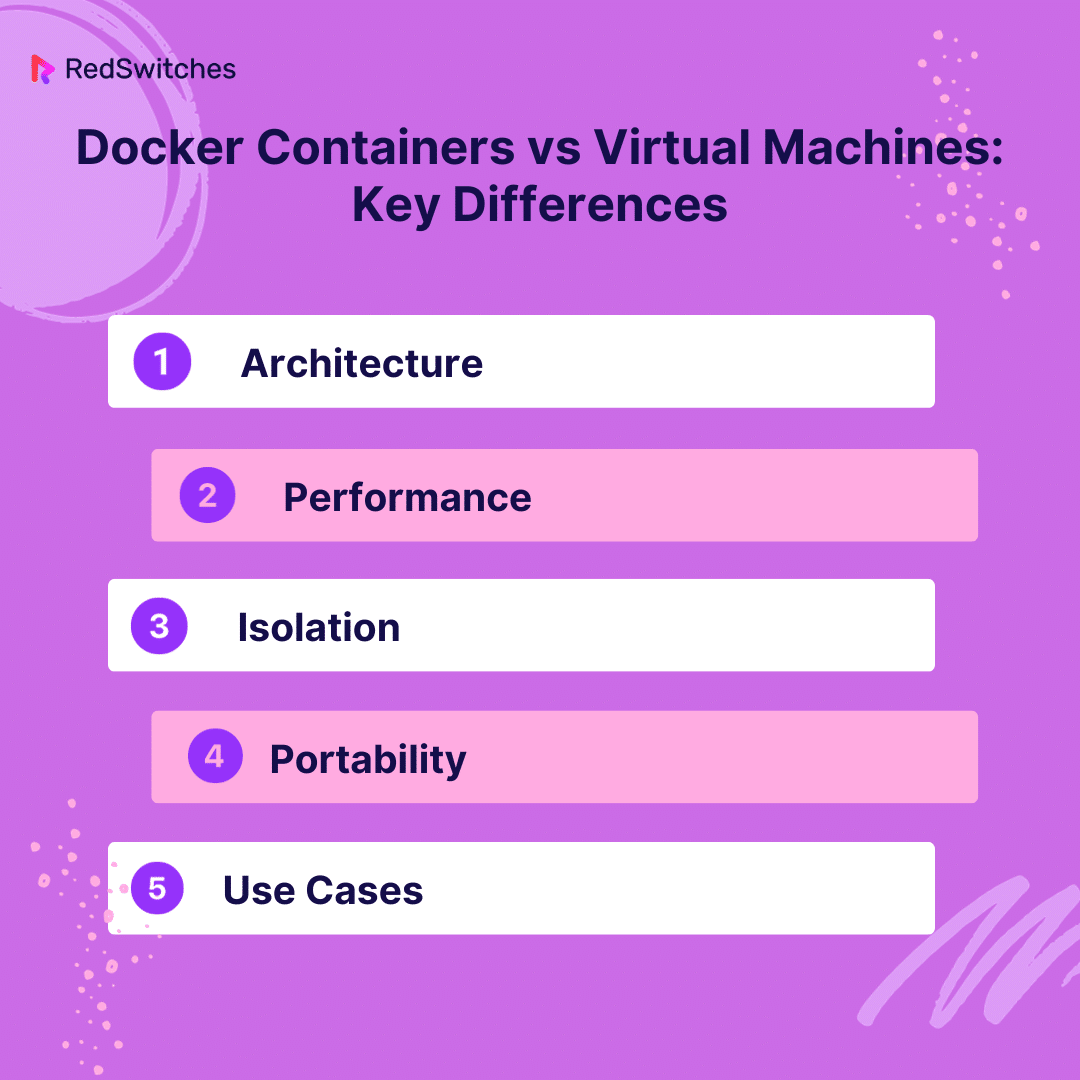

Docker Containers vs Virtual Machines

In software development and deployment, comparing Docker containers and virtual machines (VMs) is a topic of significant interest. Understanding the differences between these two technologies is crucial for making informed decisions about your infrastructure and deployment strategies.

Key Differences

- Architecture:

  - Docker Containers: Containers share the host system’s kernel but are isolated regarding processes, memory, and other resources. They are lightweight, start faster, and require fewer resources than VMs.

  - Virtual Machines: VMs include not only the application and necessary binaries and libraries but also an entire guest operating system. This makes them more resource-intensive and slower to start.

- Performance:

  - Lightweight containers provide better performance and resource efficiency than VMs. They enable more applications to run on the same hardware unit.

- Isolation:

  - VMs provide strong isolation by running a separate kernel and operating system instance. Containers, while isolated, share the host system’s kernel, which can be a concern from a security standpoint.

- Portability:

  - Docker containers are highly portable across different environments as they encapsulate the application and its environment. VMs are less portable due to their dependency on the guest operating system.

- Use Cases:

  - Containers are ideal for microservices architecture, CI/CD implementation, and where density, efficiency, and speed are required.

  - VMs are better suited for applications requiring full isolation, extensive OS-level operations, or running applications on multiple OS platforms.

Advantages and Disadvantages


Docker Containers:

- Advantages: Efficient resource utilization, faster startup times, and easier scalability and replication.

- Disadvantages: Less isolation, potential security risks, and dependency on the host operating system’s kernel.

Virtual Machines:

- Advantages: Strong isolation, broader compatibility with different OS types, and suitable for legacy applications.

- Disadvantages: Higher resource usage, slower boot times, and less efficient scaling.


In conclusion, the choice between Docker containers and VMs depends on the application’s specific needs, the required isolation level, and the infrastructure in place. Both have their place in the IT landscape and often, organizations find a hybrid approach that uses both technologies to be the most effective.

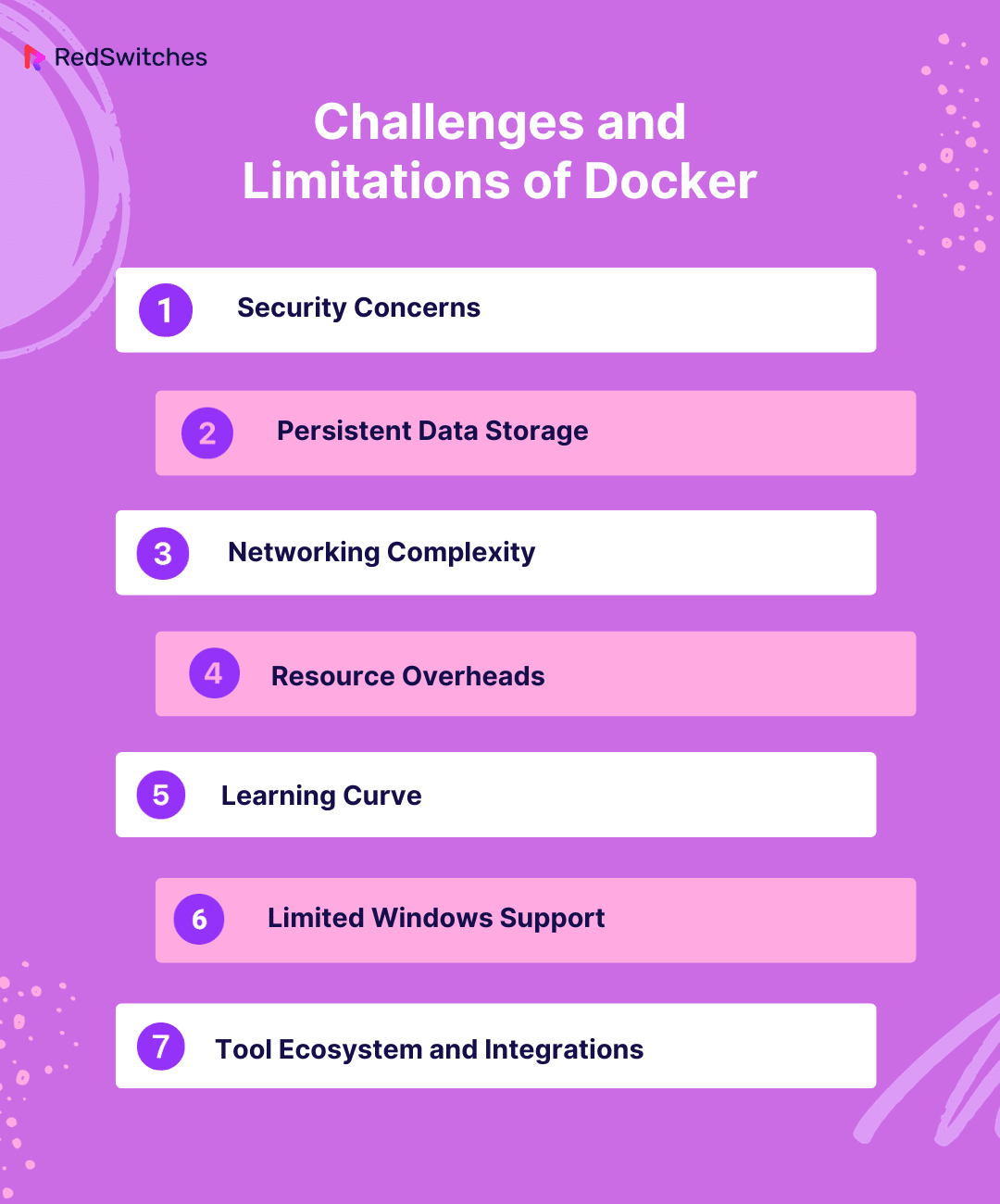

Challenges and Limitations of Docker

While Docker offers numerous benefits and has revolutionized containerization and application deployment, it is not without its challenges and limitations. Understanding these is crucial for effectively using Docker and planning how to integrate it into your workflows.

1. Security Concerns

- Container Isolation: Although Docker provides isolation between containers, it is not as strong as the isolation provided by virtual machines. If not properly secured, containers may become vulnerable to attacks.

- Image Security: Relying on third-party images from public registries can pose security risks. Ensuring that images are scanned for vulnerabilities and come from trusted sources is essential.

2. Persistent Data Storage

- Containers are ephemeral by default, which makes persistent data storage a challenge. While Docker volumes offer a solution, managing data persistence across multiple containers and hosts can be complex.

3. Networking Complexity

- Setting up networking for Docker, especially in large-scale deployments or in Docker Swarm, can become complicated. Networking issues can arise, requiring in-depth knowledge to troubleshoot.

4. Resource Overheads

- While containers are lightweight compared to virtual machines, they still consume resources. In high-density deployments, the cumulative resource usage can be significant.

5. Learning Curve

- Docker’s concepts, architecture, and command-line interface can overwhelm beginners. The learning curve can be steep, especially for teams transitioning from traditional deployment methods.

6. Limited Windows Support

- Docker primarily targets Linux. While it supports Windows, there are limitations and differences in how containers behave on Windows, which can lead to compatibility issues.

7. Tool Ecosystem and Integrations

- Navigating Docker’s ecosystem of tools and integrations requires time and expertise. The complexity increases when integrating Docker into existing CI/CD pipelines and DevOps practices.

These challenges highlight the importance of thorough planning, continuous learning, and robust security practices when adopting Docker in your projects. By being aware of these limitations, teams can better prepare and leverage Docker’s capabilities.


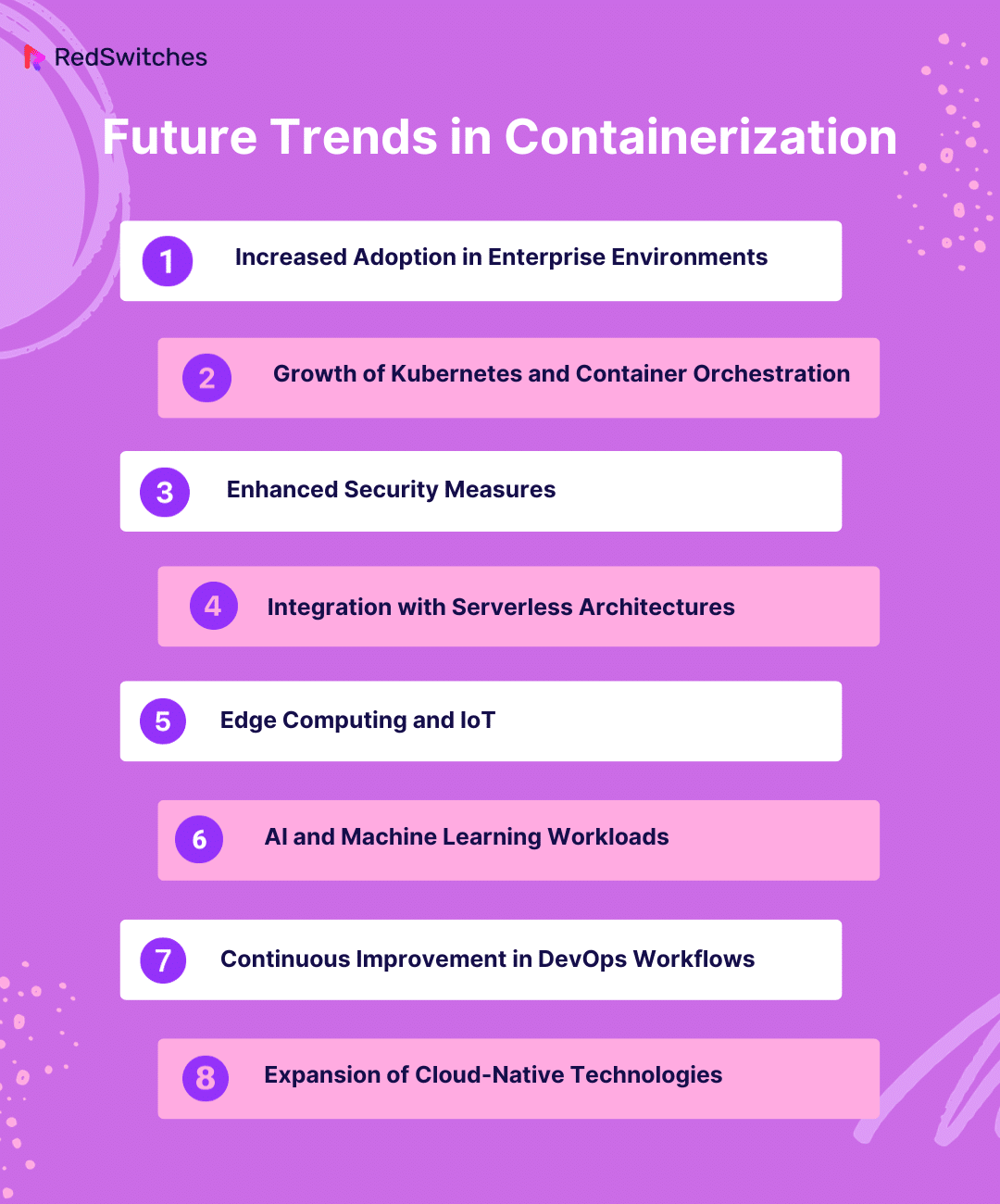

Future Trends in Containerization

Containerization, led by platforms like Docker, is rapidly evolving, reshaping the software development and deployment landscape. Several key trends emerge as we look to the future, pointing to an exciting and transformative road ahead.

1. Increased Adoption in Enterprise Environments

Adoption of containerization in enterprise environments is expected to increase, driven by its efficiency, portability, and scalability. Large organizations will likely continue integrating containerized applications into their infrastructure to enhance agility and reduce costs.

2. Growth of Kubernetes and Container Orchestration

Kubernetes has become the de facto standard for container orchestration. Its growth is expected to continue, with more tools and services integrating with Kubernetes to enhance orchestration capabilities.

3. Enhanced Security Measures

As containers become more prevalent, security will become a greater focus. Expect advancements in container-specific security tools and practices, addressing vulnerabilities and providing robust protection for containerized applications.

4. Integration with Serverless Architectures

The convergence of containerization and serverless computing is a trend to watch. This combination can provide even greater scalability and efficiency, leading to more sophisticated, cloud-native applications.

5. Edge Computing and IoT

With the rise of edge computing and IoT, containerization is set to play a key role. Containers, with their lightweight and portable nature, are ideal for deploying applications in edge computing environments, driving innovation in IoT solutions.

6. AI and Machine Learning Workloads

The use of containers for AI and machine learning workloads is growing. Containers provide a flexible, scalable environment for developing and deploying AI models, and this trend is expected to accelerate.

7. Continuous Improvement in DevOps Workflows

Containerization will continue influencing DevOps, promoting more efficient workflows and continuous deployment practices. This will lead to faster development cycles and more responsive software delivery.

8. Expansion of Cloud-Native Technologies

The future will see a deeper integration of containerization with cloud-native technologies, leading to more resilient, manageable, and observable systems.

The future of containerization is bright and full of potential. It promises to not only streamline application deployment but also to foster innovation across different sectors. Staying abreast of these trends will be essential for organizations looking to leverage the full potential of containerization.

Conclusion

As we’ve navigated the intricate and dynamic world of Docker architecture and containerization, it’s clear that this technology is not just a fleeting trend but a cornerstone of modern software development and deployment. From its efficient architecture to the emerging trends that promise even more innovation and flexibility, Docker continues to shape the future of IT infrastructure.

As exciting as these developments are, harnessing the full potential of Docker architecture and containerization requires a reliable and robust platform. This is where RedSwitches comes into play. With RedSwitches, you can elevate your Docker experience to new heights. Whether deploying complex applications, embracing microservices, or experimenting with cutting-edge containerized solutions, RedSwitches provides the perfect environment to ensure your projects are successful, secure, and scalable.

Take the next step in your Docker journey with RedSwitches. Explore our range of services, tailor-made for your containerization needs, and join a community of experts and innovators shaping the future of technology.

FAQs

Q. What is Docker engine architecture?

Docker Engine architecture is a client-server model comprising the Docker Daemon (server), Docker Client, and REST API for communication. It manages containers, images, networks, and storage volumes.

Q. Is Docker Engine different from Docker?

Docker Engine is a part of Docker, specifically the core software that creates and runs Docker containers. Docker, as a whole, includes the Engine and other tools and services.

Q. What is the structure of the Docker engine?

The Docker Engine’s structure includes the Docker Daemon (handles Docker objects), Docker Client (CLI for user interaction), and REST API (interface for communication between client and daemon).

Q. Is Docker Engine a Docker daemon?

Docker Engine includes the Docker daemon, but they are not the same. The daemon is a part of the Engine responsible for creating and managing Docker objects.

Q. What is the purpose of Docker engine?

Docker Engine provides the runtime for building and running containers and for managing Docker images, networks, and volumes. Through its built-in Swarm mode, it can also handle container orchestration.

Q. What architectures support Docker engine?

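Docker Engine supports multiple CPU architectures, including x86-64 (amd64), 32-bit and 64-bit ARM, and others such as s390x and ppc64le. Which of these are available depends on the operating system and distribution, but the range makes Docker usable across a wide variety of hardware.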
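
As a rough illustration of how these architectures are named in practice, the sketch below maps a machine name (as reported by `uname -m` or Python's `platform.machine()`) to the platform string Docker uses, such as `linux/amd64`. The table and the `docker_platform` helper are hypothetical and deliberately incomplete; they are not part of Docker itself.

```python
import platform

# Illustrative mapping from common machine names to the architecture part of
# a Docker platform string. This table is an assumption for the example and
# is not exhaustive.
MACHINE_TO_DOCKER_ARCH = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "armv7l": "arm/v7",
    "ppc64le": "ppc64le",
    "s390x": "s390x",
}


def docker_platform(machine=None, os_name="linux"):
    """Return a platform string such as 'linux/amd64' for a machine name.

    If no machine name is given, the current host's name is used.
    """
    machine = (machine or platform.machine()).lower()
    arch = MACHINE_TO_DOCKER_ARCH.get(machine)
    if arch is None:
        raise ValueError(f"no known Docker architecture name for {machine!r}")
    return f"{os_name}/{arch}"
```

A string produced this way is the kind of value that the `--platform` option of commands like docker pull and docker run accepts.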

Q. What is the architecture of Docker?

Docker follows a client-server architecture, where the Docker client communicates with the Docker daemon to execute commands. The daemon, in turn, manages Docker objects such as images, containers, networks, and volumes.

Q. Can you explain the components of Docker architecture?

The Docker architecture consists of the Docker client, Docker daemon, Docker host, Docker objects (images, containers, networks, volumes), and Docker registries. The client interacts with the daemon to perform various operations, while the host provides the complete environment for running Docker applications.

Q. What does the client-server architecture of Docker entail?

In the client-server architecture of Docker, the client sends commands to the Docker daemon, and the daemon executes them. The client can be local or remote, communicating with the daemon using REST API calls.

Q. How does the communication between Docker client and daemon work?

When you run commands such as docker pull, docker run, or docker push, the Docker client translates them into Docker API requests and sends them to the Docker daemon. The client and daemon communicate over a Unix socket or a network interface.
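
To make the client-daemon exchange more concrete, here is a minimal sketch of a hand-written client that sends one REST request to the daemon over a Unix socket using only the Python standard library. It assumes the daemon listens on `/var/run/docker.sock` (the usual Linux default) and that the current user is allowed to read that socket; the `UnixHTTPConnection` class and `daemon_get` function are names invented for this example, not part of any Docker library.

```python
import http.client
import json
import socket

# Assumed default socket path on Linux; other setups may differ.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon; return (status code, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

On a machine with a running daemon, `daemon_get("/version")` should return the same kind of information that docker version prints for the server, since the CLI obtains it through this API.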

Q. What is the role of Docker Hub in Docker architecture?

Docker Hub is a public registry that hosts a wide range of Docker images. Users can pull images from it and push their own images to repositories there, which makes it a central part of the Docker ecosystem.
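
One way to see Docker Hub's role as the default registry is to look at how a short image name expands. The sketch below is a simplified approximation of that expansion: a bare name such as `ubuntu` is treated as `docker.io/library/ubuntu:latest`. The `normalize_image_ref` function is hypothetical, ignores digests (`@sha256:...`), and is not Docker's actual parser.

```python
def normalize_image_ref(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified rules, assumed for illustration:
    - a colon after the last slash separates the tag (default "latest");
    - a first path component containing "." or ":" (or equal to
      "localhost") is a registry host, otherwise Docker Hub is assumed;
    - single-name Docker Hub images live under "library/".
    """
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    # A colon before the last slash belongs to a registry port, not a tag.
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            repository = "library/" + repository
    return registry, repository, tag
```

Under these rules, `myuser/app:v2` resolves to Docker Hub, while `localhost:5000/app` points at a registry running on the local machine.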

Q. How does a remote Docker setup function within the Docker architecture?

A remote Docker setup involves the Docker client interacting with a remote Docker daemon, which can be situated on a different machine. This setup enables users to manage Docker resources on a remote host.
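
The Docker CLI can be pointed at a remote daemon through the `DOCKER_HOST` environment variable, using values such as `tcp://host:port` or `ssh://user@host`. The sketch below shows how a client might interpret such a value. The `resolve_docker_host` function is a hypothetical helper, and the defaults it applies (the Linux socket path, port 2375 for unencrypted TCP, port 22 for SSH) are conventional values assumed for this example.

```python
import os
from urllib.parse import urlparse

# Assumed default when DOCKER_HOST is not set (typical on Linux).
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_docker_host(environ=None):
    """Return (transport, address, port) describing where the daemon lives.

    For a Unix socket the address is a filesystem path and the port is None.
    """
    environ = os.environ if environ is None else environ
    raw = environ.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST
    parsed = urlparse(raw)

    if parsed.scheme == "unix":
        return ("unix", parsed.path, None)
    if parsed.scheme == "tcp":
        # 2375 is the conventional unencrypted port; TLS setups commonly use 2376.
        return ("tcp", parsed.hostname, parsed.port or 2375)
    if parsed.scheme == "ssh":
        return ("ssh", parsed.hostname, parsed.port or 22)
    raise ValueError(f"unsupported DOCKER_HOST value: {raw!r}")
```

For example, with `DOCKER_HOST=tcp://192.168.1.10:2376` the function returns `("tcp", "192.168.1.10", 2376)`, and with the variable unset it falls back to the local socket. An unencrypted TCP endpoint should only be exposed on a trusted network.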

Q. How is Docker architecture structured for communication between components?

Communication in the Docker architecture starts with the client sending requests to the Docker daemon, a background process that manages Docker resources. The client and daemon can run on the same host or on different hosts.

Q. What are the key elements of the Docker architecture explained in detail?

The key elements of Docker architecture include the Docker client, which sends commands to the Docker daemon; the daemon, which builds, runs, and manages Docker objects such as images, containers, networks, and volumes; and the host, which provides the complete environment for Docker applications.

Q. How is the communication with Docker Hub facilitated in the Docker architecture?

By default, Docker is configured to use Docker Hub as its registry. Commands such as docker search and docker pull therefore look for images on Docker Hub unless another registry is specified, giving users access to the wide array of images on the public registry.

Q. Can you describe the working of the remote Docker daemon in Docker architecture?

A remote Docker daemon allows users to manage resources on a separate host. The Docker client communicates with the remote daemon to execute commands and manage Docker containers, images, and other objects.