If you’ve been trying to determine the differences between Kubernetes and Docker, you’re in luck! Kubernetes and Docker are closely related and go hand-in-hand in a virtualized environment.

This article covers the major aspects of the Kubernetes vs Docker debate and highlights the crucial differences between the two container technologies. Then, we’ll go into the details of security and container orchestration so that you have enough information to choose the option that best fits your needs.

So, let’s jump right in and start demystifying the core aspects of the Docker vs Kubernetes debate!

But first, let’s start with containers.

Table of Contents

- Docker: Understanding Containers

- What is Docker?

- What are Docker’s Key Features?

- The Benefits Docker Brings to Your Operations

- The Disadvantages of Using Docker

- What is Kubernetes?

- Eight Essential Kubernetes Features

- Boost Your Infrastructure with Kubernetes: Let’s Talk Pros

- Drawbacks of Kubernetes

- Kubernetes vs Docker Swarm

- Conclusion: Which Technology Should You Use – Kubernetes or Docker?

- FAQ-Kubernetes or Docker

Docker: Understanding Containers

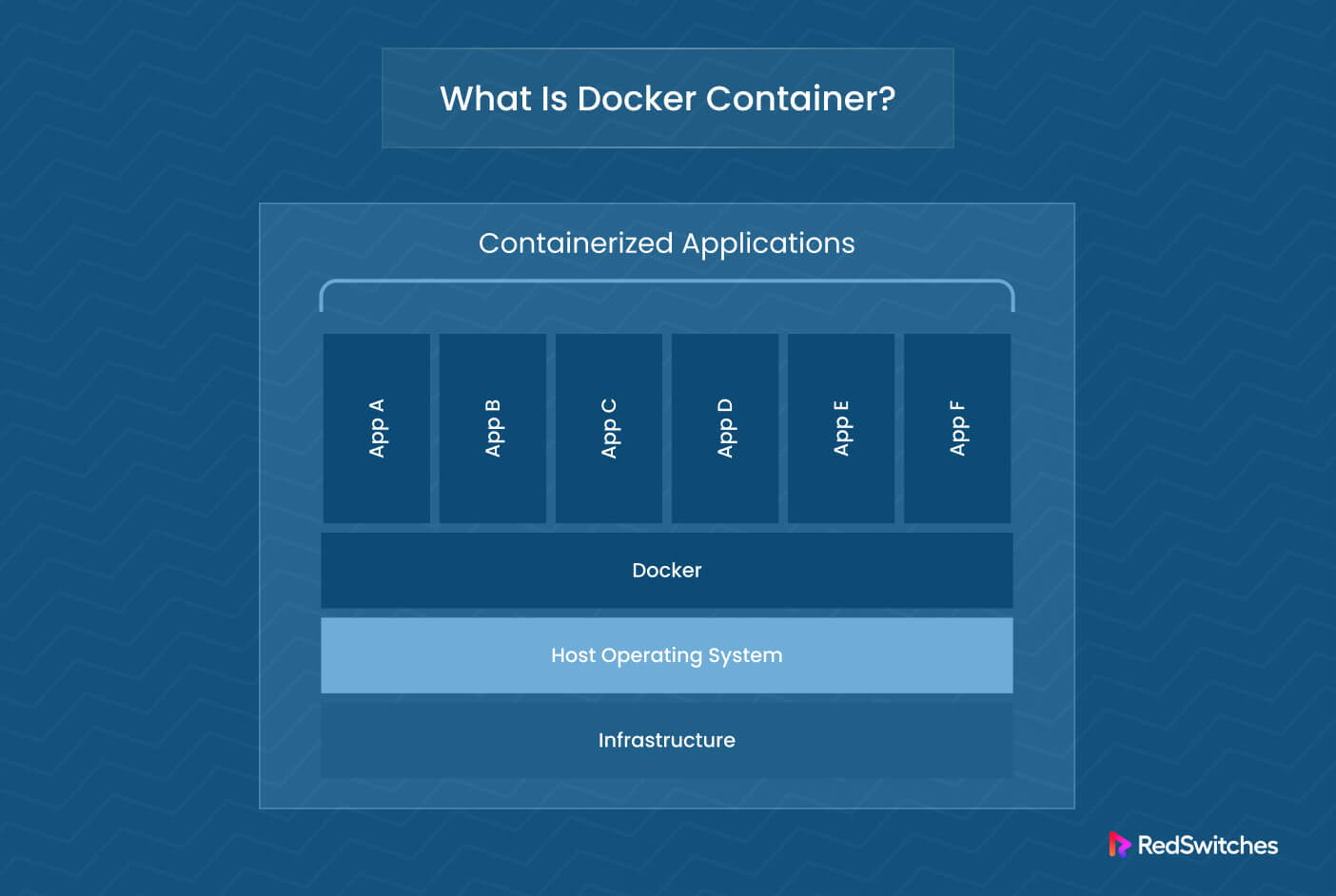

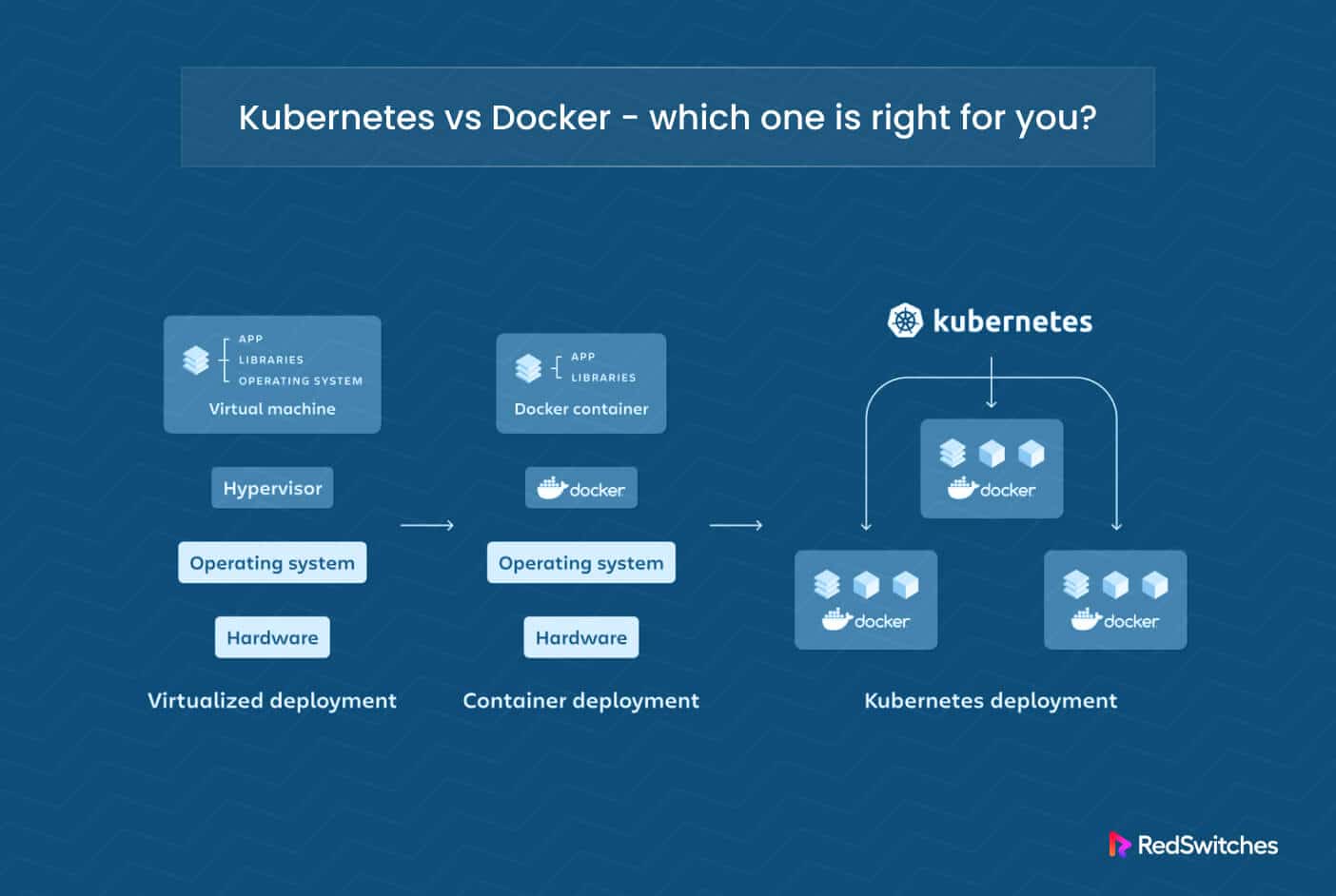

Containers have become an increasingly popular virtualization technology in recent years. Packaging applications in containers saves time and money during development and deployment.

In practical terms, containers are virtualized environments that allow users to isolate application components from other code running on the system. They offer complete control over individual components within a package (core code and libraries), allowing for greater scalability than with traditional virtual machines (VMs).

Containers are compact and lightweight compared to full VMs, requiring far fewer system resources for execution.

Now that you have a clear understanding of containers, let’s start the Kubernetes vs Docker debate with an introduction to Docker, a popular platform for deploying containers.

What is Docker?

Docker (a product of Docker, Inc.) is a popular open-source containerization platform that developers use to quickly build, deploy, and run modern applications in virtual containers.

With Docker, you can create and deploy apps with minimal effort using “images” containing all necessary components (core files, assets, libraries, and code). It also cleanly isolates each application from one another, ensuring security and stability without requiring any active intervention. As a result, you can quickly deploy and scale individual containers (and the deployed apps) without worrying about resource allocation and security issues.

The strength of Docker lies in commands such as docker exec (for running commands inside a running container) and docker system prune (for removing unused containers, images, and other resources), and in components such as Docker Swarm for cluster management.

If you’re interested in setting up Docker on CentOS 7, check out our detailed guide that covers installation and setup.

Is Docker Free?

The core Docker Engine is open source and free to use, and it includes Swarm mode for basic container orchestration. Docker Desktop is free for personal use, while paid offerings provide additional features like Docker container image security scanning and role-based access control.

Get Docker up and running on your preferred platform with our guides to install Docker on Windows, Docker on Mac, and install Docker on Debian.

What is Docker Used For?

Docker is widely used for building, packaging, and deploying containerized applications.

Here are four critical components of any Docker deployment:

- Docker Engine software allows you to create and manage containers.

- A Docker container is a lightweight, standalone, and executable package that includes the code, runtime, system tools, libraries, and dependencies needed to run an application.

- A Dockerfile is a text file that contains a set of instructions for building a Docker image. It specifies the base image to use, the files to include, and the commands to run.

- Docker Hub is a cloud-based repository where Docker images can be stored and shared. It provides a centralized location for developers to find and access pre-built images to be used as a starting point for their projects.

Docker Image vs Container

Docker images are the files that contain the code, configuration, and environment setup needed to run an application in a container. Containers are instances of a Docker image that have been started and are running in a Docker environment.

A single Docker image can be used to create multiple lightweight containers. Each container has its own settings and configuration, but the underlying code remains the same. Developers use this write-once-deploy-everywhere benefit to deploy applications quickly and efficiently, as they don’t need to rebuild the entire image whenever they want to create a new application instance.
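The relationship between one image and many containers can be sketched in plain Python. This is only a conceptual model, not Docker’s API: the `Image` and `Container` classes, the `run` helper, and the image name `shop-api:1.0` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # simple counter that gives each container a unique id


@dataclass(frozen=True)
class Image:
    """Read-only template: the same code and dependencies for every container."""
    name: str
    files: tuple  # frozen, so containers cannot modify the image


@dataclass
class Container:
    """A started instance of an image with its own settings."""
    image: Image
    env: dict
    container_id: int = field(default_factory=lambda: next(_ids))


def run(image: Image, **env) -> Container:
    """Start a new container from an existing image without rebuilding it."""
    return Container(image=image, env=dict(env))


image = Image(name="shop-api:1.0", files=("app.py", "requirements.txt"))
staging = run(image, MODE="staging", PORT="8080")
production = run(image, MODE="production", PORT="80")

print(staging.image is production.image)  # both containers share one image
print(staging.env, production.env)        # but each keeps its own settings
```

The image is frozen on purpose: in this model, anything that differs between instances lives in the container, which mirrors why a new instance does not require a new build.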

What are Docker’s Key Features?

Docker is a containerization platform that allows you to create, deploy, and run applications inside containers. Here are some of the critical features of the platform:

Portability

With Docker containers, developers don’t need to worry about hardware compatibility since the same image runs on any host with a compatible container runtime, whether that host runs Linux, macOS, or Windows (on macOS and Windows, Docker Desktop runs Linux containers inside a lightweight virtual machine). This makes containers incredibly versatile, and developers can handle software deployments and updates much more quickly across multiple systems.

Faster Deployment

Docker images are lightweight compared to virtual machine images because they contain only the application code, its dependencies, and a minimal set of system files. Since containers share the host’s kernel instead of bundling a full guest OS, deploying applications is much faster and easier with Docker containers.

Resource Utilization

In addition to offering faster container deployments than VMs, Docker also uses fewer resources, making the platform more economical in the long run.

By running multiple apps (each in a container) on a single instance of an operating system, businesses save money by minimizing their hardware and software costs. The deployed apps perform much more efficiently due to better use of available resources.

Easy Maintenance

Do you need to deploy a new version of your application?

With Docker containers, you only need to build an updated image and replace the running container with a fresh one, without affecting other active applications. This makes maintenance easy and reliable because you no longer need to worry about conflicting applications and their versions causing problems within your system.

Docker containers ensure that each application has an isolated environment, regardless of which version you are running!

Secure Sandboxing

Docker offers a robust sandboxing feature, where each container is isolated from other containers and from software deployed on the host.

This makes it much harder for malicious code in one container to access critical data stored elsewhere on the system. For developers, this sandboxing ensures additional peace of mind because they have fewer unexpected security complications to worry about down the line.

The Benefits Docker Brings to Your Operations

Docker is an excellent tool for developers and admins who must deploy cloud applications more effectively by setting up isolated, reproducible, and lightweight software containers.

It’s been rapidly gaining popularity as one of the top go-to tools for deploying applications in different environments. In almost all cases, a Docker image is the preferred way of quickly and easily sharing applications with team members.

Here are some more benefits Docker brings to the game.

Flexible Application Development

Docker facilitates building a faster and more flexible application development environment, as you no longer have to worry about dependencies or configuration issues.

This freedom means you can package a service together with everything it depends on into a single image that runs as a container. You don’t have to worry about fragmentation, as all dependencies are accounted for in the same container across the multiple machines that make up your Docker deployment infrastructure.

Easier Deployment Process

Docker makes it easier to initiate the deployment process without any strict prerequisites.

Docker images are composed of layers that provide all the necessary software components to execute applications consistently. As a result, you can automate deployment, scale up the deployment processes, and ultimately save time and resources.
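To illustrate why layers save time, here is a minimal sketch of layer reuse. It assumes a simplified rule where a layer’s identity depends only on the instruction text and the layer beneath it (real builds also consider the contents of copied files). The `layer_ids` function and the sample instructions are hypothetical.

```python
import hashlib


def layer_ids(instructions):
    """Return one id per instruction; each id depends on all earlier layers."""
    ids, parent = [], ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + instruction).encode()).hexdigest()[:12]
        ids.append(digest)
        parent = digest  # the next layer is stacked on top of this one
    return ids


# Two builds that differ only in the final step (the application code).
v1 = layer_ids(["FROM python:3.12-slim", "RUN pip install flask", "COPY app_v1 /app"])
v2 = layer_ids(["FROM python:3.12-slim", "RUN pip install flask", "COPY app_v2 /app"])

shared = [a for a, b in zip(v1, v2) if a == b]
print(f"{len(shared)} of {len(v1)} layers can be reused")
```

Because only the last instruction changed, the earlier layers keep the same ids and do not need to be rebuilt or transferred again.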

Thanks to automation, you can also avoid the errors that arise during manual deployments. Businesses can keep their system running smoothly without dedicating resources to deployment tasks.

Portability Across Systems

Docker has solved the portability issue for those developing applications across all popular platforms and devices.

This allows developers to write their software once and then use containers to deploy their products without worrying about the hardware and the OS running on target machines.

The same goes for application updates. Developers only need to update the image once, and the change reaches every environment that pulls the new version.

The containerized application also comes with all the settings so that there’s no extra step required for porting the application. You can rest easy that the settings will stay consistent across operating systems and environments.

Enhanced Security

Docker provides an additional security layer in the form of isolated container ecosystems. Containers share the host’s kernel but run in separate namespaces, with resource limits enforced by control groups (cgroups), so each one sees only its own processes, filesystem, and network.

In addition to protecting processes running alongside the application at runtime, this isolation lets developers test applications in a production-like environment before pushing them to production.

Sharing containers globally allows applications to run with minimum resource investment. This containment model is valuable for businesses looking to ensure their data remains segregated from the rest of the processes.

The Disadvantages of Using Docker

While Docker can provide a wide range of benefits for your production environment, it has some potential drawbacks.

The most common disadvantage of using Docker is that scaling can be difficult and costly.

Here’s an in-depth look at the pitfalls of using Docker in a production environment.

Increased Resource Usage

When running services with Docker, each process runs inside its own container. Compared to standalone services, this could increase resource usage on the machine because each container requires its own share of server resources (memory, CPU, and disk space).

Therefore, it is essential to consider resource usage when determining whether Docker is a good fit for your particular application or service.

Higher Maintenance Costs

In addition to increased resource usage, deploying applications with Docker can also be more expensive from a maintenance perspective.

Each container image must be updated regularly to keep the system stable and secure. This means developers must maintain each image individually rather than rely on one platform-wide maintenance procedure.

Security Issues

One of the main disadvantages of using containers relates to security concerns.

When running services in containers, attackers can undermine these benefits by accessing sensitive data stored within the Docker image or exploiting vulnerabilities within the container’s operating system files or other components.

Therefore, developers should take appropriate security measures when deploying applications with Docker. Some suggestions include restricting access permissions and applying additional hardening techniques.

Difficulty in Scaling Applications

Although containers provide an easy way of deploying multiple instances of an application at once (ideal for auto-scaling), managing such deployments can become difficult if applications aren’t designed with scalability in mind.

Manually scaling containers can add extra work to capacity planning and the dynamic allocation of resources necessary for effective performance optimization.

Additionally, depending on your specific scenario (such as relying on network filesystems to synchronize shared states between instances), you may face performance penalties due to latency incurred during communication across endpoints. This issue becomes acute when scaling out multiple concurrent running processes over different hosts/containers/services.

All these factors significantly complicate the scaling process once tasks enter production use cases requiring massive scalability. The system must quickly allocate resources across geographically distributed workspaces without compromising data quality or consistency between nodes.

Scalability is a serious challenge for Docker, even if you use frameworks and tools developed for coordinating distributed resources (a good example is Apache ZooKeeper).

What is Kubernetes?

Next, in the Kubernetes vs Docker debate, we have Kubernetes (aka K8s), an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It enables developers to deploy, manage, and scale applications quickly and easily.

With Kubernetes, applications can be scaled up or down in seconds, allowing developers greater flexibility and control over their cloud infrastructure. In addition, Kubernetes makes it easy to decentralize workloads and smoothly deploy across multiple public and private clouds.

Since its introduction in 2014, Kubernetes has become the preferred choice for deploying applications in a distributed environment.

Eight Essential Kubernetes Features

Now that you know what Kubernetes is, let’s see why developers use it as a powerful container orchestration platform to manage, deploy, and scale containerized applications.

Here are eight essential Kubernetes features that help you take control of your infrastructure.

Container Orchestration

Kubernetes is a container orchestration system that helps manage applications and services running on multiple containers over a cluster of nodes.

You get features such as service discovery, monitoring, and self-healing. As a result, businesses don’t have to worry about time-consuming project maintenance tasks.

Deployment Controls

Kubernetes deployments can be managed with the help of advanced deployment strategies such as blue/green deployment or rolling updates that developers can use to better control their application’s release cycle.
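As a rough illustration of a rolling update, the sketch below replaces pods in small batches so that some instances of the application keep serving traffic throughout the release. The `rolling_update` function and the `batch_size` parameter are hypothetical; in Kubernetes itself, the pace of a rolling update is governed by the Deployment’s `maxSurge` and `maxUnavailable` settings.

```python
def rolling_update(pods, new_version, batch_size=1):
    """Yield the pod list after each batch has been replaced with new_version."""
    pods = list(pods)  # work on a copy so the caller's list is untouched
    for start in range(0, len(pods), batch_size):
        for i in range(start, min(start + batch_size, len(pods))):
            pods[i] = new_version
        yield list(pods)


for step in rolling_update(["v1", "v1", "v1", "v1"], "v2", batch_size=2):
    print(step)
```

Each printed step is a snapshot of the fleet: old and new versions run side by side until the final batch completes.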

Auto-scaling & Self-Healing

Kubernetes comes with a built-in auto-scaling feature, which ensures your application’s availability and performance are optimized regardless of the number of users.
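To make the scaling decision concrete, here is a minimal sketch of the replica calculation that the Kubernetes documentation describes for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric ÷ target metric). The function name, parameter names, and sample numbers are illustrative, and real autoscaling applies extra rules (such as stabilization windows and handling of missing metrics) that this sketch leaves out.

```python
import math


def desired_replicas(current, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the metric ratio, clamped to the allowed range."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: leave the count unchanged
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Average CPU utilisation per pod (percent) against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # load is high
print(desired_replicas(4, current_metric=30, target_metric=60))  # load is low
print(desired_replicas(4, current_metric=62, target_metric=60))  # near target
```

The tolerance band keeps the replica count from flapping when the metric hovers around its target.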

Additionally, the self-healing capabilities restart containers that fail, replace and reschedule pods when a node goes down, and keep unhealthy pods out of service until they pass their health checks. This improves system health and minimizes outages.
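Self-healing follows a reconcile pattern: a controller compares the desired state with what is actually running and corrects the difference. The sketch below models that idea in plain Python. The `reconcile` function, the pod names, and the health flags are hypothetical and do not reflect Kubernetes internals.

```python
from itertools import count

_pod_ids = count(1)  # used to name replacement pods


def reconcile(desired, pods):
    """Drop unhealthy pods, then start or stop pods until the count matches."""
    healthy = [name for name, ok in pods.items() if ok]
    while len(healthy) < desired:
        healthy.append(f"web-new-{next(_pod_ids)}")  # start a replacement
    healthy = healthy[:desired]  # remove extras if there are too many
    return {name: True for name in healthy}


pods = {"web-a": True, "web-b": False, "web-c": True}  # web-b has crashed
pods = reconcile(desired=3, pods=pods)
print(sorted(pods))
```

Running the same function repeatedly is safe: once the actual state matches the desired state, it changes nothing.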

Logging & Monitoring

Logging and monitoring applications running on top of an orchestrated platform are critical for managing performance metrics, traceability, troubleshooting issues, and increasing developer productivity.

Kubernetes exposes container logs and basic resource metrics for pods and nodes, and it is commonly paired with a log aggregator and a monitoring stack to provide real-time insight into resource usage by clusters and applications.

Built-in Namespaces

Kubernetes allows you to break up clusters into separate namespaces, which helps you manage resources by giving each team or environment (for example, development, staging, and production) its own pods with set resource limits.

This keeps each team’s workloads within their allotted resources while preventing teams (application teams and operations teams) from interfering with other projects already deployed in production.
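The idea of per-namespace limits can be sketched as a simple admission check. This is an illustrative model, not the Kubernetes API: the `Namespace` class, the `admit` method, and the millicore figures are assumptions made for the example.

```python
class Namespace:
    """A named slice of the cluster with a cap on total CPU requests."""

    def __init__(self, name, cpu_quota_millicores):
        self.name = name
        self.cpu_quota = cpu_quota_millicores
        self.pods = {}  # pod name -> requested CPU in millicores

    def used(self):
        return sum(self.pods.values())

    def admit(self, pod_name, cpu_millicores):
        """Accept the pod only if it fits within the remaining quota."""
        if self.used() + cpu_millicores > self.cpu_quota:
            return False
        self.pods[pod_name] = cpu_millicores
        return True


team_a = Namespace("team-a-staging", cpu_quota_millicores=1000)
print(team_a.admit("api", 600))     # fits within the quota
print(team_a.admit("worker", 500))  # rejected: would exceed 1000m in total
print(team_a.used())
```

Because the check is scoped to one namespace, a team that exhausts its own quota cannot consume capacity reserved for another team.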

Endpoint Management

Kubernetes has built-in service discovery: Services give a group of pods a stable name and address, and the cluster’s internal DNS resolves those names. This removes the need for third-party hosted solutions, such as external DNS providers or cloud services, for name resolution inside the cluster.
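A small sketch shows what a stable name in front of changing endpoints looks like. It assumes the conventional in-cluster naming scheme `<service>.<namespace>.svc.cluster.local` with the default `cluster.local` domain. The `Service` class, the round-robin selection, and the IP addresses are hypothetical simplifications.

```python
from itertools import cycle


def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the conventional in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


class Service:
    """Stable name in front of a changing set of pod addresses."""

    def __init__(self, name, namespace, endpoints):
        self.dns_name = service_dns_name(name, namespace)
        self._next = cycle(endpoints)  # naive round-robin over pod IPs

    def pick_endpoint(self):
        return next(self._next)


orders = Service("orders", "shop", ["10.0.0.4", "10.0.0.7"])
print(orders.dns_name)
print([orders.pick_endpoint() for _ in range(3)])
```

Clients only ever need the DNS name; which pod answers a given request is decided behind it.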

Access Control & Security

Users also benefit from Kubernetes’s role-based access control (RBAC) policies, which allow fine-grained control over who can act on which resources within a cluster. This ensures that only authorized users and processes have access rights and that idle or waiting processes don’t tie up resources.
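An access control policy of this kind boils down to a lookup: which role does a user hold in a namespace, and does that role permit the requested action? The sketch below is a simplified model rather than the Kubernetes RBAC API, and the role names, users, and namespaces are made up for illustration.

```python
# Each role is a set of (verb, resource) pairs it permits.
ROLES = {
    "pod-reader": {("get", "pods"), ("list", "pods")},
    "deployer": {("get", "deployments"), ("update", "deployments")},
}

# Bindings grant a role to a user inside one namespace only.
BINDINGS = {
    ("alice", "staging"): "deployer",
    ("bob", "staging"): "pod-reader",
}


def is_allowed(user, namespace, verb, resource):
    """Return True only if a binding gives the user a role with that rule."""
    role = BINDINGS.get((user, namespace))
    return role is not None and (verb, resource) in ROLES[role]


print(is_allowed("alice", "staging", "update", "deployments"))     # granted
print(is_allowed("bob", "staging", "update", "deployments"))       # wrong role
print(is_allowed("alice", "production", "update", "deployments"))  # no binding
```

Anything not explicitly granted is denied, which is the safer default for shared clusters.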

Additionally, security options such as audit logging provide a record of user interactions for later analysis. The logs are especially useful in formulating better security processes based on potential attack vectors and exposed vulnerabilities.

Interoperability & Connectivity

Kubernetes offers support for connecting external services to internal microservices.

For instance, you can connect a hybrid cloud’s native services with your on-premises workloads to build an orchestrated platform that defines clear service boundaries while authorizing outgoing communication with mutual TLS certificates.

Boost Your Infrastructure with Kubernetes: Let’s Talk Pros

Kubernetes is an increasingly popular tool for enterprises looking to boost their infrastructure performance, maximize cost savings, and scale operations quickly and efficiently.

Let’s explore the advantages Kubernetes brings to business processes.

Cost Savings

Kubernetes allows you to manage your digital infrastructure without unnecessary costs. You can utilize cloud computing services, such as Amazon Web Services and Google Cloud, to store data and application code. In addition to saving the costs of building and maintaining your own servers, you can further optimize operational budgets by leveraging the vast feature set of hybrid and public cloud providers.

Automated Deployment

Kubernetes offers automated deployment processes that minimize manual input and supervision when you deploy apps or push updates.

You can track deployment progress and quickly discover any issues that could derail the process. In almost all cases, the issue discovery is far quicker and smoother than traditional labor-intensive manual deployment processes.

Easy Scaling

Kubernetes makes scaling easy, thanks to the container-based platform that delivers the required resource allocation without prior hardware provisioning or OS configuration.

This simplifies application scalability by adjusting the underlying hardware allocation with minimal manual intervention.

Code Isolation

Kubernetes code isolation is a great way to manage and secure applications in highly dynamic environments.

You can deploy fully isolated distributed applications containing multiple functional components, such as containers, pods, services, configurations, and other resources.

With Kubernetes, applications can be safely segmented into different areas for different stakeholders. This ensures that each group only has access to the essential data for their requirements. By allowing each internal element of an application to run separately in its own containerized environment, Kubernetes ensures better control and scalability.

Security Benefits

Last but not least, Kubernetes offers several security benefits that traditional virtualized approaches don’t offer.

The list includes features such as network policies for segmentation, which isolate apps and microservices from direct outside access while keeping them reachable to the other components inside the cluster. Note that these policies must be defined explicitly, since pods can reach each other by default.

Furthermore, Kubernetes audit logs create a trail of cluster activity, and when combined with security tooling, many responses to suspicious activity (incident response, snapshots, and alerts to relevant personnel) can be automated.

Drawbacks of Kubernetes

Kubernetes has been a popular choice for deploying applications in the cloud. It is a powerful technology, enabling the development and deployment of complex clusters of applications with minimal effort. But, like all technologies, there are drawbacks to using Kubernetes.

Here’s a look at some of these drawbacks:

High Costs

The high costs associated with Kubernetes can be prohibitive for some businesses.

There’s an initial cost of setting up a Kubernetes cluster and additional ongoing costs as you add more nodes to scale up your application, along with other infrastructure costs such as storage and networking.

Additionally, there may be license fees if you buy products from vendors and third-party service providers.

Complexity

Kubernetes is known for its flexibility and scalability. However, these benefits come with the complexity associated with setup and management.

For instance, running fault-tolerant clusters requires detailed knowledge of ReplicaSets and pods, and this is just the beginning!

Managing a secure production environment requires knowledge about authentication mechanisms and handling secrets. This makes deploying even simple applications quite challenging unless you have someone skilled in container orchestration on your team.

Lack of Integration Options

Enterprise developers use multiple services across different applications to build and deliver their products. While most public clouds offer integration between services within their platform, these usually don’t work as well with services and products outside the provider’s ecosystem.

To connect different external systems into a cohesive whole (always an essential requirement), you must learn how to manually integrate various services, which can often be difficult or time-consuming, depending on which tools are used.

Security Risks

Security is a critical concern that must be considered when using Kubernetes. The risks range from internal threats to malicious actors tampering with data stored inside containers (code injection is a common method).

As mentioned earlier, secrets need special handling throughout deployments, so extra attention must be given to ensure that only authorized personnel can access sensitive data.

Data leakage could occur inadvertently due to improper configuration or careless sharing of passwords/access keys with developers who shouldn’t have privileged access.

Finally, vertically segmented lockdowns should always be employed whenever possible for added security layers in the development (QA) and active production stages, especially after updates.

Kubernetes vs Docker Swarm

Kubernetes and Docker Swarm are both popular container management solutions.

Each has its strengths and weaknesses, so it’s essential to understand the differences to decide which solution is best for your needs.

Kubernetes is an open-source platform for automating container deployment, scaling, and management. It is highly extensible and supports various container orchestration capabilities, including rolling updates, service discovery, and self-healing. It is also capable of handling large clusters with thousands of nodes.

Docker Swarm is an open-source container orchestration platform that simplifies managing multiple containers. It uses a declarative syntax to define tasks, making deploying and managing workloads easier. It also supports native integration with Docker Compose, allowing users to quickly spin up a cluster of containers.

When comparing the two solutions, Kubernetes has an edge over Docker Swarm regarding scalability and flexibility. Kubernetes offers advanced features such as automated deployment and scaling, which makes it ideal for large-scale projects.

Unlike virtual machines (VMs), which each have a complete copy of a guest operating system, container isolation is implemented at the kernel level without needing a guest operating system.

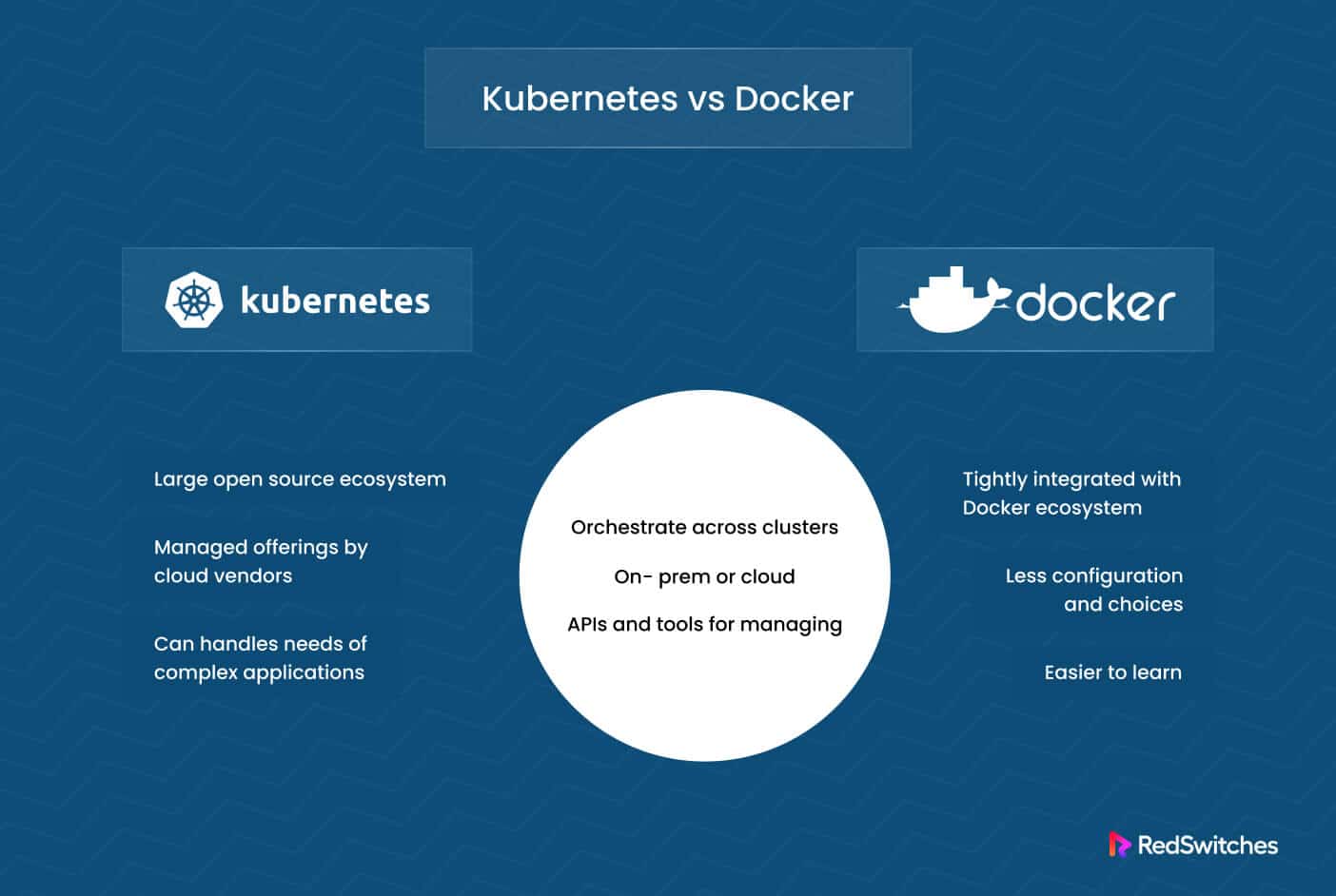

Conclusion: Which Technology Should You Use – Kubernetes or Docker?

When it comes to Kubernetes vs Docker, the vital thing to remember is that both are popular options for containerization. Both technologies have pros and cons, so whether you use Kubernetes or Docker will depend on your particular needs.

Ultimately, what matters most is that whatever technology you choose should meet your goals.

Kubernetes is a powerful platform with many features, but it can be complex and takes time to learn and understand. On the other hand, Docker works more efficiently for smaller-scale projects and has an easy learning curve. Considering each option before deciding is essential, as there’s no single correct answer when choosing between Kubernetes and Docker.

Whether you go with Kubernetes or Docker (or an alternative), you need a dependable hosting partner that delivers bare metal hosting servers for your projects.

RedSwitches support engineers help you build and maintain the bare metal hosting infrastructure needed to deploy your preferred container orchestration platform for your projects.

RedSwitches is one of the best dedicated server hosting providers for Linux projects. We offer the best dedicated server pricing and deliver instant dedicated servers, usually on the same day the order gets approved. Whether you need a dedicated server, a traffic-friendly 10Gbps dedicated server, or a powerful bare metal server, we are your trusted hosting partner.

FAQ-Kubernetes or Docker

What is a Docker image?

A Docker image is a preconfigured template for creating containers used to package apps and server environments for private or public sharing.

Do I need both Docker and Kubernetes?

Not necessarily. Kubernetes can function independently of Docker, but it requires a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O.

What is Blue/Green Deployment in Kubernetes?

The blue-green deployment model involves transferring user traffic, either all at once or gradually, from an existing version of an application or microservice (blue) to a new, almost identical release (green) that’s also deployed in the production environment.
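Here is a minimal sketch of the traffic switch, assuming the simplest case where all traffic moves to the new release at once. The `Router` class and the environment labels are hypothetical; in a real cluster, the switch would typically be made by changing which set of pods a Service or ingress points at.

```python
class Router:
    """Sends all traffic to whichever environment is marked live."""

    def __init__(self, environments, live):
        self.environments = environments
        self.live = live

    def handle(self, request):
        return f"{self.environments[self.live]} served {request}"

    def switch_to(self, name):
        previous, self.live = self.live, name
        return previous  # keep the old name so a rollback is one call away


router = Router({"blue": "app v1", "green": "app v2"}, live="blue")
print(router.handle("GET /"))
old = router.switch_to("green")
print(router.handle("GET /"))
router.switch_to(old)  # roll back if the new release misbehaves
print(router.handle("GET /"))
```

Both versions stay deployed during the switch, which is what makes the rollback as cheap as the release.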

What are containers, and what do they have to do with Kubernetes and Docker?

Containers are fundamental units in virtualization technology that allow applications, data, and services to be isolated and segregated from the underlying operating system.

They enable developers to package up an application with all its dependencies into a single “container” that can run on any machine regardless of its underlying environment. This makes them incredibly powerful for creating portable applications, simplifying development workflows, and enabling rapid deployment.

What are Kubernetes and Docker Container Orchestration Tools?

Kubernetes and Docker Swarm are popular container orchestration tools that provide a platform for deploying, scaling, and managing containers in a distributed environment.

Kubernetes is an open-source system that automates containerized applications’ deployment, scaling, and operations across clusters. It can easily handle large deployments with thousands of nodes.

Docker Swarm is another open-source container orchestration tool that provides automated deployment and scaling of containerized applications. It has many features, such as built-in service discovery, load balancing, and health checking.

Has Kubernetes announced any plans for deprecating support for the Docker container engine?

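Yes. Kubernetes deprecated its built-in Docker Engine integration (dockershim) in version 1.20 and removed it in version 1.24. Images built with Docker still run on Kubernetes through CRI-compatible runtimes such as containerd, so Kubernetes and Docker remain two separate technologies that complement each other to provide a complete container-orchestration solution.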

What are other container engine options available in the market?

In addition to Docker, you can find other container engines and platforms, such as:

- CoreOS rkt: CoreOS rkt is an open-source container engine that uses a simple, secure, and composable application model for Linux containers. It offers features such as support for multiple images per container instance, better security through namespacing, and more straightforward orchestration. Note that the rkt project has since been discontinued.

- Amazon Elastic Container Service (ECS): ECS, formerly known as EC2 Container Service, is a container management service that makes deploying, managing, and scaling applications in the Amazon Web Services cloud environment easier. It supports Docker containers and offers automated service discovery, resource-level isolation, and integrated load-balancing features.

- Apache Mesos: Apache Mesos is an open-source cluster manager that automates containerized applications’ deployment, scaling, and management. It supports both Docker and rkt containers. It also provides resource isolation, automated service discovery, and integrated load balancing features.

Overall, the choice of container engine will depend on the specific project requirements.