In the ever-evolving big data landscape, two titans have consistently dominated the conversation: Hadoop and Spark. These technologies, fundamental to processing and analyzing massive datasets, have become cornerstones in the toolkit of data professionals.

However, as similar as they might seem in their goals, Hadoop and Spark offer distinct approaches and capabilities, making them suitable for different scenarios in the data processing world.

Data’s increasing volume, variety, and velocity present a compelling argument for better understanding these tools.

According to a recent report by MarketsandMarkets, the big data market is expected to grow significantly, reaching $229.4 billion by 2025. This growth underlines the expanding role of data processing frameworks like Hadoop and Spark in extracting value from big data.

In this article, we dive into the key differences between Hadoop and Spark, dissecting their unique characteristics and how they stand apart in big data processing. Whether you’re a data scientist, a business analyst, or a tech enthusiast, understanding these differences is crucial in navigating big data’s complex and rapidly changing world.

Table Of Contents

- What Is Hadoop?

- What Is Spark?

- Spark and Hadoop: Differences Between The Two Big Data Frameworks

- Common Use Cases for Spark

- Common Use Cases for Hadoop

- How to Choose Between Hadoop or Spark?

- Conclusion: Harnessing the Power of Big Data with Hadoop and Spark

- FAQs

What Is Hadoop?

Credits: Hadoop

In the Hadoop vs Spark comparison, understanding each framework is crucial. Hadoop, formally Apache Hadoop, is not a single tool but a suite of open-source software utilities that use a network of many computers to solve problems involving massive amounts of data and computation. It provides a reliable means of storing, processing, and analyzing colossal data sets, a task that was once arduous. Developed under the Apache Software Foundation, Hadoop has become synonymous with big data processing.

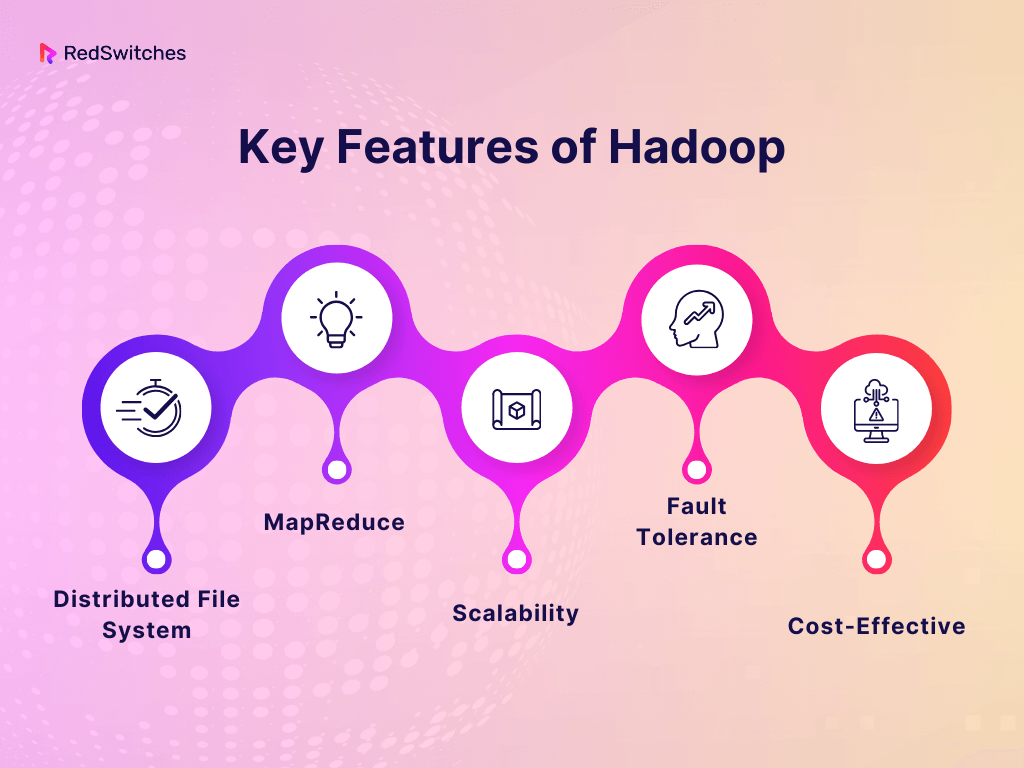

Key Features

- Distributed File System: Hadoop’s heart lies in its distributed file system, HDFS (Hadoop Distributed File System), which allows data to be stored in an easily accessible format, spread across thousands of nodes.

- MapReduce: This is Hadoop’s processing muscle. MapReduce is a programming model that helps process large data sets with a parallel, distributed algorithm on a cluster.

- Scalability: One of Hadoop’s key strengths is its scalability. It can store and distribute large data sets across hundreds of inexpensive servers operating in parallel.

- Fault Tolerance: Hadoop is designed to be robust. It maintains multiple copies of data to ensure reliability. If a node goes down, requests are redirected to a copy on another node, so processing continues uninterrupted.

- Cost-Effective: Hadoop allows storing large quantities of data at a relatively low cost, making it a popular choice for businesses leveraging big data.

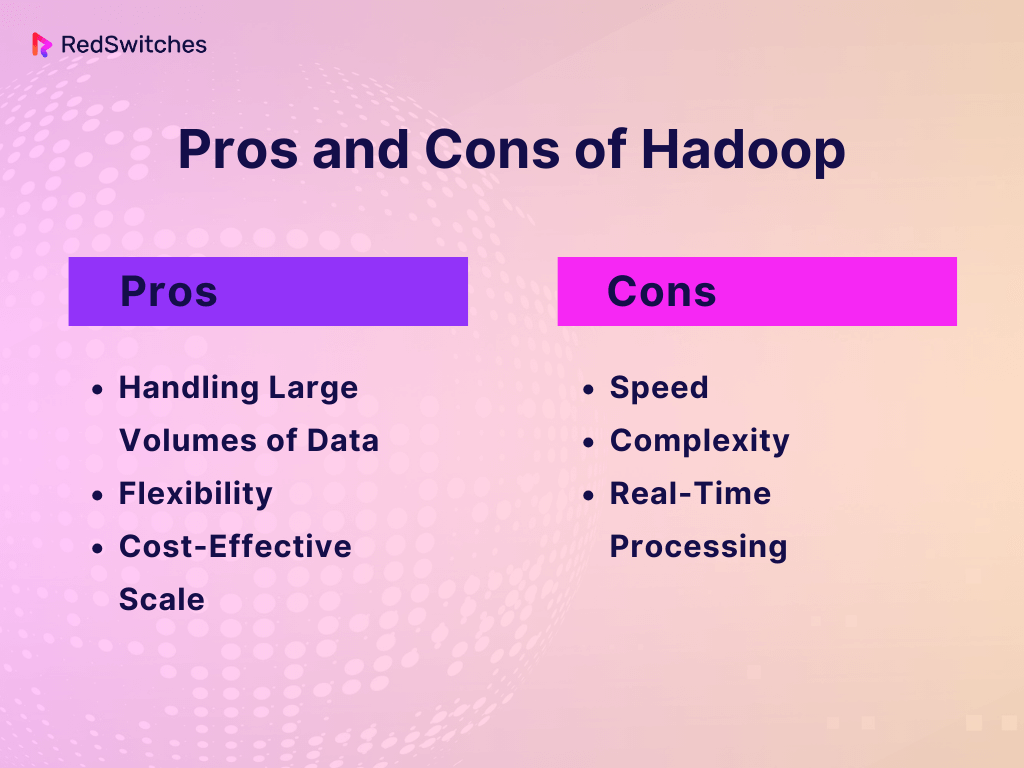

Pros and Cons

Pros:

- Handling Large Volumes of Data: Hadoop excels at storing and processing vast amounts of data, making it ideal for businesses with terabytes or petabytes.

- Flexibility: It can process structured, semi-structured, and unstructured data, offering flexibility that is highly valuable in today’s varied data landscape.

- Cost-Effective Scale: Its ability to store data on commodity hardware makes scaling up more affordable than traditional relational database management systems.

Cons:

- Speed: When it comes to speed, Hadoop lags, especially in comparison with Spark. Its MapReduce model, though influential, is slower in processing large datasets.

- Complexity: Setting up and maintaining a Hadoop cluster requires significant expertise and understanding of the underlying architecture.

- Real-Time Processing: Hadoop is not well-suited for real-time data processing, which can be a limitation for businesses needing immediate insights from their data.

In the context of Hadoop vs Spark, it’s clear that while Hadoop set the stage for big data processing, it has certain limitations, especially regarding speed and real-time data analysis. However, its robustness, scalability, and cost-effectiveness make it indispensable in the big data toolkit.

What Is Spark?

Credits: Spark

As we continue our exploration of Hadoop vs Spark, it’s time to shine the spotlight on Spark. Apache Spark, often simply referred to as Spark, is an open-source, distributed computing system offering impressive capabilities for big data processing. It emerged as a solution to some of the drawbacks of Hadoop, particularly its speed limitations. Developed for batch and real-time data processing, Spark quickly garnered attention for its efficiency and speed.

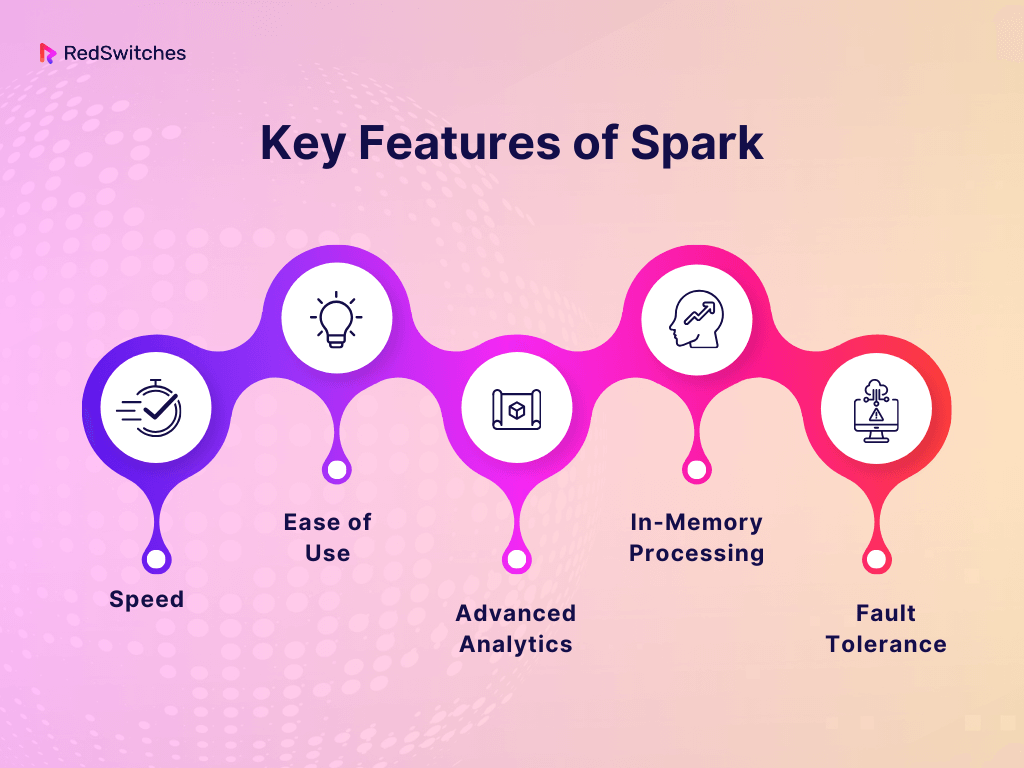

Key Features

- Speed: Spark’s claim to fame is its speed. By the project’s own benchmarks, it can run workloads up to 100 times faster than Hadoop’s MapReduce in memory, or 10 times faster on disk.

- Ease of Use: Spark provides easy-to-use APIs in Java, Scala, Python, and R, making it accessible to various developers and data scientists.

- Advanced Analytics: Beyond MapReduce, Spark supports SQL queries, streaming data, machine learning, and graph data processing.

- In-Memory Processing: Spark’s in-memory computation capabilities are a significant factor in its speed, enabling fast data processing and real-time analytics.

- Fault Tolerance: Like Hadoop, Spark is designed to be fault-tolerant, with resilient distributed datasets (RDDs) that automatically recover data on failure.

Pros and Cons

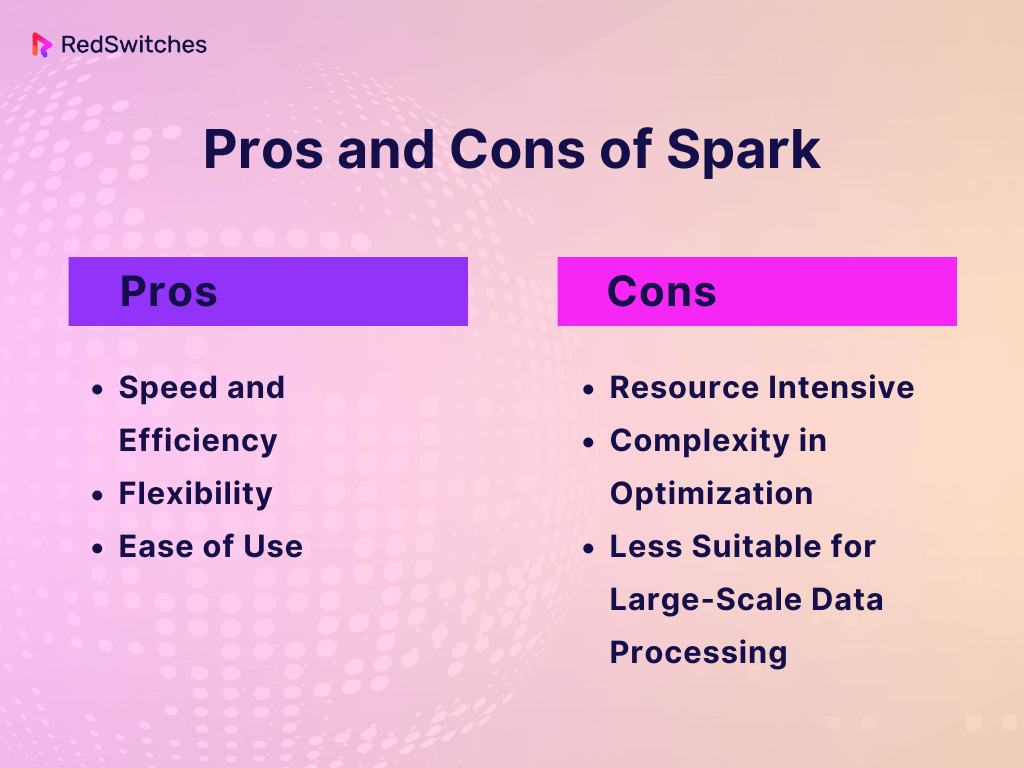

Pros:

- Speed and Efficiency: Spark’s in-memory data processing speeds up tasks significantly, making it ideal for data analytics applications where speed is crucial.

- Flexibility: Spark is versatile and can handle batch processing, real-time analytics, machine learning, and more.

- Ease of Use: Its user-friendly APIs and support for multiple languages make Spark accessible to a broader range of users.

Cons:

- Resource Intensive: Spark’s in-memory processing can be resource-intensive, requiring more RAM and potentially leading to higher costs.

- Complexity in Optimization: To fully leverage Spark’s capabilities, users need a good understanding of its operations and the ability to optimize them for specific tasks.

- Less Suitable for Very Large Datasets: While Spark is incredibly fast, its in-memory model makes it less well-suited than Hadoop when a dataset far exceeds the cluster’s available memory, because data must then spill to disk and much of the speed advantage is lost.

In the grand scheme of Hadoop vs Spark, Spark emerges as a powerful, fast, and versatile framework, especially suited for real-time analytics and data processing tasks where speed is essential. However, its resource-intensive nature and the requirement for optimization skills can be challenging.

Spark and Hadoop: Differences Between The Two Big Data Frameworks

Credits: Freepik

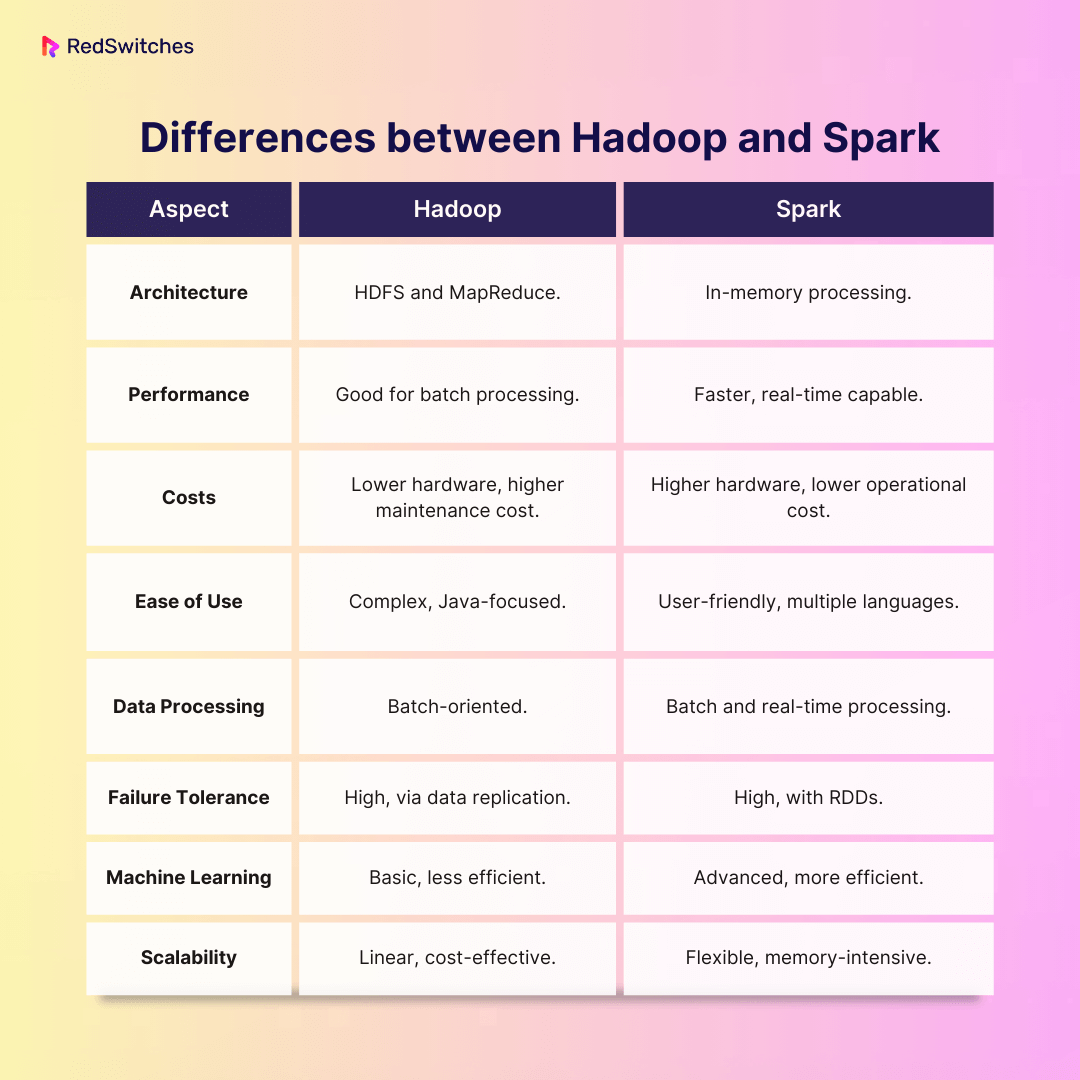

As we navigate the intricate world of big data, the Hadoop vs Spark comparison becomes a critical discussion. While both Hadoop and Spark are luminaries in big data processing, understanding their differences is not just about contrasting two technologies; it’s about deciphering the evolving needs of big data applications and choosing the right tool for the right job.

Hadoop, the seasoned veteran, transformed the landscape of big data storage and processing with its robust architecture and scalability. On the other hand, Spark entered the scene as a nimble and efficient contender, addressing some of the performance limitations of Hadoop, particularly in speed and real-time processing.

This segment aims to unravel these differences in a detailed comparison, highlighting where each framework excels and where it falls short. Whether you’re a data scientist weighing your options, a business leader making strategic decisions, or a developer looking to build scalable applications, understanding the nuanced distinctions between Hadoop and Spark is essential for leveraging the full potential of big data technologies.

Architecture

When exploring the Hadoop vs Spark debate, the architecture of these frameworks forms a foundational point of comparison. The architecture dictates how each system operates and influences its suitability for various data processing tasks. Here’s a detailed look at the architecture of both Hadoop and Spark.

Hadoop’s Architecture

- Hadoop Distributed File System (HDFS): At the heart of Hadoop’s architecture is HDFS, a file system that distributes data across multiple nodes, ensuring high data availability and fault tolerance. HDFS splits large data files into blocks and distributes them across the cluster, allowing for parallel data processing.

- MapReduce: This is Hadoop’s processing engine. MapReduce divides tasks into small parts (Map phase) and then consolidates the results (Reduce phase), making it practical for processing large volumes of data. However, MapReduce can be slower for complex tasks, as it writes intermediate data to disk.

- Modular and Scalable: Hadoop’s architecture is highly modular, allowing it to scale quickly by adding more nodes to the cluster. This scalability makes it well-suited for enterprises with vast amounts of data.
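
To make the block-and-replica idea concrete, here is a minimal sketch in plain Python rather than the real HDFS API. It assumes the common HDFS defaults of a 128 MB block size and a replication factor of 3; the round-robin placement, the node names, and the function names are simplifications invented for illustration (real HDFS placement is rack-aware).

```python
BLOCK_SIZE_MB = 128      # common HDFS default block size (dfs.blocksize)
REPLICATION_FACTOR = 3   # common HDFS default replication (dfs.replication)

def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the size of each block that a file of the given size is split into."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block only holds the leftover data
    return sizes

def place_replicas(num_blocks, nodes, replication=REPLICATION_FACTOR):
    """Assign each block to `replication` distinct nodes, round-robin (simplified)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }

# Hypothetical 300 MB file on a hypothetical four-node cluster
blocks = split_into_blocks(300)
placement = place_replicas(len(blocks), ["node-a", "node-b", "node-c", "node-d"])
for block_id, size in enumerate(blocks):
    print(f"block {block_id}: {size} MB on {placement[block_id]}")
```

Because every block lives on several nodes, different machines can work on different blocks of the same file at once, and losing any single node still leaves copies of each block elsewhere.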

Spark’s Architecture

- In-Memory Processing: Unlike Hadoop, Spark is designed for fast, in-memory data processing. This allows Spark to process data significantly faster than Hadoop, especially for complex, iterative algorithms like those used in machine learning.

- Resilient Distributed Datasets (RDDs): Spark’s core data structure is the RDD, which enables fault-tolerant, parallel data operations. RDDs are immutable collections of objects distributed across the Spark cluster.

- General-Purpose Processing: Spark supports diverse data processing tasks — from batch and interactive processing to real-time analytics and machine learning — making it a more versatile option than Hadoop.

Comparative Analysis

- Processing Speed and Efficiency: Spark’s in-memory processing enables faster data analysis than Hadoop’s disk-based MapReduce. This makes Spark more suitable for quick processing tasks like real-time analytics and iterative machine-learning algorithms.

- Flexibility: Spark provides more flexibility with its support for various data processing types, whereas Hadoop is primarily optimized for batch-processing tasks.

- Fault Tolerance: Both frameworks offer robust fault tolerance but achieve it differently. Hadoop replicates data blocks in HDFS, while Spark uses RDDs to recover lost data through lineage.

- Resource Utilization: Spark’s in-memory processing demands more memory and potentially more powerful hardware, whereas Hadoop’s disk-based approach can run on more cost-effective hardware but might be slower.

Understanding this architectural difference between Hadoop and Spark is key in determining the right tool for your big data needs. Hadoop is highly reliable for large-scale data storage and batch processing, while Spark excels in speed and flexibility, offering advantages in real-time data processing and complex analytics.

Performance

Credits: Freepik

In the Hadoop vs Spark debate, performance is a crucial aspect that differentiates these two big data frameworks. Performance in this context refers to how efficiently and quickly the systems can process large volumes of data. Let’s investigate how Hadoop and Spark perform in various data processing scenarios.

Hadoop Performance

- Batch Processing Efficiency: Hadoop excels in batch processing large datasets. Its MapReduce model, while not the fastest way to process data, is highly reliable for sequential processing of large volumes of data.

- Disk-Based Processing: Hadoop’s reliance on disk-based storage and processing can lead to slower performance, especially for data-intensive operations. This is because reading from and writing to disk is slower than processing data in memory.

- Scalability Impact on Performance: Hadoop’s ability to scale linearly means its performance can be predictably enhanced by adding more nodes to the cluster, which is a significant advantage for handling growing data volumes.

Spark Performance

- Speed and In-Memory Processing: Spark is renowned for its speed, primarily due to its in-memory data processing capabilities. It can process data up to 100 times faster than Hadoop for specific tasks, particularly those involving iterative algorithms, such as machine learning and data mining.

- Real-Time Data Processing: Spark’s ability to handle real-time data processing (stream processing) is a significant performance advantage over Hadoop. This makes Spark ideal for scenarios where immediate data analysis and decision-making are required.

- Resource Efficiency: While Spark’s in-memory processing is faster, it requires sufficient memory allocation, which can be a limiting factor in terms of cost and hardware resources.
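
The advantage for iterative workloads can be illustrated with a small, self-contained sketch. It is not Spark or Hadoop code: `DiskDataset` is a hypothetical stand-in that simply counts full reads, so the two strategies can be compared. The first function re-reads its input on every pass, as a chain of separate disk-based jobs would; the second reads once and reuses the in-memory copy.

```python
class DiskDataset:
    """Stand-in for data on disk that counts how often it is read in full."""
    def __init__(self, records):
        self._records = list(records)
        self.full_reads = 0

    def read(self):
        self.full_reads += 1
        return list(self._records)

def iterate_from_disk(dataset, iterations):
    """Re-read the input on every pass, like a chain of separate disk-based jobs."""
    total = 0
    for _ in range(iterations):
        total = sum(dataset.read())
    return total

def iterate_from_memory(dataset, iterations):
    """Read once, keep the records in memory, and reuse them on every pass."""
    cached = dataset.read()
    total = 0
    for _ in range(iterations):
        total = sum(cached)
    return total

on_disk = DiskDataset(range(1000))
in_memory = DiskDataset(range(1000))
disk_result = iterate_from_disk(on_disk, iterations=10)
memory_result = iterate_from_memory(in_memory, iterations=10)
print(on_disk.full_reads, in_memory.full_reads)  # prints "10 1"
```

Both strategies produce the same answer; the difference is how often the slow storage layer is touched, and that gap grows with the number of iterations. The cached copy also has to fit in memory, which is where the hardware cost mentioned above comes from.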

Comparative Analysis

- Processing Speed: Spark generally outperforms Hadoop in processing speed, especially for complex tasks requiring quick computation and iterative processes.

- Suitability for Large Data Sets: Hadoop is more suitable for applications that process large datasets, especially in environments where processing speed is not critical.

- Use Case Adaptability: Spark’s speed and support for real-time processing make it adaptable for a broader range of use cases, including those that require fast data analytics and interactive processing.

In summary, while Hadoop offers robust and reliable performance for large-scale batch processing, Spark leads in speed and versatility, making it better suited for real-time analytics and complex data processing tasks that require quick turnaround.

Costs

Credits: Freepik

In any discussion about Hadoop vs Spark, an essential factor to consider is cost. This encompasses the direct costs associated with installing and running these systems and indirect costs like maintenance, scaling, and the need for specialized personnel. Let’s delve into the cost aspects of Hadoop and Spark to provide a clearer picture.

Hadoop Costs

- Hardware Costs: Hadoop is designed to run on commodity hardware, which is less expensive than the specialized hardware often required for high-speed processing. This can lead to significant cost savings, especially for businesses with massive data sets.

- Scalability and Maintenance: Scaling a Hadoop system can be more cost-effective than traditional data processing systems. However, maintaining a Hadoop cluster, especially a large one, can be complex and might require a dedicated team of IT professionals.

- Energy Consumption: Hadoop relies on disk-based storage and processing, which can be more energy-intensive, especially when operating large clusters. This can increase operational costs over time.

Spark Costs

- In-Memory Processing: Spark’s in-memory processing requires a significant amount of RAM, which can increase hardware costs. High-performance memory is generally more expensive than disk storage.

- Resource Efficiency: Despite the higher hardware costs, Spark’s efficient use of resources can lead to faster processing times and reduced long-term operational costs, particularly in environments where speed and real-time processing are crucial.

- Reduced Need for Physical Infrastructure: For specific applications, Spark’s speed and efficiency can allow businesses to accomplish their data processing goals with less physical infrastructure compared to Hadoop, potentially lowering overall costs.

Comparative Analysis

- Initial Setup vs Ongoing Costs: Hadoop may have lower initial hardware costs, but the cost of scaling and maintenance should not be overlooked. Spark might require a higher initial investment in more powerful hardware but can deliver savings through operational efficiency and speed.

- Dependence on Use Case: The cost-effectiveness of Hadoop or Spark depends heavily on the specific use case. Hadoop’s low-cost storage solution may be more economical for long-term, large-scale batch processing. For businesses needing fast processing and real-time analytics, the higher initial cost of Spark may be justified by the speed and efficiency gains.

- Expertise and Training Costs: Both Hadoop and Spark require specialized knowledge for setup, maintenance, and optimization. The costs associated with training or hiring qualified personnel are also important in the overall cost analysis.

In conclusion, while Hadoop and Spark both offer cost-effective solutions for big data processing, the choice between them should be based on a thorough analysis of both direct and indirect costs, aligned with the specific requirements and goals of the project or business.

Ease of Use

When comparing Hadoop and Spark on ease of use, we’re looking at how simple it is for users to set up, manage, and interact with these technologies, especially for those who may not have extensive technical expertise. Let’s break down what ease of use means for each framework.

Hadoop Ease of Use

- Setup and Management: Setting up a Hadoop cluster can be complex. It often requires in-depth knowledge of the system’s architecture and hardware considerations. Managing a Hadoop environment, especially a large one, can also be challenging, requiring ongoing maintenance and monitoring.

- Learning Curve: While powerful, Hadoop’s MapReduce programming model can be difficult for beginners to grasp. It demands a good understanding of Java, as it’s the primary language used for writing MapReduce jobs.

- Ecosystem and Tools: Hadoop’s ecosystem includes tools like Hive and Pig, which make it easier to write and manage MapReduce jobs using SQL-like queries and scripts. However, understanding and using these tools efficiently still takes considerable learning.

Spark Ease of Use

- User-Friendly APIs: Spark is often praised for its user-friendly APIs. It supports multiple programming languages like Scala, Java, Python, and R, making it accessible to a broader range of users, including those who are not Java experts.

- Interactive Analytics: Spark’s ability to perform interactive data analysis (using Spark Shell, for instance) is a big plus for ease of use. Users can quickly write and test code in an interactive environment, a significant advantage over Hadoop’s batch-oriented nature.

- Streamlined Data Processing: Spark abstracts much of the complexity in data processing. Its built-in SQL, streaming, machine learning, and graph processing libraries allow users to handle various data processing tasks more straightforwardly than Hadoop.

Comparative Analysis

- Learning Curve and Accessibility: With its simpler APIs and interactive nature, Spark generally has a gentler learning curve than Hadoop. It’s more accessible for beginners or those unfamiliar with Java programming.

- Flexibility in Language Support: Spark’s support for multiple programming languages can be a significant advantage in accessibility and ease of use, especially in diverse development environments.

- Complexity in Deployment and Management: Both Hadoop and Spark require expertise to set up and manage effectively. However, the complexity of managing a Hadoop cluster, especially at scale, can be greater than managing a Spark environment.

In terms of ease of use, both Hadoop and Spark have their complexities, but Spark tends to be more user-friendly, especially for those new to big data processing. Its interactive shell, support for multiple programming languages, and streamlined data processing capabilities make it a more approachable option for a broader range of users.

Data Processing

Credits: Freepik

In the realm of Hadoop vs Spark, data processing is a pivotal aspect that differentiates these two giants of big data. Understanding how each framework handles data processing is crucial for anyone looking to leverage these technologies, whether they are data professionals or individuals with a general interest in big data.

Hadoop Data Processing

- Batch-Oriented Processing: Hadoop is primarily designed for batch processing. It is highly efficient at processing large volumes of static data (data that is not changing) in batches. This means that Hadoop takes a large set of data, processes it, and then moves to the next set of data.

- MapReduce Model: The core of Hadoop’s data processing capability lies in the MapReduce model. It involves two key steps – ‘Map,’ which sorts and filters the data, and ‘Reduce’, which performs a summary operation. This model, while powerful, can be slower and less efficient for certain types of tasks, especially those requiring real-time analysis.

- Disk-Based Processing: Hadoop writes intermediate data to disk during MapReduce. This can slow down data processing, especially compared to in-memory methods, but it allows Hadoop to handle large datasets effectively.
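
The Map and Reduce steps described above can be sketched as a word count in plain, single-process Python. This is an illustration of the programming model only, not Hadoop code: real jobs are typically written against Hadoop's Java Mapper and Reducer interfaces, and the grouping step in the middle (the shuffle) is done by the framework across the cluster. All function names here are chosen for the example.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: collapse the values for one key into a single summary (here, a count)."""
    return key, sum(values)

lines = ["big data big clusters", "data moves to compute"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(counts)
```

In a real cluster, each map call runs on the node that holds the relevant block, and the mapped pairs are written to disk before the reducers pick them up. That disk write is the intermediate step the last bullet refers to.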

Spark Data Processing

- Versatile Data Processing: Spark is built to handle both batch and real-time data processing. It can process data as it arrives, making it an ideal choice for applications that require immediate insights, such as live data from sensors, social media feeds, or financial transactions.

- In-Memory Processing: Spark’s most significant advantage in data processing is its in-memory processing capability. It stores intermediate data in RAM (Random Access Memory), reducing the need to read from and write to disk. This results in much faster data processing compared to Hadoop’s disk-based MapReduce.

- Advanced Analytics Capabilities: Spark supports complex data processing operations like machine learning, graph algorithms, and stream processing. This makes it a versatile tool for many data processing tasks beyond just batch processing.
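
One way to picture how Spark handles data as it arrives is micro-batching: the incoming stream is cut into small batches, and each batch is processed with the same machinery as a batch job. The sketch below shows that idea in plain Python. It makes simplifying assumptions: batches are cut by event count rather than by time interval, the sensor events are made up, and none of the names correspond to Spark's streaming API.

```python
from collections import Counter

def micro_batches(events, batch_size):
    """Group an incoming event stream into small fixed-size batches."""
    batch = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final, possibly smaller batch

def running_counts(events, batch_size):
    """Update a running count per key after every micro-batch."""
    totals = Counter()
    snapshots = []
    for batch in micro_batches(events, batch_size):
        totals.update(batch)
        snapshots.append(dict(totals))
    return snapshots

# Hypothetical sensor readings arriving one by one
sensor_events = ["temp", "temp", "humidity", "temp", "humidity"]
snapshots = running_counts(sensor_events, batch_size=2)
for snapshot in snapshots:
    print(snapshot)
```

Results are available after every small batch instead of only once the whole dataset has been processed, which is what makes this style suitable for live feeds.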

Comparative Analysis

- Batch vs Real-Time Processing: While Hadoop is a robust solution for batch processing of large datasets, Spark provides flexibility with its ability to handle both batch and real-time processing efficiently.

- Speed of Processing: Spark’s in-memory processing makes it significantly faster than Hadoop for many data processing tasks, especially those requiring immediate insights or involving iterative algorithms.

- Complexity of Data Operations: Spark’s support for advanced analytics and complex data operations makes it a more suitable option for tasks beyond simple batch processing.

The choice between Hadoop and Spark for data processing depends on the project’s specific needs. Hadoop is ideal for large-scale, batch-oriented data processing, while Spark offers superior performance and flexibility for both batch and real-time processing and advanced analytics tasks.

Failure Tolerance

Failure tolerance is critical in the Hadoop vs Spark comparison, particularly in big data processing. It refers to the ability of a system to continue operating correctly in the event of the failure of some of its components. This is crucial in environments with large volumes of data and complex computations, where the risk of component failure can be significant.

Hadoop Failure Tolerance

- Robust Data Replication: Hadoop’s HDFS (Hadoop Distributed File System) is designed with a high level of fault tolerance. It achieves this by replicating data blocks across different nodes in the cluster. In case of a failure in one node, the system can access the replicated data from another node, ensuring that data is not lost and processing can continue.

- Task Re-execution: In Hadoop’s MapReduce, if a task fails, the system automatically re-executes the failed task on another node. While this ensures that the processing is completed, it can lead to longer processing times, especially in the case of large-scale failures.

- Data Recovery: Hadoop’s architecture and data replication model make it highly effective at recovering data after failures, ensuring data integrity and availability even during hardware failures.
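
The two mechanisms above, reading from a surviving replica and re-running a failed task elsewhere, can be sketched in a few lines of plain Python. This is a toy model under stated assumptions: the block layout, node names, and both functions are hypothetical, and real Hadoop makes these decisions inside the NameNode and the job scheduler.

```python
def read_block(block_id, replica_map, live_nodes):
    """Return the first live node that holds a replica of the block."""
    for node in replica_map[block_id]:
        if node in live_nodes:
            return node
    raise IOError(f"all replicas of block {block_id} are unavailable")

def run_task(task, candidate_nodes, live_nodes):
    """Run the task on the first healthy node; skipped nodes count as failed attempts."""
    for attempt, node in enumerate(candidate_nodes, start=1):
        if node in live_nodes:
            return task(), node, attempt
    raise RuntimeError("no healthy node left to run the task")

replica_map = {0: ["node-a", "node-b", "node-c"]}   # hypothetical block layout
live_nodes = {"node-b", "node-c"}                    # node-a has failed

source = read_block(0, replica_map, live_nodes)
result, ran_on, attempts = run_task(lambda: sum(range(5)), ["node-a", "node-b"], live_nodes)
print(source, result, ran_on, attempts)  # prints "node-b 10 node-b 2"
```

The job still finishes with the correct result, but the extra attempt is the source of the longer processing times mentioned above.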

Spark Failure Tolerance

- Resilient Distributed Datasets (RDDs): Spark’s primary mechanism for failure tolerance is the RDD. RDDs are designed to recover from node failures automatically. Each RDD records its lineage, the chain of transformations that produced it, so when a partition is lost, Spark recomputes just that partition from its source data.

- In-Memory Data Replication: While Spark’s in-memory processing is faster, it also poses a challenge for failure recovery, as data in memory can be lost if a node fails. To mitigate this, Spark offers mechanisms to persist selected RDDs to disk and replicate them across multiple nodes.

- Task Re-execution with Efficient Fault Recovery: Spark re-executes failed tasks like Hadoop. However, due to its in-memory processing, these re-executions are generally faster in Spark than in Hadoop.
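
Recovery through recomputation can be illustrated with a toy dataset class that remembers how it was derived. This is a sketch of the idea only, not Spark's RDD implementation: `LineageDataset` and `lose_cache` are invented names, and the example recomputes a whole dataset where Spark would recompute only the lost partitions.

```python
class LineageDataset:
    """A toy, immutable dataset that remembers how it was derived."""
    def __init__(self, source=None, parent=None, transform=None):
        self._source = source        # only set on the root dataset
        self._parent = parent        # the dataset this one was derived from
        self._transform = transform  # how to rebuild this dataset from its parent
        self._cache = None           # in-memory copy of the computed records

    def map(self, fn):
        return LineageDataset(parent=self, transform=lambda rows: [fn(r) for r in rows])

    def filter(self, pred):
        return LineageDataset(parent=self, transform=lambda rows: [r for r in rows if pred(r)])

    def collect(self):
        """Return the records, computing them from the lineage if no copy is cached."""
        if self._cache is None:
            if self._parent is None:
                self._cache = list(self._source)
            else:
                self._cache = self._transform(self._parent.collect())
        return self._cache

    def lose_cache(self):
        """Simulate a node failure wiping the in-memory copy."""
        self._cache = None

numbers = LineageDataset(source=range(10))
evens_squared = numbers.filter(lambda n: n % 2 == 0).map(lambda n: n * n)

before = evens_squared.collect()
evens_squared.lose_cache()
after = evens_squared.collect()   # rebuilt from the recorded transformations
print(before == after, after)     # prints "True [0, 4, 16, 36, 64]"
```

Nothing had to be copied to a second machine in advance; the recipe for rebuilding the data is enough. The trade-off is that a long lineage can be expensive to replay, which is why persisting selected datasets, as described above, is still useful.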

Comparative Analysis

- Data Replication vs In-Memory Recovery: Hadoop relies heavily on data replication for failure tolerance, which is effective but can increase storage requirements. Spark, with its RDDs, offers a more storage-efficient approach because lost data is recomputed rather than copied in advance, though it might require additional strategies for data persistence in memory-intensive tasks.

- Recovery Speed: Spark generally offers faster recovery from failures due to its in-memory processing and efficient fault recovery mechanisms.

- Suitability for Long-Running Tasks: For long-running tasks, Hadoop’s robust data replication model can provide a higher level of assurance against data loss, while Spark’s faster recovery can minimize downtime during processing.

In summary, Hadoop and Spark both offer strong failure tolerance capabilities, but their approaches differ. Hadoop’s strength lies in its robust data replication model, making it highly reliable for long-running batch processes. With its RDDs and faster recovery mechanisms, Spark provides an efficient solution for applications requiring quick recovery and minimal processing interruption.

Machine Learning

Credits: Freepik

Machine learning capabilities form a significant part of the Hadoop vs Spark conversation, especially as big data and artificial intelligence increasingly intersect. Both frameworks offer tools and libraries for machine learning, but their approaches and efficiencies differ.

Hadoop Machine Learning

- MapReduce for Machine Learning: Hadoop’s traditional approach to machine learning involves using its MapReduce model. However, this can be less efficient due to the slow speed of disk-based processing, particularly for iterative algorithms common in machine learning.

- Mahout: Apache Mahout is a machine-learning library specifically designed for Hadoop. It provides scalable machine learning algorithms, but its reliance on MapReduce may not be as efficient for complex models requiring fast, iterative processing.

- Hadoop Ecosystem Integration: Hadoop’s strength in machine learning comes from its ecosystem. Tools like Hive or Pig can be used for pre-processing data, and Hadoop’s robust data storage capabilities are beneficial for handling large machine-learning datasets.

Spark Machine Learning

- Spark MLlib: Spark’s primary tool for machine learning is MLlib. It is a library that provides a variety of machine-learning algorithms optimized for parallel processing. MLlib can handle batch and real-time data, making it versatile for different machine-learning scenarios.

- In-Memory Processing: Spark’s in-memory processing is particularly beneficial for machine learning, as it significantly speeds up the iterative algorithms common in machine learning tasks.

- Ease of Use and Flexibility: Spark’s easy-to-use APIs and support for multiple programming languages make it accessible for a wider range of users, from data scientists to application developers. This accessibility is a big plus for developing and deploying machine learning models.
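
To see why machine learning is called iterative, consider gradient descent: every iteration makes a full pass over the same training data. The sketch below fits a single weight with plain Python, with the data split into partitions to mimic a cluster. It is not MLlib code; the data, learning rate, and function name are assumptions chosen for the example.

```python
def gradient_step(partitions, weight, learning_rate):
    """One iteration: each partition computes a partial gradient, then they are combined."""
    partials = []
    for part in partitions:
        grad = sum(2 * (weight * x - y) * x for x, y in part)  # per-partition work
        partials.append((grad, len(part)))
    total_grad = sum(g for g, _ in partials)                    # combine partial results
    count = sum(n for _, n in partials)
    return weight - learning_rate * total_grad / count

# Toy data following y = 3x, split into two partitions
partitions = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]

weight = 0.0
for _ in range(50):  # fifty passes over the same data
    weight = gradient_step(partitions, weight, learning_rate=0.05)
print(round(weight, 3))
```

The learned weight ends up very close to 3. The point is the loop: with data held in memory, each of the fifty passes is cheap, whereas a disk-based engine would pay the read and write cost on every pass.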

Comparative Analysis

- Processing Speed for Machine Learning: Spark’s faster processing speed makes it more suitable for complex machine learning tasks, especially those requiring iterative processing.

- Scalability: Both Hadoop and Spark can scale to handle large machine-learning workloads. However, Spark’s efficient use of resources often makes it more cost-effective for scaling up machine learning tasks.

- Ecosystem and Tools: Hadoop’s ecosystem provides a robust environment for managing and preprocessing large datasets for machine learning. Spark, with MLlib, offers a more streamlined and efficient environment for building and deploying machine learning models.

In the context of machine learning, Spark generally offers a more efficient and user-friendly environment, especially for complex models and real-time analytics. Hadoop, while capable, may be better suited for more straightforward machine learning tasks or scenarios where its robust data storage and processing capabilities can be leveraged.

Scalability

Credits: Freepik

Scalability is a vital factor in the Hadoop vs Spark discussion, particularly as organizations grapple with ever-increasing volumes of data. Scalability refers to the ability of a system to handle growing amounts of work or its potential to be enlarged to accommodate that growth. Let’s explore how Hadoop and Spark differ in terms of scalability.

Hadoop Scalability

- Designed for Large-Scale Data: Hadoop was built from the ground up with scalability in mind. It can store and process petabytes of data across thousands of servers in a distributed computing environment.

- Linear Scalability: One of Hadoop’s key strengths is its linear scalability. Adding more nodes to a Hadoop cluster increases its capacity to store and process data roughly in proportion. This makes scaling predictable and effective for large data sets.

- Commodity Hardware: Hadoop’s ability to run on commodity hardware allows organizations to scale their systems cost-effectively. As data grows, additional nodes can be added without significant investment in high-end hardware.
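
The arithmetic behind linear scaling is simple enough to write down. The helper below is a rough sketch: it assumes the usual HDFS replication factor of 3, uses a hypothetical 12 TB of disk per node, and ignores space reserved for the operating system and temporary files.

```python
def usable_capacity_tb(nodes, disk_per_node_tb, replication=3):
    """Raw cluster disk divided by the replication factor."""
    return nodes * disk_per_node_tb / replication

# Doubling the node count doubles the usable capacity
for nodes in (10, 20, 40):
    print(nodes, usable_capacity_tb(nodes, disk_per_node_tb=12))
```

With these assumed figures, 10 nodes give 40 TB of usable space, 20 nodes give 80 TB, and 40 nodes give 160 TB, which is what makes capacity planning for a growing cluster predictable.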

Spark Scalability

- Efficient Processing at Scale: While Spark also supports scalability, its approach differs. Spark can handle large-scale data processing, but its in-memory processing model requires more RAM, potentially leading to higher costs as the system scales.

- Flexible Scaling Options: Spark provides flexibility in scaling, not just in terms of data size but also in the variety of data processing tasks it can handle, including batch processing, real-time analytics, and machine learning.

- Resource Management: Spark’s advanced resource management capabilities, such as those provided by its cluster manager, allow for efficient allocation of resources, which is crucial as the system scales.

Comparative Analysis

- Handling Massive Datasets: Hadoop is well-suited for organizations that need to scale to very large datasets, particularly for batch processing tasks. Its ability to scale linearly while using cost-effective hardware makes it a strong choice for massive data storage and processing.

- Resource Considerations in Scaling: While Spark is highly scalable, it may require more careful resource management, especially regarding memory allocation. The costs associated with scaling Spark, particularly for large in-memory computations, must be considered.

- Versatility in Scalability: Spark offers a more versatile approach to scalability, capable of scaling both in data size and across different types of data processing tasks. This makes it suitable for many applications beyond simple batch processing.

In essence, both Hadoop and Spark offer strong scalability features, but their approaches and the associated costs differ. Hadoop is ideal for linear scalability in massive data storage and processing scenarios, while Spark provides a more versatile and efficient but potentially more resource-intensive scaling option.

Dive into the world of big data with this succinct comparison table, highlighting the key differences between Hadoop and Spark. Covering essential aspects like architecture, performance, and scalability, this table offers a quick yet comprehensive overview, ideal for understanding these two dominant big data technologies at a glance.

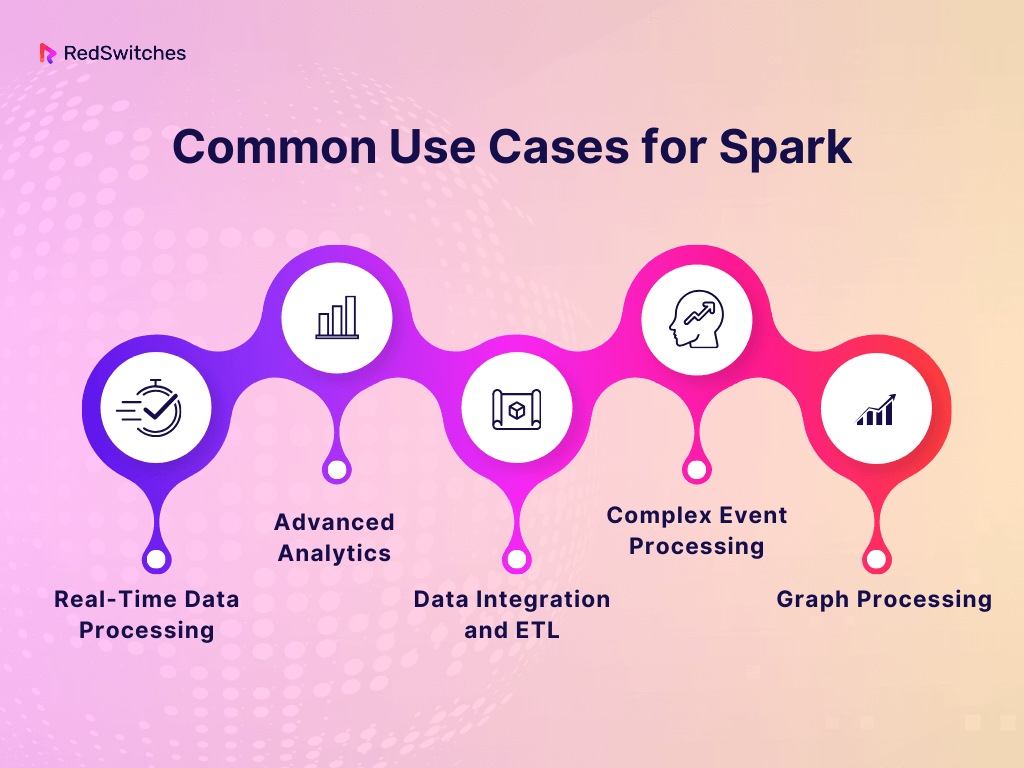

Common Use Cases for Spark

As we delve deeper into big data, Spark stands out as a versatile and powerful tool. Its unique features, such as in-memory processing and support for real-time data analytics, make it suitable for a wide range of applications. Let’s explore some common use cases where Spark particularly shines, showcasing its flexibility and efficiency.

Real-Time Data Processing

- Stream Processing: Spark’s ability to process data in near real time is one of its most significant advantages. With Spark Streaming, which processes incoming data in small micro-batches, businesses can analyze data shortly after it arrives, which is crucial for time-sensitive decisions. This feature is particularly beneficial in industries like finance for fraud detection or in social media for live trend analysis.

- Event-Driven Systems: Spark is well-suited for event-driven applications, such as monitoring user activities on websites, tracking customer behavior in real-time, or implementing IoT (Internet of Things) solutions where immediate processing of sensor data is required.
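Spark Streaming handles a stream by cutting it into small time-based batches and processing each batch in turn. The sketch below illustrates that idea in plain Python rather than with the Spark API; the event tuples and the 2-second interval are made up for the example.

```python
from collections import defaultdict


def micro_batches(events, interval_s):
    """Group (timestamp, payload) events into fixed-length time windows."""
    batches = defaultdict(list)
    for ts, payload in events:
        # Every event falls into the window that starts at the nearest lower multiple of interval_s.
        window_start = int(ts // interval_s) * interval_s
        batches[window_start].append(payload)
    return dict(sorted(batches.items()))


# Hypothetical click-stream events: (seconds since start, event type).
events = [(0.4, "login"), (1.2, "click"), (1.9, "click"), (4.1, "purchase")]
for start, batch in micro_batches(events, interval_s=2).items():
    print(f"window starting at {start}s: {batch}")
```

In a real deployment the batches would be distributed across the cluster and processed as they close, rather than collected in a single dictionary.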

Advanced Analytics

- Machine Learning: Spark’s MLlib library offers a range of machine learning algorithms and utilities, making it easier for data scientists to implement complex predictive models. This is particularly useful in customer segmentation, predictive maintenance, and recommendation systems.

- Interactive Data Analysis: With tools like Spark SQL and DataFrames, Spark enables interactive data exploration and analysis. Analysts and data scientists can quickly query large datasets, perform exploratory data analysis, and gain insights into trends and patterns.

Data Integration and ETL

- ETL (Extract, Transform, Load) Operations: Spark’s speed and ease of handling diverse data formats make it ideal for ETL tasks. Businesses often use Spark to clean, transform, and prepare data for storage in data warehouses or for further analysis.

- Data Pipeline Development: Developing data pipelines is another common use case for Spark. It can efficiently handle data ingestion, processing, and transformation from various sources, ensuring a smooth data flow through the pipeline.
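The extract, transform, and load stages described above can be sketched in a few lines. This is a single-machine illustration using only Python's standard library, with invented sample data; in Spark the same stages would typically read from and write to distributed storage.

```python
import csv
import io
import json

# Invented sample input with stray whitespace, a missing amount, and inconsistent casing.
RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,,de
3,5.00,US
"""


def extract(text):
    """Extract: parse CSV text into dictionaries, one per row."""
    return csv.DictReader(io.StringIO(text))


def transform(rows):
    """Transform: drop rows without an amount and normalize types and casing."""
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        yield {
            "order_id": int(row["order_id"]),
            "amount": float(amount),
            "country": row["country"].strip().upper(),
        }


def load(records):
    """Load: serialize cleaned records as JSON Lines, ready to be written to storage."""
    return "\n".join(json.dumps(record) for record in records)


print(load(transform(extract(RAW_CSV))))
```

Because each stage is a generator or a plain function, records stream through the pipeline one at a time, which mirrors how a distributed engine avoids materializing every intermediate result.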

Complex Event Processing

- Financial Analysis: In the financial sector, Spark is used for complex event processing, such as analyzing stock market trends, processing high-frequency trading data, or risk management.

- Network Monitoring: Spark is also employed in network monitoring, where it processes large network data streams to detect anomalies, predict failures, or support cybersecurity.

Graph Processing

- GraphX Library: For applications involving graph processing, like social network analysis or detection of fraud rings, Spark’s GraphX library provides a suite of tools and algorithms designed explicitly for graph analytics.
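A fraud ring can be viewed as a group of accounts that are all linked to one another, directly or indirectly, which is a connected component of a graph. The sketch below finds such groups in plain Python; it is not the GraphX API, and the account names and links are hypothetical.

```python
from collections import defaultdict


def connected_components(edges):
    """Return the sets of nodes that are linked to each other, directly or indirectly."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)

    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        # Depth-first traversal collecting every node reachable from `start`.
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(graph[node] - seen)
        components.append(component)
    return components


# Hypothetical links, e.g. accounts sharing a device or a payment card.
edges = [("acct_a", "acct_b"), ("acct_b", "acct_c"), ("acct_x", "acct_y")]
for ring in connected_components(edges):
    print(sorted(ring))
```

A distributed graph library applies the same idea to graphs that are far too large to hold on one machine.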

These use cases demonstrate Spark’s adaptability and power in handling varied big data challenges. From real-time analytics to sophisticated machine learning models, Spark provides an efficient and user-friendly environment, making it a favored choice in the toolbox of data professionals across industries.

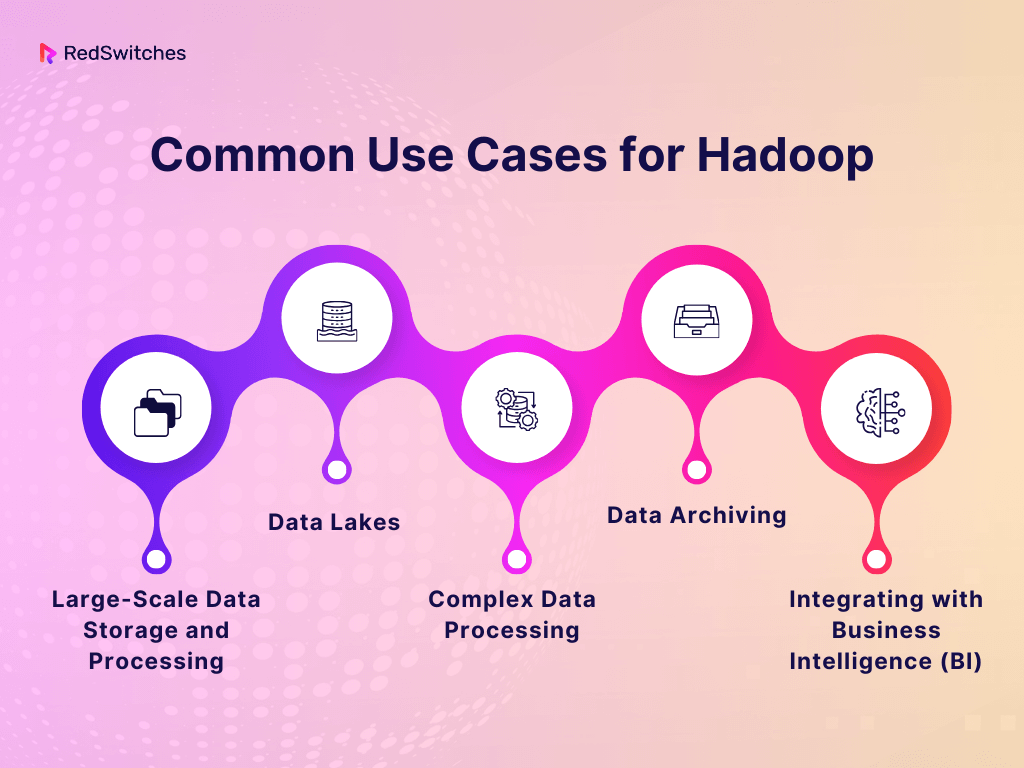

Common Use Cases for Hadoop

Hadoop, with its robust architecture and proven track record, has established itself as a staple in the big data realm. Its ability to efficiently store and process vast amounts of data makes it a go-to choice for various use cases.

Here, we explore some of the most common scenarios where Hadoop’s capabilities are particularly beneficial, showcasing its importance in big data.

Large-Scale Data Storage and Processing

- Data Warehousing: Hadoop’s ability to store massive volumes of data, combined with its scalability, makes it ideal for data warehousing solutions. Businesses use Hadoop to store and manage their historical data, allowing for efficient retrieval and analysis when needed.

- Batch Processing of Big Data: Hadoop is well-suited for batch processing large data sets. Companies often use it to process logs, social media data, or large sets of transactional data, where processing can be done in a sequential, non-time-sensitive manner.

Data Lakes

- Centralized Data Repository: Hadoop’s HDFS (Hadoop Distributed File System) is commonly used to create data lakes. A data lake is a centralized repository that allows storing all structured and unstructured data at any scale. Companies use data lakes to store everything from raw data to transformed data for diverse analytics applications.

- Big Data Exploration and Discovery: Data lakes built on Hadoop enable businesses to explore vast amounts of raw data, identify patterns, and derive insights. This is crucial in sectors such as healthcare, retail, and finance, where analyzing large datasets is key to strategic decision-making.

Complex Data Processing

- Large-Scale Data Analysis: Hadoop’s MapReduce framework is particularly effective for complex data analysis tasks. This includes analyzing customer behavior, market trends, or large-scale scientific data, where the sheer volume of data would be challenging to process using traditional methods.

- Log and Event Data Processing: Hadoop is widely used for processing and analyzing log files generated by web servers, application servers, or operating systems, providing insights into system performance, user behavior, and operational trends.
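The MapReduce model behind these workloads has three steps: map each record to key-value pairs, group the pairs by key, and reduce each group to a result. The sketch below walks through those steps in plain Python for a log-analysis task; the log lines are invented, and a real Hadoop job would run the same steps in parallel across many nodes with intermediate data written to disk.

```python
from collections import defaultdict

# Invented web server log lines: method, path, HTTP status.
LOG_LINES = [
    "GET /index.html 200",
    "GET /missing 404",
    "POST /login 200",
    "GET /index.html 200",
]


def map_phase(line):
    """Map: emit a (status_code, 1) pair for each log line."""
    status = line.rsplit(" ", 1)[-1]
    yield status, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: sum the counts collected for one key."""
    return key, sum(values)


mapped = (pair for line in LOG_LINES for pair in map_phase(line))
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(counts)  # {'200': 3, '404': 1}
```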

Data Archiving

- Cost-Effective Storage Solution: Hadoop’s ability to leverage commodity hardware makes it an economical choice for long-term data archiving. Businesses archive vast amounts of data in Hadoop clusters, with the assurance of data safety and easy accessibility.

Integrating with Business Intelligence (BI)

- BI Tool Integration: Hadoop can be integrated with various Business Intelligence tools, allowing organizations to perform advanced analytics, reporting, and data visualization on big data stored in Hadoop clusters.

These use cases illustrate Hadoop’s significance in handling large-scale data storage, processing, and analysis. Its robustness, scalability, and cost-effectiveness make it an indispensable tool for businesses dealing with big data challenges, particularly where the scale and complexity of data exceed the capabilities of traditional data management systems.

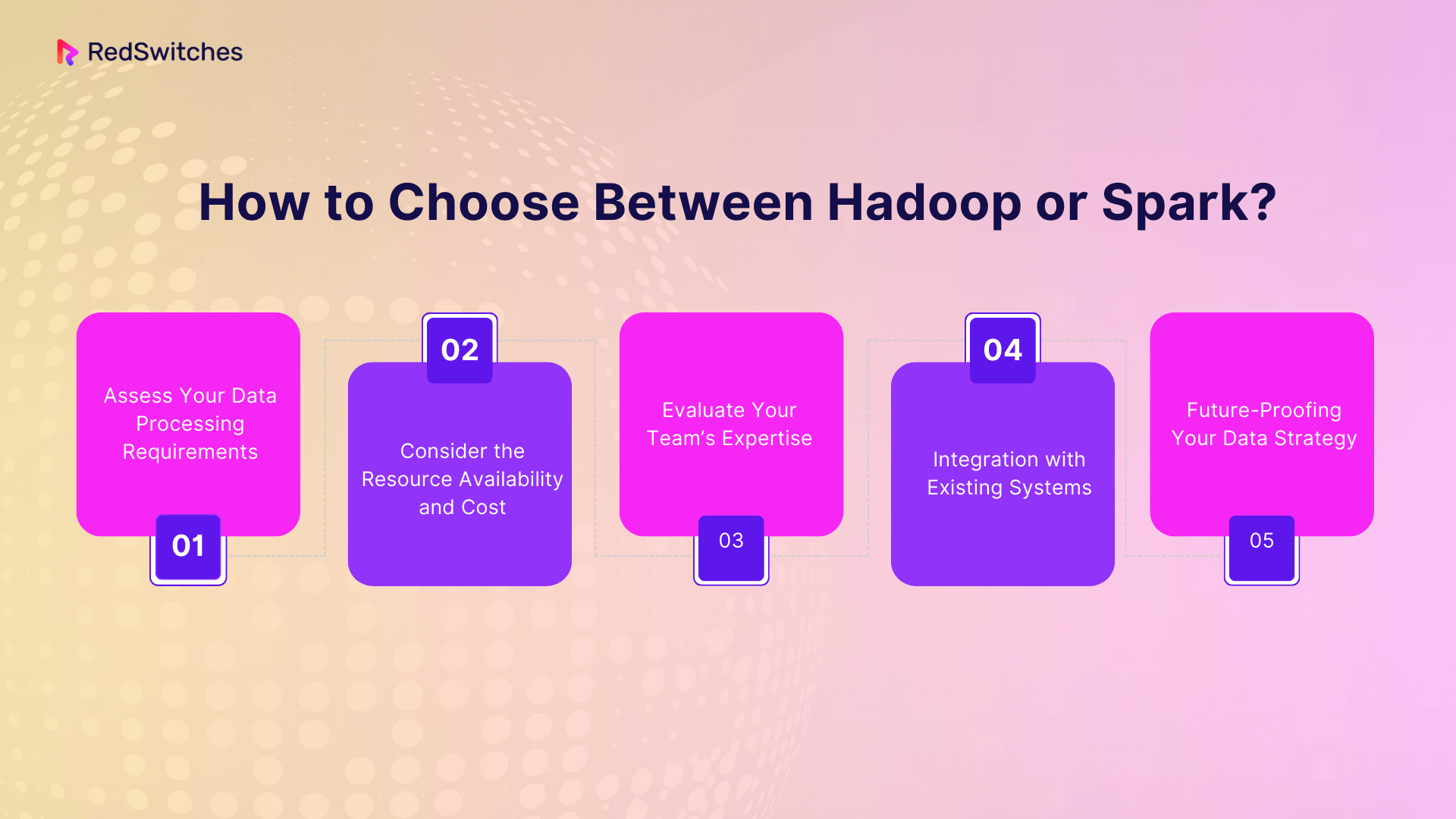

How to Choose Between Hadoop or Spark?

Deciding whether to implement Hadoop or Spark in your big data strategy is a critical choice that can significantly impact the efficiency and success of your data processing tasks. While both have their strengths, the right option ultimately depends on your needs and constraints. Here are some key considerations to help you decide between Hadoop and Spark.

Assess Your Data Processing Requirements

- Data Volume and Complexity: If your primary requirement is to store and process vast amounts of data, especially in batch mode, Hadoop might be the more suitable choice due to its robust storage capabilities and efficient batch processing. However, Spark is likely the better option if you focus on speed and need to perform complex, real-time analytics or iterative machine-learning tasks.

- Real-Time Processing Needs: Spark’s fast processing capabilities make it the preferred choice for real-time data applications, such as streaming analytics.

Consider the Resource Availability and Cost

- Hardware and Infrastructure: Evaluate the available hardware and infrastructure. Hadoop can run on commodity hardware and is known for its cost-effective storage solution, making it suitable for businesses on a tight budget. However, Spark requires more powerful hardware with higher RAM, which can be more costly.

- Maintenance and Scaling Costs: Consider the costs of maintaining and scaling the system. Hadoop can be more challenging and costly to manage, especially at scale, while Spark can be more resource-efficient but might require a higher initial investment.

Evaluate Your Team’s Expertise

- Technical Expertise: The decision should also factor in the technical expertise of your team. Hadoop requires in-depth knowledge of its ecosystem and is generally more challenging to set up and manage. Spark, while also complex, offers more straightforward APIs and is often considered more developer-friendly.

Integration with Existing Systems

- Compatibility with Current Infrastructure: Consider how well the new system will integrate with your existing data infrastructure. If you already have a Hadoop ecosystem, integrating Spark can be a seamless process, allowing you to leverage the best of both worlds.

Future-Proofing Your Data Strategy

- Scalability and Flexibility for Future Needs: Consider not only your current needs but also your future requirements. Spark’s versatility and scalability might offer more long-term benefits if your data strategy involves scaling up or diversifying data processing tasks.

In summary, choosing between Hadoop and Spark is not a one-size-fits-all decision. It requires thoroughly evaluating your data processing requirements, resource availability, team expertise, existing systems, and future data strategy. By carefully considering these factors, you can select the framework that best aligns with your organization’s needs and goals in big data.

Credits: Freepik

Conclusion: Harnessing the Power of Big Data with Hadoop and Spark

In the dynamic and ever-expanding universe of big data, Hadoop and Spark have emerged as two pivotal forces. These frameworks offer unique strengths and capabilities, making them invaluable assets in any data-driven organization’s arsenal. While Hadoop excels in robust data storage and efficient batch processing, Spark shines with its speed, real-time processing, and flexibility.

As we have journeyed through the intricate details of Hadoop vs Spark, it’s evident that the choice between them hinges on specific project requirements, resource availability, and strategic data objectives. Whether it’s managing colossal data warehouses with Hadoop or driving innovative, real-time analytics with Spark, the potential to unlock transformative insights from your data is immense.

Are you ready to plunge into the world of big data but unsure where to start? Look no further than RedSwitches. At RedSwitches, we understand the intricacies of big data technologies. Our team of experts is equipped to guide you in selecting and implementing the proper framework that aligns with your business needs.

With our robust infrastructure and tailored solutions, we empower your organization to harness the full potential of big data, be it through Hadoop’s vast storage capabilities or Spark’s lightning-fast processing power.

Embark on your big data journey with the Dedicated Server of RedSwitches. Let’s unlock the power of your data together!

FAQs

Q. What is the difference between Hadoop, Spark, and Kafka?

Hadoop is a framework primarily for distributed storage and batch processing. Spark is a data processing engine known for fast, in-memory computation, suitable for batch and real-time processing. Kafka is a streaming platform for building real-time data pipelines and apps, often integrated with Hadoop or Spark for data ingestion and processing.

Q. What is the difference between a Hadoop developer and a Spark developer?

A Hadoop Developer typically works on developing applications and systems based on the Hadoop ecosystem, often focusing on components like HDFS, MapReduce, Hive, and Pig. A Spark Developer, in contrast, focuses on building applications using the Apache Spark framework, utilizing its capabilities for fast, in-memory data processing, stream processing, and machine learning.

Q. Is Spark faster than Hadoop?

Yes, Spark is generally faster than Hadoop for data processing. Spark’s in-memory architecture allows it to process data significantly faster than Hadoop’s MapReduce, which writes intermediate results to disk. This is particularly true for tasks that require real-time processing or involve iterative algorithms.

Q. Is Hadoop faster than Apache Spark?

Typically, no. Hadoop is not faster than Spark in most data processing tasks. Hadoop’s MapReduce model, which processes data on disk, is slower than Spark’s in-memory processing model. However, Hadoop can be more cost-effective for specific large-scale batch processing tasks where the processing speed is not a critical factor.

Q. What is the difference between Hadoop and Spark?

Hadoop and Spark are both big data frameworks, but they have different approaches to processing data. Hadoop uses MapReduce for data processing, while Spark employs in-memory processing.

Q. How does Hadoop process data compared to Spark?

Hadoop processes data using the MapReduce paradigm, which involves writing Map and Reduce functions whose inputs and intermediate results are read from and written to disk. Spark, on the other hand, keeps intermediate data in memory where possible, which generally makes it faster than Hadoop.

Q. What is the role of Hadoop in big data processing?

Hadoop is an open-source framework that provides a robust ecosystem for storing, processing, and analyzing large volumes of data across distributed computing clusters.

Q. How does Spark differ from Hadoop’s MapReduce?

Spark is much faster than Hadoop’s MapReduce due to its in-memory processing capabilities. It is designed to handle use cases that Hadoop may struggle with in terms of performance.

Q. What are the advantages of using Spark over Hadoop?

Spark runs much faster than Hadoop, especially when it comes to iterative algorithms and interactive data analysis. It also provides libraries for machine learning, graph processing, and streaming.

Q. Can Spark run on top of Hadoop?

Yes, Spark can run on top of Hadoop to leverage Hadoop’s distributed file system (HDFS) for storage and YARN for resource management, enhancing its capabilities for big data processing.

Q. How does Spark handle structured data compared to Hadoop?

Spark processes structured data more efficiently than Hadoop’s MapReduce, as it provides Spark SQL and the DataFrame API for working with structured data and performing complex analytics.

Q. What are the key components of the Hadoop ecosystem?

The Hadoop ecosystem includes core components such as Hadoop Common, Hadoop Distributed File System (HDFS), Hadoop YARN, and Hadoop MapReduce, along with various other tools for data ingestion, processing, and analysis.

Q. What are the key features of Spark compared to Hadoop?

Spark includes features like Spark Core for distributed data processing, Spark SQL for structured data processing, Spark Streaming for real-time data processing, MLlib for machine learning, and GraphX for graph processing, making it versatile compared to Hadoop.

Q. How do Apache Hadoop and Apache Spark differ in their approaches to big data processing?

Apache Hadoop uses disk-based storage and MapReduce for processing, while Apache Spark leverages in-memory computing and a unified processing engine for batch, real-time, and iterative data processing.