The NVIDIA RTX A4000 ADA, part of NVIDIA’s professional graphics card series, is a powerful and specialized solution designed to meet the demands of modern applications that rely on sophisticated graphics processing capabilities.

The card is designed to deliver outstanding performance across a wide range of workloads, and it exemplifies NVIDIA’s dedication to providing cutting-edge technology for professionals who demand top-tier graphics and computational capabilities.

This blog will examine the essential features and relevance of the NVIDIA RTX A4000 ADA in professional graphics and computing.

Before going into the details of the RTX A4000 ADA’s capabilities, let’s take a short look at the graphics card itself.

Table of Contents

- Introducing NVIDIA RTX A4000

- An Overview of the NVIDIA RTX Series

- The Evolution of NVIDIA’s RTX Series

- NVIDIA RTX A4000 is Suitable for Machine Learning

- Why NVIDIA’s RTX Series is Significant in Machine Learning Scenarios

- How NVIDIA RTX A4000 is Transforming ADA

- Specifications of NVIDIA RTX A4000 ADA and RTX A4000

- Exploring the Potential of RTX A4000 ADA: Use Cases and Application

- Overcoming Challenges: Implementation and Optimization

- Conclusion

- FAQs

Introducing NVIDIA RTX A4000

In April 2023, NVIDIA introduced the RTX A4000 ADA (sold by NVIDIA as the RTX 4000 SFF Ada Generation), a small form factor GPU aimed at workstation applications. This card, which succeeds the A2000, targets processing-intensive activities such as scientific research, engineering computations, and data visualization.

The RTX A4000 ADA has 6,144 CUDA cores, 192 Tensor cores, 48 RT cores, and 20GB of GDDR6 ECC VRAM. One of the primary advantages of this card is its power efficiency: the RTX A4000 ADA consumes just 70W, lowering both power expenses and system heat output. The GPU can drive multiple screens through its four Mini DisplayPort 1.4a outputs.
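To put the power-efficiency point into numbers, the following sketch estimates yearly electricity cost from a card's rated power. The 140W comparison figure, the eight hours of daily load, and the $0.15 per kWh price are illustrative assumptions, not figures from this article or from NVIDIA.

```python
def annual_energy_cost(watts, hours_per_day, price_per_kwh):
    """Estimate yearly electricity cost for a device running at a constant draw."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Assumed usage pattern and electricity price (hypothetical values).
HOURS_PER_DAY = 8
PRICE_PER_KWH = 0.15

cost_70w = annual_energy_cost(70, HOURS_PER_DAY, PRICE_PER_KWH)
# Assumed comparison card drawing twice the power.
cost_140w = annual_energy_cost(140, HOURS_PER_DAY, PRICE_PER_KWH)

print(f"70W card:  ${cost_70w:.2f} per year")
print(f"140W card: ${cost_140w:.2f} per year")
```

Because cost scales linearly with wattage, halving the rated power halves the energy bill for the same hours of use. Real savings depend on actual load, which is usually below the rated maximum.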

Let’s now discuss the hardware components commonly used in machine learning projects so that we can appreciate the impact of the NVIDIA RTX A4000 ADA in these scenarios.

Understanding the Machine Learning Hardware Landscape

Machine learning has rapidly evolved into a transformative technology with applications in various fields, from healthcare and finance to autonomous vehicles and natural language processing. The effectiveness of machine learning models often depends on the hardware infrastructure used for training and data processing.

Let’s discuss these hardware components in detail to see where this NVIDIA offering fits into the hardware scheme.

Central Processing Units (CPUs)

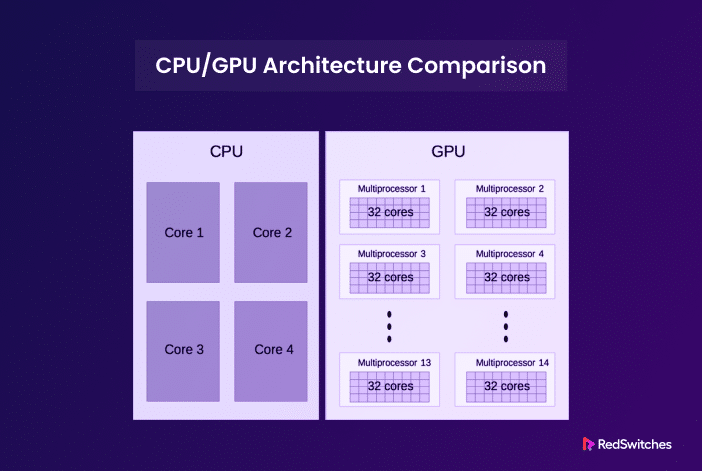

Role: CPUs are general-purpose processors found in most computers. CPU cores behave like workers, so a CPU with more cores can handle more tasks at the same time. This helps in machine learning pipelines, where data loading, preprocessing, and smaller models can run as parallel tasks, although a CPU has far fewer cores than a GPU.

Use Cases: CPUs are suitable for small-scale, non-complex machine learning tasks in applications that don’t demand high throughput.

Graphics Processing Units (GPUs)

Role: GPUs are specialized processors designed for parallel computing. They excel at matrix operations essential for deep learning.

Use Cases: GPUs are widely used for training deep learning models due to their high computational power and parallel processing capabilities.
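Matrix operations parallelize well because every element of the output can be computed independently of the others. The pure-Python sketch below makes that independence explicit; on a GPU, each call to the hypothetical `output_cell` helper would map to its own thread instead of running inside a loop.

```python
def output_cell(a, b, row, col):
    """Compute one element of A x B from one row of A and one column of B."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b):
    """Multiply two matrices by evaluating every output cell independently."""
    rows, cols = len(a), len(b[0])
    # No cell reads another cell's result, so these cells could be computed
    # in any order, or all at once on parallel hardware.
    return [[output_cell(a, b, r, c) for c in range(cols)] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))  # [[19, 22], [43, 50]]
```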

Tensor Processing Units (TPUs)

Role: TPUs are accelerators custom-designed by Google for machine learning workloads, particularly deep learning.

Use Cases: TPUs are known for their speed and efficiency in training large neural networks, making them a common choice for cloud-based machine learning on Google’s platform.

Field-Programmable Gate Arrays (FPGAs)

Role: FPGAs are reprogrammable hardware chips that can be customized for specific machine-learning tasks.

Use Cases: FPGAs are used in edge devices for low-latency tasks and in data centers for specific acceleration tasks.

Application-Specific Integrated Circuits (ASICs)

Role: ASICs are custom-designed chips optimized for specific machine-learning algorithms and tasks.

Use Cases: ASICs provide unmatched performance for specific workloads like cryptocurrency mining or inference in consumer devices.

Neuromorphic Processors

Role: Neuromorphic processors are designed to mimic the structure and function of the human brain. They excel in processing sensory data in real time with low power consumption.

Use Cases: Neuromorphic processors are used in robotics, IoT, and applications that require energy-efficient, real-time processing.

Quantum Computing Components

Role: Quantum computers leverage quantum bits (qubits) and, for certain classes of problems, could perform calculations far faster than traditional computers.

Use Cases: Quantum computing holds the potential to revolutionize machine learning by solving complex optimization problems and accelerating training processes.

An Overview of the NVIDIA RTX Series

NVIDIA’s RTX series debuted in 2018 with the Turing architecture. It offers several high-performance GPUs that provide great graphics quality, real-time ray tracing, and AI-enhanced computation. The RTX 20 series (e.g., RTX 2080 Ti) and the RTX 30 series (e.g., RTX 3080, RTX 3090) are two of the main consumer generations in this series.

How The RTX Series Adds Value to Graphics and AI Tasks

Here are several application scenarios where the RTX series offers excellent performance.

- Real-Time Ray Tracing: The RTX series was the first to bring real-time ray tracing to consumer GPUs. Ray tracing is a rendering technique that simulates how light interacts with objects, resulting in incredibly lifelike graphics.

- AI and Deep Learning: RTX GPUs have Tensor Cores that accelerate AI and deep learning workloads. This is particularly valuable in applications like machine learning, data analytics, and content creation, where AI can enhance performance and image quality.

- DLSS (Deep Learning Super Sampling): DLSS is an AI-driven feature that uses deep learning to upscale lower-resolution images in real time. It improves performance and image quality, making it a game-changer for gamers and content creators.

- Exceptional Performance: The RTX series GPUs are known for their high computational power and performance, making them ideal for gaming enthusiasts, professionals, and researchers working on demanding tasks.

- Graphical Processing: RTX GPUs excel in content creation applications, such as 3D rendering, video editing, and animation.

- AI in Gaming: Technologies like the latency-reducing NVIDIA Reflex and the AI-driven NVIDIA Broadcast leverage RTX GPUs to enhance gaming and streaming experiences.

- Scientific and Research Applications: RTX GPUs are used in simulations, data analysis, and AI research.

- Ecosystem and Developer Support: NVIDIA provides a robust ecosystem of tools, libraries, and developer support, making it easier for software developers to harness the power of RTX GPUs in their applications.

- Future-Proof: The RTX series has set a new standard for GPU performance, ensuring users have access to cutting-edge technology for years.

NVIDIA’s RTX series is significant because it has brought about a fundamental shift in the capabilities of GPUs. Let’s look at the major milestones in the series to see how each generation introduced new capabilities.

The Evolution of NVIDIA’s RTX Series

NVIDIA’s RTX series of graphics cards, whose headline feature is real-time ray tracing, represents a significant milestone in the gaming and graphics industry.

Ray tracing is a rendering technique that simulates how light interacts with objects in a virtual environment, leading to more realistic and visually stunning graphics. This capability allows the RTX GPUs to render images that are closer to reality.
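At the core of ray tracing is a geometric test: does a ray of light hit an object, and if so, where? The sketch below shows that test for a single ray and a sphere. It is a simplified illustration of the idea, not a description of how RTX hardware implements it; the function name and the scene values are made up for the example.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is a unit vector and the ray starts outside the sphere.
    """
    # Vector from the sphere center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Solve |origin + t * direction - center|^2 = radius^2, a quadratic in t
    # whose leading coefficient is 1 because the direction has unit length.
    b = 2 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    discriminant = b * b - 4 * c
    if discriminant < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(discriminant)) / 2
    return t if t >= 0 else None


# A ray fired down the z-axis toward a unit sphere centered 5 units away.
print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1))
# The same sphere, but the ray points along the y-axis and misses.
print(ray_sphere_hit((0, 0, 0), (0, 1, 0), (0, 0, 5), 1))
```

A renderer repeats tests like this for millions of rays per frame and against far more complex geometry, which is why dedicated RT cores matter for real-time use.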

The RTX series has undergone a remarkable evolution since its inception:

Introduction of RTX 20 Series (Turing Architecture)

- Year: Announced in August 2018.

- Key Features: The RTX 20 series, based on the Turing architecture, introduced real-time ray tracing and AI-enhanced graphics. The flagship card, the RTX 2080 Ti, offered impressive performance improvements over the previous generation.

- Ray Tracing Debut: The RTX 20 series marked the first consumer graphics cards capable of real-time ray tracing, a technology previously reserved for offline rendering due to its computational intensity.

- DLSS (Deep Learning Super Sampling): DLSS is an AI-driven feature that leverages deep learning to upscale lower-resolution images in real time, significantly improving performance without sacrificing image quality.

RTX 30 Series (Ampere Architecture)

- Year: Announced in September 2020.

- Key Features: The RTX 30 series, built on the Ampere architecture, brought substantial performance improvements over the RTX 20 series. The RTX 3090, for example, offered exceptional gaming and AI capabilities.

- Enhanced Ray Tracing and DLSS 2.0: The RTX 30 series enhanced ray tracing capabilities, making ray tracing more accessible to gamers. DLSS 2.0 introduced image quality and performance improvements, further popularizing AI-driven upscaling.

RTX 30 Series For Laptops

- Year: Launched in early 2021.

- Key Features: NVIDIA extended the RTX 30 series to laptops, enabling high-performance gaming and content creation on portable devices.

RTX 40 Series (Ada Lovelace Architecture)

- Year: Announced in September 2022, with further models released through 2023.

- Key Features: The RTX 40 series, built on the Ada Lovelace architecture, brings further advancements in ray tracing, AI, and gaming performance. Its improved GPU specifications deliver higher performance than the RTX 30 series.

NVIDIA’s RTX series has not only elevated gaming visuals but has also found applications in fields like content creation, scientific research, and AI development. As the series continues to evolve, it promises to push the boundaries of what’s possible in real-time graphics and computation.

NVIDIA RTX A4000 is Suitable for Machine Learning

The CUDA cores are the backbone of the RTX A4000 ADA’s parallel processing capabilities. These cores substantially speed up calculations across a wide range of professional applications. Similarly, the Tensor cores, specialized hardware components designed to accelerate matrix calculations, demonstrate NVIDIA’s focus on machine learning tasks. This functionality speeds up AI model training, which shortens the path to new insights and discoveries.

Let’s go into more detail about these components and capabilities.

- CUDA Cores: The RTX A4000 uses CUDA cores for parallel processing. This allows for faster execution of machine learning algorithms, especially those depending upon parallel processing and calculations.

- Tensor Cores: Tensor cores are used to speed up matrix operations, a fundamental component of many machine learning algorithms. These cores significantly speed up the training of deep neural networks.

- High Memory Capacity: The RTX A4000 ADA pairs 20GB of GDDR6 ECC memory with high memory bandwidth. This capacity is critical for keeping large scenes, datasets, and models on the GPU during rendering and other GPU computation.

- Support for Popular AI Frameworks: Popular machine learning frameworks like TensorFlow and PyTorch offer optimizations and libraries that leverage NVIDIA GPUs, including the RTX A4000.

- Compatibility with CUDA and cuDNN: NVIDIA GPUs support CUDA (Compute Unified Device Architecture) and cuDNN (CUDA Deep Neural Network Library), essential tools for accelerating deep learning tasks.

- Precision Modes: The RTX A4000 supports different precision modes, such as single-precision and mixed-precision, which balance computational speed and accuracy in machine learning tasks.
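The trade-off between precision modes can be seen without a GPU. The sketch below uses Python's standard `struct` module to round values to half precision (FP16) and compares a sum accumulated in FP16 with one accumulated at higher precision, which is the basic idea behind mixed-precision arithmetic. It illustrates the numerical effect only; it does not use Tensor cores, and the helper name is made up for the example.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


values = [to_fp16(0.1)] * 1000  # inputs stored in half precision

# Pure FP16: every partial sum is rounded back to half precision.
fp16_sum = 0.0
for v in values:
    fp16_sum = to_fp16(fp16_sum + v)

# Mixed precision: FP16 inputs, but the running sum keeps higher precision
# (Python floats are 64-bit here, standing in for an FP32 accumulator).
mixed_sum = sum(values)

print(f"FP16 accumulation:  {fp16_sum}")
print(f"Mixed accumulation: {mixed_sum}")
```

The FP16 running total drifts away from the true sum because each addition is rounded to a coarse grid, while the mixed version keeps the inputs compact and the accumulation accurate.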

Why NVIDIA’s RTX Series is Significant in Machine Learning Scenarios

NVIDIA’s RTX series represents a significant advancement in graphics processing and has significantly impacted various industries, including gaming, content creation, and artificial intelligence.

This series provides several unique features and capabilities that have changed what is possible in visual computing. They are also a great fit for applications that require machine learning inference.

The cards in the RTX series significantly improve the quality and performance of your computer’s graphics, whether you have a standard desktop GPU or a high-end professional GPU such as those in the NVIDIA RTX or NVIDIA Quadro lines. Note that higher-end cards in this series (especially in the Ampere generation) draw more power, which reflects the larger compute budgets they bring to AI workloads.

How NVIDIA RTX A4000 is Transforming ADA

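Advanced driver assistance systems (abbreviated ADA in this article, and more commonly ADAS) are a key advancement in the automotive sector. Note that the “Ada” in the GPU’s name refers to NVIDIA’s Ada Lovelace architecture rather than to driver assistance; the connection is that GPUs of this class can run the AI workloads these systems depend on. The technology aims to improve vehicle safety, driver comfort, and the overall driving experience.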

Used in autonomous or semi-autonomous vehicles, ADA is critical to delivering features and systems that aid drivers and significantly improve their experience.

GPUs of the Ada generation also support CUDA unified memory, which gives the CPU and GPU a single address space so that applications on a desktop workstation can manage memory more simply. In addition, the card’s GDDR6 memory offers much higher bandwidth than typical CPU system memory.

Let’s explore some critical aspects of the ADA technology:

Introduction of ADA Technology

Year: ADA technology started gaining prominence in the late 20th century, with various advancements over the years.

Key Features: ADA encompasses a range of features such as adaptive cruise control, lane-keeping assistance, automatic emergency braking, blind-spot monitoring, and parking assistance.

Evolution of ADA Components

Sensors: Over time, ADA systems have become more sophisticated, thanks to the integration of advanced sensors such as RADAR, LiDAR, cameras, and ultrasonic components. These sensors enable vehicles to perceive their surroundings and make critical real-time decisions.

Processing Units: ADA systems rely on powerful processing units and artificial intelligence algorithms to interpret sensor data, detect objects, and make decisions for safe driving.

Levels of Automation

ADA technology is categorized into levels of automation, from Level 1 (driver assistance with minimal automation) to Level 5 (full automation, where no driver intervention is required). Most vehicles on the road today feature Level 1 and 2 automation, with higher levels under development.

Safety and Accident Prevention

ADA technology plays a crucial role in accident prevention with features like automatic emergency braking, collision avoidance systems, and pedestrian detection.

Convenience and Driver Assistance

ADA enhances driver convenience through features like adaptive cruise control, which maintains a safe vehicle distance, and lane-keeping assistance, which helps keep the vehicle within its lane.

This technology is a stepping stone toward making fully autonomous vehicles an everyday technology.
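The adaptive cruise control behavior described above can be sketched with a constant time-gap rule, a common simplification in which the desired following distance grows with speed. The two-second gap, the five-metre standstill distance, and the function names are assumptions chosen for illustration; production systems are considerably more involved.

```python
def desired_gap_m(speed_mps, time_gap_s=2.0, standstill_m=5.0):
    """Following distance under a constant time-gap policy (assumed parameters)."""
    return standstill_m + speed_mps * time_gap_s


def acc_action(own_speed_mps, measured_gap_m):
    """Pick a coarse action by comparing the measured gap with the desired one."""
    if measured_gap_m < desired_gap_m(own_speed_mps):
        return "decelerate"
    return "maintain_or_accelerate"


# At 25 m/s (90 km/h) the assumed policy asks for a 55 m gap.
print(desired_gap_m(25))
print(acc_action(25, measured_gap_m=40))
print(acc_action(25, measured_gap_m=80))
```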

The Challenges ADA Faces

The development and deployment of ADA technology face regulatory challenges as governments work to establish safety standards and regulations for autonomous driving. Similarly, the security of ADA systems against potential cyber threats is a growing concern.

The Future Direction of ADA

ADA systems are expected to become even more intelligent and capable with the advancement of artificial intelligence and machine learning. In addition to autonomous vehicles, you’ll see ADA-powered technology integrated more closely with smart infrastructure, where these components will enhance safety and traffic management.

Specifications of NVIDIA RTX A4000 ADA and RTX A4000

Exploring the Potential of RTX A4000 ADA: Use Cases and Application

Now that you have read about the capabilities and features of the RTX A4000 ADA, let’s see how this card adds value to typical use cases and applications:

Data Analytics and Business Intelligence

The RTX A4000 ADA is well-suited for data analytics tasks, including data preprocessing, analysis, and visualization. It accelerates complex calculations and data-driven insights, making it valuable for businesses handling large datasets.

Machine Learning and Deep Learning

This GPU is a robust choice for training and inferencing deep learning models. It offers the necessary computational power to speed up the development of AI applications, including image recognition, natural language processing, and recommendation systems.

Healthcare and Life Sciences

This GPU can help improve medical image analysis, drug discovery, genomics research, and epidemiological modeling. Its processing power aids in diagnosing diseases, simulating drug interactions, and conducting genetic studies.

Financial Services

Financial institutions utilize the RTX A4000 ADA for risk analysis, fraud detection, algorithmic trading, and portfolio optimization. Its speed and precision help in making data-driven financial decisions.

Autonomous Vehicles and Robotics

The GPU’s AI capabilities are vital in autonomous vehicle development, enabling advanced perception systems, decision-making algorithms, and sensor fusion. It’s also valuable in robotics for real-time navigation and object recognition.

Overcoming Challenges: Implementation and Optimization

While the NVIDIA RTX A4000 ADA offers significant benefits, deploying it comes with practical challenges. Let’s discuss several of them:

Hardware Integration

The Challenge: Integrating the RTX A4000 ADA into existing hardware configurations can be complex, especially in servers or workstations with limited space and power capacity.

The Solution: Prioritize compatibility by ensuring that the GPU fits physically and that the power supply can support it. Choose a motherboard and system components that can accommodate the GPU’s requirements.

Driver and Software Compatibility

The Challenge: Ensuring the GPU’s drivers and software libraries are up-to-date and compatible with your specific use case can be challenging.

The Solution: Regularly update NVIDIA drivers and associated software. NVIDIA typically releases optimized drivers for popular machine learning frameworks like TensorFlow and PyTorch. Verify software compatibility and version requirements.
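One way to verify the software side is to ask the framework itself what it can see. The sketch below assumes PyTorch as the framework and reports whether it is installed, which CUDA version it was built against, and whether a GPU is visible. The function name `framework_report` is made up for the example, and the script falls back gracefully when PyTorch is absent.

```python
def framework_report():
    """Summarize whether PyTorch is installed and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_build": torch.version.cuda,  # None on CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["gpu_name"] = torch.cuda.get_device_name(0)
    return report


print(framework_report())
```

If `cuda_available` is false on a machine that has the card installed, a mismatch between the driver and the framework's CUDA build is a likely place to start looking.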

Cooling and Thermal Management

The Challenge: Even a 70W card like the RTX A4000 ADA generates heat under sustained load. In compact systems with poor heat management, this can lead to thermal throttling and reduced performance.

The Solution: Invest in efficient cooling, including proper case ventilation and GPU coolers. We also recommend monitoring GPU temperatures so that you can confirm airflow and thermal management are adequate.
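Temperature monitoring can start with the `nvidia-smi` tool that ships with the NVIDIA driver. The sketch below queries temperature and power draw in CSV form and flags GPUs above a limit. The helper names and the 80 °C threshold are assumptions for illustration; check the card's documentation for its actual thermal limits.

```python
import shutil
import subprocess

QUERY = "temperature.gpu,power.draw"


def _to_float(field):
    """Convert a CSV field to float, or None for values such as '[N/A]'."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_readings(csv_text):
    """Parse nvidia-smi CSV output into (temperature_c, power_w) tuples, one per GPU."""
    readings = []
    for line in csv_text.strip().splitlines():
        temp, power = (field.strip() for field in line.split(","))
        readings.append((_to_float(temp), _to_float(power)))
    return readings


def read_gpu_sensors():
    """Return sensor readings, or None when nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return None
    return parse_readings(result.stdout)


def too_hot(readings, limit_c=80.0):
    """Flag GPUs above a temperature limit; the 80 C default is an assumed threshold."""
    return [temp is not None and temp > limit_c for temp, _ in readings]


if __name__ == "__main__":
    sensors = read_gpu_sensors()
    print("No NVIDIA GPU detected" if sensors is None else too_hot(sensors))
```

Running this on a schedule and logging the results gives an early warning of airflow problems before throttling affects workloads.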

Power Requirements

The Challenge: Although the RTX A4000 ADA draws only 70W, adding any GPU raises a system’s total power draw, which can strain a small power supply and increase operational costs.

The Solution: Verify that your power supply unit (PSU) can provide the necessary wattage and connectors for the GPU. Consider energy-efficient power supplies to reduce long-term operating costs.
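A quick way to check the power supply is to add up the estimated draw of each component and compare it with the PSU rating minus a safety margin. In the sketch below, only the 70W GPU figure comes from this article; the other wattages, the 350W PSU, and the 20% headroom are hypothetical values for a small workstation.

```python
def psu_has_headroom(component_watts, psu_watts, headroom=0.2):
    """Check that total draw stays within the PSU rating minus a safety margin."""
    total = sum(component_watts.values())
    usable = psu_watts * (1 - headroom)
    return total <= usable, total, usable


# Hypothetical small form factor build.
build = {"cpu": 125, "gpu": 70, "motherboard_ram_storage": 60}
ok, total, usable = psu_has_headroom(build, psu_watts=350)
print(f"Estimated draw: {total}W, usable PSU capacity: {usable:.0f}W, OK: {ok}")
```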

Software Licensing and Costs

The Challenge: Some GPU-accelerated software may have licensing costs or limitations, adding to the overall budget.

The Solution: Carefully evaluate software licensing requirements and explore open-source alternatives. Calculate total ownership costs, including licensing fees, for budget planning.

Documentation and Resources

The Challenge: Staying informed about the latest updates, best practices, and community support for the RTX A4000 ADA can be time-consuming.

The Solution: Access NVIDIA’s official documentation, online forums, and communities for insights, troubleshooting, and updates. Engage with the developer community to exchange knowledge and experiences.

Conclusion

The NVIDIA RTX A4000 ADA is a highly capable GPU for processing graphical and AI workloads. Its ability to render images, analyze data, and perform AI-driven activities makes it a flexible tool for businesses looking to push boundaries, get better insights, and create desirable visual experiences. As we explore the RTX A4000 ADA’s capabilities, it becomes clear that NVIDIA’s dedication to excellence in AI and graphical processing continues with this series.

If you’re looking for a robust infrastructure for hosting your GPU-powered projects, RedSwitches offers the best dedicated server pricing and delivers instant dedicated servers, usually on the same day the order gets approved. Whether you need a dedicated server, a traffic-friendly 10Gbps dedicated server, or a powerful bare metal server, we are your trusted hosting partner.

FAQs

Q: What is the NVIDIA RTX A4000 ADA GPU for Deep Learning?

A: The NVIDIA RTX A4000 ADA is a professional graphics card well suited to deep learning applications. It is built on the Ada Lovelace architecture and offers improved performance and features compared to previous GPU generations.

Q: What is the form factor of the RTX A4000 ADA GPU?

A: The RTX A4000 ADA is a small form factor (SFF) GPU. It is designed to fit into compact systems without sacrificing performance or features.

Q: How much GPU memory does the RTX A4000 ADA have?

A: The RTX A4000 ADA has 20GB of GDDR6 ECC memory, suitable for handling large datasets and complex deep-learning models. The earlier Ampere-based RTX A4000 has 16GB.

Q: What is the architecture of the RTX A4000 ADA GPU?

A: The RTX A4000 ADA is built on the NVIDIA Ada Lovelace architecture. It features improved ray tracing and Tensor cores for faster and more efficient deep learning tasks. The earlier RTX A4000 is built on the Ampere architecture.

Q: How many CUDA cores does the RTX A4000 have?

A: The RTX A4000 has 6144 CUDA cores, which enable it to perform parallel processing tasks efficiently.

Q: How does the RTX A4000 compare to the RTX 4000 SFF Ada?

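A: The RTX 4000 SFF Ada (the card this article calls the RTX A4000 ADA) is the newer of the two and is built on the Ada Lovelace architecture, while the RTX A4000 is built on the previous-generation Ampere architecture. Both cards have 6,144 CUDA cores; the Ada card adds newer-generation RT and Tensor cores, 20GB of memory instead of 16GB, and a lower 70W power draw.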