Developers need a powerful tool to reduce time and effort when creating, deploying, and shipping apps.

Before Docker became popular, developers typically isolated applications in virtual machines (VMs). VMs are flexible, but each one runs a full guest operating system, so they need more maintenance and resources than Docker containers.

Since developers were often running multiple VMs at a time, this overhead added up. When Docker was introduced in 2013, containers quickly became a popular alternative to VMs for packaging and running applications because they are lightweight and easier to troubleshoot.

In this article, we’ll go into the details of Docker Swarm, an orchestration tool used to set up clusters of hosts and containers. But, to understand how Docker Swarm works, you need a bit of background about how Docker works as a containerization platform.

Table of Contents

- What is Docker?

- How Does Docker Work? (A Technical Overview)

- What Is Docker Swarm?

- How Does Docker Swarm Work?

- The Four Critical Elements in a Docker Environment

- A Comprehensive Guide to the Docker Swarm Features

- The 10 Key Concepts You Need To Know About Docker Swarm Mode

- Set Up Your First Docker Swarm Demo

- Concluding a Docker Swarm?

- FAQs

What is Docker?

Regardless of the underlying environment, Docker simplifies application development, deployment, management, and security. It packages code into containers for quick deployment on servers and virtualized environments.

How Does Docker Work? (A Technical Overview)

A container is a concise package in which developers bundle all application components. These can include databases, libraries, and web servers.

Containers run the same way on any environment: hosted on services like AWS, VPSs, dedicated servers, or localhosts. This simplifies deployment management as there’s less need to consider incompatibilities and dependencies.

What Is Docker Swarm?

Docker Swarm simplifies the management of containerized app lifecycles. It is a clustering and scheduling tool that manages multiple Docker hosts like one virtual system. Also, it’s used to deploy multi-node applications quickly and reliably and, most importantly, at scale.

How Does Docker Swarm Work?

Docker Swarm works by allowing users to create a cluster of nodes (or machines) with different roles and characteristics.

A node can be either a manager or a worker node, and each has its own set of responsibilities.

Manager nodes coordinate tasks such as scheduling and provisioning. These nodes also provide an interface for users to interact with the cluster and manage container deployments. When a user issues a command or request, it is routed to the appropriate manager node, which in turn, sends instructions to the worker nodes managing the containers.

This way, Docker Swarm helps users quickly automate and manage containerized applications.

The Four Critical Elements in a Docker Environment

A Docker environment has four critical elements – the Docker engine, storage and networking drivers, image repository, and orchestration tools.

The Docker Engine

The Docker engine takes user input from a command line or GUI and runs the underlying processes that build the layers of the OS-level virtualization.

Storage & Network Drivers

Storage and network drivers allow containers to access external data sources to work efficiently across multiple nodes.

Image Repositories

Image repositories store container images so they can be pulled whenever they are needed. This significantly minimizes delivery time when deploying applications because the deployment process starts by getting a ready-made image from the repository.

That’s why popular tools such as Linux distros and CMS offer custom Docker images to speed up the deployment process. In fact, developers can create custom images for their applications for faster delivery.

Orchestration Tools

Orchestration tools help manage the scaling and clustering of containers in production environments.

A Comprehensive Guide to the Docker Swarm Features

Docker Swarm is an excellent solution for managing multiple containers across multiple machines. It allows developers and system administrators to scale their applications more effectively.

The following are the most important Docker Swarm features. We’ll also cover how server admins can use these features to optimize their processes.

Scalability and Availability

Docker Swarm offers horizontal scalability where admins can add or remove hosts to scale Docker infrastructure up or down. This allows for quick adjustments based on the current user load and eliminates the need for over-provisioning.

Additionally, Docker Swarm’s built-in redundancy helps keep the Docker infrastructure highly available. Failed tasks are rescheduled on healthy nodes, and running an odd number of manager nodes (three or more) lets the cluster keep working when a manager fails.
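How many manager failures a swarm can survive follows from majority voting: managers use Raft consensus, so more than half of them must stay reachable. The sketch below only does that arithmetic. The function names are hypothetical helpers, not Docker commands.

```shell
# Hypothetical helpers: majority (quorum) arithmetic for N manager nodes.
manager_quorum() {
  # Smallest number of managers that forms a majority of N.
  echo $(( $1 / 2 + 1 ))
}

manager_fault_tolerance() {
  # Number of managers that can fail while a majority remains.
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5 7; do
  echo "managers=$n quorum=$(manager_quorum "$n") tolerated_failures=$(manager_fault_tolerance "$n")"
done
```

For three managers, this reports a quorum of 2 and one tolerated failure, which is why an even number of managers adds no extra fault tolerance over the odd number below it.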

Disaster Recovery

The ability to deploy resources quickly during disaster recovery is built into Docker Swarm. Docker Swarm’s speed and automation let admins get applications back up faster than rebuilding from scratch.

Efficiency in Resource Utilization

Docker Swarm efficiently uses resources across nodes in a cluster. Its scheduler spreads tasks over the available nodes, which reduces the number of idle machines and the CPU cycles and storage space they waste. Services can also declare CPU and memory reservations and limits so that one workload doesn’t starve the others.

Simplified Container Configuration Management

Docker Swarm also simplifies container management and deployment. It provides a unified platform for simultaneously managing multiple containers.

As a result, admins can easily coordinate different deployments within an application or among multiple applications. In addition, Docker Swarm can streamline processes such as testing, debugging, update rollout, and new services deployment.

With the simple and unified interface, developers can change the configuration from a single location without having to go to each container and update the settings manually.

Security Benefits

Finally, Docker Swarm provides several layers of security. Nodes authenticate each other and encrypt their management traffic with mutual TLS, and the certificates they use are rotated automatically.

Additional security measures include encrypted storage and delivery of secrets, which are only made available to the services that have been granted access to them. Capabilities such as malware scanning, real-time risk analysis, and user activity logging are not part of Swarm itself and require separate tooling.

The 10 Key Concepts You Need To Know About Docker Swarm Mode

Docker Swarm Mode is the native clustering engine for the Docker platform. It provides an easy way to create and scale your Docker applications.

You need to know the following ten key concepts in Docker Swarm Mode so that you can effectively use it for container orchestration and management.

Concept # 1: Clusters

Creating clusters is a core concept in Swarm Mode. A cluster is a group of machines (nodes) running on one or more physical machines or cloud providers.

Regardless of the deployment configuration, all nodes in a cluster work together as one logical unit. A cluster can range from a single node to thousands of nodes, depending on the workload you need to run.

Concept # 2: Orchestration

Orchestration involves managing the services (such as scheduling and deployment) associated with an application over a cluster. The process can involve working with internal and external components, including databases, web servers and other containers that need to be active for the applications to function properly.

Concept # 3: Services

Services run user-defined applications within the cluster. A service defines which image to run, how many replicas (tasks) to keep running, and requirements such as memory and CPU limits and network settings. Swarm then spins up the individual containers needed to match that definition.
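As a rough illustration of a service definition with resource requirements, the sketch below wraps one `docker service create` call in a function. The function name, the service name web, the nginx:alpine image, and the specific limits are assumptions chosen for the example.

```shell
# Hypothetical helper: create a replicated service with CPU and memory limits.
# Run it on a manager node.
create_web_service() {
  sudo docker service create \
    --name web \
    --replicas 3 \
    --limit-cpu 0.5 \
    --limit-memory 128M \
    --publish published=8080,target=80 \
    nginx:alpine
}
```

Calling `create_web_service` asks Swarm to keep three tasks running, each capped at half a CPU and 128 MB of memory, and to publish the service on port 8080 of the swarm nodes.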

Concept # 4: Load Balancing

Load balancing is enabled in Docker Swarm Mode by default. The scheduler spreads a service’s tasks across the nodes in a cluster, and the ingress routing mesh distributes incoming requests on published ports among those tasks.

Concept # 5: Labels

Labels are key-value tags that group nodes for purposes such as targeting service placement or configuration updates. Labels also tell the scheduler what hardware and cloud resources each node has (or lacks), so services can be constrained to suitable nodes.
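One way labels are commonly used is to pin a service to nodes with a given property. The sketch below assumes a label called storage=ssd, a service called cache, and the redis:alpine image. All three, like the function name, are made up for illustration.

```shell
# Hypothetical helper: label a node, then constrain a service to labeled nodes.
# $1 is the hostname or ID of the node to label (as shown by "docker node ls").
label_node_and_pin_service() {
  node="$1"
  # Attach the label storage=ssd to the node.
  sudo docker node update --label-add storage=ssd "$node"
  # Only nodes carrying that label are eligible to run the service's tasks.
  sudo docker service create \
    --name cache \
    --constraint 'node.labels.storage==ssd' \
    redis:alpine
}
```

Both commands need to be run on a manager node, for example as `label_node_and_pin_service worker-1`.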

Concept # 6: Roles

Roles are assigned to nodes so that the system can assign them specific functions. For instance, manager nodes maintain the cluster state and schedule work, while worker nodes run the containers assigned to them.
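A node’s role is not fixed: a worker can be promoted to manager and a manager demoted to worker. The wrapper below is a minimal sketch around the `docker node promote` and `docker node demote` commands. The function name and its argument order are assumptions.

```shell
# Hypothetical helper: change a node's role from a manager node.
# $1 is "promote" or "demote", $2 is the node's hostname or ID.
change_node_role() {
  case "$1" in
    promote|demote)
      sudo docker node "$1" "$2"
      ;;
    *)
      echo "usage: change_node_role promote|demote NODE" >&2
      return 1
      ;;
  esac
}
```

For example, `change_node_role promote worker-1` would turn the node named worker-1 into a manager.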

Concept # 7: Secrets & Configs

Secrets & configs are often used inside swarm mode environments to separate certain variables from the container environment variables. This ensures additional secure storage for sensitive information, such as passwords and credentials.
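The sketch below shows one possible way to hand a password to a service as a secret instead of a plain environment variable. The secret name db_password, the service name db, and the postgres:16 image are assumptions for the example, and the function name is hypothetical. Swarm mounts the secret inside the container under /run/secrets/.

```shell
# Hypothetical helper: store a password as a swarm secret and attach it to a service.
# $1 is the path to a local file that contains the password.
create_db_with_secret() {
  secret_file="$1"
  # Store the file's content in the swarm as a secret named db_password.
  sudo docker secret create db_password "$secret_file"
  # The secret appears inside the container as the file /run/secrets/db_password.
  sudo docker service create \
    --name db \
    --secret db_password \
    --env POSTGRES_PASSWORD_FILE=/run/secrets/db_password \
    postgres:16
}
```

The only environment variable here holds a file path, so the password itself does not show up in the service definition.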

Concept # 8: Traffic Splitting

An interesting Docker Swarm feature is the ability to split traffic among different containers. This distributes user requests across the containers (tasks) of a service for better performance and faster response.

Traffic is distributed by Swarm’s built-in load balancing rather than by user-defined weights. Each service gets a virtual IP, and connections to it are spread across the service’s running tasks. When a user request reaches any node on a published port, the routing mesh forwards it to one of those tasks, even if that task runs on a different node. Weighted splitting, such as sending a fixed share of traffic to a new version, needs an external load balancer or reverse proxy.

Concept # 9: Swarms & Stacks

Swarms and Stacks are two powerful features of Docker Swarm that allow users to easily scale their applications and services.

Swarm mode allows users to deploy multiple containers across multiple nodes and scale them up or down as needed. The Swarm manager is responsible for scheduling and managing the containers across the nodes. This makes running, scaling, and managing multiple services and associated resources easier and much more efficient.

Stack is a feature that allows users to define their application’s environment in a single file, known as a “stack file”, which uses the Compose file format. This stack file contains all the necessary information for deploying an application or service, including its configuration and environment variables. This simplifies the deployment of applications and services, as all necessary information is contained in a single file.
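A minimal sketch of a stack file and its deployment might look like the following. The file content, the service name web, the replica count, and the function names are assumptions for illustration. The deploy step uses `docker stack deploy` with the `-c` option to point at the file.

```shell
# Hypothetical helper: write a small stack file to the path given as $1.
write_stack_file() {
  cat > "$1" <<'EOF'
version: "3.8"
services:
  web:
    image: httpd:latest
    ports:
      - "80:80"
    deploy:
      replicas: 2
EOF
}

# Hypothetical helper: deploy the stack file $1 under the stack name $2.
# Run it on a manager node.
deploy_stack() {
  sudo docker stack deploy -c "$1" "$2"
}
```

A typical sequence would be `write_stack_file stack.yml` followed by `deploy_stack stack.yml demo`, after which `sudo docker stack services demo` lists what the stack created.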

Concept # 10: Scalability

Swarm provides an easy way to scale the number of containers running in a cluster. This allows organizations to quickly adjust application performance.

Swarm also makes it easy to roll out new features and updates. With a single command, users can update a service, and Swarm replaces its tasks across the cluster in a rolling fashion. As a result, developers can rapidly push updates without manually updating each node. If an update misbehaves, the service can be rolled back to its previous definition.
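The scaling and update operations described here map onto a handful of `docker service` subcommands. The wrappers below are a sketch only. The function names are hypothetical, and the parallelism and delay values are arbitrary examples rather than recommendations.

```shell
# Hypothetical helpers, meant to be run on a manager node.

# Set the number of replicas: scale_service SERVICE COUNT
scale_service() {
  sudo docker service scale "$1=$2"
}

# Roll out a new image one task at a time, pausing 10s between tasks:
# update_service_image SERVICE IMAGE
update_service_image() {
  sudo docker service update \
    --image "$2" \
    --update-parallelism 1 \
    --update-delay 10s \
    "$1"
}

# Revert a service to its previous definition: rollback_service SERVICE
rollback_service() {
  sudo docker service rollback "$1"
}
```

For example, `scale_service web 5` would ask Swarm to run five replicas of a service named web.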

Set Up Your First Docker Swarm Demo

Setting up your first Docker Swarm Demo is a great way to understand and demonstrate the power of containerized applications. Creating a successful swarm requires skill, knowledge, and patience. So, although it may seem daunting at first, you can ease into the process by following these steps to set up your first Docker Swarm Demo.

Basic Prerequisites for Setting Up Docker Swarm

The three essential prerequisites for setting up Docker Swarm are:

- A Linux machine to host the primary node and a second Linux machine for a secondary node. For this demo, we’ll use Ubuntu 22.04 for both nodes.

- Docker is installed on all nodes of the Swarm, with a connection between all machines (usually in the form of the same local network).

- Appropriate firewall settings and authentication credentials are configured on each node, so that the nodes can reach each other on the ports Swarm uses.

These prerequisites are important for establishing and managing multiple distributed containers across various nodes using Docker Swarm.
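For the firewall prerequisite, Swarm nodes need to reach each other on a small set of ports. The sketch below assumes the nodes use ufw, Ubuntu’s default firewall front end. The function name is hypothetical, and you may want to restrict the rules to your private network rather than open the ports to everyone.

```shell
# Hypothetical helper: open the ports Swarm uses between nodes (assumes ufw).
open_swarm_ports() {
  sudo ufw allow 2377/tcp   # cluster management traffic to manager nodes
  sudo ufw allow 7946/tcp   # communication among nodes
  sudo ufw allow 7946/udp   # communication among nodes
  sudo ufw allow 4789/udp   # overlay network traffic
}
```

Running `open_swarm_ports` on every node before creating the swarm avoids join attempts that time out because of blocked ports.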

Let’s start the demonstration.

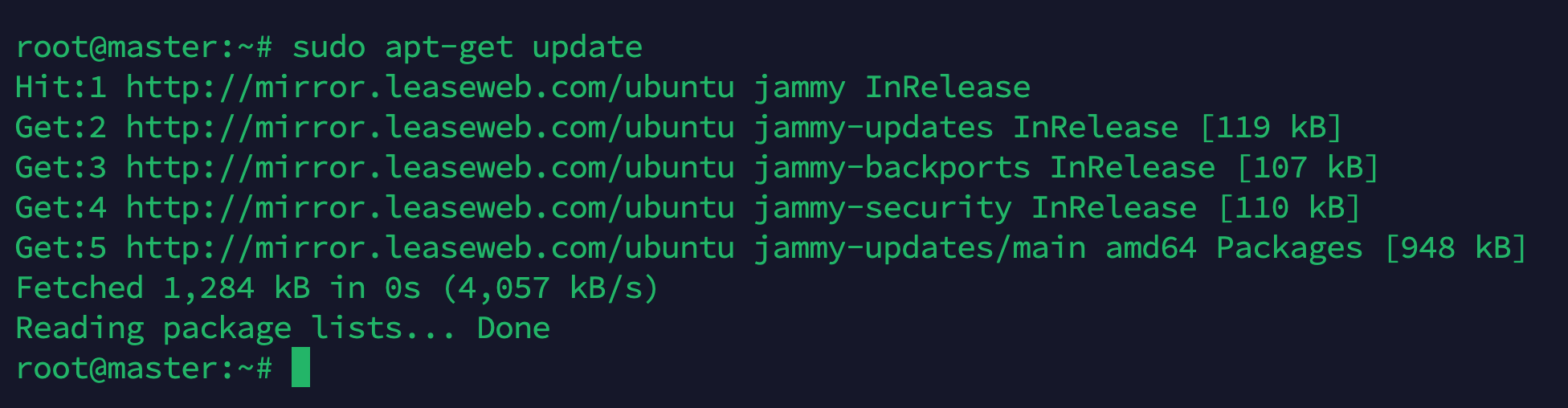

Step # 1: Staying on Top of the Latest Software Updates

The Ubuntu `apt-get update` command refreshes the package index from the configured repositories so that later installs pick up the latest available versions. It does not install anything itself; installing updated packages is a separate step (`apt-get upgrade`).

So, you should start the demo with the following command:

sudo apt-get update

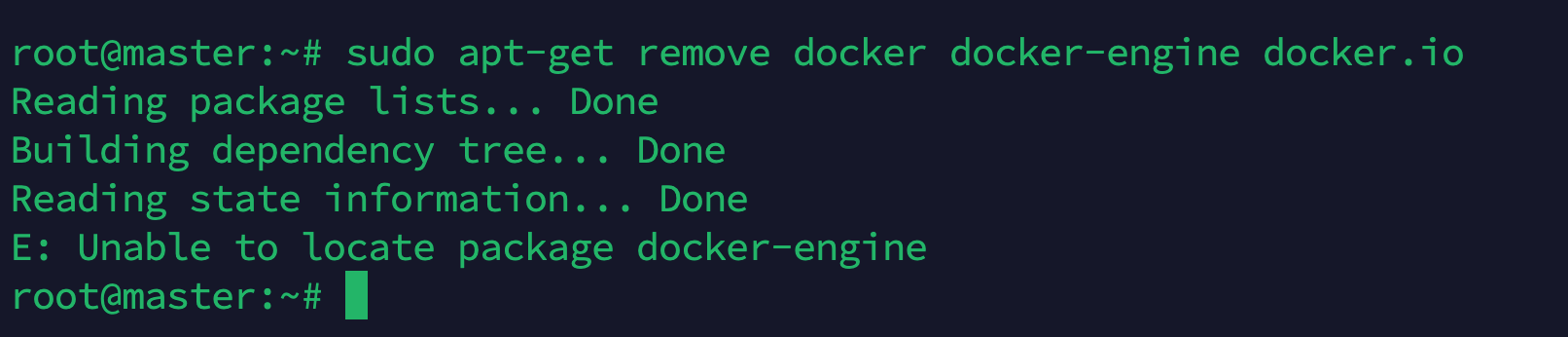

Step # 2: Uninstall Old Docker Version(s)

Uninstalling old versions of Docker is a relatively straightforward process.

First, back up any configuration files you want to keep before uninstalling Docker. You can then use a package manager (such as APT) on Linux systems to remove unwanted versions.

Manually removing all associated files is also possible, but this should only be done if absolutely necessary.

You can run the following command to remove the old Docker version.

sudo apt-get remove docker docker-engine docker.io

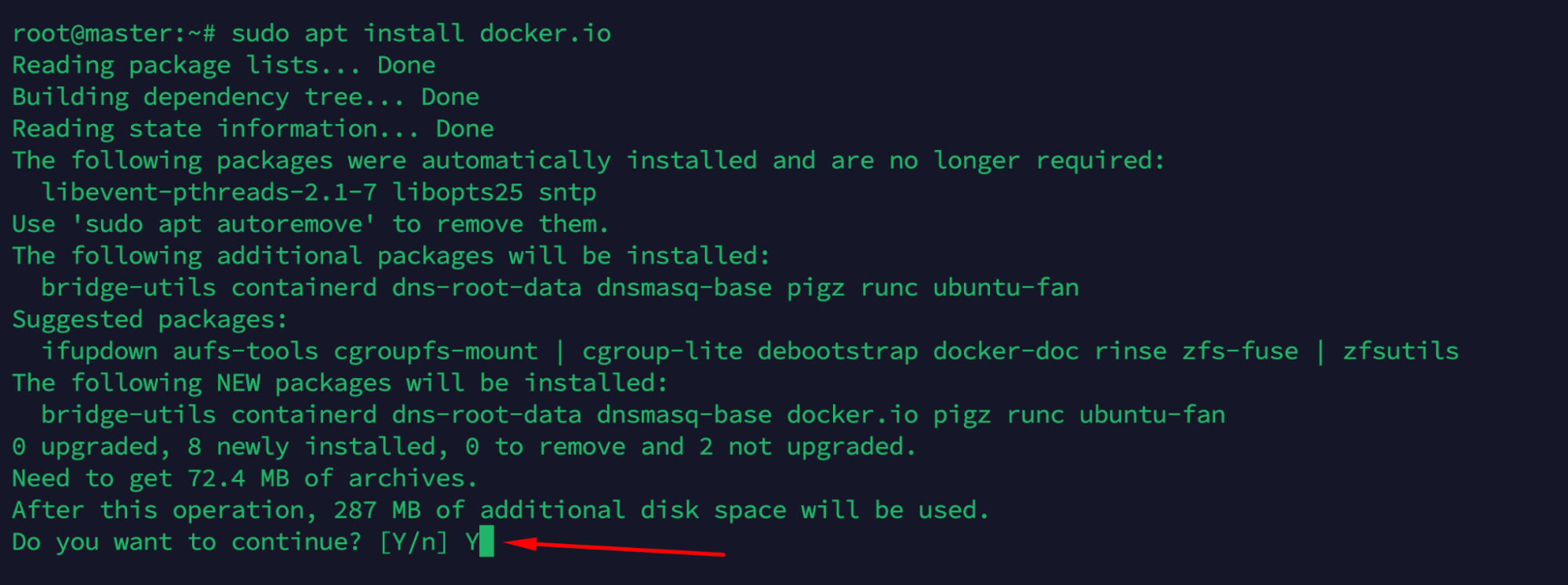

Step # 3: Installing Docker in Minutes From the Ubuntu Repositories

After uninstalling the old Docker version, you need to install a recent version. Ubuntu’s docker.io package keeps the process simple.

Once the installation finishes, you’ll have access to all Docker features you need to start building, running, and managing containerized applications.

Run the following command to install Docker on Ubuntu 22.04:

sudo apt install docker.io

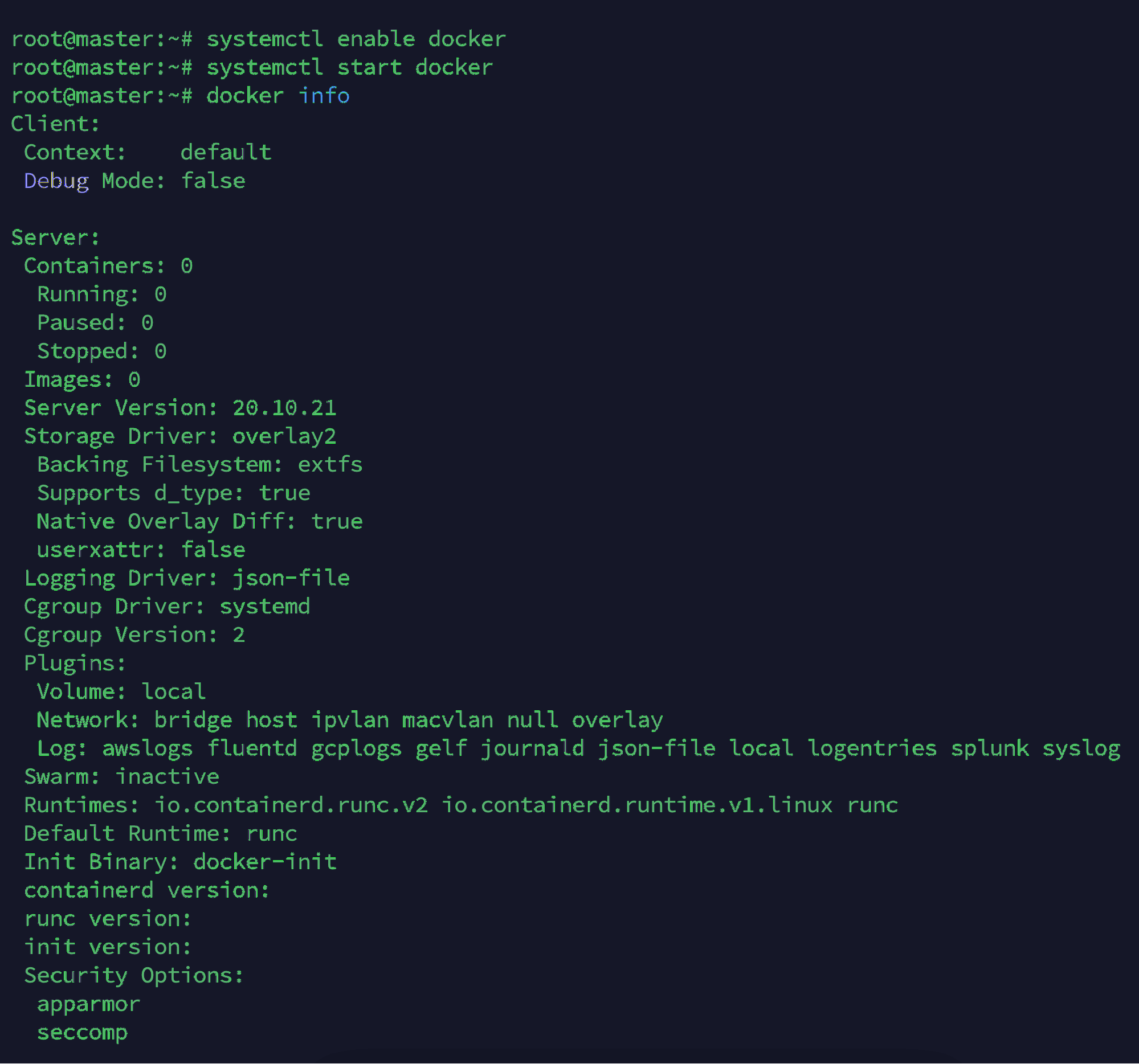

Step # 4: Enable and Start the Docker Service on Nodes

You need to run the ‘systemctl enable docker’ command to enable the Docker service on your nodes. This adds the Docker daemon to the system startup so that it starts automatically after a reboot.

Next, execute the ‘systemctl start docker’ command to start the Docker service right away. Once the daemon is running, it is ready to accept commands.

Finally, use the ‘docker info’ command to view the status of the Docker service. This will show information about active containers, disk usage, and other statistics about your installation.

sudo systemctl enable docker

sudo systemctl start docker

sudo docker info

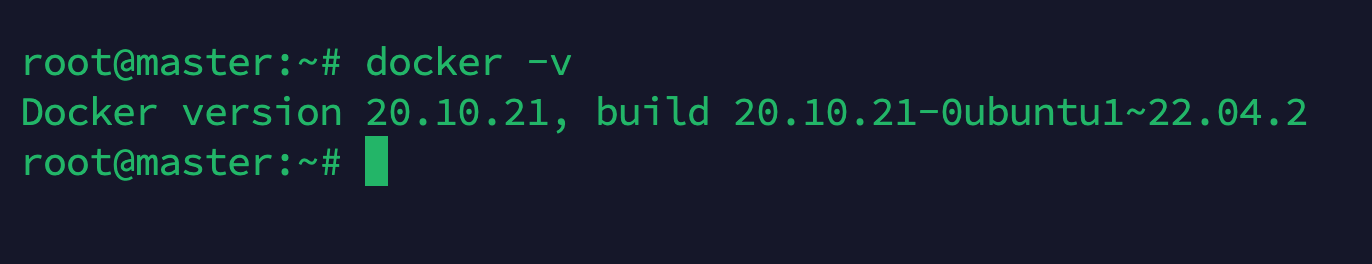

Step # 5: Check the Active Docker Version

Checking the version of Docker you are using is simple, thanks to the “docker -v” command. It prints the installed version and build; it does not check whether updates are available.

docker -v

Step # 6: Create and Run Your First Docker Container

To create and run your first Docker container, open the terminal and use the ‘docker run’ command. It creates a new container from an image (pulling the image first if it is not available locally) and starts it in one step.

If the container is stopped later, you can use the ‘docker start’ command to start it again.

Finally, Docker provides helpful commands like ‘docker logs’ and ‘docker stats’ for getting detailed information about what is happening inside the active containers.

Let’s run an Apache HTTP Server container.

sudo docker pull httpd

sudo docker run -d -p0.0.0.0:80:80 httpd:latest

sudo docker run -d -p0.0.0.0:80:80 httpd:latest creates an Apache HTTP Server container and runs it in the background (-d). It uses the latest httpd image. The -p option maps port 80 on the host machine to port 80 inside the container, and binding to 0.0.0.0 makes the host listen on all of its network interfaces, so you can reach the web server by visiting the host’s domain name or IP address in a web browser.

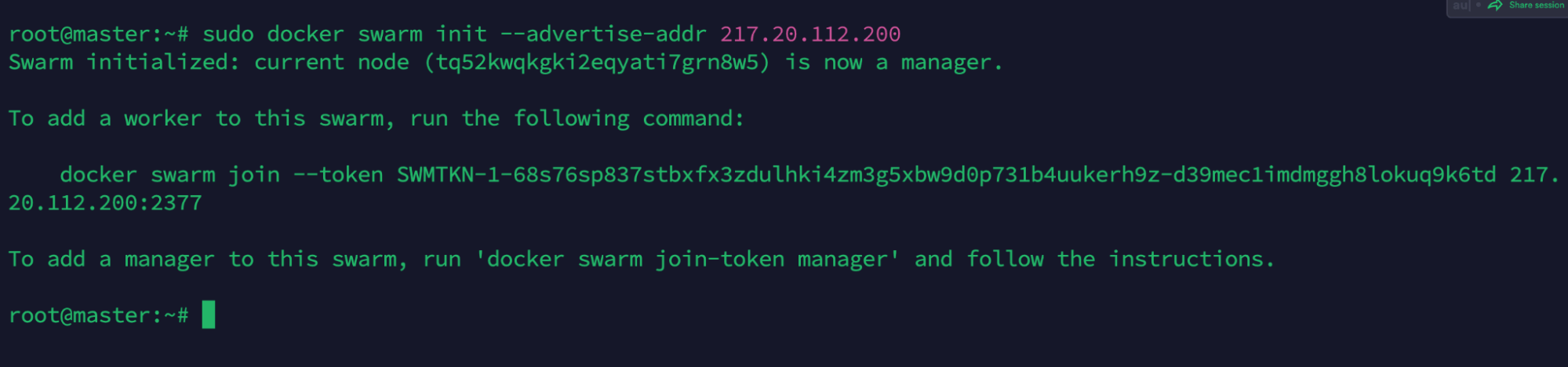

Step # 7: Create Your First Swarm Cluster on Docker

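To create a swarm cluster, Docker must be installed and running on every machine that will join, and the machines must be able to reach each other over the network.

Next, you can use the ‘docker swarm init’ command on the machine that will act as the first manager node. The --advertise-addr option sets the address that other nodes use to reach this manager.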

sudo docker swarm init --advertise-addr 217.20.112.200

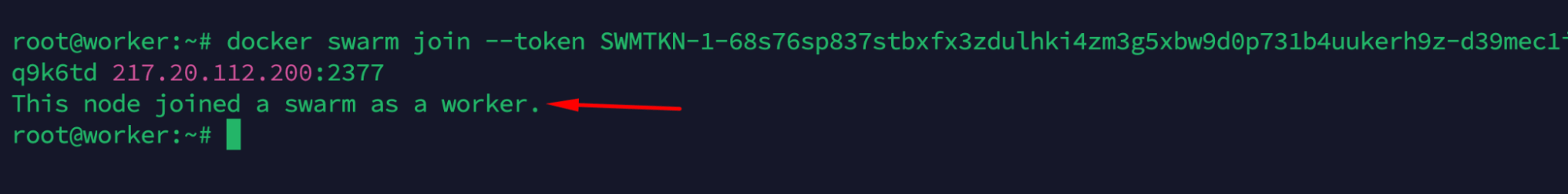

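After this is done, you can add additional nodes through the ‘docker swarm join’ command. The output of ‘docker swarm init’ includes a ready-made join command with a token; run it on each machine you want to add as a worker.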

Once all nodes are connected, your cluster will be active and ready to host containers with your applications and services.

Finally, consider using tools such as compose files or stacks to manage the services in your cluster in an organized manner.

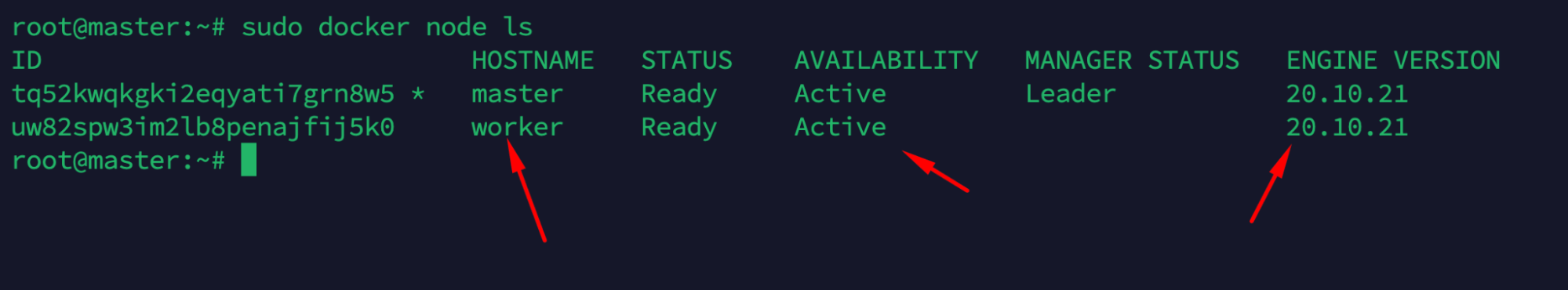
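If the join command from the init output is no longer on screen, it can be printed again on the manager. The sketch below wraps the two relevant commands. The function names are hypothetical, the token is whatever your own manager prints, and 2377 is the default port managers listen on.

```shell
# Hypothetical helper: on the manager, print the full join command for workers.
print_worker_join_command() {
  sudo docker swarm join-token worker
}

# Hypothetical helper: on the new node, join the swarm.
# $1 is the token printed by the manager, $2 is the manager's address.
join_swarm_as_worker() {
  sudo docker swarm join --token "$1" "$2:2377"
}
```

With the manager address used in this demo, the second call would look like `join_swarm_as_worker <token> 217.20.112.200`, where `<token>` stands for the value printed by your manager.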

Step # 8: Quickly List All the Workers Within a Docker Swarm

To quickly list the nodes within a Docker Swarm, you can run the `docker node ls` command on a manager node. It displays each node’s ID, hostname, status, and availability.

The output covers manager and worker nodes alike. The MANAGER STATUS column shows which manager is the leader and whether the other managers are reachable.

sudo docker node ls

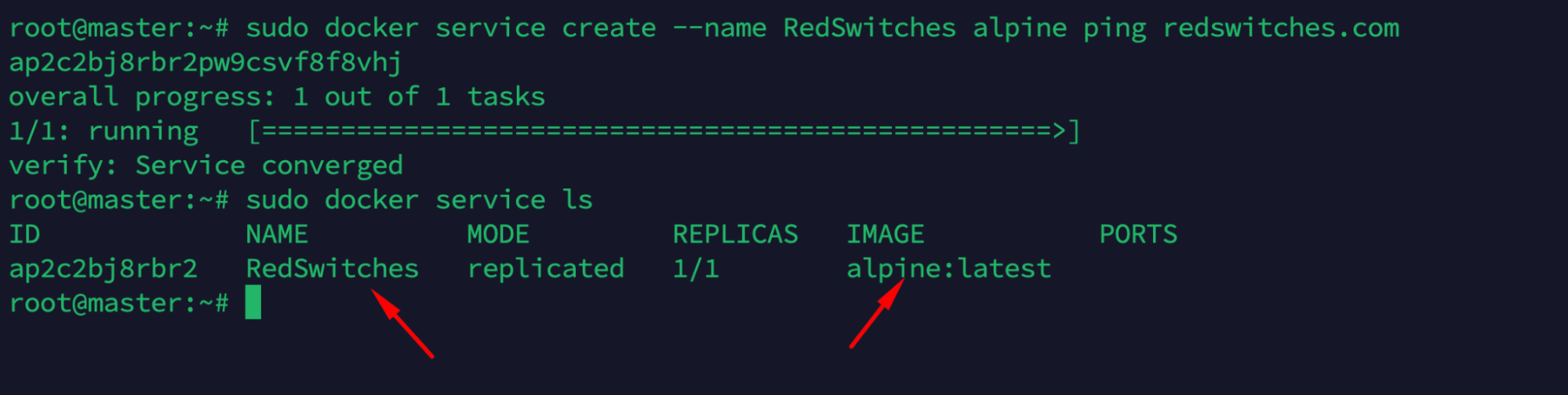

Step # 9: Deploy Your Service on Docker Swarm

Docker Swarm is a great option for deploying services because it automates the process of deploying applications on multiple nodes in a distributed system. With Docker Swarm, you can create and manage clusters of servers with ease.

Let’s go to the manager node and run the below command to deploy a service:

sudo docker service create --name RedSwitches alpine ping redswitches.com

The above command creates a Docker service named RedSwitches from the alpine image and runs ping against redswitches.com inside its container, which gives the service a simple long-running task.

The command talks to the Docker daemon, so it needs to be run with sudo or by a user in the docker group.

Alpine is a minimal Linux image, so it downloads quickly and keeps the service’s footprint small. Once created, the service can be managed through several other commands issued in the terminal in order to customize it further or monitor its performance.

Step # 10: Identify What’s Running on Your Docker Swarm Cluster

Determining what’s currently running on a Docker Swarm cluster requires a few simple steps.

First, users should run the “docker service ls” command on a manager node to receive an overview of all services in the cluster. This will provide details such as each service’s name, mode, replica count, image, and published ports.

To see the individual tasks of a service and the nodes they run on, users can run “docker service ps” followed by the service name. Note that “docker container ls” only lists the containers on the node where it is run, not on the whole cluster.

sudo docker service ls
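To look inside a single service rather than the whole cluster, the following sketch wraps two further `docker service` subcommands. The function names are hypothetical, and the 20-line tail is an arbitrary choice.

```shell
# Hypothetical helpers, meant to be run on a manager node.

# List the tasks of a service and the nodes they run on.
show_service_tasks() {
  sudo docker service ps "$1"
}

# Show the last 20 log lines produced by a service's tasks.
show_service_logs() {
  sudo docker service logs --tail 20 "$1"
}
```

For the service created in this demo, `show_service_tasks RedSwitches` lists its tasks, and `show_service_logs RedSwitches` should show the recent ping output.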

This concludes the demonstration.

Concluding a Docker Swarm?

Docker Swarm is a handy tool for managing and orchestrating containers across a cluster. It offers scalability, straightforward resource allocation, and easy deployment. Furthermore, its wide array of features and open-source codebase make it customizable enough to meet a wide range of requirements. As such, Docker Swarm remains a powerful solution for container orchestration.

FAQs

Q- Is Docker Swarm the same as Kubernetes?

→ No, Docker Swarm is not the same as Kubernetes. While both Docker Swarm and Kubernetes are popular container orchestration tools, they have some important differences.

Kubernetes is a full-fledged container orchestration platform that offers advanced features such as self-healing, auto-scaling, and high availability. Additionally, it supports workloads on various cloud providers, including AWS, Azure, GCP, and more.

Meanwhile, Docker Swarm is a container clustering and scheduling tool designed to manage clusters of physical or virtual Docker hosts. It provides orchestration capabilities such as service discovery, scaling, rolling updates, and rescheduling of failed tasks. Unlike Kubernetes, it has no built-in auto-scaling, and its feature set and ecosystem are smaller.

Q- Does Docker Swarm come with Docker?

→ Yes. Swarm mode has been built into Docker Engine since version 1.12, so there is nothing extra to install; you enable it by running docker swarm init. Docker Swarm is a container orchestration platform built on top of the Docker engine and can be used to manage and scale containerized applications across multiple nodes. It provides native clustering capabilities and allows you to deploy and manage containers across a cluster of machines. It allows you to use the features of the Docker engine, like networking, storage, and security. It also provides a set of APIs and command line tools that allow you to monitor and control the swarm.

Q- Where is Docker Swarm used?

→ Docker Swarm can be used in both cloud-based and on-premises environments for application scaling and deployment automation.